Clear Purpose and Objectives

The primary objective of this project is to automate the evaluation of a candidate’s public GitHub profile against a job description (JD) using a secure, robust, and explainable multi-agent system. The system aims to streamline technical hiring by providing objective, reproducible, and scalable candidate assessments.

Target Audience Definition

- Technical recruiters and hiring managers

- Engineering and AI/ML teams

- HR technology solution providers

- Researchers in AI-driven talent evaluation

Problem Definition

Manual screening of open-source contributions is time-consuming, subjective, and error-prone. There is a need for an automated, transparent, and secure solution to match candidate skills and activity with job requirements.

Current State Gap Identification

- Lack of automation in JD-to-GitHub profile matching

- Insufficient security and validation in existing tools

- Limited explainability and reproducibility in candidate evaluation

- Absence of robust error handling and monitoring in demo systems

Context Establishment

This work builds on recent advances in multi-agent orchestration, LLM integration, and secure AI system design. It addresses the need for production-grade, enterprise-ready solutions in technical hiring.

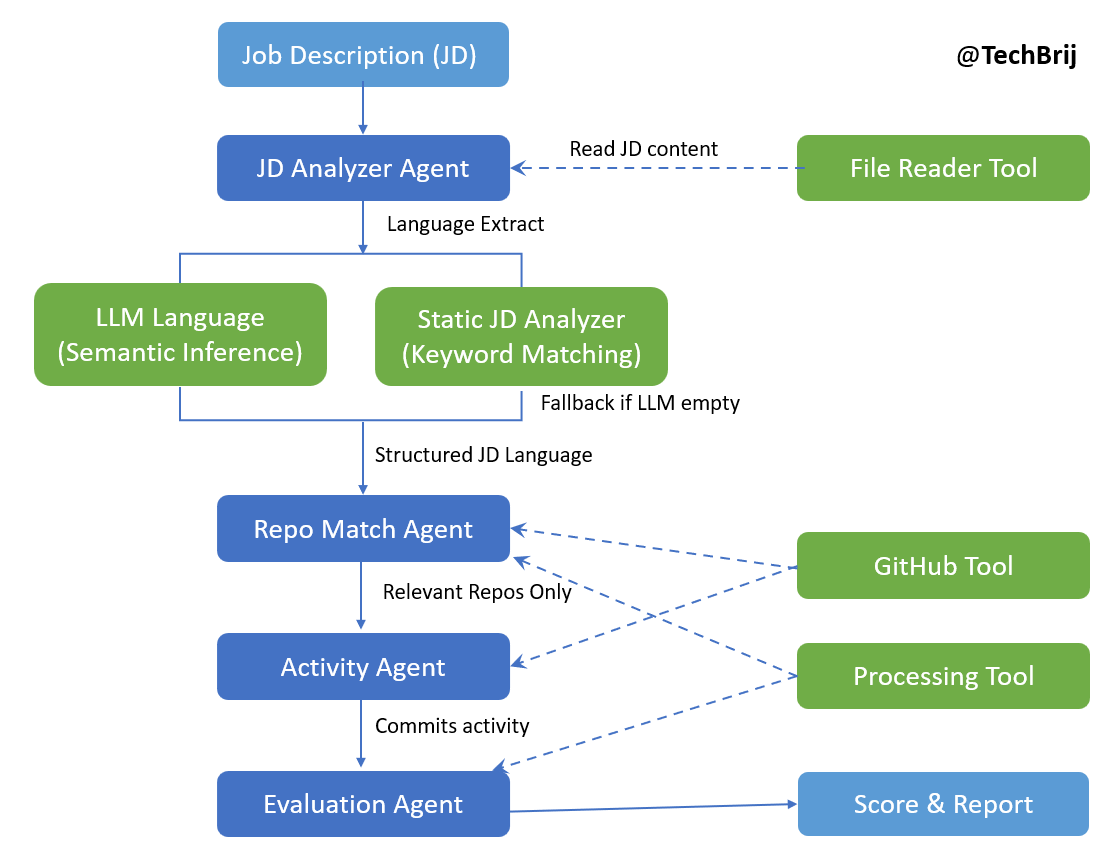

Architecture

The system is composed of four main agents, each responsible for a distinct stage in the evaluation pipeline:

| Agent Name | Role & Functionality |
|---|---|
| JD Analyzer Agent | Extracts programming languages and skills from the job description using LLM with static analysis fallback. |
| Repo Match Agent | Matches candidate's GitHub repositories to required skills. |
| Activity Agent | Analyzes commit activity in relevant repositories over the past year. |
| Evaluation Agent | Scores the match and generates a human-readable evaluation report. |
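The JD Analyzer's static fallback could be as simple as keyword matching when the LLM call is unavailable. The following is a minimal sketch under that assumption; the vocabulary `KNOWN_LANGUAGES` and the function `extract_languages_static` are hypothetical names for illustration, not taken from the project's source.

```python
import re

# Hypothetical vocabulary; the project's actual skill list is not shown here.
KNOWN_LANGUAGES = ["Python", "JavaScript", "TypeScript", "Java", "Go", "Rust", "C++", "C#"]


def extract_languages_static(jd_text: str) -> list[str]:
    """Return the known languages mentioned in the JD, in vocabulary order."""
    found = []
    for lang in KNOWN_LANGUAGES:
        # Lookarounds instead of \b so names ending in '+' or '#' still match,
        # and so "Java" does not match inside "JavaScript".
        pattern = r"(?<![A-Za-z0-9])" + re.escape(lang) + r"(?![A-Za-z0-9+#])"
        if re.search(pattern, jd_text, flags=re.IGNORECASE):
            found.append(lang)
    return found
```

Case-insensitive matching keeps the sketch short but can produce false positives for short names such as "Go" in ordinary prose, which is one reason an LLM pass is the primary path and keyword matching only the fallback.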

Key Tools:

- SafetyValidator: Detects toxicity and prompt injection in JD

- Retry Utility: Robust retry with exponential backoff for all network calls

- Logger: Centralized, structured logging for compliance and debugging

Workflow:

- User submits JD and GitHub username (UI/CLI)

- Inputs validated and sanitized

- Multi-agent workflow orchestrated (LangGraph)

- Results validated and presented to user
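The orchestration step can be pictured as a shared state passed through the four agents in order. The sketch below uses plain Python as a stand-in for LangGraph, and every agent is a placeholder with stub output; the function names, state keys, and values are assumptions made for illustration only.

```python
from typing import Callable, Dict, List

State = Dict[str, object]
Step = Callable[[State], State]


def run_pipeline(state: State, steps: List[Step]) -> State:
    """Pass the shared state through each step in order."""
    for step in steps:
        state = step(state)
    return state


# Placeholder agents with stub outputs; real agents would call the LLM and GitHub API.
def jd_analyzer(state: State) -> State:
    return {**state, "skills": ["python"]}


def repo_match(state: State) -> State:
    return {**state, "matched_repos": ["example-repo"]}


def activity(state: State) -> State:
    return {**state, "commits_last_year": 0}


def evaluation(state: State) -> State:
    return {**state, "score": 0.0, "report": "stub report"}


PIPELINE: List[Step] = [jd_analyzer, repo_match, activity, evaluation]
```

Calling `run_pipeline({"jd": "...", "username": "octocat"}, PIPELINE)` returns a state that carries the original inputs plus each agent's contribution, which is the same shape of data flow a graph-based orchestrator manages.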

Clear Prerequisites and Requirements

- Python 3.9+

- Internet access for GitHub API and LLM

- Groq API key (for LLM-based JD analysis)

- Docker (optional, for containerized deployment)

- Streamlit (for web UI)

Tools, Frameworks, & Services

- LangGraph: Multi-agent orchestration

- Streamlit: Web user interface

- Detoxify: Toxicity detection in JD

- Pytest: Testing framework

- Docker: Containerization

- GitHub API: Data source

- Groq LLM: Language model integration

Module 3 Production Improvements

User Interface

- Web App: [NEW]

  - Built with Streamlit for intuitive, guided workflows

  - Input: Job description (editable), GitHub username

  - Output: Score, match level, detailed report

  - Error handling and user guidance throughout

- CLI:

  - Interactive prompts for JD and GitHub username

  - Prints evaluation report to console

Safety & Security

- Input validation (GitHub username, JD)

- Output filtering and type/range checks

- Prompt injection and toxicity checks

- Error handling

Example

- GitHub Username:

  - Regex and API existence check

  - Error message for invalid/blocked usernames

- Job Description:

  - Detoxify model for toxicity

  - Prompt injection keyword blacklist

  - Length and content checks

- Output:

  - Score must be float in [0,1]

  - Report must be non-empty string

- All failures logged and surfaced to user
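The checks listed above can be sketched with the standard library alone. This is an illustration under assumptions: the function names and the phrases in `INJECTION_PHRASES` are hypothetical, the regex encodes GitHub's published username rules (1 to 39 characters, alphanumerics or single hyphens, no leading or trailing hyphen), and the API existence check and Detoxify scoring are left out.

```python
import re

# GitHub usernames: 1-39 chars, alphanumerics or single hyphens,
# no leading or trailing hyphen.
USERNAME_RE = re.compile(r"[A-Za-z0-9](?:[A-Za-z0-9]|-(?=[A-Za-z0-9])){0,38}")

# Hypothetical blacklist; the project's actual phrases are not listed here.
INJECTION_PHRASES = (
    "ignore previous instructions",
    "disregard the above",
    "system prompt",
)


def is_valid_username(username: str) -> bool:
    """Syntactic check only; it does not confirm the account exists."""
    return USERNAME_RE.fullmatch(username) is not None


def has_injection_phrase(jd_text: str) -> bool:
    """Case-insensitive substring match against the blacklist."""
    lowered = jd_text.lower()
    return any(phrase in lowered for phrase in INJECTION_PHRASES)


def validate_output(score: object, report: object) -> None:
    """Raise ValueError unless score is a float in [0, 1] and report is non-empty."""
    if not isinstance(score, float) or not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be a float in [0, 1], got {score!r}")
    if not isinstance(report, str) or not report.strip():
        raise ValueError("report must be a non-empty string")
```

A keyword blacklist is easy to audit but also easy to evade with paraphrasing, so it works best as one layer alongside the toxicity model and the length and content checks rather than as the only guard.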

Testing & Quality Assurance

- Comprehensive Test Suite:

  - Unit tests for agents and tools

  - Integration tests for agent workflows

  - Performance tests

- Coverage:

  - Greater than 80% for core functionality

- How to Run: `pytest tests/ -v --cov=src --cov-report=html`

Logging, Monitoring & Resilience

- Centralized logging for all agent/tool actions

- Health monitoring via Streamlit UI

- Easy configuration and environment management

- `with_retry` utility wraps all network/API calls

  - Handles timeouts, connection errors, and rate limits

  - Exponential backoff and max retry limits

  - All retry/failure events logged for traceability
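The retry behaviour described above can be sketched as a decorator. Only the name `with_retry` comes from this document; the signature, default values, and the injectable `sleep` parameter are assumptions for illustration, and rate-limit handling is omitted.

```python
import functools
import logging
import time

logger = logging.getLogger("retry")


def with_retry(max_retries=3, base_delay=1.0,
               exceptions=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Retry the wrapped call, doubling the delay after each failure."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(max_retries + 1):
                try:
                    return func(*args, **kwargs)
                except exceptions as exc:
                    if attempt == max_retries:
                        # Retry budget exhausted: log and re-raise the last error.
                        logger.error("%s failed after %d retries: %s",
                                     func.__name__, max_retries, exc)
                        raise
                    delay = base_delay * (2 ** attempt)
                    logger.warning("%s failed (%s); retry %d/%d in %.1fs",
                                   func.__name__, exc, attempt + 1, max_retries, delay)
                    sleep(delay)
        return wrapper
    return decorator
```

Passing `sleep` as a parameter lets tests record the delays instead of actually waiting, which keeps a retry test suite fast.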

Installation and Usage Instructions

- Clone the repository

- Create and activate a Python virtual environment

- Install dependencies: `pip install -r requirements.txt`

- Configure `.env` with API keys and parameters

- Run the web UI: `streamlit run frontend.py`

- Or run the CLI: `python -m src.app`

- For Docker: build and run as described in the README

  - Build: `docker build -t profile-evaluator .`

  - Run: `docker run -p 8501:8501 --env-file .env profile-evaluator`

Screenshots

Toxic JD error:

Visual Demo

Jump here for the demo.

Scoring & Evaluation Logic

- Stages:

  - Repository Language Match

  - Activity Scoring (last year)

  - Advanced Scoring (stars, issues)

- Aggregation:

  Overall Score = Repo Match Score * 0.5 + Activity Score * 0.3 + Advanced Score * 0.2

- Result Levels:

  - greater than 80%: Excellent

  - 50–80%: Good

  - less than 50%: Partial Match
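The aggregation formula and result levels translate directly into code. In this sketch the function names are hypothetical, each component score is assumed to lie in [0, 1], and the percentage thresholds are assumed to map onto that scale (80% as 0.8, 50% as 0.5), with exactly 80% falling in "Good".

```python
def overall_score(repo_match: float, activity: float, advanced: float) -> float:
    """Weighted sum of the three stage scores, each assumed to be in [0, 1]."""
    return repo_match * 0.5 + activity * 0.3 + advanced * 0.2


def match_level(score: float) -> str:
    """Map an overall score in [0, 1] to the result level."""
    if score > 0.8:
        return "Excellent"
    if score >= 0.5:
        return "Good"
    return "Partial Match"
```

For example, a candidate with a repo match score of 1.0, an activity score of 0.5, and an advanced score of 0.0 gets 0.5 + 0.15 + 0.0 = 0.65, which falls in the "Good" band.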

Troubleshooting & FAQ

- Common Issues:

  - API key/configuration errors: Check `.env` and logs

  - Low coverage: Run `pytest` and review reports

  - UI not loading: Ensure dependencies and port availability

- Support:

  - GitHub Issues for bug reports and feature requests

Significance and Implications of Work

This project demonstrates how to move from a research demo to a production-ready, secure, and explainable AI system for technical hiring. It sets a new standard for automation, safety, and transparency in candidate evaluation.

Features and Benefits Analysis

- Automated, objective JD-to-GitHub profile matching

- Multi-agent, extensible architecture

- Secure input/output validation and toxicity detection

- Robust error handling and monitoring

- User-friendly web and CLI interfaces

- High test coverage and reproducibility

Competitive Differentiation

- Production-grade security and validation guardrails

- Explainable, human-readable evaluation reports

- Modular, extensible agent and tool design

- Dockerized deployment and health monitoring

Originality of Work

- First open-source, production-ready multi-agent system for JD-driven GitHub evaluation

- Combines LLM, static analysis, and advanced validation in a single workflow

Innovation in Methods/Approaches

- Hybrid LLM + static fallback for skill extraction

- Prompt injection and toxicity detection in all user/LLM inputs

- Exponential backoff retry logic for all network calls

- Output validation and explainability at every step

Advancement of Knowledge or Practice

- Demonstrates best practices for productionizing AI agentic workflows

- Provides a template for secure, explainable, and robust AI system design

Real-World Applications

- Automated technical candidate screening

- AI-driven talent analytics

- Research in explainable AI for HR

- Open-source contribution analysis

Technical Asset Access Links

Performance Characteristics and Requirements

- Handles large GitHub profiles and complex JDs efficiently

- Workflow step timings and throughput tested in integration suite

- Designed for reliability under API/network failures

Maintenance and Support Status

- Actively maintained

- Issues and feature requests via GitHub

- Contributions welcome

Access and Availability Status

- Open-source, public repository

- Docker image build instructions provided

License and Usage Rights of the Technical Asset

- MIT License (see LICENSE file)

Contact Information of Asset Creators

- Author: TechBrij

- Issues: GitHub Issues