This publication falls under the Technical Assets category, specifically the Tool/App/Software type, requiring adherence to all associated Essential criteria.

This project, the NLP RAG Memory Assistant, implements a highly reliable and rigorously grounded Retrieval-Augmented Generation (RAG) system. Developed for the Agentic AI Developer Certification Program (Module 1), the system is engineered to provide context-aware answers that are strictly derived from a dedicated knowledge base of Ready Tensor publications.

The solution leverages the LangChain Expression Language (LCEL) to build a transparent, modular pipeline, integrating the local Ollama LLM (LLaMA-3) with a FAISS vector store. The system's primary design goal is hallucination-free answers, enforced through a strict prompt template that requires a fixed fallback response whenever the retrieved context does not contain the answer.

| Project Metric Alignment (Essential Tier) | Status |
|---|---|
| Project Identity & Purpose | Clearly defined by title and abstract, stating the problem solved (hallucination). |
| Basic UX for Interaction | Delivered via an interactive Streamlit UI. |
| Framework Implementation | Implemented using LangChain (LCEL). |

The system is built upon a standard, yet hardened, RAG architecture optimized for local performance and verification. This detailed breakdown addresses the Technical Quality rubric criteria.

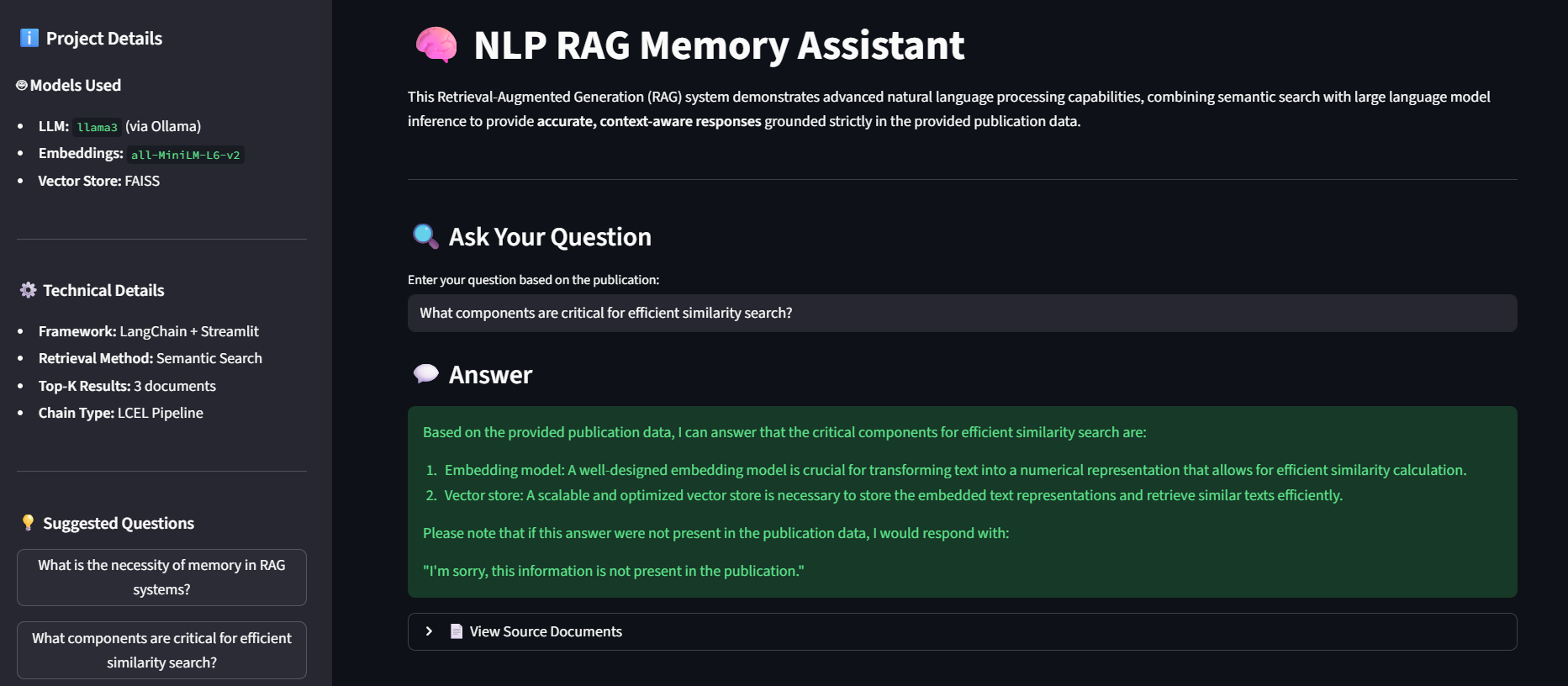

The entire pipeline relies on open-source and local technologies for speed and portability.

| Component | Technology | Role | Essential Metric |
|---|---|---|---|
| LLM Inference | Ollama (llama3) | Generates the final, human-readable answer. | External Tool/Model Used |
| Embedding Model | all-MiniLM-L6-v2 | Converts textual data into 384-dimensional vectors for semantic search. | Methodology and Tool Specified |
| Vector Store | FAISS | Chosen for its efficiency in high-speed similarity search. | Vector Store Used |
| Interface | Streamlit | Provides the user interface and handles session state for interactivity. | Basic UX Provided |
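To make the embedding and vector store roles in the table above concrete, here is a minimal pure-Python sketch of top-k similarity retrieval. It is an illustration only: the project uses FAISS over 384-dimensional embeddings, whereas this sketch uses brute-force cosine similarity over toy 3-dimensional vectors. The function names `cosine_similarity` and `top_k`, the toy data, and the choice of cosine similarity as the metric are all assumptions, since the source does not state which FAISS index or distance metric is used.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec, indexed_chunks, k=3):
    """Return the k chunk texts most similar to query_vec.

    indexed_chunks is a list of (text, vector) pairs. This brute-force scan
    is a stand-in for what a FAISS index does far more efficiently.
    """
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in indexed_chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Hypothetical toy index: real embeddings would have 384 dimensions.
toy_index = [
    ("FAISS provides efficient similarity search.", [0.9, 0.1, 0.0]),
    ("Streamlit renders the chat interface.", [0.0, 0.2, 0.9]),
    ("Embeddings map text to dense vectors.", [0.7, 0.6, 0.1]),
    ("Ollama serves the llama3 model locally.", [0.1, 0.9, 0.3]),
]

results = top_k([1.0, 0.2, 0.0], toy_index, k=3)
for text in results:
    print(text)
```

In this toy example the query vector points mostly along the first axis, so the FAISS chunk ranks first and the Streamlit chunk, which is nearly orthogonal to the query, is left out of the top three.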

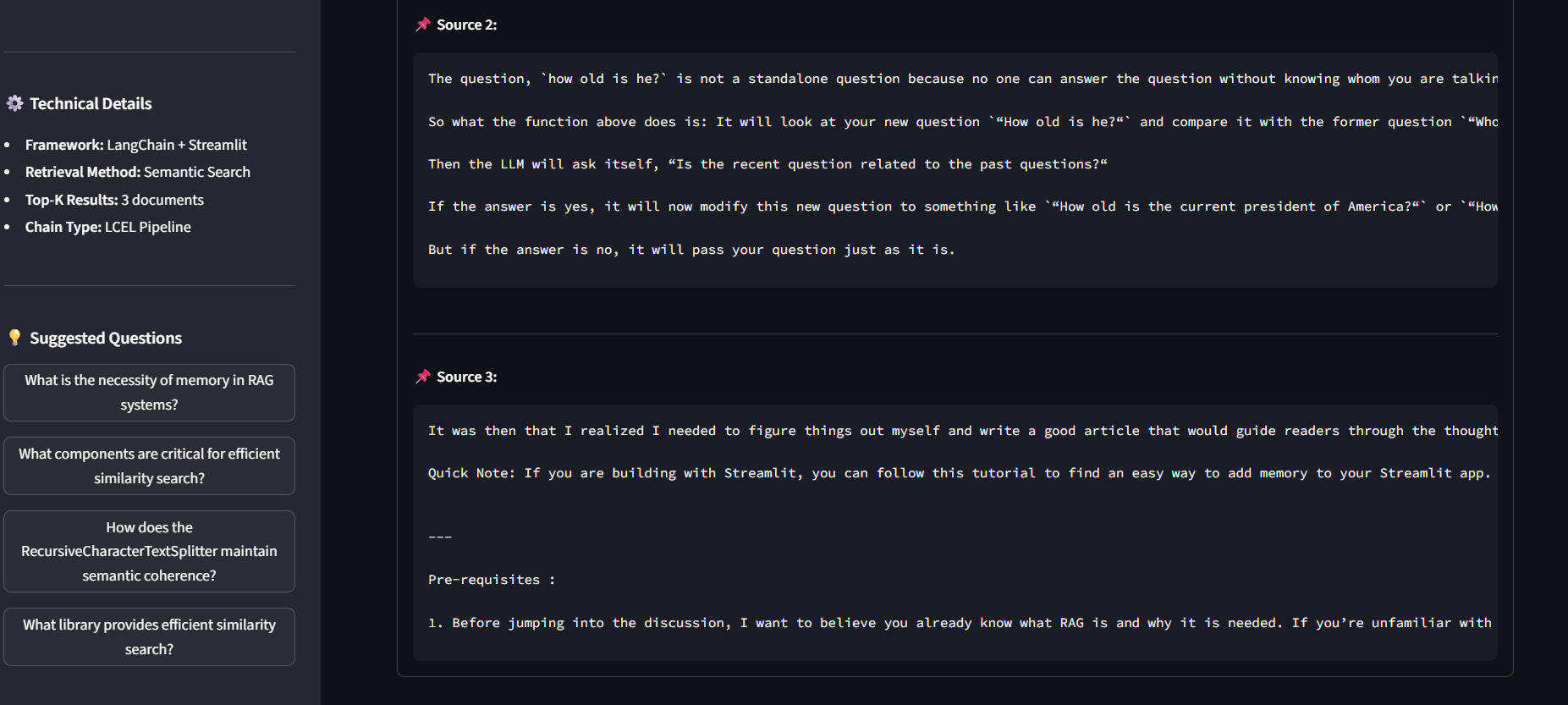

The RAG process is managed through a LangChain Expression Language (LCEL) pipeline, ensuring modularity and traceability:

- Retrieval: the retriever fetches the (`k=3`) most semantically similar text chunks.
- Output parsing: the model's response is converted to a plain string with `StrOutputParser`.

To meet the mandatory non-hallucination requirement, the system enforces a strict instruction set within the prompt template:

Core Constraint: If the answer is not fully contained within the provided context, the model MUST respond with the exact phrase: "I'm sorry, this information is not present in the publication."
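The constraint above can be sketched as a prompt-building helper. This is a hedged illustration, not the project's actual template: only the fallback phrase and the helper name `format_docs` come from the source, while the template wording, the `build_prompt` function, and the assumption that chunks are plain strings (rather than LangChain document objects) are hypothetical.

```python
# Exact fallback phrase quoted in the publication.
FALLBACK_PHRASE = "I'm sorry, this information is not present in the publication."

# Hypothetical template wording; the real template is not shown in the source.
PROMPT_TEMPLATE = """You are an assistant that answers questions about a publication.
Use ONLY the context below. If the answer is not fully contained in the context,
respond with exactly: "{fallback}"

Context:
{context}

Question: {question}
Answer:"""


def format_docs(chunks):
    """Join retrieved chunk texts into one context string (assumes plain strings)."""
    return "\n\n".join(chunks)


def build_prompt(question, chunks):
    """Fill the template with the retrieved context, the question, and the fallback phrase."""
    return PROMPT_TEMPLATE.format(
        fallback=FALLBACK_PHRASE,
        context=format_docs(chunks),
        question=question,
    )


prompt = build_prompt(
    "When was the Eiffel Tower built?",
    ["FAISS provides efficient similarity search.", "Embeddings map text to dense vectors."],
)
print(prompt)
```

Keeping the fallback phrase in a single constant means the prompt and any downstream check compare against the same string. Note that a prompt instruction steers the model but cannot by itself guarantee compliance, which is why the fallback behavior is also verified by testing.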

This section confirms compliance with the Essential criteria for Repository Structure, Environment, and Code Quality.

The project follows a standard, organized structure with clear separation of concerns:

- `src/`: Contains core logic (`app.py` entry point) and the ingestion script (`ingest.py`).
- `data/`: Houses the source data (`memory_publication.json`).
- `vectorstore/`: Stores the generated FAISS index (listed in `.gitignore`).
- `requirements.txt`: Lists all project dependencies.

The environment setup is documented as follows:

- Dependencies are declared in `requirements.txt`, satisfying the requirement for clear dependency identification.
- A virtual environment (`.venv`) is used for isolated, reproducible execution.

The codebase demonstrates basic software engineering practices:

- Logic is organized into functions (`setup_rag_pipeline`, `format_docs`) rather than monolithic scripts.
- Configuration constants (`VECTOR_DB_PATH`, `LLM_MODEL`) are separated from core logic at the top of the files.
- `try/except` blocks are used in critical sections (e.g., vector store loading, LLM invocation) to provide informative feedback via Streamlit.

The application was thoroughly tested against critical RAG scenarios to verify correct operation and reliability.

| Test Scenario | Query Example | Outcome Verified |
|---|---|---|
| Retrieval Success | "What library provides efficient similarity search?" | Answer: Correctly identified FAISS, confirming the RAG chain successfully retrieved and synthesized the correct context. |
| Grounded Answer | "How does RecursiveCharacterTextSplitter maintain coherence?" | Answer: Provided a factual, derived summary from the publication data. |
| Fallback Check | "When was the Eiffel Tower built?" | Answer: Returned the exact phrase: "I'm sorry, this information is not present in the publication." |
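Scenarios like those in the table could be re-run automatically with a small check harness. The sketch below is an assumption about how such checks might look, not the author's test code: `run_checks`, `is_fallback`, and `stub_answer` are hypothetical names, and `stub_answer` is a canned stand-in for invoking the real RAG chain so that the example runs without Ollama or FAISS.

```python
# Exact fallback phrase quoted in the publication.
FALLBACK_PHRASE = "I'm sorry, this information is not present in the publication."


def is_fallback(answer):
    """True only when the answer is exactly the fallback phrase (ignoring outer whitespace)."""
    return answer.strip() == FALLBACK_PHRASE


def run_checks(answer_fn, cases):
    """Run each (query, expect_fallback, keyword) case and return the failing queries."""
    failures = []
    for query, expect_fallback, keyword in cases:
        answer = answer_fn(query)
        if is_fallback(answer) != expect_fallback:
            failures.append(query)
        elif keyword is not None and keyword.lower() not in answer.lower():
            failures.append(query)
    return failures


def stub_answer(query):
    """Hypothetical stand-in for the RAG chain; replace with the real chain call."""
    if "similarity search" in query:
        return "FAISS provides efficient similarity search."
    return FALLBACK_PHRASE


cases = [
    ("What library provides efficient similarity search?", False, "FAISS"),
    ("When was the Eiffel Tower built?", True, None),
]

failures = run_checks(stub_answer, cases)
print("failures:", failures)
```

Swapping `stub_answer` for a function that calls the actual chain would turn the manual checks in the table into a repeatable regression test; the exact-match comparison in `is_fallback` mirrors the requirement that the fallback phrase be returned verbatim.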

This project is a successful demonstration of building a production-ready, locally hosted RAG pipeline using modern LangChain techniques. The solution is fully documented and meets all technical and legal requirements for Module 1 submission.

The code is released under the MIT License, granting full usage rights for commercial and non-commercial purposes. The full license text is available in the accompanying LICENSE file.

RAVI KANTH MADDI