As Artificial Intelligence continues to evolve at a rapid pace, keeping track of its history, technical breakthroughs, and foundational research papers becomes increasingly difficult. Generic AI models often hallucinate details when asked about specific historical papers or complex technical implementations.

To solve this, I built the AI Research Guardian, a specialized Retrieval-Augmented Generation (RAG) system. Unlike a standard chatbot, this tool is strictly scoped to the domain of AI history and technical research. It lets researchers and developers chat directly with a curated library of PDF academic papers, so that answers are grounded in those source documents rather than in the model's general training data.

This project was built as part of the AAIDC Module 1 Certification. While the initial requirement was a basic RAG system, I enhanced the application to focus on security, relevance metrics, and a user-friendly web interface.

The primary goal of this system is to serve as an intelligent archive for AI knowledge. The system is designed to handle:

The system's knowledge base indexes the following curated collection of AI research papers and reports:

- AI Watch - Defining Artificial Intelligence 2.0 (European Commission Joint Research Centre): https://publications.jrc.ec.europa.eu/repository/handle/JRC120469
- Artificial Intelligence and the Future of Teaching and Learning (U.S. Department of Education): https://www.ed.gov/sites/ed/files/documents/ai-report/ai-report.pdf
- History and Evolution of Artificial Intelligence (IJSET - International Journal of Scientific Engineering and Technology): https://www.ijset.in/wp-content/uploads/IJSET_V12_issue3_565.pdf
- A Comprehensive Study on Artificial Intelligence (IJRTI - International Journal for Research Trends and Innovation): https://www.ijrti.org/papers/IJRTI2304061.pdf
- Artificial Intelligence: Short History, Present Developments, and Future Outlook (MIT Lincoln Laboratory): https://www.ll.mit.edu/sites/default/files/publication/doc/2021-03/Artificial%20Intelligence%20Short%20History%2C%20Present%20Developments%2C%20and%20Future%20Outlook%20-%20Final%20Report%20-%202021-03-16_0.pdf

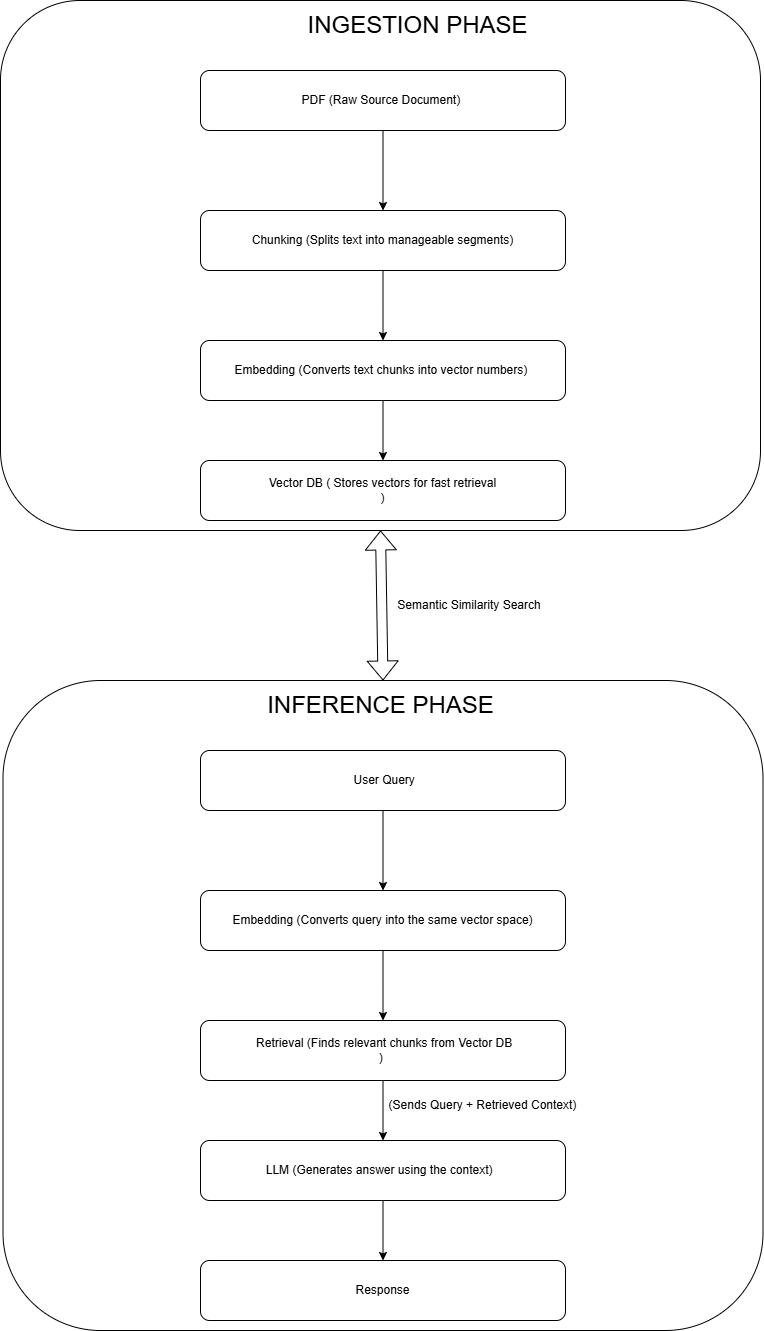

The application follows a linear RAG pipeline designed for accuracy and transparency.

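A critical challenge in RAG systems is preserving meaning when breaking large documents into chunks. I used LangChain's RecursiveCharacterTextSplitter instead of simple whitespace splitting. It tries to split on paragraph breaks first and falls back to line breaks, then spaces, only when a piece is still too long, so chunks tend to end at natural boundaries instead of mid-sentence or mid-word. This keeps technical explanations coherent when they are passed to the LLM as context.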
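To illustrate the idea, here is a simplified pure-Python re-implementation of recursive character splitting. It is not LangChain's actual code: it omits chunk overlap and length functions, and `recursive_split` and its default `chunk_size` are hypothetical names and values. It does use the same default separator order as RecursiveCharacterTextSplitter (paragraphs, lines, words, characters).

```python
from typing import List, Sequence

# Same order LangChain uses by default: paragraphs, lines, words, characters.
DEFAULT_SEPARATORS = ["\n\n", "\n", " ", ""]


def recursive_split(text: str, chunk_size: int = 500,
                    separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split text into chunks of at most chunk_size characters, preferring
    the coarsest separator present (simplified sketch: no chunk overlap)."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []

    # Choose the first separator that occurs in the text; "" means hard cut.
    sep, finer = "", []
    for i, candidate_sep in enumerate(separators):
        if candidate_sep == "" or candidate_sep in text:
            sep, finer = candidate_sep, list(separators[i + 1:])
            break
    if sep == "":
        # Last resort: fixed-size character windows.
        return [text[j:j + chunk_size] for j in range(0, len(text), chunk_size)]

    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging small pieces into one chunk
            continue
        if current:
            chunks.append(current)
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: split it with finer separators.
            chunks.extend(recursive_split(piece, chunk_size, finer))
            current = ""
    if current:
        chunks.append(current)
    return [c for c in chunks if c.strip()]
```

Because paragraph breaks are tried first, a short paragraph stays intact as its own chunk, and only an oversized paragraph gets broken down further at word boundaries.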

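For storage, I selected ChromaDB because it is lightweight and can run embedded inside a Python process without a separate database server. Retrieval ranks chunks by the distance between their embeddings and the query's embedding. ChromaDB's default metric is squared L2 (Euclidean) distance, which ranks results the same way as cosine similarity when embeddings are unit-normalized. This lets the user search by meaning (e.g., "How do neural networks learn?") rather than by keyword matching alone.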
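Why L2 distance and cosine similarity agree for normalized vectors: if ||a|| = ||b|| = 1, then ||a - b||² = 2 - 2·cos(a, b), so a smaller distance always means a higher similarity. The toy check below uses hypothetical 3-dimensional vectors (real embedding models produce hundreds of dimensions) to confirm that both metrics give the same ranking.

```python
import math
from typing import Dict, List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def squared_l2(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


# Toy "embeddings" with hypothetical values, normalized to unit length.
query = normalize([0.2, 0.9, 0.1])
docs: Dict[str, List[float]] = {
    "neural networks": normalize([0.25, 0.85, 0.05]),
    "expert systems": normalize([0.9, 0.1, 0.3]),
    "robotics": normalize([0.1, 0.3, 0.95]),
}

# Smallest distance first vs. largest similarity first: same order.
by_l2 = sorted(docs, key=lambda name: squared_l2(query, docs[name]))
by_cosine = sorted(docs, key=lambda name: -cosine_similarity(query, docs[name]))
```

If the embeddings were not normalized, the two rankings could differ; in that case, configuring the collection to use cosine distance explicitly is the safer choice.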

During development, I encountered significant compatibility issues between modern AI libraries (langchain, chromadb) and newer Python versions (3.13+). Specifically, the onnxruntime dependency required by ChromaDB was not yet stable on the latest Python releases at the time of development. I resolved this by enforcing a strict Python 3.11 environment and pinning specific versions of pydantic in the requirements.txt file to prevent runtime errors.
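One lightweight way to make the Python 3.11 requirement fail fast, rather than surfacing later as an obscure onnxruntime import error, is a version check at application start-up. This is a sketch under assumptions, not part of the project's code: `REQUIRED` and `check_python_version` are hypothetical names.

```python
import sys
from typing import Optional, Tuple

REQUIRED: Tuple[int, int] = (3, 11)  # hypothetical: the pinned interpreter version


def check_python_version(version_info: Optional[Tuple[int, ...]] = None) -> None:
    """Raise early if the interpreter's major.minor differs from REQUIRED."""
    if version_info is None:
        version_info = tuple(sys.version_info)
    if tuple(version_info[:2]) != REQUIRED:
        found = ".".join(str(part) for part in version_info[:3])
        raise RuntimeError(
            f"Python {REQUIRED[0]}.{REQUIRED[1]} is required (found {found}); "
            "see requirements.txt"
        )
```

Calling `check_python_version()` before importing chromadb gives a readable error message instead of a dependency failure deep inside an import.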

A key requirement for any modern AI system is safety. I implemented two layers of defense to reduce the risk of "hallucinations" and "jailbreaks."

Before any processing occurs, the system sanitizes the user's input. Queries that are empty, too short, or contain malicious formatting are rejected immediately. This avoids spending API calls on invalid input and blocks some basic prompt-injection attempts.
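The writeup does not list the exact validation rules, so the sketch below uses assumed thresholds and assumed examples of "malicious formatting" (control characters and common instruction-override phrasing). `validate_query`, `MIN_CHARS`, `MAX_CHARS` and the patterns are all hypothetical.

```python
import re
from typing import Optional, Tuple

MIN_CHARS = 5     # hypothetical minimum query length
MAX_CHARS = 1000  # hypothetical maximum query length

# Hypothetical "malicious formatting" checks: non-printing control characters
# (tab, newline and carriage return are allowed) and instruction-override phrasing.
CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")
OVERRIDE_PHRASES = re.compile(
    r"ignore (all |any )?(previous|prior|above) instructions|system prompt",
    re.IGNORECASE,
)


def validate_query(raw: str) -> Tuple[bool, Optional[str]]:
    """Return (is_valid, reason); meant to run before any embedding or LLM call."""
    query = raw.strip()
    if not query:
        return False, "Query is empty."
    if len(query) < MIN_CHARS:
        return False, "Query is too short."
    if len(query) > MAX_CHARS:
        return False, "Query is too long."
    if CONTROL_CHARS.search(query):
        return False, "Query contains control characters."
    if OVERRIDE_PHRASES.search(query):
        return False, "Query looks like a prompt-injection attempt."
    return True, None
```

Pattern lists like this are easy to rephrase around, so they work as a cheap first filter, not as a complete defense against prompt injection.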

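Standard RAG systems often try to be helpful even when the user asks irrelevant or harmful questions (e.g., "How do I make a weapon?"). To prevent this, I implemented a Distance Check: if even the closest retrieved chunk is too far from the query in embedding space, the question is treated as out of scope and the system declines to answer instead of generating a response.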

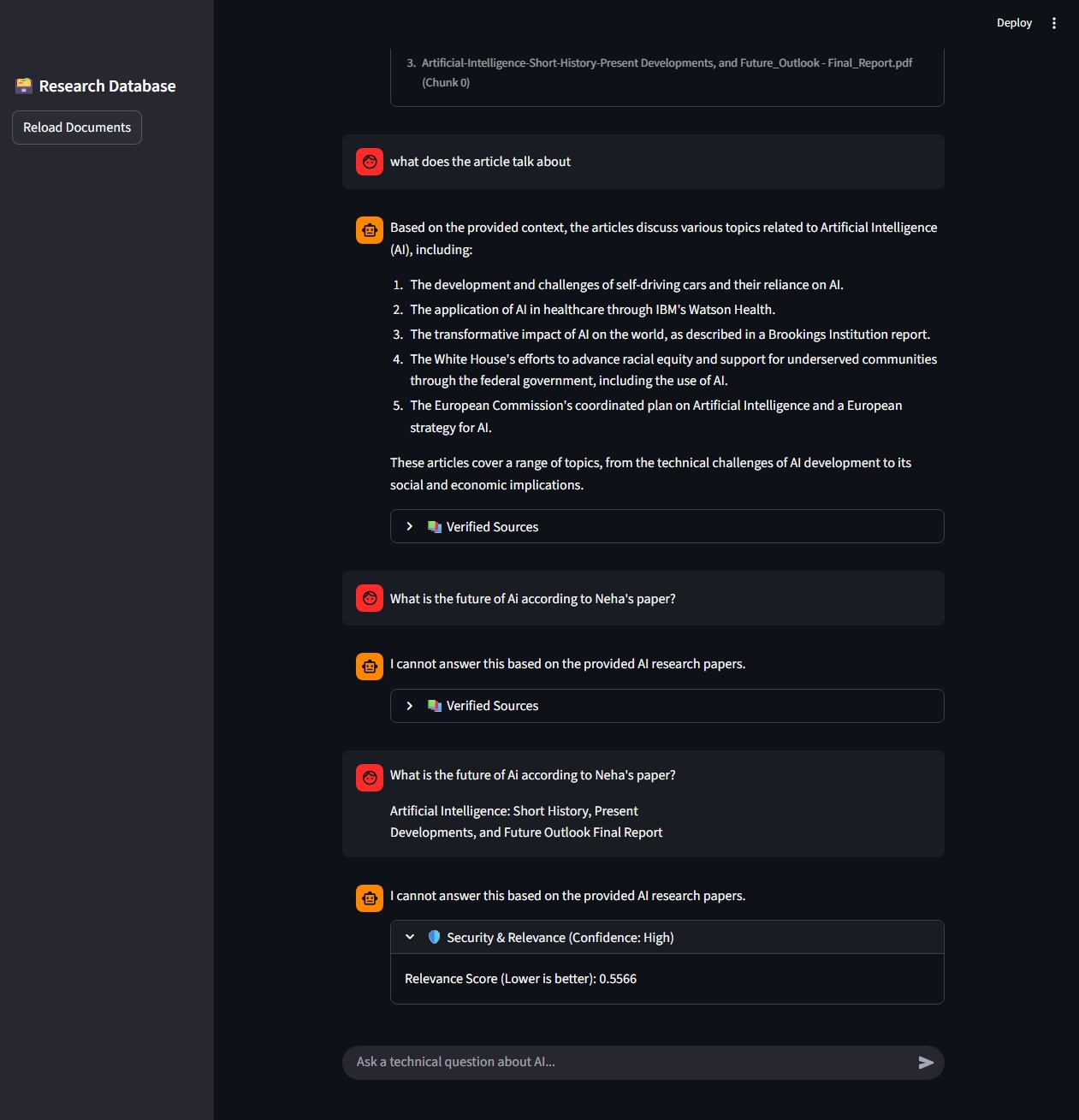
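A minimal sketch of such a gate, assuming retrieval results arrive as `(chunk_text, distance)` pairs where a lower distance means a closer match. The threshold value, the refusal text and the `distance_gate` name are hypothetical; a real threshold would need tuning against known in-scope and out-of-scope questions.

```python
from typing import List, Optional, Tuple

MAX_DISTANCE = 1.0  # hypothetical threshold; tune on labeled example queries

REFUSAL = ("I can only answer questions about AI history and research "
           "covered by the indexed papers.")


def distance_gate(hits: List[Tuple[str, float]],
                  max_distance: float = MAX_DISTANCE) -> Optional[str]:
    """Return a refusal message if retrieval is not relevant enough, else None.

    With ChromaDB, hits could be built from a query result, e.g.
    res = collection.query(query_texts=[q], n_results=3)
    hits = list(zip(res["documents"][0], res["distances"][0]))
    """
    if not hits:
        return REFUSAL
    best = min(distance for _, distance in hits)
    if best > max_distance:
        return REFUSAL  # nothing in the library is close enough: skip the LLM call
    return None
```

Checking the best hit, rather than the average, means a question is answered as long as at least one passage is genuinely relevant.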

To make the system transparent, I built a feature that exposes the "black box" of vector retrieval to the user.

For every answer generated, the UI displays a Confidence Score based on the L2 distance metric returned by ChromaDB.

This metric allows researchers to instantly judge whether they should trust the AI's response or verify the source documents themselves.
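The writeup does not specify how the distance is turned into a score, so here is one possible mapping, stated as an assumption: with unit-normalized embeddings and ChromaDB's default squared-L2 metric, distance = 2 - 2·cos, so cos = 1 - distance / 2, which can be clipped to a 0 to 1 score. The function names and display bands are hypothetical.

```python
def confidence_from_l2(distance: float) -> float:
    """Map a squared-L2 distance between unit vectors to a score in [0, 1].

    Assumes normalized embeddings, so cosine similarity = 1 - distance / 2.
    Negative similarities (distance > 2) are clipped to 0.
    """
    cosine = 1.0 - distance / 2.0
    return max(0.0, min(1.0, cosine))


def confidence_label(score: float) -> str:
    # Hypothetical display bands for the UI.
    if score >= 0.7:
        return "High"
    if score >= 0.4:
        return "Medium"
    return "Low"
```

If a collection is instead configured with cosine distance, ChromaDB returns 1 - cosine similarity, and the mapping would become `1 - distance`.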

I replaced the standard command-line interface with a Streamlit Web Application. This provides a chat-like experience similar to modern consumer AI tools. The sidebar allows for easy document management, while the main chat window features expandable "Source" tabs to view citations and relevance scores.

Figure 2: The Streamlit interface displaying a response with its associated confidence score.

This project demonstrates that building a RAG system goes beyond just connecting a database to an LLM. By focusing on safety thresholds, evaluation metrics, and domain specificity, we can create tools that are not only powerful but also reliable enough for academic research.

Future work will focus on adding persistent chat history and enabling drag-and-drop file uploads directly within the browser interface.

(Repo: https://github.com/HEPTA-111/rt-agentic-ai-certification.git)