https://github.com/lemessaA/rt-aaidc-project1.git

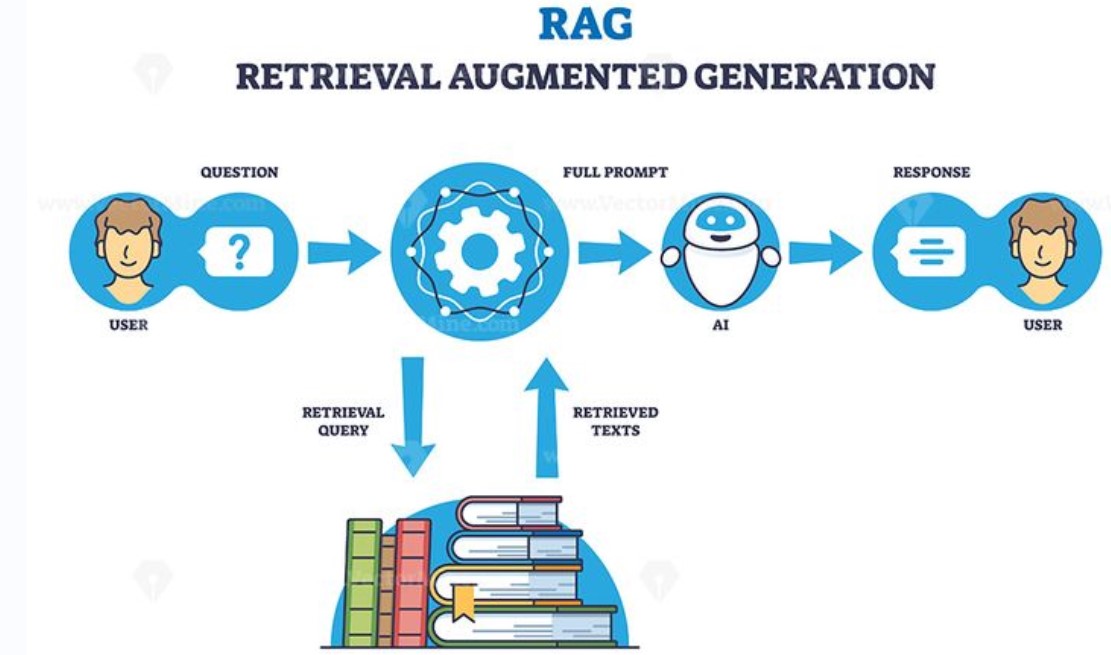

Retrieval-Augmented Generation (RAG) systems combine information retrieval with large language models to produce context-aware, accurate responses. A basic RAG pipeline works, but it usually behaves like a goldfish: it has no memory, no accountability, and no idea what went wrong when it fails.

This document explains how to enhance a RAG system by:

1. Adding basic logging and observability to understand system behavior

2. Introducing session-based memory to maintain conversational context across interactions

These enhancements improve reliability, debuggability, and user experience without turning the system into an overengineered nightmare.

This work presents an enhanced Retrieval-Augmented Generation (RAG) system designed to improve response accuracy, contextual continuity, and system observability. The architecture is modular and consists of four core components: the Query Handler, the Retrieval Engine, the Language Model Generator, and the Session and Observability Layer.

The Query Handler serves as the system’s entry point. It receives user queries, assigns a unique session identifier, and performs basic preprocessing such as normalization and validation. Session identifiers ensure that all subsequent retrieval, generation, and logging activities can be traced back to a single interaction.
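The entry-point behavior described above can be sketched in a few lines. This is a minimal illustration, not the project's actual handler: the names `handle_query`, `HandledQuery`, and `MAX_QUERY_CHARS` are hypothetical, and the length limit is an assumed value.

```python
import uuid
from dataclasses import dataclass
from typing import Optional

MAX_QUERY_CHARS = 2000  # hypothetical limit, not taken from the project


@dataclass
class HandledQuery:
    session_id: str
    text: str


def handle_query(raw_query: str, session_id: Optional[str] = None) -> HandledQuery:
    """Normalize and validate a query, and attach a session identifier."""
    # Normalization: collapse runs of whitespace and trim both ends.
    text = " ".join(raw_query.split())
    # Validation: reject empty or oversized input before it reaches retrieval.
    if not text:
        raise ValueError("query is empty")
    if len(text) > MAX_QUERY_CHARS:
        raise ValueError("query is too long")
    # Reuse the caller's session ID on follow-up turns; otherwise create one.
    return HandledQuery(session_id=session_id or uuid.uuid4().hex, text=text)
```

Passing the returned `session_id` back in on the next turn is what lets later stages group retrieval, generation, and log records under one session.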

The Retrieval Engine is responsible for fetching relevant documents from a vector database based on semantic similarity. User queries are embedded using a selected embedding model and matched against pre-indexed document embeddings.

The retrieval output includes:

Document identifiers

Similarity scores

Optional metadata (source, timestamp, section)

These retrieved documents form the external knowledge context supplied to the language model.
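To make the retrieval output concrete, here is a small sketch of similarity search over an in-memory index. It assumes cosine similarity and uses toy three-dimensional vectors in place of real embeddings; `RetrievedDoc`, `retrieve`, and `INDEX` are illustrative names, and a production system would delegate this step to the vector database.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class RetrievedDoc:
    doc_id: str
    score: float
    metadata: dict = field(default_factory=dict)


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(
    query_vec: List[float],
    index: Dict[str, Tuple[List[float], dict]],
    top_k: int = 2,
) -> List[RetrievedDoc]:
    """Rank pre-indexed embeddings by similarity to the query vector."""
    scored = [
        RetrievedDoc(doc_id, cosine(query_vec, vec), meta)
        for doc_id, (vec, meta) in index.items()
    ]
    scored.sort(key=lambda d: d.score, reverse=True)
    return scored[:top_k]


# Toy vectors; a real system would obtain these from an embedding model.
INDEX = {
    "doc-1": ([1.0, 0.0, 0.0], {"source": "guide.md", "section": "intro"}),
    "doc-2": ([0.0, 1.0, 0.0], {"source": "faq.md"}),
    "doc-3": ([0.9, 0.1, 0.0], {"source": "guide.md", "section": "setup"}),
}

results = retrieve([1.0, 0.0, 0.0], INDEX)
print([(d.doc_id, round(d.score, 3)) for d in results])
```

Each result carries the three fields listed above: an identifier, a similarity score, and optional metadata.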

Language Model Generator

The Language Model Generator combines the original user query, retrieved document content, and session memory to produce a final response. Prompt construction is dynamically enriched with contextual signals to improve coherence and reduce hallucination.

Performance metrics such as response latency and token usage are captured during this phase for observability and optimization.
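One possible shape for prompt construction and metric capture is sketched below. The prompt template, the `build_prompt` and `generate` helpers, and the `fake_llm` stand-in are all assumptions made for illustration. The token counts use whitespace splitting, which only approximates what a real tokenizer would report.

```python
import time
from typing import Callable, Dict, List


def build_prompt(query: str, documents: List[str], history: List[str]) -> str:
    """Assemble the prompt from session memory, retrieved context, and the query."""
    parts = []
    if history:
        parts.append("Conversation so far:\n" + "\n".join(history))
    if documents:
        context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(documents, 1))
        parts.append("Context:\n" + context)
    parts.append("Answer using only the context above.\nQuestion: " + query)
    return "\n\n".join(parts)


def generate(prompt: str, llm: Callable[[str], str]) -> Dict[str, object]:
    """Call the model and record latency plus a rough token count."""
    start = time.perf_counter()
    answer = llm(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    # Whitespace splitting is a stand-in for tokenizer-level token usage.
    return {
        "answer": answer,
        "latency_ms": latency_ms,
        "prompt_tokens": len(prompt.split()),
        "completion_tokens": len(answer.split()),
    }


def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    return "RAG combines retrieval with generation."


prompt = build_prompt(
    "What is RAG?",
    documents=["RAG pairs a retriever with a language model."],
    history=["User: Hi", "Assistant: Hello!"],
)
result = generate(prompt, fake_llm)
```

Keeping the model behind a plain callable makes it easy to swap in a hosted or local model without touching the timing code.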

A dedicated Session and Observability Layer manages conversational state and system-level telemetry. It covers two concerns: session-based memory integration, and logging and observability.

Session-Based Memory Integration

Session-based memory is scoped strictly to a single user session and persists only during active interaction. It stores:

Previous user queries

Model-generated responses

References to retrieved documents

This memory is injected into:

Retrieval phase: to augment search queries with contextual continuity

Generation phase: to enrich prompt context sent to the language model

This approach improves multi-turn dialogue consistency without introducing long-term privacy risks.
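A session-scoped memory of this kind could look roughly like the sketch below. It keeps everything in process memory and drops it when the session ends. The class and method names, the `max_turns` cap, and the choice to augment retrieval with only the previous query are illustrative assumptions rather than the project's implementation.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, Iterable, List


@dataclass
class Turn:
    query: str
    response: str
    doc_ids: List[str] = field(default_factory=list)


class SessionMemory:
    """In-process memory keyed by session ID; nothing is written to disk."""

    def __init__(self, max_turns: int = 5) -> None:
        self.max_turns = max_turns  # hypothetical cap to keep prompts small
        self._sessions: Dict[str, Deque[Turn]] = {}

    def add_turn(self, session_id: str, query: str, response: str,
                 doc_ids: Iterable[str]) -> None:
        turns = self._sessions.setdefault(session_id, deque(maxlen=self.max_turns))
        turns.append(Turn(query, response, list(doc_ids)))

    def augment_query(self, session_id: str, query: str) -> str:
        """Retrieval phase: prepend the previous query so follow-ups keep their topic."""
        turns = self._sessions.get(session_id)
        return f"{turns[-1].query} {query}" if turns else query

    def as_prompt_context(self, session_id: str) -> List[str]:
        """Generation phase: render earlier turns as lines for the prompt."""
        lines = []
        for turn in self._sessions.get(session_id, ()):
            lines.append(f"User: {turn.query}")
            lines.append(f"Assistant: {turn.response}")
        return lines

    def end_session(self, session_id: str) -> None:
        """Drop all state when the session closes."""
        self._sessions.pop(session_id, None)


memory = SessionMemory(max_turns=2)
memory.add_turn("s1", "What is FAISS?", "A vector search library.", ["doc-7"])
search_query = memory.augment_query("s1", "How do I install it?")
```

Here the follow-up "How do I install it?" is searched as "What is FAISS? How do I install it?", which gives the retriever the topic that the pronoun refers to.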

Logging and Observability

Basic logging is implemented at each stage of the RAG pipeline. Logs capture:

User queries and session identifiers

Retrieved document identifiers and similarity scores

Generation latency and token usage

System errors and exceptions
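As a rough sketch of what such log records might look like, the example below emits one JSON line per pipeline stage using Python's standard `logging` module. The `log_event` helper, the field names, the per-request `request_id`, and all values are assumptions made for illustration.

```python
import json
import logging
import uuid

logging.basicConfig(level=logging.INFO, format="%(message)s")
logger = logging.getLogger("rag")


def log_event(stage: str, request_id: str, session_id: str, **fields) -> str:
    """Emit one JSON log line; the shared request_id ties the stages together."""
    record = {"stage": stage, "request_id": request_id, "session_id": session_id, **fields}
    line = json.dumps(record, sort_keys=True)
    logger.info(line)
    return line


# Illustrative values only; none of these numbers come from the project.
request_id = uuid.uuid4().hex
log_event("query", request_id, "s1", query="What is RAG?")
log_event("retrieval", request_id, "s1", doc_ids=["doc-1", "doc-3"], scores=[0.91, 0.84])
log_event("generation", request_id, "s1", latency_ms=412.0, total_tokens=187)

try:
    raise TimeoutError("vector store did not respond")
except TimeoutError as exc:
    # Errors are logged with the same IDs so they can be matched to a request.
    log_event("error", request_id, "s1", error=type(exc).__name__, detail=str(exc))
```

Structured lines like these are easy to parse later, which matters more than the exact field names chosen here.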

From these logs, key observability metrics are derived:

Response time

Retrieval success rate

Error frequency

Request-level tracing is used to follow a single query across retrieval and generation phases, enabling effective debugging and performance analysis.
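The derivation of these metrics can be illustrated with a few lines of Python over parsed log records. The record shape, the sample values, and the definition of a "successful" retrieval (at least one document returned) are assumptions for this sketch, not measurements from the system.

```python
from statistics import mean
from typing import Dict, List

# Illustrative records in the shape of parsed log lines; the values are made up.
LOGS = [
    {"request_id": "r1", "stage": "retrieval", "doc_ids": ["doc-1"]},
    {"request_id": "r1", "stage": "generation", "latency_ms": 400.0},
    {"request_id": "r2", "stage": "retrieval", "doc_ids": []},
    {"request_id": "r2", "stage": "generation", "latency_ms": 600.0},
    {"request_id": "r3", "stage": "retrieval", "doc_ids": ["doc-2", "doc-5"]},
    {"request_id": "r3", "stage": "error", "error": "TimeoutError"},
]


def summarize(logs: List[dict]) -> Dict[str, object]:
    """Derive response time, retrieval success rate, and error frequency."""
    requests = {r["request_id"] for r in logs}
    latencies = [r["latency_ms"] for r in logs if r["stage"] == "generation"]
    retrievals = [r for r in logs if r["stage"] == "retrieval"]
    # A retrieval counts as successful here if it returned at least one document.
    hits = sum(1 for r in retrievals if r["doc_ids"])
    errors = sum(1 for r in logs if r["stage"] == "error")
    return {
        "mean_latency_ms": mean(latencies) if latencies else None,
        "retrieval_success_rate": hits / len(retrievals) if retrievals else None,
        "errors_per_request": errors / len(requests) if requests else None,
    }


def trace(logs: List[dict], request_id: str) -> List[str]:
    """Request-level tracing: the stages recorded for one request, in log order."""
    return [r["stage"] for r in logs if r["request_id"] == request_id]


summary = summarize(LOGS)
```

Calling `trace(LOGS, "r3")` shows that request `r3` completed retrieval and then failed, which is the kind of question request-level tracing is meant to answer.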

Text Chunking Strategy

Document preprocessing uses a fixed-size text chunking strategy with overlap to balance retrieval accuracy and computational efficiency.

Recommended configuration:

Chunk size: 500–1,000 tokens

Chunk overlap: 50–150 tokens

Smaller chunks improve retrieval granularity, while overlap ensures semantic continuity across chunk boundaries. The optimal configuration depends on document structure and embedding model limits.
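A fixed-size chunker with overlap can be sketched as follows. The function name `chunk_tokens` is hypothetical, and the example uses placeholder tokens where a real pipeline would use the tokenizer that matches the embedding model.

```python
from typing import List


def chunk_tokens(tokens: List[str], chunk_size: int = 500, overlap: int = 50) -> List[List[str]]:
    """Split a token list into fixed-size chunks that share `overlap` tokens."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    step = chunk_size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + chunk_size])
        if start + chunk_size >= len(tokens):
            break  # this chunk already reaches the end of the document
    return chunks


# Placeholder tokens stand in for real tokenizer output in this sketch.
tokens = [f"t{i}" for i in range(1200)]
chunks = chunk_tokens(tokens, chunk_size=500, overlap=50)
print([len(c) for c in chunks])  # prints [500, 500, 300]
```

With a chunk size of 500 and an overlap of 50, the window advances 450 tokens at a time, so the last 50 tokens of each chunk reappear at the start of the next one.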

Vector Store and Embedding Model Selection

Vector Stores

Commonly supported vector databases include:

FAISS (lightweight, local deployments)

Pinecone (managed, scalable cloud solution)

Weaviate or Milvus (feature-rich, metadata filtering)

Selection criteria should consider dataset size, latency requirements, and deployment environment.

Embedding Models

Embedding models should be chosen based on:

Semantic accuracy

Dimensionality compatibility with the vector store

Computational cost

Both open-source and hosted embedding models are supported, provided they produce consistent vector representations during indexing and querying.
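The consistency requirement can be enforced with a small guard that runs before querying. The sketch below is one possible approach, assuming the index records which model and dimension it was built with; `EmbeddingSpec`, `check_compatible`, and `check_vector` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EmbeddingSpec:
    model_name: str  # hypothetical identifier for whichever model is chosen
    dimension: int


def check_compatible(index_spec: EmbeddingSpec, query_spec: EmbeddingSpec) -> None:
    """Fail fast if querying would use a different model or vector size than indexing."""
    if index_spec != query_spec:
        raise ValueError(f"index built with {index_spec}, but query uses {query_spec}")


def check_vector(vector: List[float], spec: EmbeddingSpec) -> List[float]:
    """Reject vectors whose length does not match the index dimension."""
    if len(vector) != spec.dimension:
        raise ValueError(f"expected {spec.dimension} dimensions, got {len(vector)}")
    return vector
```

Comparing the model name as well as the dimension matters because two models can share a dimension while producing vectors that are not comparable.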

Installation Instructions

Clone the project repository.

Install required dependencies listed in the configuration file.

Configure environment variables for the language model and vector store.

Preprocess documents and build the vector index.

Start the application server.

Usage Instructions

Submit a query through the user interface or API endpoint.

The system retrieves relevant documents using semantic search.

The language model generates a response using retrieved context and session memory.

Logs and observability metrics are recorded automatically.

Experiments were conducted to evaluate the impact of logging, observability, and session-based memory on system performance and response quality.

Two system configurations were compared:

Baseline RAG without logging or memory

Enhanced RAG with logging, observability, and session-based memory

Test scenarios included single-turn queries, multi-turn conversations, and ambiguous follow-up questions. Performance metrics and qualitative response relevance were recorded across multiple sessions.

The enhanced RAG system demonstrated measurable improvements across all evaluation dimensions. Session-based memory significantly improved the relevance of responses in multi-turn interactions. Follow-up questions showed higher contextual accuracy compared to the baseline system.

Logging and observability enabled precise identification of retrieval failures and latency bottlenecks. Error diagnosis time was reduced, and system behavior became more transparent during evaluation.

Overall, the enhanced system produced more coherent, consistent, and traceable responses.

This study demonstrates that incorporating basic logging, observability, and session-based memory substantially improves the effectiveness of RAG systems. Logging and observability provide essential insight into system operations, while session-based memory enables contextual continuity across interactions.

These enhancements require minimal architectural changes yet deliver significant gains in reliability, maintainability, and user experience. Future work may explore long-term memory strategies and adaptive retrieval optimization based on observed session behavior.