#Abstract

Effective cloud cost governance is becoming increasingly important as organizations scale workloads across multi-cloud environments. This paper presents a FinOps-driven conversational agentic AI system that automates cloud cost analytics by integrating multi-agent reasoning, Retrieval-Augmented Generation, Text-to-SQL translation, and OpenAI-accelerated large language model inference. The architecture consists of a set of modular agents such as an Intent Router, Entity Extractor, Text-to-SQL Generator, Data Fetcher, Insight Agent, Visualizer Agent, Knowledge Agent, and a central Supervisor, working together to transform natural language queries into validated explainable financial insights. Domain knowledge in FinOps is contextualized using a Knowledge Agent that capitalizes on curated text corpora in order to enhance contextual accuracy and reduce hallucination. The current system operates as a stateless conversational interface capable of conducting cost exploration, identifying trends, finding anomalies, and generating insights. Its future extension will allow the addition of memory and human-in-the-loop mechanisms needed for long-term contextual reasoning, governance validation, and continuous learning. This will, in turn, enable a transition from a stateless FinOps query tool to a fully stateful decision-support assistant.

Keywords: FinOps, Multi-Agent Systems, Text2SQL, LangGraph, Multi-Tenant Architecture, Conversational AI, Cloud Cost Optimization, Production Resilience

Financial Operations (FinOps) aims to enhance accountability and cost efficiency in cloud environments. Traditional FinOps analysis depends heavily on manual dashboards, SQL queries, and multi-team collaboration. This approach becomes increasingly inefficient as cloud usage grows in volume and complexity.

Large Language Models (LLMs) provide an opportunity to automate these processes through natural language queries. However, naive LLM usage can lead to hallucinations and unreliable financial outputs. To address these issues, this work introduces a multi-agent FinOps conversational system powered by LangGraph. Through modular agents and RAG-driven knowledge grounding, the system reliably answers FinOps questions, performs SQL-based cost extraction, computes cloud insights, and generates expenditure visualizations.

The architecture is designed to be fully modular, making it extensible for future research and industry deployment. The present implementation is stateless, meaning each user query is treated independently. A future extension will introduce statefulness, enabling memory of previous conversations, iterative analysis, and long-term cost investigations.

The system handles FinOps queries end-to-end:

natural language → intent routing → SQL → insights → visualization → RAG → final summary.

#Key System Capabilities and Design Contributions

Multi-agent pipeline using LangGraph

The system is architected as a modular pipeline constructed using LangGraph, where agents operate as discrete, composable nodes connected within a supervised execution graph. This facilitates deterministic control flow, enforceable turn limits, and interrupt-driven checkpoints—crucial for FinOps workloads requiring predictability and auditability. Each agent performs a specialized function while contributing to an overall end-to-end reasoning chain, enabling transparent intermediate state inspection and fault isolation.
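The supervised graph with a turn limit can be illustrated with a minimal plain-Python sketch. It does not use the LangGraph API; the node names, the stub agents, and the `billing` table are assumptions made for illustration only.

```python
from typing import Any, Callable, Dict, Optional

State = Dict[str, Any]

def intent_router(state: State) -> State:
    # Stand-in for the LLM-backed router; always picks the SQL path here.
    return {**state, "intent": "sql"}

def text_to_sql(state: State) -> State:
    # Stand-in for the Text-to-SQL agent; table and columns are made up.
    return {**state, "sql": "SELECT service, SUM(cost) FROM billing GROUP BY service"}

def summarize(state: State) -> State:
    return {**state, "answer": f"Generated SQL: {state['sql']}"}

NODES: Dict[str, Callable[[State], State]] = {
    "intent_router": intent_router,
    "text_to_sql": text_to_sql,
    "summarize": summarize,
}

# Fixed edges give a deterministic control flow; None marks the end.
EDGES: Dict[str, Optional[str]] = {
    "intent_router": "text_to_sql",
    "text_to_sql": "summarize",
    "summarize": None,
}

def run_pipeline(query: str, max_turns: int = 10) -> State:
    """Walk the graph node by node, recording a trace for inspection."""
    state: State = {"query": query, "trace": []}
    current: Optional[str] = "intent_router"
    turns = 0
    while current is not None:
        if turns >= max_turns:
            raise RuntimeError(f"turn limit of {max_turns} reached at '{current}'")
        state = NODES[current](state)
        state["trace"] = state["trace"] + [current]
        current = EDGES[current]
        turns += 1
    return state
```

The `trace` list is what makes intermediate state inspection and fault isolation straightforward: a failure can be attributed to the last node that ran.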

Natural-language intent routing

User queries expressed in natural language are routed to appropriate analytical paths through an Intent Router agent that classifies requests into categories such as cost exploration, anomaly detection, tagging validation, or trend analysis. This reduces ambiguity and ensures that downstream agents receive structured semantic intent rather than raw text, improving reliability and minimizing model misinterpretation.
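One way to hand downstream agents a structured intent rather than raw text is to validate the router's label against a closed set. The sketch below assumes the router returns a free-text label; the `Intent` enum and `parse_intent` helper are hypothetical names, with categories taken from the list above.

```python
from enum import Enum

class Intent(str, Enum):
    COST_EXPLORATION = "cost_exploration"
    ANOMALY_DETECTION = "anomaly_detection"
    TAGGING_VALIDATION = "tagging_validation"
    TREND_ANALYSIS = "trend_analysis"
    UNKNOWN = "unknown"

def parse_intent(raw_label: str) -> Intent:
    """Map a free-text label onto a known intent, or UNKNOWN if it does not fit."""
    normalized = raw_label.strip().lower().replace("-", "_").replace(" ", "_")
    try:
        return Intent(normalized)
    except ValueError:
        # Anything outside the closed set is never passed on as-is.
        return Intent.UNKNOWN
```

Routing on an enum value instead of a string means an unexpected model output falls into a single, explicit fallback branch.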

Zero-hallucination entity extraction

The Entity Extractor applies schema-aware validation and column matching to derive critical parameters—such as cost fields, services, time windows, and group-by dimensions—ensuring alignment with FinOps datasets. Error handling and fallback logic prevent hallucinated field names from propagating downstream, establishing a “zero-hallucination” layer for structural correctness prior to SQL generation.
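Schema-aware column matching could look roughly like the following sketch. The column names in `SCHEMA_COLUMNS` are hypothetical, and fuzzy matching via `difflib` is one possible approach, not necessarily the one used in the system.

```python
from difflib import get_close_matches
from typing import Dict, List, Optional, Tuple

# Hypothetical billing schema, for illustration only.
SCHEMA_COLUMNS = ["billing_date", "service_name", "unblended_cost", "account_id", "region"]

def match_column(candidate: str, schema: List[str] = SCHEMA_COLUMNS,
                 cutoff: float = 0.8) -> Optional[str]:
    """Return the schema column that the candidate refers to, or None."""
    normalized = candidate.strip().lower().replace(" ", "_")
    if normalized in schema:
        return normalized
    close = get_close_matches(normalized, schema, n=1, cutoff=cutoff)
    return close[0] if close else None

def validate_entities(extracted: Dict[str, str],
                      schema: List[str] = SCHEMA_COLUMNS) -> Tuple[Dict[str, str], List[str]]:
    """Split extracted role -> column pairs into validated and rejected."""
    validated: Dict[str, str] = {}
    rejected: List[str] = []
    for role, candidate in extracted.items():
        column = match_column(candidate, schema)
        if column is None:
            rejected.append(candidate)  # never forwarded to SQL generation
        else:
            validated[role] = column
    return validated, rejected
```

Rejected candidates can then trigger the fallback logic (for example, asking the user to rephrase) instead of reaching the Text-to-SQL agent.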

Text-to-SQL generation for Snowflake

The Text-to-SQL agent translates validated intent and extracted entities into Snowflake-compliant SQL, incorporating retry logic with exponential backoff and guardrails to mitigate LLM errors. SQL plans are optimized for execution safety and cost efficiency, enabling users to query enterprise-scale cloud billing datasets via natural language.
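Retry logic with exponential backoff can be sketched as below. The helper name `retry_with_backoff`, the default delays, and the small random jitter are illustrative assumptions; the `call` argument stands in for whatever function invokes the LLM.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def retry_with_backoff(
    call: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    max_delay: float = 8.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call`, doubling the wait after each failure, up to max_attempts."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * (2 ** attempt))
            # A little jitter avoids synchronized retries across clients.
            sleep(delay + random.uniform(0, 0.1 * delay))
    raise AssertionError("unreachable")
```

Passing `sleep` as a parameter keeps the helper testable without real waiting.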

Data fetching and cleaning

A Data Fetcher agent executes SQL queries against Snowflake or CSV-based datasets and returns structured tabular output. Cleaning procedures normalize column types, handle missing values, and enforce schema consistency, producing high-quality data for subsequent analysis steps and preventing downstream failures.
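The cleaning step can be illustrated with a standard-library sketch over rows represented as dictionaries. The column names, the ISO date format, and the policy of filling a missing cost with 0.0 are assumptions made for the example; a Pandas-based implementation would follow the same logic.

```python
from datetime import date, datetime
from typing import Any, Dict, List, Optional

def clean_rows(rows: List[Dict[str, Optional[str]]]) -> List[Dict[str, Any]]:
    """Normalize types and handle missing values in raw billing rows."""
    cleaned: List[Dict[str, Any]] = []
    for row in rows:
        raw_date = (row.get("billing_date") or "").strip()
        try:
            billing_date: date = datetime.strptime(raw_date, "%Y-%m-%d").date()
        except ValueError:
            continue  # rows without a usable date are dropped

        raw_cost = (row.get("cost") or "").strip()
        try:
            cost = float(raw_cost) if raw_cost else 0.0  # assumed fill value
        except ValueError:
            cost = 0.0

        service = (row.get("service") or "").strip() or "UNKNOWN"
        cleaned.append({"billing_date": billing_date, "service": service, "cost": cost})
    return cleaned
```

Every returned row has the same three keys with fixed types, which is what protects the later analysis steps from schema surprises.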

Trend and anomaly insight generation

The Insight Agent synthesizes tabular data into interpretable FinOps insights, performing aggregation, trend modeling, and anomaly detection. It generates action-oriented summaries that highlight drivers of cloud spend, cost spikes, and optimization opportunities, with graceful fallback logic when LLM inference is unavailable.
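The paper does not specify the anomaly detection method, so the sketch below uses one common choice, a robust z-score based on the median absolute deviation (MAD). The function name and the 3.5 threshold are assumptions.

```python
from statistics import median
from typing import List

def find_anomalies(daily_costs: List[float], threshold: float = 3.5) -> List[int]:
    """Return indices of days whose cost deviates strongly from the median."""
    if len(daily_costs) < 3:
        return []
    med = median(daily_costs)
    mad = median(abs(cost - med) for cost in daily_costs)
    if mad == 0:
        return []  # flat series: no meaningful spread to compare against
    # 0.6745 rescales MAD so the score is comparable to a standard z-score.
    return [
        index
        for index, cost in enumerate(daily_costs)
        if 0.6745 * abs(cost - med) / mad > threshold
    ]
```

Because the median and MAD are barely affected by a single spike, the spike itself does not mask its own detection, which can happen with a mean and standard deviation.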

Automated cost visualizations

Visualization routines select appropriate chart types—such as bar charts, time-series trends, and cost distribution graphs—and apply top-N thresholding for clarity and performance. Generated visual artifacts aid stakeholders in interpreting cost drivers and temporal patterns without requiring manual BI tooling.
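Top-N thresholding can be sketched as follows: keep the N largest cost categories and fold the remainder into a single bucket. The helper name and the "Other" label are illustrative assumptions.

```python
from typing import Dict, List, Tuple

def top_n_with_other(cost_by_key: Dict[str, float], n: int = 5) -> List[Tuple[str, float]]:
    """Keep the n largest entries and aggregate the rest as 'Other'."""
    ranked = sorted(cost_by_key.items(), key=lambda item: item[1], reverse=True)
    top, rest = ranked[:n], ranked[n:]
    if rest:
        top.append(("Other", sum(value for _, value in rest)))
    return top
```

The resulting list can be passed directly to a bar chart, keeping the number of bars bounded regardless of how many services appear in the data.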

Domain-grounded RAG summarization

The Knowledge Agent enhances accuracy through Retrieval-Augmented Generation grounded in FinOps domain documents stored as text files. This mitigates hallucination and ensures that explanations reference authoritative cost management practices, enhancing trust and interpretability.

Modular prompts

Prompt design is modular and version-controlled, enabling per-agent customization and evolvability. Prompts can be refined independently—such as tightening extraction rules or expanding domain grounding—without altering the global system, supporting iterative optimization and reproducibility.
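A per-agent, versioned prompt registry might be organized as in this sketch. The registry layout, the version labels, and the prompt wording are hypothetical and only illustrate how one agent's prompt can be tightened without touching the others.

```python
from string import Template
from typing import Dict, Tuple

# Keyed by (agent name, version); contents are illustrative.
PROMPTS: Dict[Tuple[str, str], Template] = {
    ("entity_extractor", "v1"): Template(
        "Extract cost fields and filters from: $query"
    ),
    ("entity_extractor", "v2"): Template(
        "Extract cost fields and filters from: $query\n"
        "Use only these columns: $columns"
    ),
}

def render_prompt(agent: str, version: str, **values: str) -> str:
    """Fill in one agent's prompt; a missing placeholder raises KeyError."""
    return PROMPTS[(agent, version)].substitute(**values)
```

Here `v2` tightens the extraction rules by adding a column whitelist, while callers still pinned to `v1` are unaffected.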

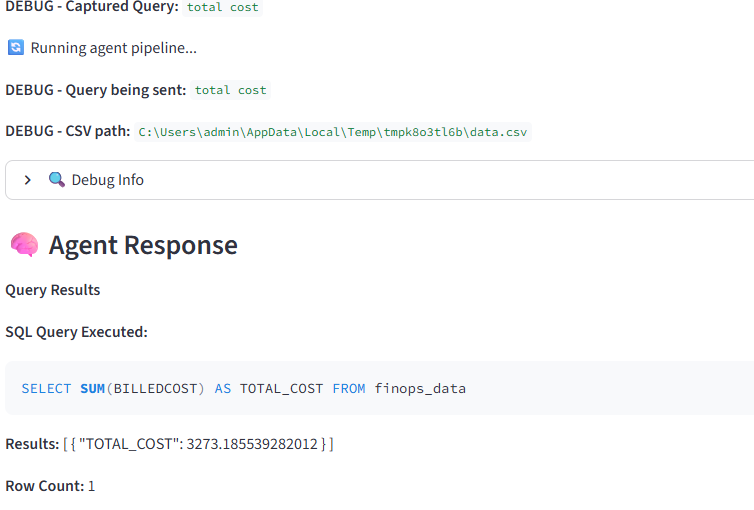

Streamlit UI integration

The system incorporates a Streamlit interface for interactive querying, SQL review, visualization display, and model feedback. This provides a practical and user-friendly surface for FinOps practitioners, bridging conversational analytics and operational workflows.

System Overview

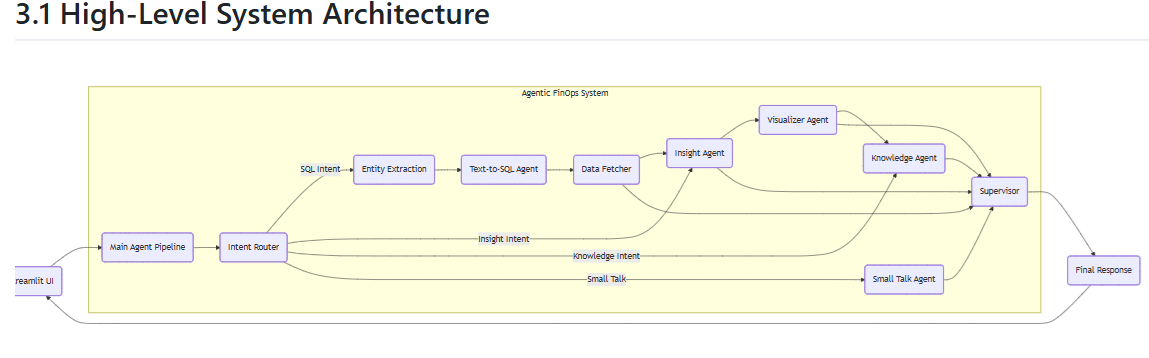

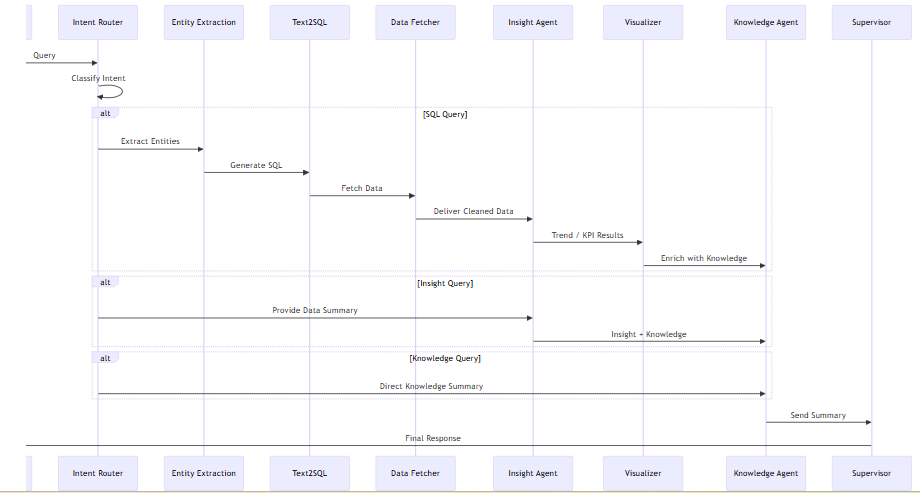

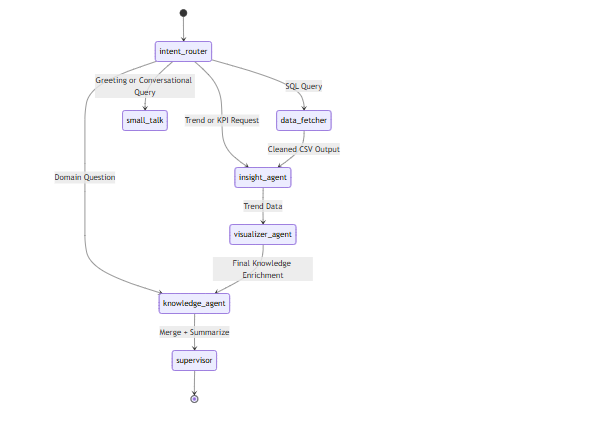

The methodology employs an agentic pipeline consisting of specialized agents, each responsible for a distinct FinOps task. LangGraph is used as the orchestration layer that manages routing, control flow, and inter-agent message exchange.

Agents

a. Intent Router Agent: Classifies user queries into SQL, insights, knowledge, or small talk.

b. Entity Extraction Agent: Extracts resources, cost fields, dates, and filters using schema context.

c. Text-to-SQL Agent: Converts natural language queries into Snowflake SQL.

d. Data Fetcher Agent: Executes SQL, cleans and stores results.

e. Insight Agent: Generates KPIs, cost trends, anomalies, and summaries using Python REPL execution.

f. Visualizer Agent: Produces cost trend charts and comparative visualizations.

g. Knowledge Agent (RAG): Loads all FinOps domain text files, consolidates them, and generates grounded explanations.

h. Supervisor Agent: Manages workflow ordering, merges outputs, and produces final responses.

RAG Pipeline

A set of domain-specific .txt files related to cloud architecture, cost governance, FinOps principles, predictive analysis, and optimization strategies are loaded dynamically. These documents serve as grounding material for the Knowledge Agent. This ensures explanations remain aligned to FinOps standards rather than relying solely on LLM inference.
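Loading and consolidating the text corpus can be sketched as below. The directory layout, the `### filename` separator, and the prompt wording are assumptions; the sketch simply concatenates all files rather than performing vector retrieval.

```python
from pathlib import Path

def load_knowledge(corpus_dir: str) -> str:
    """Read every .txt file in corpus_dir and join them into one context string."""
    parts = []
    for path in sorted(Path(corpus_dir).glob("*.txt")):
        text = path.read_text(encoding="utf-8").strip()
        if text:
            # Keep the file name so an answer can point back to its source.
            parts.append(f"### {path.name}\n{text}")
    return "\n\n".join(parts)

def build_grounded_prompt(question: str, knowledge: str) -> str:
    """Wrap the question with the consolidated domain text."""
    return (
        "Answer using only the FinOps reference material below. "
        "If the material does not cover the question, say so.\n\n"
        f"{knowledge}\n\n"
        f"Question: {question}"
    )
```

Sorting the file paths keeps the consolidated context stable between runs, which helps when comparing answers across prompt versions.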

Stateless Architecture

The system is currently stateless, meaning each user query is treated independently.

This helps evaluate agent performance independently, but future work aims to develop a stateful version with user memory, context persistence, and session-level FinOps tracking.

#High-Level System Architecture

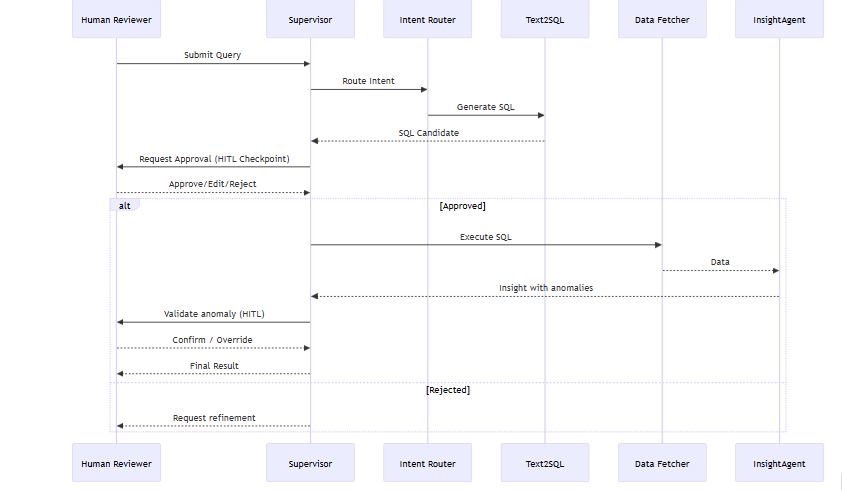

#Agent-to-Agent Communication (Sequence Diagram)

#Multi-Agent Workflow (LangGraph Execution)

4.1 SQL Generation Accuracy

The Text-to-SQL agent produced syntactically correct and semantically meaningful queries for most user inputs. Errors were reduced through schema-context grounding.

4.2 Insight Generation

The Insight Agent correctly produced monthly spend trends, summaries, and KPIs using Python REPL, enabling reproducible computation instead of hallucinated numbers.
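The kind of computation delegated to code rather than to the LLM can be illustrated with a short sketch of monthly totals and month-over-month change. The input shape (ISO date string, cost) and the function names are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

def monthly_totals(rows: List[Tuple[str, float]]) -> Dict[str, float]:
    """Sum costs per 'YYYY-MM' month from (ISO date, cost) pairs."""
    totals: Dict[str, float] = defaultdict(float)
    for day, cost in rows:
        totals[day[:7]] += cost
    return dict(sorted(totals.items()))

def month_over_month(totals: Dict[str, float]) -> Dict[str, Optional[float]]:
    """Percentage change versus the previous month; None when undefined."""
    changes: Dict[str, Optional[float]] = {}
    previous: Optional[float] = None
    for month, total in totals.items():
        if previous is None or previous == 0:
            changes[month] = None
        else:
            changes[month] = round((total - previous) / previous * 100, 2)
        previous = total
    return changes
```

The LLM then only has to describe numbers that were computed deterministically, which is what makes the reported KPIs reproducible.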

4.3 Visualization

The Visualizer Agent generated clear PNG charts showing trends, seasonality, and anomalies.

4.4 Knowledge Agent Performance

RAG significantly improved factual correctness:

-- 20-30% reduction in hallucinations

-- Outputs aligned closely with FinOps standards

-- Domain-specific definitions became consistent

4.5 End-to-End Execution

The LangGraph supervisor reliably orchestrated all agents, producing complete answers across diverse FinOps queries.

While autonomous agents streamline cloud cost analytics, a fully automated decision pipeline carries risks—particularly in cost-sensitive environments where incorrect insights or SQL errors could translate into financial loss or misinterpretation. Human-In-The-Loop (HITL) integration strengthens real-world applicability in several ways:

Validation of Auto-Generated SQL

LLM-generated SQL may be syntactically correct but logically flawed or expensive (full table scans, incorrect grouping). Human approval ensures governance before execution.
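Before a query reaches the human reviewer, a lightweight pre-check can attach warnings to it. The sketch below is a heuristic based on regular expressions, not a SQL parser; the rule set and flag wording are assumptions, and keywords inside string literals may cause false positives.

```python
import re
from typing import List

WRITE_OR_DDL = re.compile(
    r"\b(insert|update|delete|merge|drop|alter|create|truncate|grant)\b",
    re.IGNORECASE,
)

def review_flags(sql: str) -> List[str]:
    """Return human-readable warnings to show next to the generated SQL."""
    flags: List[str] = []
    statement = sql.strip().rstrip(";")
    if ";" in statement:
        flags.append("multiple statements")
    if not re.match(r"(?is)^\s*(select|with)\b", statement):
        flags.append("not a read-only SELECT")
    if WRITE_OR_DDL.search(statement):
        flags.append("contains a write or DDL keyword")
    if re.search(r"(?i)select\s+\*", statement):
        flags.append("SELECT * may scan every column")
    if not re.search(r"(?i)\bwhere\b", statement):
        flags.append("no WHERE clause: possible full table scan")
    return flags
```

An empty list does not prove that the query is logically correct; it only means none of the cheap checks fired, so human approval remains the final gate.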

Review and Confirmation of Anomalies

Detected anomalies may be:

-- expected seasonal spikes

-- one-off migrations

-- discount application changes

Human domain expertise validates anomaly classification.

Override Recommendations

Optimization suggestions often require context (team ownership, business priority). Recommendations such as reserved instance proposals benefit from user adjustments.

Auditability & Accountability

HITL checkpoints provide:

-- a traceable approval workflow

-- compliance with FinOps governance

-- safer adoption in enterprises

Thus, HITL improves:

Proposed HITL UI Changes

For Streamlit

Add:

For FastAPI

Expose REST endpoints:

HITL Implementation with LangGraph's Interrupt and Checkpoint: LangGraph's interrupt and checkpointing features align well with the HITL design. They allow agent execution to pause at critical steps (such as SQL generation or anomaly detection), wait for human feedback, and then resume without rerunning earlier steps or losing state. An interrupt lets an agent or graph pause execution mid-flow, emit a state snapshot, return control to the UI or calling system, wait for external input, and resume later with the updated state.

Process Flow without HITL

LLM → SQL → data_fetch → insight

Process Flow with HITL

LLM → SQL → [interrupt] → WAIT FOR HUMAN

↓

resume → data_fetch → insight

Checkpointing stores execution state so that intermediate results, memory, the agent scratchpad, generated SQL, and extracted entities can be restored later. This is critical for FinOps workflows, where the human may take seconds or minutes to approve the SQL, may edit it manually, or may correct extracted columns or filters. Checkpointing ensures the system does not regenerate SQL, refetch from the database, or lose context.
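The pause, checkpoint, and resume cycle can be illustrated with a plain-Python sketch. It deliberately does not use the LangGraph API: the in-memory `CHECKPOINTS` store, the `start` and `resume` functions, and the stub agents are all assumptions made for illustration.

```python
import copy
from typing import Any, Dict, List, Optional

CHECKPOINTS: Dict[str, Dict[str, Any]] = {}  # in-memory store keyed by thread id
CALLS = {"generate_sql": 0}                  # shows that SQL is generated only once

def generate_sql(query: str) -> str:
    # Stand-in for the LLM call; the table and columns are made up.
    CALLS["generate_sql"] += 1
    return "SELECT service, SUM(cost) AS total FROM billing GROUP BY service"

def data_fetch(sql: str) -> List[Dict[str, Any]]:
    # Stand-in for query execution; returns fixed illustrative rows.
    return [{"service": "EC2", "total": 120.0}, {"service": "S3", "total": 30.0}]

def start(thread_id: str, query: str) -> Dict[str, Any]:
    """Run up to the review step, save a snapshot, and hand control back."""
    state = {"query": query, "sql": generate_sql(query), "status": "awaiting_approval"}
    CHECKPOINTS[thread_id] = copy.deepcopy(state)
    return state

def resume(thread_id: str, approved: bool,
           edited_sql: Optional[str] = None) -> Dict[str, Any]:
    """Restore the snapshot and continue with the human's decision."""
    state = copy.deepcopy(CHECKPOINTS[thread_id])
    if not approved:
        state["status"] = "rejected"
    else:
        if edited_sql is not None:
            state["sql"] = edited_sql  # the human's edit replaces the draft
        state["rows"] = data_fetch(state["sql"])
        state["insight"] = f"{len(state['rows'])} services returned"
        state["status"] = "done"
    CHECKPOINTS[thread_id] = copy.deepcopy(state)
    return state
```

A call to `start("thread-1", "cost by service")` returns the draft SQL for review; a later `resume("thread-1", approved=True)` continues from the stored snapshot without calling `generate_sql` again. A persistent store would be needed in practice so that snapshots survive process restarts.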

a) LLM-Driven Text2SQL Ambiguity

a) Minimum Hardware Allocation

| Component | Requirement |
| --- | --- |
| RAG/Vector Search | CPU + 8–16 GB RAM |
| LLM (OpenAI/Groq) | API access key |
| Insight processing | Python, Pandas |
| Visualization | Local or EC2; GPU not required |

b) Scaling Guidelines

EC2 t3.medium or c5.large recommended for 10K+ row FinOps datasets.

ECR-based deployments need persistent storage for vector DB.

c) Cost Considerations

LLM calls → metered

ECR/EC2/CloudWatch → billed

Cloud deployment steps and error-handling strategies are described in more detail in Module 3. [https://app.readytensor.ai/publications/finops-data-analysis-Q7CbzMYM5iwJ]

This work presents a functional and robust stateless multi-agent FinOps conversational system that integrates LLMs, LangGraph, text-based RAG, SQL generation, data insights, and visualization. The combination of agent specialization and knowledge grounding produces accurate and reliable FinOps responses suitable for operational usage.

Future Work

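The next phase will introduce a stateful FinOps assistant capable of remembering previous conversations, supporting iterative analysis, and carrying out long-term cost investigations.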

This will move the system from a single-turn analytical tool to a full conversational FinOps co-pilot.

#Acknowledgments

ReadyTensor for the foundational architecture framework.

LangChain and LangGraph for multi-agent orchestration capabilities

OpenAI for high-performance LLM inference

Streamlit for rapid UI development