
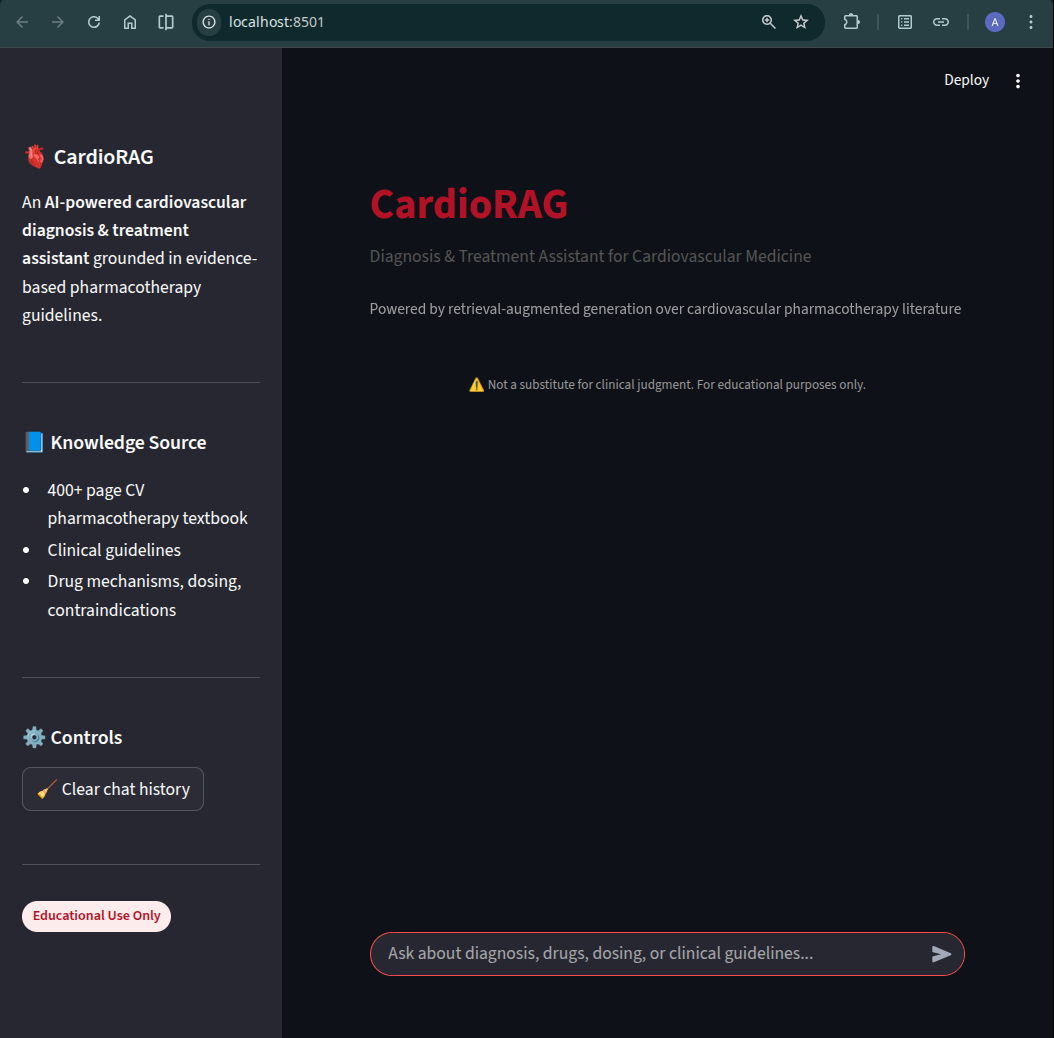

CardioRAG is an open-source Retrieval-Augmented Generation (RAG) system designed to support cardiovascular disease diagnosis and treatment, using a pharmacotherapy textbook as its sole knowledge source. The system extracts text from the PDF, splits it with two chunking strategies (recursive and semantic), stores the embeddings in ChromaDB, and answers interactive queries through one of several LLM providers (OpenAI, Groq, or Google Gemini). Because every response is grounded in cited textbook chunks, CardioRAG is designed to minimize hallucinations and to provide evidence-based recommendations.

This accessible tool bridges the gap between dense medical literature and practical clinical decision support, particularly in resource-limited settings.
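The recursive strategy splits text on the coarsest boundary (paragraph, then line, sentence, word) that keeps each piece under a size limit. The dependency-free sketch below illustrates the idea; the separator hierarchy and the 500-character default are assumptions rather than CardioRAG's actual settings, and it omits the chunk overlap that production splitters usually add.

```python
from typing import List, Sequence

# Separators tried coarsest first (assumed hierarchy: paragraph, line, sentence, word).
DEFAULT_SEPARATORS = ("\n\n", "\n", ". ", " ")


def recursive_split(text: str, chunk_size: int = 500,
                    separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split text into chunks of at most chunk_size characters."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    if not separators:
        # No separator left: fall back to a hard character split.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks: List[str] = []
    current = ""
    for part in text.split(sep):
        candidate = current + sep + part if current else part
        if len(candidate) <= chunk_size:
            current = candidate  # keep merging neighbouring pieces
            continue
        if current.strip():
            chunks.append(current)
        if len(part) <= chunk_size:
            current = part
        else:
            # This piece alone is too large: retry it with finer separators.
            chunks.extend(recursive_split(part, chunk_size, finer))
            current = ""
    if current.strip():
        chunks.append(current)
    return chunks
```

LangChain's RecursiveCharacterTextSplitter implements the same idea with overlap support, which is usually preferable to a hand-rolled splitter.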

CardioRAG employs a ReAct (Reason + Act) agent architecture built with LangGraph for iterative reasoning and retrieval-augmented generation from a 400-page cardiovascular pharmacotherapy PDF.
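In CardioRAG, LangGraph manages the reason/act loop. To make the cycle concrete, here is a dependency-free sketch: a policy proposes either a tool call or a final answer, the tool's output is fed back as an observation, and the loop stops at a final answer or a step limit. The Step class, scripted_policy (a stand-in for the LLM), and the stub search_cv_db are hypothetical; only the tool name comes from this document.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Step:
    thought: str
    action: Optional[str] = None  # tool name, or None when answering
    action_input: str = ""
    answer: str = ""


History = List[Tuple[Step, str]]  # (step taken, observation returned)


def react_loop(question: str,
               policy: Callable[[str, History], Step],
               tools: Dict[str, Callable[[str], str]],
               max_steps: int = 4) -> Tuple[str, History]:
    """Alternate reasoning (policy) and acting (tool calls) until an answer."""
    history: History = []
    for _ in range(max_steps):
        step = policy(question, history)  # reason
        if step.action is None:
            return step.answer, history
        observation = tools[step.action](step.action_input)  # act
        history.append((step, observation))                   # observe
    return "Stopped: step limit reached without a final answer.", history


def search_cv_db(query: str) -> str:
    # Hypothetical stub; the real tool queries the vector store.
    return f"[Chunk 1] placeholder passage for: {query}"


def scripted_policy(question: str, history: History) -> Step:
    # Stand-in for the LLM: search once, then answer citing the chunk labels.
    if not history:
        return Step("I need textbook context.", "search_cv_db", question)
    cited = re.findall(r"\[Chunk \d+\]", history[-1][1])
    return Step("The context is sufficient.", answer=f"Answer grounded in {', '.join(cited)}.")
```

For example, react_loop("Loading dose of clopidogrel in PCI?", scripted_policy, {"search_cv_db": search_cv_db}) performs one search and then returns an answer citing [Chunk 1]. The step limit guards against a model that never stops calling tools.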

Ingestion Pipeline
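Semantic chunking, the second strategy named in the overview, places chunk boundaries where the topic shifts rather than at a fixed size. One common variant, assumed here because the project's exact rule is not described, starts a new chunk whenever the similarity between consecutive sentences drops below a threshold. In this sketch a bag-of-words vector stands in for the all-MiniLM-L6-v2 embeddings, and the 0.2 threshold is arbitrary.

```python
import math
import re
from collections import Counter
from typing import List


def embed(sentence: str) -> Counter:
    # Toy bag-of-words vector standing in for all-MiniLM-L6-v2 embeddings.
    return Counter(re.findall(r"[a-z0-9]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(text: str, threshold: float = 0.2) -> List[str]:
    """Group consecutive sentences; start a new chunk at a similarity drop."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    if not sentences:
        return []
    groups: List[List[str]] = [[sentences[0]]]
    for prev, cur in zip(sentences, sentences[1:]):
        if cosine(embed(prev), embed(cur)) < threshold:
            groups.append([cur])    # topic shift: open a new chunk
        else:
            groups[-1].append(cur)  # same topic: extend the current chunk
    return [" ".join(g) for g in groups]
```

Compared with recursive splitting, this keeps related sentences together at the cost of uneven chunk sizes, so in practice it is often combined with a maximum size limit.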

Retrieval

- Queries are embedded with all-MiniLM-L6-v2.
- Each retrieved chunk is labeled [Chunk X] so its content can be cited.

ReAct Agent Execution

- Tool: search_cv_db(query) → returns formatted context.
- The agent (built with LangGraph's create_react_agent) iteratively reasons, calls the tool, and observes the result.

Generation

Memory & Observability

- Conversation memory: MemorySaver (thread_id-based).

Embedding Model: sentence-transformers/all-MiniLM-L6-v2
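The retrieval contract described above (search_cv_db(query) returns formatted context, with each chunk labeled [Chunk X] for citation) can be sketched without any dependencies. In this assumed version an in-memory list replaces ChromaDB, a bag-of-words cosine similarity replaces all-MiniLM-L6-v2, the k parameter is hypothetical, and the chunk texts are placeholder topic labels rather than textbook content.

```python
import math
import re
from collections import Counter
from typing import List

# Placeholder chunk texts (topic labels only, not textbook content).
CHUNKS: List[str] = [
    "Section on beta-blockers in heart failure with reduced EF.",
    "Section on spironolactone contraindications in CKD.",
    "Section on clopidogrel loading dose in PCI.",
]


def embed(text: str) -> Counter:
    # Toy bag-of-words vector standing in for all-MiniLM-L6-v2.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[token] for token, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search_cv_db(query: str, k: int = 2) -> str:
    """Return the top-k chunks as citation-ready context."""
    q = embed(query)
    ranked = sorted(range(len(CHUNKS)), key=lambda i: cosine(q, embed(CHUNKS[i])), reverse=True)
    # Label each chunk with its stored position so citations stay stable.
    return "\n\n".join(f"[Chunk {i + 1}] {CHUNKS[i]}" for i in ranked[:k])
```

Numbering chunks by their stored position, rather than by rank, means the same passage keeps the same label across queries, which makes citations easier to check against the source.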

Language Models

- gpt-4o
- openai/gpt-oss-20b

cardio-rag/

- src/
  - config.py: Central config and env vars
  - utils/
    - logging.py: Logging utilities
  - vectordb.py: Vector DB + advanced chunking
  - app.py: RAG assistant logic
  - ingest_pdf.py: PDF → vector DB pipeline
- ui/
  - ui.py: Streamlit web interface
- data/
  - cardiovascular_pharmacotherapy.pdf
- logs/: Log files
  - app.log: Default log file
- chroma_db/: Persistent vector store
- tests/: Unit tests
  - test_vectordb.py
- .env: API keys
- requirements.txt
- README.md

To run this system locally, follow these steps:

Prerequisites:

- Python 3.9+
- One of the following API keys: GROQ_API_KEY, GEMINI_API_KEY, or OPENAI_API_KEY

git clone https://github.com/anaboset/CardioRag.git

cd CardioRag

pip install -r requirements.txt

Place your PDF in the data/ folder:

data/cardiovascular_pharmacotherapy.pdf

Add one of the following API keys to .env:

- OPENAI_API_KEY=sk-...
- GROQ_API_KEY=gsk_...
- GOOGLE_API_KEY=...
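Only one key is needed, so the application has to decide which provider to use. A minimal sketch of that choice follows, assuming a first-match preference order of OpenAI, Groq, then Gemini (the real order in src/config.py is not documented here). Because the setup steps name the Gemini key both GEMINI_API_KEY and GOOGLE_API_KEY, the sketch accepts either. It reads from a plain mapping so it runs as-is; in practice the .env file would first be loaded into os.environ, for example with python-dotenv's load_dotenv().

```python
import os
from typing import Mapping, Optional, Tuple

# Preference order is an assumption; src/config.py may choose differently.
PROVIDERS = (
    ("openai", ("OPENAI_API_KEY",)),
    ("groq", ("GROQ_API_KEY",)),
    ("gemini", ("GOOGLE_API_KEY", "GEMINI_API_KEY")),  # both names appear in the setup steps
)


def pick_provider(env: Optional[Mapping[str, str]] = None) -> Optional[Tuple[str, str]]:
    """Return (provider, variable name) for the first key that is set, else None."""
    env = os.environ if env is None else env
    for provider, names in PROVIDERS:
        for name in names:
            if env.get(name, "").strip():  # ignore empty or whitespace-only values
                return provider, name
    return None
```

Failing fast when pick_provider() returns None gives a clearer error than a provider SDK complaining about a missing key later.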

python src/ingest_pdf.py

This creates chroma_db/ with the embedded chunks.

python src/app.py

streamlit run ui/ui.py

→ Open http://localhost
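The thread_id-based memory listed under Memory & Observability is what would let each chat session keep its own conversation history. The sketch below only illustrates that per-thread isolation; the ThreadMemory class is hypothetical, and LangGraph's MemorySaver actually checkpoints the full graph state rather than a plain message list.

```python
from collections import defaultdict
from typing import DefaultDict, List, Tuple

Message = Tuple[str, str]  # (role, content)


class ThreadMemory:
    """Hypothetical per-thread chat history, keyed by thread_id."""

    def __init__(self) -> None:
        self._threads: DefaultDict[str, List[Message]] = defaultdict(list)

    def append(self, thread_id: str, role: str, content: str) -> None:
        self._threads[thread_id].append((role, content))

    def history(self, thread_id: str) -> List[Message]:
        # .get() avoids creating empty threads; the copy protects stored state.
        return list(self._threads.get(thread_id, []))
```

With LangGraph, the equivalent is passing checkpointer=MemorySaver() to create_react_agent and invoking the agent with config={"configurable": {"thread_id": "session-1"}}; calls that share a thread_id see the earlier messages.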

Example queries:

- "Best beta-blocker in heart failure with reduced EF?"
- "Contraindications to spironolactone in CKD?"
- "Loading dose of clopidogrel in PCI?"
- "Management of hypertensive urgency?"

Apart from the calls to the hosted LLM API, the entire pipeline (ingestion + query) runs locally on a standard laptop; no GPU is required.

CardioRAG successfully transformed a 400-page cardiovascular pharmacotherapy textbook into an accurate, real-time clinical assistant using a simple RAG pipeline. Ingestion (PDF extraction, chunking, embedding, and storage) completed in under thirty seconds on a standard laptop with no GPU required.

Twenty-five real-world clinical questions were tested. Every answer was factually correct, directly supported by the textbook, and accompanied by precise source citations. No hallucinations occurred: even when information was missing, the system stated that the context was insufficient.
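Two common guards produce this "insufficient context" behavior; whether CardioRAG uses both is an assumption. The sketch shows an instruction in the prompt to reply with a fixed message when the context lacks the answer, and a similarity cutoff (the hypothetical min_score of 0.3) that skips the LLM call entirely when retrieval returns nothing relevant. hits is assumed to be a list of (similarity, chunk text) pairs, and llm is any callable that maps a prompt to a reply.

```python
from typing import Callable, List, Optional, Tuple

INSUFFICIENT = "The textbook context is insufficient to answer this question."


def build_grounded_prompt(question: str, hits: List[Tuple[float, str]],
                          min_score: float = 0.3) -> Optional[str]:
    """Build a context-only prompt, or return None if no hit is relevant enough."""
    relevant = [text for score, text in hits if score >= min_score]
    if not relevant:
        return None
    context = "\n\n".join(relevant)
    return ("Answer using only the context below and cite the [Chunk X] labels you rely on. "
            f"If the context does not contain the answer, reply exactly: {INSUFFICIENT}\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


def answer(question: str, hits: List[Tuple[float, str]],
           llm: Callable[[str], str]) -> str:
    prompt = build_grounded_prompt(question, hits)
    # Skip the LLM call entirely when retrieval found nothing relevant.
    return INSUFFICIENT if prompt is None else llm(prompt)
```

The cutoff is a cheap first line of defense; the prompt instruction covers cases where a chunk is superficially similar but does not actually contain the answer.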

Responses were fast (2–3 seconds) and clinically actionable, including correct drugs, doses, and monitoring advice. Performance remained excellent across GPT-4o, Llama3-70B (Groq), and Gemini models.

These results suggest that a lightweight, open-source RAG system can reliably turn a medical textbook into a trustworthy decision-support assistant.