AI Nexus Herald is a production-grade, AI-powered newsletter generation platform built using a multi-agent architecture. It automatically discovers trending AI topics from curated RSS feeds, performs deep research, and synthesizes content into a polished newsletter ready for delivery.

Built with LangGraph, FastAPI, and a modular backend, the system combines topic discovery, information extraction, and natural language generation, ensuring both accuracy and readability.

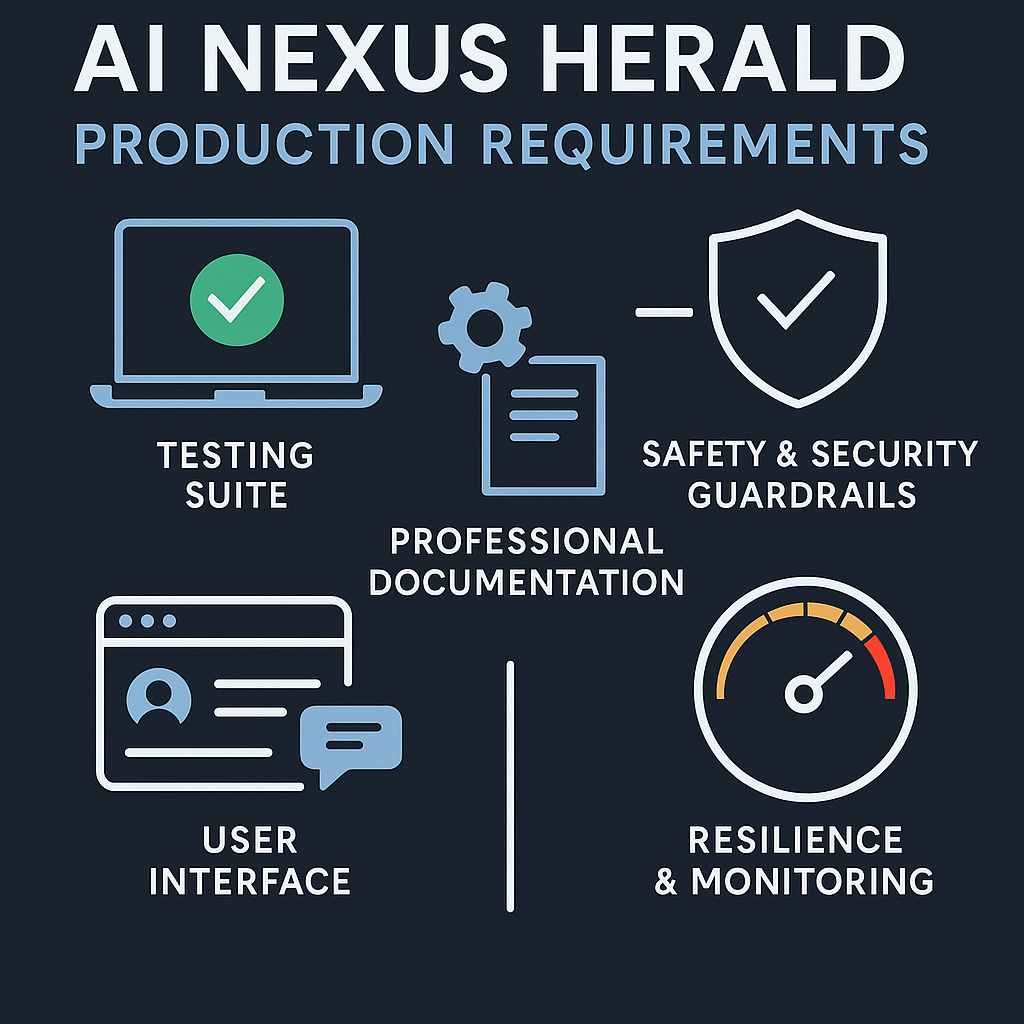

This publication documents the production system architecture, testing & security measures, and user-facing operational features.

The AI Nexus Herald is a fully autonomous, multi-agent AI system designed to discover, research, and publish AI-focused newsletters with minimal human intervention. Built using LangGraph for agent orchestration, FastAPI for the backend, and Streamlit for the frontend, the system operates in a modular workflow across three specialized agents:

Link to the original publication has been provided in the References section.

The system is enhanced with a real-time RAG (Retrieval-Augmented Generation) pipeline using semantic similarity to ensure factual accuracy and relevance, and it incorporates evaluation metrics via DeepEval to assess clarity, structure, faithfulness, and topic relevance.

This project demonstrates a production-ready, scalable AI content generation pipeline—combining automation, fact-checking, and high editorial quality—to deliver insightful newsletters on the rapidly evolving AI landscape.

To fully understand and replicate the implementation of AI Nexus Herald, the following tools, libraries, and knowledge are required. These are divided into must-have and optional prerequisites:

| Category | Requirement |
|---|---|
| Programming | Intermediate proficiency in Python |
| Frameworks | Familiarity with LangChain, LangGraph, FastAPI, and DeepEval |
| LLMs | Working knowledge of Large Language Models (LLMs) and API-based usage (e.g., OpenAI, Groq) |
| APIs | Understanding of RESTful API integration and authentication |
| Tools | Experience with virtual environments, package managers (pip, anaconda) |
| Frontend | Basic familiarity with Streamlit for building minimal, lightweight UI |
| YAML/JSON | Ability to read and write YAML and JSON for config and prompt files |

| Category | Benefit |
|---|---|
| Docker | Enables containerization and easier deployment across environments |
| LLM Prompt Engineering | Helps in refining agent prompts for deterministic outputs |
| LangChain Tools Development | Useful for custom tool integration |
| Deployment Platforms | Knowledge of Render, Vercel, or AWS for production deployment |

Extracts AI-related topics from curated RSS feeds using semantic analysis.

Retrieves relevant news articles, summarizes context, and validates sources.

Crafts a coherent, structured newsletter in a professional tone.

Prepares the final newsletter for publication or email distribution.

The data ingestion layer reads the RSS feed URLs listed in the rss_config.yaml file and extracts content from them. The URLs were selected to represent current AI-related news, and the configuration file may be updated periodically based on audience news preferences.
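As a rough illustration of the ingestion step, the sketch below pulls titles and links out of an RSS 2.0 document using only the standard library. The sample feed, the `extract_titles` function name, and the returned dictionary shape are assumptions made for this example; the project's actual tool and the layout of rss_config.yaml may differ.

```python
import xml.etree.ElementTree as ET

# Hypothetical sample feed. A real run would download the XML from each URL
# listed in rss_config.yaml instead of using an inline string.
SAMPLE_RSS = """<?xml version="1.0"?>
<rss version="2.0">
  <channel>
    <title>Example AI Feed</title>
    <item><title>New open-weight model released</title><link>https://example.com/a</link></item>
    <item><title>Agents in production</title><link>https://example.com/b</link></item>
  </channel>
</rss>"""


def extract_titles(rss_xml: str) -> list[dict]:
    """Return one {"title", "link"} dict per <item> in an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    entries = []
    for item in root.iter("item"):
        title = (item.findtext("title") or "").strip()
        link = (item.findtext("link") or "").strip()
        if title:  # skip items that carry no usable title
            entries.append({"title": title, "link": link})
    return entries


entries = extract_titles(SAMPLE_RSS)
```

Keeping the link next to each title makes it possible to trace a generated topic back to its source later in the pipeline.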

LangGraph orchestrates the complete workflow and handles state management, which keeps the overall process easy to follow.
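The idea of a workflow with managed state can be sketched in plain Python. This is not the LangGraph API and not the project's code: it only mimics the pattern in which each node receives the current state and returns an updated copy. The state fields and node names are illustrative assumptions.

```python
from typing import Callable, TypedDict


class NewsletterState(TypedDict, total=False):
    # Field names are illustrative, not the project's actual state schema.
    topics: list[str]
    news: list[dict]
    newsletter: str


Node = Callable[[NewsletterState], NewsletterState]


def find_topics(state: NewsletterState) -> NewsletterState:
    # Stand-in for the topic discovery agent.
    return {**state, "topics": ["AI agents", "Open models"]}


def research(state: NewsletterState) -> NewsletterState:
    # Stand-in for the research agent: one entry per topic.
    news = [{"topic": t, "news_articles": []} for t in state["topics"]]
    return {**state, "news": news}


def write(state: NewsletterState) -> NewsletterState:
    # Stand-in for the writer agent: one heading per researched topic.
    lines = [f"## {entry['topic']}" for entry in state["news"]]
    return {**state, "newsletter": "\n".join(lines)}


def run_pipeline(nodes: list[Node], state: NewsletterState) -> NewsletterState:
    """Run the nodes in order, threading the state through each one."""
    for node in nodes:
        state = node(state)
    return state


final_state = run_pipeline([find_topics, research, write], {})
```

A graph framework adds branching, retries, and tracing on top of this, but the contract between nodes stays the same: read the state, return the updated state.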

Prompts are built with prompt_builder.py for consistent responses.

RAG is a good fit for this scenario, where news has to be extracted from existing RSS feed content. I selected the text embedding model by nomic-ai because of its large context window, which is potentially useful when working with multiple news articles.
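To make the semantic similarity step concrete, here is a minimal retrieval sketch. It swaps the real embedding model for a toy word-count vector so that it runs with the standard library alone; only the cosine ranking logic carries over to a real embedding-based setup. All function names and sample documents are assumptions for illustration.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


docs = [
    "OpenAI releases a new reasoning model",
    "Stock markets close higher on Friday",
    "New reasoning model tops coding benchmarks",
]
top = retrieve("reasoning model", docs, k=2)
```

With a real embedding model, `embed` would return dense vectors and the ranking would capture meaning rather than shared words, but the retrieve-then-generate flow stays the same.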

I have used FastAPI for information retrieval, Groq API for LLMs and OpenAI API for evaluating the system.

The frontend of AI Nexus Herald is built with Streamlit. It displays a button to generate the newsletter and, once generation finishes, a preview of the final newsletter. This allows manual review before publishing, which keeps a human in the loop.

pip install -r requirements.txt

```
GROQ_API_KEY=...
OPENAI_API_KEY=...
LANGCHAIN_API_KEY=...
LANGCHAIN_TRACING_V2=true
LANGCHAIN_PROJECT="AI Nexus Herald Production"
```
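A startup check for these variables can catch a missing key before any agent runs. The sketch below is an assumption about how such a check might look, not code from the repository. It treats the two API keys as required and leaves the LangChain tracing variables out of the check.

```python
import os

# Treating only these two keys as mandatory is an assumption for this sketch;
# the LANGCHAIN_* tracing variables are left out of the check.
REQUIRED_VARS = ("GROQ_API_KEY", "OPENAI_API_KEY")


def missing_env_vars(env=None, required=REQUIRED_VARS) -> list[str]:
    """Return the names of required variables that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in required if not env.get(name, "").strip()]


# Example with an explicit mapping instead of the real environment.
missing = missing_env_vars({"GROQ_API_KEY": "sk-example"})
```

Calling `missing_env_vars()` with no arguments checks the real process environment, so the backend could refuse to start when the returned list is not empty.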

uvicorn src.backend.main:app --reload

streamlit run Home.py

I have made several essential improvements to the existing system to make it production-ready.

The evaluation and testing strategy for AI Nexus Herald includes both automated testing and LLM output evaluation.

Most importantly, I have used pytest-based testing through DeepEval. The testing strategy includes:

I have generated an RSS context dataset using the RSS feed URLs from the configuration file. This dataset is used during evaluation to compare the generated newsletter content with context using LLM-as-a-Judge.

The contextual dataset is in the outputs/dataset folder.

I created multiple generated datasets by running the application and saving its output. During evaluation, they are compared with the contextual dataset to calculate metrics such as relevancy, correctness, and faithfulness.

The generated datasets are present in the outputs/dataset folder.
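The test snippets in this publication call a `load_datasets(dataset_index)` helper whose body is not shown. The sketch below is one hypothetical way such a helper could read the two kinds of datasets from the outputs/dataset folder. Both file names are placeholders chosen for illustration, and the real helper may behave differently.

```python
import json
from pathlib import Path


def load_json_list(path: Path) -> list:
    """Return the JSON list stored at `path`, or [] if missing or malformed."""
    try:
        data = json.loads(path.read_text(encoding="utf-8"))
    except (FileNotFoundError, json.JSONDecodeError):
        return []
    return data if isinstance(data, list) else []


def load_datasets(dataset_index: str, base_dir: Path = Path("outputs/dataset")):
    """Load the RSS context dataset and one generated dataset."""
    # Both file names below are hypothetical placeholders.
    rss_data = load_json_list(base_dir / "rss_context.json")
    generated = load_json_list(base_dir / f"generated_{dataset_index}.json")
    return rss_data, generated
```

Returning empty lists instead of raising lets a test skip a dataset cleanly when its files are missing, which matches the `pytest.skip` pattern used in the snippets.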

The following security measures have been adopted to keep the system safe and secure in terms of both accessibility and output generation.

The AI Nexus Herald testing suite includes separate test scripts for the following:

Tool calling has been tested in individual agent test scripts.

Here are the test case snippets of various test scripts:

```python
# Rule-based test: Ensure datasets are not empty
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_basic_data_loading(dataset_index):
    rss_data, generated = load_datasets(dataset_index)
    if not rss_data or not generated:
        pytest.skip(f"Skipping dataset {dataset_index} due to empty data.")

    gold_titles = [item["title"] for item in rss_data]
    generated_topics = [news["topic"] for news in generated]

    result = {
        "dataset_index": dataset_index,
        "gold_titles_count": len(gold_titles),
        "generated_topics_count": len(generated_topics),
        "timestamp": datetime.utcnow().isoformat()
    }
    save_result(dataset_index, "basic_data_loading", result)

    assert len(gold_titles) > 0, "Gold titles dataset is empty."
    assert len(generated_topics) > 0, "Generated topics dataset is empty."


# Rule-based test: Ensure data structure is correct
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_data_structure(dataset_index):
    rss_data, generated = load_datasets(dataset_index)
    if not rss_data or not generated:
        pytest.skip(f"Skipping dataset {dataset_index} due to empty data.")

    save_result(dataset_index, "data_structure", {
        "dataset_index": dataset_index,
        "rss_count": len(rss_data),
        "rss_type": type(rss_data).__name__,
        "generated_count": len(generated),
        "generated_type": type(generated).__name__,
        "timestamp": datetime.utcnow().isoformat()
    })

    # Top-level checks
    assert isinstance(rss_data, list), "rss_data must be a list"
    assert isinstance(generated, list), "generated must be a list"

    # Element structure
    for idx, item in enumerate(rss_data):
        assert isinstance(item, dict), f"rss_data[{idx}] must be a dict"
        assert "title" in item, f"rss_data[{idx}] missing 'title' key"

    for idx, news in enumerate(generated):
        assert isinstance(news, dict), f"generated[{idx}] must be a dict"
        assert "topic" in news, f"generated[{idx}] missing 'topic' key"


# LLM-as-a-Judge test: Evaluate news topic relevance to RSS titles
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_news_topic_relevance(dataset_index):
    rss_data, generated = load_datasets(dataset_index)
    if not rss_data or not generated:
        pytest.skip(f"Skipping dataset {dataset_index} due to empty data.")

    gold_titles = [item["title"] for item in rss_data]
    topics = [news["topic"] for news in generated]

    relevance_metric = GEval(
        name="Topic Relevance",
        criteria="Determine if the generated news topics are relevant to the titles of the RSS feeds.",
        evaluation_params=[
            LLMTestCaseParams.ACTUAL_OUTPUT,
            LLMTestCaseParams.RETRIEVAL_CONTEXT
        ],
        threshold=0.7
    )

    test_case = LLMTestCase(
        name=f"topic_relevance_test_{dataset_index}",
        input="Select the top 5 trending news topics based on the RSS feed titles.",
        actual_output="\n".join(topics),
        retrieval_context=gold_titles
    )

    # Capture DeepEval table output
    buffer = io.StringIO()
    sys_stdout = sys.stdout
    sys.stdout = buffer
    try:
        results = evaluate([test_case], [relevance_metric])
    finally:
        sys.stdout = sys_stdout

    table_output = buffer.getvalue()
    test_results = results.test_results
    passed = all(r.success for r in test_results)

    # Enhanced save with structured data
    result_data = {
        "dataset_index": dataset_index,
        "passed": passed,
        "timestamp": datetime.utcnow().isoformat(),
        "deepeval_table_output": table_output.strip(),
        "structured_results": [
            {
                "test_name": tr.name,
                "success": tr.success,
                "metrics": [
                    {
                        "name": md.name,
                        "score": md.score,
                        "threshold": md.threshold,
                        "success": md.success,
                        "reason": md.reason,
                        "cost": md.evaluation_cost
                    }
                    for md in tr.metrics_data
                ]
            }
            for tr in test_results
        ]
    }
    save_result(dataset_index, "news_topic_relevance", result_data)

    # Print summary
    for test_result in results.test_results:
        for metric_data in test_result.metrics_data:
            status = "PASSED" if metric_data.success else "FAILED"
            print(f"\nSUMMARY: {metric_data.name} - {status}")
            print(f"Score: {metric_data.score:.4f} (Threshold: {metric_data.threshold})")
            print(f"Reason: {metric_data.reason[:100]}...")

    # Fail pytest if needed
    assert passed, f"Topic relevance test failed for dataset {dataset_index}"
```
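The snippets capture DeepEval's printed table by swapping `sys.stdout` by hand. The standard library's `contextlib.redirect_stdout` offers the same effect with less bookkeeping. The sketch below shows the pattern on a stand-in function; `capture_stdout` and `noisy_add` are names invented for this example and are not part of the project or of DeepEval.

```python
import io
from contextlib import redirect_stdout


def capture_stdout(func, *args, **kwargs):
    """Call func and return (its result, everything it printed)."""
    buffer = io.StringIO()
    # redirect_stdout restores sys.stdout on exit, even if func raises.
    with redirect_stdout(buffer):
        result = func(*args, **kwargs)
    return result, buffer.getvalue()


def noisy_add(a, b):
    # Stand-in for a call that prints a report, such as an evaluation run.
    print("adding", a, b)
    return a + b


value, printed = capture_stdout(noisy_add, 2, 3)
```

In a test, the evaluation call would take the place of `noisy_add`, and `printed` would hold the table text that the snippets store under `deepeval_table_output`.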

```python
# Rule-based test: Check if the data structure of generated news matches the expected format
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_data_structure_news(dataset_index):
    _, generated = load_datasets(dataset_index)
    if not generated:
        pytest.skip(f"Skipping dataset {dataset_index} due to empty data.")

    passed = True
    for idx, item in enumerate(generated):
        if not all(key in item for key in ["topic", "news_articles"]):
            passed = False
            break
        if not isinstance(item["news_articles"], list):
            passed = False
            break
        for news in item["news_articles"]:
            if not all(k in news for k in ["title", "link", "summary", "content"]):
                passed = False
                break

    save_result(dataset_index, "data_structure_news", {
        "dataset_index": dataset_index,
        "generated_count": len(generated),
        "passed": passed,
        "timestamp": datetime.utcnow().isoformat()
    })

    assert passed, f"Data structure test failed for dataset {dataset_index}"


# LLM-as-a-Judge: Evaluate if the generated news articles are relevant to their assigned topic
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_news_relevance_to_topic(dataset_index):
    _, generated = load_datasets(dataset_index)
    if not generated:
        pytest.skip(f"Skipping dataset {dataset_index} due to empty data.")

    relevance_metric = GEval(
        name="News Relevance to Topic",
        criteria="Determine if the news articles are semantically relevant to their assigned topic.",
        evaluation_params=[LLMTestCaseParams.INPUT, LLMTestCaseParams.ACTUAL_OUTPUT],
        threshold=0.7
    )

    structured_results = []
    all_passed = True

    for i, item in enumerate(generated):
        topic = item["topic"]
        news_articles = item.get("news_articles", [])
        for j, news in enumerate(news_articles):
            test_case = LLMTestCase(
                name=f"news_relevance_topic_{i}_{j}",
                input=topic,
                actual_output=f"{news['title']}\n{news.get('summary', '')}\n{news.get('content', '')}"
            )

            buffer = io.StringIO()
            sys_stdout = sys.stdout
            sys.stdout = buffer
            try:
                results = evaluate([test_case], [relevance_metric])
            finally:
                sys.stdout = sys_stdout

            table_output = buffer.getvalue()
            test_results = results.test_results
            for tr in test_results:
                structured_results.append({
                    "test_name": tr.name,
                    "success": tr.success,
                    "metrics": [
                        {
                            "name": md.name,
                            "score": md.score,
                            "threshold": md.threshold,
                            "success": md.success,
                            "reason": md.reason,
                            "cost": md.evaluation_cost
                        }
                        for md in tr.metrics_data
                    ]
                })
                if not tr.success:
                    all_passed = False

    save_result(dataset_index, "news_relevance_to_topic", {
        "dataset_index": dataset_index,
        "passed": all_passed,
        "timestamp": datetime.utcnow().isoformat(),
        "structured_results": structured_results
    })

    assert all_passed, f"News relevance to topic failed for dataset {dataset_index}"
```

```python
# Rule-based test: Check presence of newsletter and generated news
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_newsletter_and_generated_news_presence(dataset_index):
    generated = load_generated_news(dataset_index)
    newsletter = load_newsletter(dataset_index)

    result = {
        "dataset_index": dataset_index,
        "passed": bool(generated and isinstance(generated, list) and newsletter.strip()),
        "generated_news_count": len(generated) if generated else 0,
        "newsletter_length": len(newsletter.strip()) if newsletter else 0,
        "timestamp": datetime.utcnow().isoformat()
    }
    save_result(dataset_index, "newsletter_and_generated_news_presence", result)

    assert generated and isinstance(generated, list), f"Generated news dataset {dataset_index} is missing or invalid."
    assert newsletter.strip(), f"Newsletter content for dataset {dataset_index} is empty."


# LLM-as-a-Judge: Evaluate newsletter relevance to news articles
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_newsletter_relevance_to_news(dataset_index):
    generated = load_generated_news(dataset_index)
    newsletter = load_newsletter(dataset_index)

    all_news_texts = []
    for item in generated:
        for news in item.get("news_articles", []):
            text = f"{news['title']}\n{news.get('link', '')}\n{news.get('summary', '')}\n{news.get('content', '')}"
            all_news_texts.append(text)

    relevance_metric = GEval(
        name="Newsletter Relevance",
        criteria="Determine whether the newsletter reflects the main ideas and topics of the extracted news articles.",
        evaluation_params=[LLMTestCaseParams.ACTUAL_OUTPUT, LLMTestCaseParams.RETRIEVAL_CONTEXT],
        threshold=0.5
    )

    test_case = LLMTestCase(
        name=f"newsletter_relevance_test_{dataset_index}",
        input="Generated newsletter based on curated AI news.",
        actual_output=newsletter,
        retrieval_context=all_news_texts
    )

    buffer = io.StringIO()
    sys_stdout = sys.stdout
    sys.stdout = buffer
    try:
        results = evaluate([test_case], [relevance_metric])
    finally:
        sys.stdout = sys_stdout

    table_output = buffer.getvalue()
    test_results = results.test_results
    passed = all(r.success for r in test_results)

    result_data = {
        "dataset_index": dataset_index,
        "passed": passed,
        "timestamp": datetime.utcnow().isoformat(),
        "deepeval_table_output": table_output.strip(),
        "structured_results": [
            {
                "test_name": tr.name,
                "success": tr.success,
                "metrics": [
                    {
                        "name": md.name,
                        "score": md.score,
                        "threshold": md.threshold,
                        "success": md.success,
                        "reason": md.reason,
                        "cost": md.evaluation_cost
                    }
                    for md in tr.metrics_data
                ]
            }
            for tr in test_results
        ]
    }
    save_result(dataset_index, "newsletter_relevance_to_news", result_data)

    assert passed, f"Newsletter relevance test failed for dataset {dataset_index}"
```

```python
# LLM-as-a-judge: Check if the tool is called correctly
def test_tool_call_correctness():
    """Check if extract_titles_from_rss tool is invoked."""
    groq_api_key = os.getenv("GROQ_API_KEY")
    assert groq_api_key, "GROQ_API_KEY must be set."

    agent = TopicFinder(groq_api_key)
    graph = agent.build_topic_finder_graph()
    initial = get_initial_state()
    final = graph.invoke(initial, config={"recursion_limit": 100})

    test_case = LLMTestCase(
        name="tool_invocation",
        input=initial.messages[0].content,
        actual_output="",
        tools_called=[ToolCall(name="extract_titles_from_rss")],
        expected_tools=[ToolCall(name="extract_titles_from_rss")]
    )
    metric = ToolCorrectnessMetric()

    buffer = io.StringIO()
    sys_stdout = sys.stdout
    sys.stdout = buffer
    try:
        results = evaluate([test_case], [metric])
    finally:
        sys.stdout = sys_stdout

    passed = all(r.success for r in results.test_results)
    save_result("tool_call_correctness", {
        "timestamp": datetime.utcnow().isoformat(),
        "passed": passed,
        "deepeval_table_output": buffer.getvalue().strip()
    })

    assert passed, "Tool call correctness test failed."


# Rule based test: Ensure 5 non-empty topics are returned as a list of strings
def test_topics_structure_and_count():
    """Ensure exactly 5 non-empty topic strings are returned."""
    groq_api_key = os.getenv("GROQ_API_KEY")
    assert groq_api_key

    agent = TopicFinder(groq_api_key)
    graph = agent.build_topic_finder_graph()
    final = graph.invoke(get_initial_state(), config={"recursion_limit": 100})
    topics = final.get("topics", [])

    passed = (
        isinstance(topics, list)
        and len(topics) == 5
        and all(isinstance(t, str) and t.strip() for t in topics)
    )
    save_result("topics_structure_and_count", {
        "timestamp": datetime.utcnow().isoformat(),
        "topic_count": len(topics),
        "passed": passed
    })

    assert passed, f"Expected 5 valid topics, got {len(topics)}"


# LLM-as-a-judge: Check if each topic relates to RSS titles
def test_topic_relevancy():
    """Use LLM to check if each topic relates to RSS titles."""
    titles = load_rss_titles()
    groq_api_key = os.getenv("GROQ_API_KEY")

    agent = TopicFinder(groq_api_key)
    graph = agent.build_topic_finder_graph()
    final = graph.invoke(get_initial_state(), config={"recursion_limit": 100})
    topics = final.get("topics", [])

    metric = AnswerRelevancyMetric(threshold=0.5)
    test_cases = [
        LLMTestCase(
            input="Evaluate the relevance of this topic to the given RSS titles.",
            actual_output=topic,
            retrieval_context=titles
        )
        for topic in topics
    ]

    buffer = io.StringIO()
    sys_stdout = sys.stdout
    sys.stdout = buffer
    try:
        results = evaluate(test_cases, [metric])
    finally:
        sys.stdout = sys_stdout

    passed = all(r.success for r in results.test_results)

    structured_metrics = []
    for tr in results.test_results:
        for md in tr.metrics_data:
            structured_metrics.append({
                "name": md.name,
                "score": md.score,
                "threshold": md.threshold,
                "success": md.success,
                "reason": md.reason,
                "cost": md.evaluation_cost
            })

    save_result("topic_relevancy", {
        "timestamp": datetime.utcnow().isoformat(),
        "passed": passed,
        "deepeval_table_output": buffer.getvalue().strip(),
        "metrics": structured_metrics
    })

    assert passed, "One or more topics were not relevant to RSS titles."
```

```python
# LLM-as-a-judge: Check if the agent calls the tool correctly
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_tool_call_correctness(dataset_index):
    groq_api_key = os.getenv("GROQ_API_KEY")
    assert groq_api_key, "GROQ_API_KEY must be set."

    topics = load_datasets(dataset_index)
    passed_all = True

    for topic in topics:
        agent = DeepResearcher(groq_api_key)
        graph = agent.build_deep_researcher_graph()
        initial = get_initial_state(topic)
        _ = graph.invoke(initial, config={"recursion_limit": 100})

        case = LLMTestCase(
            name=f"tool_usage_for_{topic}",
            input=initial.messages[0].content,
            actual_output="",
            tools_called=[ToolCall(name="extract_news_from_rss")],
            expected_tools=[ToolCall(name="extract_news_from_rss")]
        )
        metric = ToolCorrectnessMetric()
        result = evaluate([case], [metric])
        if not all(r.success for r in result.test_results):
            passed_all = False

    save_result(dataset_index, "tool_call_correctness", {
        "dataset_index": dataset_index,
        "passed": passed_all,
        "timestamp": datetime.utcnow().isoformat()
    })

    assert passed_all, f"Tool call correctness failed for dataset {dataset_index}"


# Rule-based test: Check if news articles are present and have a specific structure (Title, Link, Summary, Content)
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_news_output_format_and_presence(dataset_index):
    groq_api_key = os.getenv("GROQ_API_KEY")
    assert groq_api_key

    topics = load_datasets(dataset_index)
    passed_all = True

    for topic in topics:
        agent = DeepResearcher(groq_api_key)
        graph = agent.build_deep_researcher_graph()
        final = graph.invoke(get_initial_state(topic), config={"recursion_limit": 100})
        arts = final.get("news_articles", [])

        if not (isinstance(arts, list) and len(arts) >= 1):
            passed_all = False
            continue

        for art in arts:
            if not (isinstance(art, dict) and all(k in art and isinstance(art[k], str) for k in ("title", "link", "summary", "content"))):
                passed_all = False

    save_result(dataset_index, "news_output_format", {
        "dataset_index": dataset_index,
        "passed": passed_all,
        "timestamp": datetime.utcnow().isoformat()
    })

    assert passed_all, f"News output format or presence failed for dataset {dataset_index}"


# LLM-as-a-judge: Check if each news article is relevant to the topic
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_news_relevancy_to_topic(dataset_index):
    groq_api_key = os.getenv("GROQ_API_KEY")
    assert groq_api_key

    topics = load_datasets(dataset_index)
    passed_all = True
    relevancy_metric = AnswerRelevancyMetric(threshold=0.5)

    for topic in topics:
        agent = DeepResearcher(groq_api_key)
        graph = agent.build_deep_researcher_graph()
        final = graph.invoke(get_initial_state(topic), config={"recursion_limit": 100})

        for art in final.get("news_articles", []):
            content = f"{art['title']}\n{art['link']}\n{art['summary']}\n{art.get('content','')}"
            case = LLMTestCase(
                name=f"rel_news_{topic}",
                input=f"Assess if this news is relevant to topic: {topic}",
                actual_output=content,
                retrieval_context=[topic]
            )
            result = evaluate([case], [relevancy_metric])
            if not all(r.success for r in result.test_results):
                passed_all = False

    save_result(dataset_index, "news_relevancy", {
        "dataset_index": dataset_index,
        "passed": passed_all,
        "timestamp": datetime.utcnow().isoformat()
    })

    assert passed_all, f"News relevancy failed for dataset {dataset_index}"
```

```python
# Rule-based test: Check if the newsletter writer agent generates a non-empty markdown
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_generation_and_structure(dataset_index):
    """Test newsletter generation produces non-empty markdown."""
    groq_api = os.getenv("GROQ_API_KEY")
    assert groq_api, "GROQ_API_KEY not set."

    news_list = load_generated_news(dataset_index)
    writer = NewsletterWriter(groq_api)
    graph = writer.build_newsletter_writer_graph()
    state = compose_initial_state(news_list)
    result = graph.invoke(state, config={"recursion_limit": 100})
    newsletter = result.get("newsletter", "")

    passed = isinstance(newsletter, str) and bool(newsletter.strip())
    save_result(dataset_index, "generation_and_structure", {
        "dataset_index": dataset_index,
        "newsletter_length": len(newsletter),
        "passed": passed,
        "timestamp": datetime.utcnow().isoformat()
    })

    assert passed, "Newsletter markdown is empty or not a string."


# LLM-based test: Evaluate newsletter relevancy and faithfulness to articles
@pytest.mark.parametrize("dataset_index", ["0", "1", "2"])
def test_newsletter_relevancy_and_faithfulness(dataset_index):
    """Evaluate that newsletter is relevant and faithful to articles."""
    groq_api = os.getenv("GROQ_API_KEY")
    assert groq_api

    news_list = load_generated_news(dataset_index)
    compiled_context = []
    for entry in news_list:
        for art in entry.get("news_articles", []):
            if isinstance(art, str):
                compiled_context.append(art)
            elif isinstance(art, dict):
                compiled_context.append(
                    f"{art.get('title','')}\n{art.get('summary','')}\n{art.get('content','')}"
                )

    state = compose_initial_state(news_list)
    writer = NewsletterWriter(groq_api)
    graph = writer.build_newsletter_writer_graph()
    result = graph.invoke(state, config={"recursion_limit": 100})
    newsletter_text = result.get("newsletter", "")

    rel_metric = AnswerRelevancyMetric(threshold=0.5)
    faith_metric = FaithfulnessMetric(threshold=0.5)

    test_case = LLMTestCase(
        name=f"newsletter_eval_{dataset_index}",
        input="Evaluate if the newsletter reflects the extracted news correctly.",
        actual_output=newsletter_text,
        retrieval_context=compiled_context
    )

    buffer = io.StringIO()
    sys_stdout = sys.stdout
    sys.stdout = buffer
    try:
        results = evaluate([test_case], [rel_metric, faith_metric])
    finally:
        sys.stdout = sys_stdout

    table_output = buffer.getvalue()
    passed = all(r.success for r in results.test_results)

    result_data = {
        "dataset_index": dataset_index,
        "passed": passed,
        "timestamp": datetime.utcnow().isoformat(),
        "deepeval_table_output": table_output.strip(),
        "structured_results": [
            {
                "test_name": tr.name,
                "success": tr.success,
                "metrics": [
                    {
                        "name": md.name,
                        "score": md.score,
                        "threshold": md.threshold,
                        "success": md.success,
                        "reason": md.reason,
                        "cost": md.evaluation_cost
                    }
                    for md in tr.metrics_data
                ]
            }
            for tr in results.test_results
        ]
    }
    save_result(dataset_index, "newsletter_relevancy_and_faithfulness", result_data)

    assert passed, f"Newsletter relevancy/faithfulness failed for dataset {dataset_index}"
```

```python
# Rule-based test: Check for task completion
def test_full_workflow_outputs():
    """Run full orchestrator and validate structure."""
    groq_api = os.getenv("GROQ_API_KEY")
    assert groq_api, "GROQ_API_KEY must be set."

    orchestrator = Orchestrator(groq_api)
    graph = orchestrator.build_orchestrator_graph()
    final_state = graph.invoke(OrchestratorState(), config={"recursion_limit": 200})

    news = final_state["news"]
    newsletter = final_state["newsletter"]

    passed = (
        isinstance(news, list) and news
        and isinstance(newsletter, str) and newsletter.strip()
    )
    save_result("full_workflow_outputs", {
        "timestamp": datetime.utcnow().isoformat(),
        "passed": passed,
        "news_count": len(news) if isinstance(news, list) else 0,
        "newsletter_length": len(newsletter) if isinstance(newsletter, str) else 0
    })

    assert passed, "Orchestrator failed to produce valid outputs."


# LLM-as-a-Judge: Test semantic consistency between topics, articles, and newsletter
def test_orchestrator_semantic_consistency():
    """Check semantic consistency between topics, articles, and newsletter."""
    groq_api = os.getenv("GROQ_API_KEY")
    assert groq_api

    orchestrator = Orchestrator(groq_api)
    graph = orchestrator.build_orchestrator_graph()
    final = graph.invoke(OrchestratorState(), config={"recursion_limit": 200})

    news = final["news"]
    newsletter = final["newsletter"]

    topic_metric = AnswerRelevancyMetric(threshold=0.5)
    rel_metric = AnswerRelevancyMetric(threshold=0.5)
    faith_metric = FaithfulnessMetric(threshold=0.5)

    all_metrics_results = []

    # Articles vs Topic
    for entry in news:
        topic = entry.topic
        for art in entry.news_articles:
            content = f"{art.title}\n{art.summary}\n{art.content}"
            case = LLMTestCase(
                name=f"article_relevancy_for_{topic}",
                input=f"Check relevance of news to topic: {topic}",
                actual_output=content,
                retrieval_context=[topic]
            )

            buffer = io.StringIO()
            sys_stdout = sys.stdout
            sys.stdout = buffer
            try:
                results = evaluate([case], [topic_metric])
            finally:
                sys.stdout = sys_stdout

            for tr in results.test_results:
                for md in tr.metrics_data:
                    all_metrics_results.append({
                        "name": md.name,
                        "score": md.score,
                        "threshold": md.threshold,
                        "success": md.success,
                        "reason": md.reason,
                        "cost": md.evaluation_cost
                    })

    # Newsletter vs Aggregated News
    aggregated_text = []
    for entry in news:
        for art in entry.news_articles:
            aggregated_text.append(f"{art.title}\n{art.summary}\n{art.content}")

    case = LLMTestCase(
        name="newsletter_end_to_end_relevancy",
        input="Check newsletter alignment with all generated news",
        actual_output=newsletter,
        retrieval_context=aggregated_text
    )

    buffer = io.StringIO()
    sys_stdout = sys.stdout
    sys.stdout = buffer
    try:
        results = evaluate([case], [rel_metric, faith_metric])
    finally:
        sys.stdout = sys_stdout

    for tr in results.test_results:
        for md in tr.metrics_data:
            all_metrics_results.append({
                "name": md.name,
                "score": md.score,
                "threshold": md.threshold,
                "success": md.success,
                "reason": md.reason,
                "cost": md.evaluation_cost
            })

    passed = all(m["success"] for m in all_metrics_results)
    save_result("semantic_consistency", {
        "timestamp": datetime.utcnow().isoformat(),
        "passed": passed,
        "metrics": all_metrics_results
    })

    assert passed, "Semantic consistency test failed."
```

Following are the results of various tests conducted through DeepEval. I have summarized the results of each testing script in markdown files for easy understanding and accessibility.
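Turning saved results into a markdown summary can be done with a small formatter. The sketch below is an assumption about how such a summary might be produced, not the project's reporting script. The `to_markdown_table` name and the sample rows are illustrative only and are not real test results.

```python
def to_markdown_table(rows: list[dict], columns: list[str]) -> str:
    """Render a list of result dicts as a markdown table."""
    header = "| " + " | ".join(columns) + " |"
    divider = "|" + "|".join("---" for _ in columns) + "|"
    body = [
        "| " + " | ".join(str(row.get(col, "")) for col in columns) + " |"
        for row in rows
    ]
    return "\n".join([header, divider, *body])


# Illustrative rows only, not actual evaluation output.
sample_rows = [
    {"Dataset": 0, "Generated Count": 5, "Passed": True},
    {"Dataset": 1, "Generated Count": 5, "Passed": False},
]
for row in sample_rows:
    # Replace booleans with the status symbols used in the summaries.
    row["Passed"] = "✅" if row["Passed"] else "❌"

table = to_markdown_table(sample_rows, ["Dataset", "Generated Count", "Passed"])
```

Writing `table` to a `.md` file per test script would give one readable summary per script.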

Generated on: 2025-08-10T11:42

| Dataset | Gold Titles | Generated Topics | Status |
|---|---|---|---|
| 0 | 115 | 5 | ✅ |
| 1 | 115 | 5 | ✅ |
| 2 | 115 | 5 | ✅ |

| Dataset | RSS Type | RSS Count | Generated Type | Generated Count |
|---|---|---|---|---|
| 0 | list | 115 | list | 5 |
| 1 | list | 115 | list | 5 |
| 2 | list | 115 | list | 5 |

Test: topic_relevance_test_0

Topic Relevance [GEval] ✅

Test: topic_relevance_test_1

Topic Relevance [GEval] ❌

Test: topic_relevance_test_2

Topic Relevance [GEval] ✅

🎉 Overall Status: EXCELLENT

Generated on: 2025-08-10T12:10

| Dataset | Generated Count | Passed |
|---|---|---|
| 0 | 5 | ✅ |
| 1 | 5 | ✅ |
| 2 | 5 | ✅ |

Test: news_relevance_topic_0_0

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

Test: news_relevance_topic_0_0

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

Test: news_relevance_topic_0_0

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

News Relevance to Topic [GEval] (✅)

🎉 Overall Status: EXCELLENT

Generated on: 2025-08-10T13:49

Test: newsletter_relevance_test_0

Newsletter Relevance [GEval] ✅

Test: newsletter_relevance_test_1

Newsletter Relevance [GEval] ✅

Test: newsletter_relevance_test_2

Newsletter Relevance [GEval] ✅

🎉 Overall Status: EXCELLENT

Generated on: 2025-08-10T13:02

| Timestamp | Passed |
|---|---|
| 2025-08-10T12:51.263972 | ✅ |

| Timestamp | Topic Count | Passed |
|---|---|---|
| 2025-08-10T12:51.750282 | 5 | ✅ |

Generated on: 2025-08-10T15:53

🎉 Overall Status: EXCELLENT

Generated on: 2025-08-10T16:21

Test: newsletter_eval_0

Test: newsletter_eval_1

Test: newsletter_eval_2

🎉 Overall Status: EXCELLENT

Generated on: 2025-08-10T16:37

Here is a demonstration of AI Nexus Herald in both local and production environments. Because of the limited memory in the Render free tier, the production backend does not work, but the frontend works fine on Streamlit Cloud.

The localhost demo works as expected. It shows the complete newsletter generation process along with console logs and LangSmith tracing. It also includes a human-in-the-loop check in which a link from the generated newsletter is clicked and validated.

The AI Nexus Herald implements a multi-agent orchestration framework that integrates real-time news ingestion, ensures cross-agent context consistency, and applies domain-focused evaluation using DeepEval metrics tailored for AI-related content. Even so, the system has several limitations.

Addressing these limitations would improve the system and move it toward a general-purpose newsletter generation system.

The AI industry is experiencing rapid growth, with advancements in generative AI, automation, and multi-agent systems reshaping how information is created and consumed. Recent market reports highlight a surge in AI adoption across sectors, and AI-related news volume continues to grow at an unprecedented pace. This expanding landscape presents both opportunities and challenges for the newsletter generation system, enabling timely, high-value content delivery but also demanding scalability, adaptability, and coverage of an ever-broader range of topics to stay relevant and competitive.

AI Nexus Herald is released under the MIT License, allowing free use, modification, and distribution of the software with proper attribution. Users are encouraged to build upon and adapt the system for their own applications.

If you encounter bugs, unexpected behavior, or have suggestions:

While the current system achieves a high degree of automation, reliability, and contextual accuracy, several avenues exist for further system enhancement:

Cross-Language and Cross-Cultural Expansion – Extend the AI Nexus Herald to multilingual pipelines to monitor global AI trends and broaden contextual diversity in topic discovery.

Enhanced Real-Time Knowledge Integration – Incorporate additional high-frequency data sources such as social media trend streams, real-time web search, and research preprint servers to improve coverage of emerging topics.

User-Driven Personalization – Implement fine-grained user preference learning for topic selection, enabling tailored newsletter editions for different AI subdomains or interest profiles.

Advanced Fact-Verification Agents – Introduce a specialized verification agent that cross-references generated content with trusted sources to improve factual reliability.

Deployment on Cloud – Because of memory limits in the free tiers of deployment platforms such as Render and Vercel, deploy the application on a cloud server (AWS, GCP, Azure, etc.) or on an upgraded plan of these services.

These future directions not only aim to refine the AI Nexus Herald pipeline but also open up new questions regarding multi-agent orchestration efficiency, contextual reasoning at scale, and domain-aware evaluation methodologies.

Technical documentation of the project is available in the docs folder of the GitHub repository.

There are three main documents generated for AI Nexus Herald:

During the development and deployment of AI Nexus Herald in production, I have learnt many things.

The AI Nexus Herald demonstrates how a modular multi-agent AI system can be taken from prototype to a fully operational production service. By combining RSS-based discovery, real-time retrieval augmented generation using semantic similarity, and agent-level testing, it achieves both automation and content reliability.

The architecture supports scalability, security, and continuous improvement, making it a reference point for deploying AI-driven editorial systems at scale.