
By now, you know what this program is about — mastering the full lifecycle of large language models.

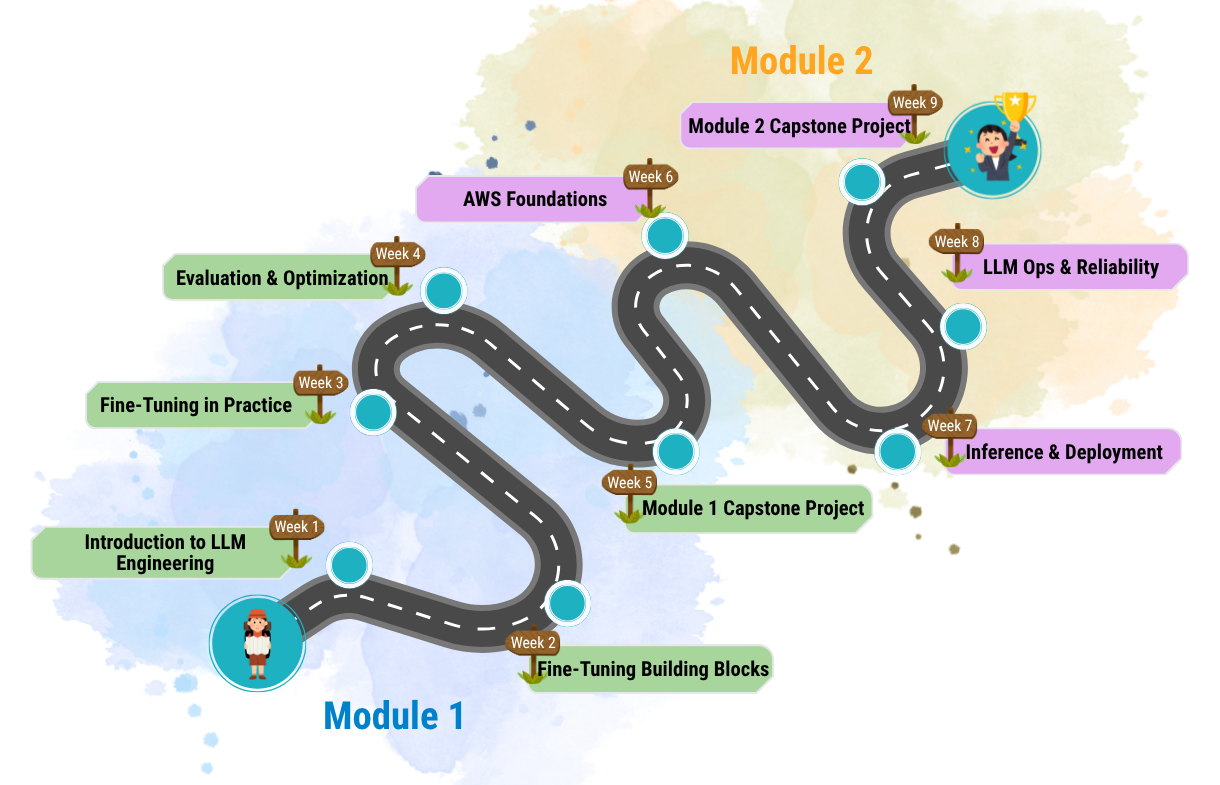

This lesson shows you how it all fits together: your 9-week roadmap from foundation to deployment, with two modules and two portfolio-grade projects leading to certification.

The video walks through each module, explains the weekly flow, and shows how the capstone projects connect to your certification goals.

The program is divided into two modules — the first focuses on fine-tuning large language models, and the second on deployment and production-grade engineering.

In Module 1, you’ll learn to adapt open-source foundation models for specific domains using Hugging Face, DeepSpeed, and Axolotl.

This module covers dataset preparation, parameter-efficient training, evaluation, and optimization.

By the end, you’ll deliver a fine-tuned model along with an evaluation report and quantized weights.

In Module 2, you’ll learn how to deploy, monitor, and maintain models using tools such as SageMaker, Bedrock, and vLLM.

This module focuses on inference, scalability, cost-performance tradeoffs, and production best practices.

Each module concludes with a capstone project that contributes to your certification.

Here is the detailed schedule for the program:

| Week | Focus Area | Key Themes |
|---|---|---|
| 1 | Introduction to LLM Engineering | Model types, use cases, and fine-tuning motivations. |
| 2 | Fine-Tuning Building Blocks | Tokenization, dataset prep, LoRA/QLoRA training. |
| 3 | Fine-Tuning in Practice | Full training runs using Hugging Face, Axolotl, DeepSpeed. |
| 4 | Evaluation & Optimization | lm-eval, model merging, quantization, and versioning. |
| 5 | Module 1 Capstone Project | Deliver a fine-tuned, evaluated, quantized model. |
| 6 | AWS Foundations | SageMaker training, Bedrock customization, and cost control. |
| 7 | Inference & Deployment | Deploy models using vLLM, Modal, or AWS endpoints. |
| 8 | LLM Ops & Reliability | Monitoring, observability, security, and governance. |
| 9 | Module 2 Capstone Project | Deploy a production-ready endpoint with monitoring. |

This program is self-paced — the week numbers are a suggested order, not deadlines.

Move faster, slower, or jump around based on what you already know.

Your progress depends only on completing both projects and meeting the certification rubric.

Weeks are learning stages, not fixed timelines.

You decide how deep to go and when to move forward.

You’ll gain hands-on experience with the same tools modern AI teams rely on, including Hugging Face, Axolotl, DeepSpeed, lm-eval, SageMaker, Bedrock, vLLM, and Modal.

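Everything you learn leads to two major deliverables: a fine-tuned, evaluated, and quantized model (Module 1 capstone) and a production-ready endpoint with monitoring (Module 2 capstone).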

These projects form your professional portfolio and are the basis for certification.

This roadmap is meant to help you build real, hands-on capability — not just theoretical understanding.

As you move through the lessons, you’ll learn to fine-tune, evaluate, and deploy large language models using the same tools production teams use every day.

When you’re ready, continue to Module 1: LLM Fine-Tuning & Optimization to begin your first phase of hands-on work.
