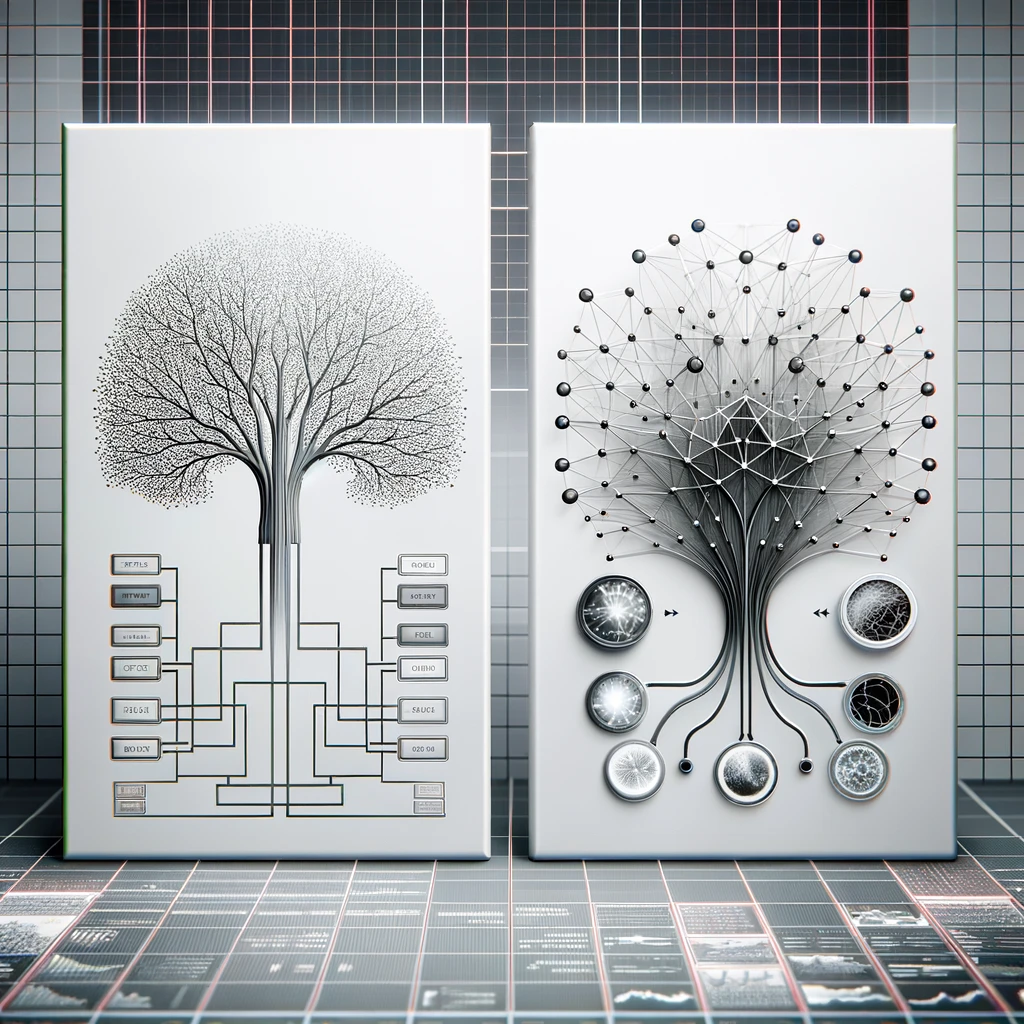

Why Do Deep Learning Models Struggle With Tabular Data?

Deep learning has revolutionized how we handle image, language, and audio data, but its success has not carried over as readily to tabular data. Here, tree-based models such as XGBoost continue to hold sway. Deep learning struggles with the particular challenges of tabular data, which is often heterogeneous, small in scale, and subject to extreme values that can disrupt training.

The challenge primarily stems from the way deep learning models are constructed. These models excel with data that exhibits spatial and sequential invariances—characteristics inherent to images and audio. Tabular data, however, lacks these invariances, making it a tougher nut to crack for deep learning without significant customization.

This gap has spurred active research into developing deep learning architectures tailored for tabular data. However, many of these innovations falter when applied to new datasets. This is often due to a lack of robust benchmarks that accurately reflect real-world data challenges, resulting in models that perform well in controlled tests but underperform in practical applications.

Further complicating matters is the size of available tabular datasets. Unlike expansive image datasets like ImageNet, tabular datasets tend to be smaller and noisier, complicating the training process for deep learning models. This situation leads to issues with replicability and consistency in model evaluations, exacerbated by variable efforts in hyperparameter tuning and statistical uncertainties in benchmark results.

Recognizing these issues, researchers have developed a new benchmarking system for tabular data. Their methodology focuses on providing precise criteria for dataset inclusion and detailed hyperparameter tuning strategies. The goal is to establish a fair and comprehensive testing ground for both tree-based and deep learning models. This new benchmark not only tests these models across a variety of settings but also shares the raw results of extensive evaluations. By doing so, it opens the door for other researchers to refine and test their algorithms against a fixed standard, fostering greater innovation and effectiveness in the field.

The findings from these benchmarks are enlightening, showing why tree-based models often outperform deep learning on tabular data. Through empirical investigations and targeted dataset transformations, the researchers have begun to uncover the inherent biases and strengths of different modeling approaches. This insight is crucial for developing deep learning models that can match or even surpass the effectiveness of tree-based methods in handling the complex and varied nature of tabular data.

Current Efforts and Innovations

Research reviewed by Borisov et al. (2021) reveals extensive efforts to mold deep learning to better suit tabular data. These include:

- Data Encoding Techniques: Initiatives to adapt tabular data for deep learning have been detailed by researchers like Hancock and Khoshgoftaar (2020) and Yoon et al. (2020).

- Hybrid Methods: Integrations that combine the adaptability of neural networks (NNs) with the strong inductive biases of tree-based models are being developed by Lay et al. (2018) and Popov et al. (2020).

- Specific Architectures: Innovations like tabular-specific transformers and modified Multi-Layer Perceptrons (MLPs) are being tailored to address tabular data's unique challenges more effectively.

Challenges in the Landscape

Despite these advances, the field faces significant hurdles:

- Lack of Standard Benchmarks: Unlike areas such as computer vision or NLP, tabular data lacks universally accepted benchmarks, complicating the evaluation of new models.

- Dataset Diversity: Many studies use a limited range of datasets, often tailored or too favorable towards the models being tested.

- Hyperparameter Tuning Costs: The resource-intensive nature of hyperparameter optimization adds another layer of complexity and expense.

A Path Forward

Addressing these challenges, the paper introduces a robust benchmark system using 45 diverse datasets to evaluate and compare the effectiveness of both tree-based and neural network models on tabular data. This benchmark is not only more comprehensive but also designed to provide a clearer and more consistent framework for assessing model performance across various settings.

The introduction of this benchmark is poised to advance our understanding of why tree-based models typically outperform NNs in handling tabular data. By exploring the underlying reasons through empirical studies, such as the specific needs for regularization in MLPs, this work contributes significantly to the field. It highlights the critical need for bespoke solutions and methodological advancements to leverage deep learning's potential fully in processing tabular datasets.

This comprehensive approach offers a promising avenue for bridging the gap between deep learning innovations and the practical realities of tabular data. As the field grows, so too does the potential for deep learning to finally match and even exceed the benchmarks set by traditional methods in this crucial area.

Selection Criteria for Datasets

- Heterogeneous Columns: The datasets chosen include columns that represent diverse features, excluding those like images or signals where columns may just be different sensors of the same type.

- Dimensionality: Only datasets with a dimension-to-sample size ratio (d/n ratio) below 1/10 are included, ensuring that the datasets are not excessively high-dimensional.

- Information Richness: Any dataset with insufficient documentation is excluded unless it's clear that the features are diverse and meaningful.

- Independence and Identical Distribution (I.I.D.): Datasets resembling time series or streams, which are not I.I.D., are removed to maintain the integrity of statistical assumptions.

- Practical Relevance: Artificial datasets are generally excluded unless they simulate real-world phenomena that are of practical importance, such as the Higgs dataset.

- Sufficient Complexity and Size: Extremely small or simple datasets are excluded. This includes datasets with fewer than four features or 3,000 samples, and datasets that are too easily predictable.

- Non-deterministic: Any dataset where the output is a deterministic function of the inputs, such as those derived from games like poker or chess, is removed to focus on less predictable real-world data.
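The numeric thresholds in the list above can be written as a simple filter. The following is a minimal sketch, not the benchmark's actual code: the `DatasetInfo` class, its fields, and the example datasets are hypothetical, and the qualitative criteria (heterogeneity, documentation, practical relevance) are reduced to flags that a human would have to set.

```python
from dataclasses import dataclass


@dataclass
class DatasetInfo:
    """Hypothetical summary of a candidate dataset (illustration only)."""
    name: str
    n_samples: int
    n_features: int
    is_iid: bool = True
    is_deterministic: bool = False


def passes_criteria(ds: DatasetInfo) -> bool:
    """Apply the numeric thresholds quoted in the selection criteria."""
    if ds.n_features < 4 or ds.n_samples < 3000:
        return False  # too small or too simple
    if ds.n_features / ds.n_samples >= 1 / 10:
        return False  # d/n ratio must stay below 1/10
    return ds.is_iid and not ds.is_deterministic


candidates = [
    DatasetInfo("tiny", n_samples=500, n_features=10),
    DatasetInfo("wide", n_samples=3000, n_features=400),
    DatasetInfo("stream", n_samples=50000, n_features=20, is_iid=False),
    DatasetInfo("ok", n_samples=20000, n_features=30),
]
kept = [ds.name for ds in candidates if passes_criteria(ds)]
print(kept)
```

Only the last candidate survives here: the first is too small, the second has a d/n ratio above 1/10, and the third is flagged as non-I.I.D.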

Avoiding Complications in Data

- Dataset Size Standardization: The training set is capped at 10,000 samples for larger datasets to focus on medium-sized datasets. Large-sized datasets are studied separately with a cap at 50,000 samples.

- Handling Missing Data: Datasets with missing entries are cleansed of any rows with missing data, and columns with prevalent missing data are omitted to avoid the complexities associated with various imputation methods.

- Balanced Classes: For classification tasks, datasets are adjusted to have balanced classes by selecting the two most prevalent classes and equalizing their sample sizes.

- Feature Cardinality: Categorical features with more than 20 categories and numerical features with fewer than 10 unique values are removed to simplify the feature space. Numerical features with only two unique values are treated as categorical.
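A few of the preparation rules above can be sketched with NumPy. This is an illustrative approximation under stated assumptions, not the benchmark's own preprocessing: the function names are hypothetical, the data is synthetic, and the special case of treating two-valued numerical features as categorical is left out for brevity.

```python
import numpy as np


def drop_rows_with_missing(X, y):
    """Remove every row that contains at least one missing (NaN) entry."""
    mask = ~np.isnan(X).any(axis=1)
    return X[mask], y[mask]


def drop_low_cardinality_numeric(X, min_unique=10):
    """Drop numerical columns with fewer than `min_unique` distinct values."""
    keep = [j for j in range(X.shape[1]) if len(np.unique(X[:, j])) >= min_unique]
    return X[:, keep], keep


def balance_two_classes(X, y, rng):
    """Keep the two most frequent classes and subsample them to equal size."""
    classes, counts = np.unique(y, return_counts=True)
    top_two = classes[np.argsort(counts)[-2:]]
    n_keep = min(int(np.sum(y == c)) for c in top_two)
    idx = np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_keep, replace=False)
        for c in top_two
    ])
    return X[idx], y[idx]


def cap_train_size(X, y, rng, max_train=10_000):
    """Subsample the training set when it exceeds the cap."""
    if len(X) <= max_train:
        return X, y
    idx = rng.choice(len(X), size=max_train, replace=False)
    return X[idx], y[idx]


rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 3))
X[:, 2] = rng.integers(0, 3, size=1000)   # only 3 distinct values
X[:5, 0] = np.nan                         # a few rows with missing entries
y = rng.choice([0, 1, 2], size=1000, p=[0.6, 0.3, 0.1])

X_clean, y_clean = drop_rows_with_missing(X, y)
X_num, kept_cols = drop_low_cardinality_numeric(X_clean)
X_bal, y_bal = balance_two_classes(X_num, y_clean, rng)
X_train, y_train = cap_train_size(X_bal, y_bal, rng)
print(X_train.shape, kept_cols)
```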

This meticulous approach to dataset selection and preparation is designed to ensure that the benchmark provides a fair, rigorous, and meaningful evaluation of machine learning models, particularly in differentiating the capabilities and performances of tree-based models versus neural networks in handling tabular data. The benchmarks aim to address the current gaps in tabular data analysis by establishing standard metrics and conditions that reflect real-world complexities.

Benchmarking Procedure Overview

Hyperparameter Tuning and Variance

- Challenge of Variance: Hyperparameter tuning introduces a high degree of variance into model evaluations, particularly when the budget for model evaluations is limited. This variance can significantly affect the reliability of benchmark results.

- Methodology: The procedure utilizes a random search strategy for hyperparameter tuning, as recommended by Bergstra et al. (2013). This approach is chosen to sample the variance effectively across different hyperparameter configurations.

Implementation of Random Search

- Search Space: The hyperparameter search spaces are sourced from Hyperopt-Sklearn (Komer et al., 2014) where applicable, supplemented by configurations from the original research and specific settings for MLP, ResNet, and XGBoost from Gorishniy et al. (2021).

- Execution: For each dataset, approximately 400 iterations of random searches are conducted. Tree-based models are processed using CPU resources, while neural networks (NNs) utilize GPU capabilities to ensure efficient computation.
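To make the random search concrete, here is a minimal sketch using only the standard library. The search space, the default values, and the helper names are hypothetical and chosen for illustration; the benchmark's real spaces come from Hyperopt-Sklearn and the original papers. The defaults are placed first, matching the benchmark's convention that the first random search iteration uses the default hyperparameters.

```python
import math
import random

# Hypothetical search space and defaults, for illustration only.
SEARCH_SPACE = {
    "learning_rate": ("log_uniform", 1e-5, 1e-1),
    "n_layers": ("int_uniform", 1, 8),
    "dropout": ("uniform", 0.0, 0.5),
}
DEFAULTS = {"learning_rate": 1e-3, "n_layers": 4, "dropout": 0.0}


def sample_config(rng):
    """Draw one configuration independently for each hyperparameter."""
    config = {}
    for name, (kind, low, high) in SEARCH_SPACE.items():
        if kind == "log_uniform":
            config[name] = math.exp(rng.uniform(math.log(low), math.log(high)))
        elif kind == "int_uniform":
            config[name] = rng.randint(low, high)  # both bounds inclusive
        else:
            config[name] = rng.uniform(low, high)
    return config


def random_search_configs(n_iter, seed=0):
    """Return the default configuration followed by n_iter - 1 random draws."""
    rng = random.Random(seed)
    return [dict(DEFAULTS)] + [sample_config(rng) for _ in range(n_iter - 1)]


configs = random_search_configs(n_iter=400)
print(len(configs), configs[0])
```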

Evaluation Strategy

- Iteration and Evaluation: The performance of models is evaluated based on the best hyperparameter settings found during the validation phase across n random search iterations. This process is repeated 15 times with shuffled orders of random search to bootstrap the expected test scores and mitigate any ordering effects.

- Starting Point: Each random search begins with the default hyperparameters for each model to establish a baseline for improvements.
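The evaluation strategy above can be sketched as follows, assuming the validation and test scores of every random search iteration are already stored in two arrays. The function name and the synthetic scores are hypothetical. For each shuffle, the default configuration stays first, the remaining iterations are permuted, and the test score of the best-on-validation model is recorded after each iteration.

```python
import numpy as np


def expected_test_curve(val_scores, test_scores, n_shuffles=15, seed=0):
    """Mean, min and max test score of the best-on-validation model per iteration.

    Index 0 is assumed to hold the default hyperparameters and always stays
    first; the remaining random search iterations are shuffled.
    """
    val_scores = np.asarray(val_scores, dtype=float)
    test_scores = np.asarray(test_scores, dtype=float)
    rng = np.random.default_rng(seed)
    curves = []
    for _ in range(n_shuffles):
        order = np.concatenate([[0], 1 + rng.permutation(len(val_scores) - 1)])
        val, test = val_scores[order], test_scores[order]
        # Position of the best validation score seen so far at each step.
        best_so_far = np.array([np.argmax(val[: i + 1]) for i in range(len(val))])
        curves.append(test[best_so_far])
    curves = np.array(curves)
    return curves.mean(axis=0), curves.min(axis=0), curves.max(axis=0)


# Synthetic scores standing in for real random search results.
rng = np.random.default_rng(1)
val = rng.uniform(0.6, 0.9, size=50)
test = val + rng.normal(0, 0.01, size=50)
mean_curve, low, high = expected_test_curve(val, test)
print(mean_curve[0], mean_curve[-1])
```

The mean curve corresponds to the plotted line and the min and max to the ribbon. Once every iteration has been seen, all shuffles agree on the same best model, so the ribbon collapses at the final iteration.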

Resources and Accessibility

- Code Accessibility: All scripts and methodologies used in the benchmarking are made publicly available on GitHub at LeoGrin/tabular-benchmark. This transparency supports reproducibility and allows the community to validate and build upon the work.

- Data Sharing: In addition to the code, a detailed data table containing the outcomes of all random searches, which represent about 20,000 compute hours, is shared. This resource lets researchers compare new algorithms under the same conditions without spending extensive computational resources.

The paper then describes how results are aggregated across datasets and how data is prepared for consistent and fair evaluation of machine learning models on tabular data.

Aggregating Results Across Datasets

Measurement Metrics

- Performance Metrics: Model performance is quantified using test set accuracy for classification tasks and R² score for regression tasks, providing standard benchmarks for evaluation.

Normalization Method

- Average Distance to the Minimum (ADTM): The paper uses a metric similar to the distance to the minimum, called the average distance to the minimum (ADTM) when averaged across datasets. Each test score is scaled to lie between 0 and 1 through an affine renormalization between the best-performing and worst-performing models.

- Handling Outliers: Instead of the absolute worst scores, which may be outliers, the normalization uses the models at the 10% quantile for classification and the 50% quantile for regression as the lower reference, so that the benchmark reflects typical model performance rather than outlier effects.

- Score Clipping: For regression tasks, normalized scores that fall below zero, meaning scores below the 50% quantile reference, are clipped to zero to limit the impact of poorly performing models on the overall evaluation.
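The normalization can be sketched per dataset as below. This is one reading of the description, with assumptions made explicit: the quantile is taken over the test scores of all evaluated models on that dataset, the best model maps to 1, and for regression any normalized score below zero is clipped to zero. The function name and the example scores are hypothetical, and the sketch assumes the best score is strictly above the quantile reference.

```python
import numpy as np


def normalize_scores(scores, task):
    """Affine-rescale test scores so the quantile reference maps to 0 and the best to 1."""
    scores = np.asarray(scores, dtype=float)
    q = 0.10 if task == "classification" else 0.50
    low = np.quantile(scores, q)   # reference instead of the worst model
    high = scores.max()
    normalized = (scores - low) / (high - low)
    if task == "regression":
        # Very poor R² scores would otherwise dominate the average.
        normalized = np.clip(normalized, 0.0, None)
    return normalized


acc = normalize_scores([0.70, 0.80, 0.85, 0.90], task="classification")
r2 = normalize_scores([-5.0, 0.2, 0.6, 0.8], task="regression")
print(acc, r2)
```

Averaging these normalized scores across datasets then gives an aggregate figure per model.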

Data Preparation

The approach to data preparation aims to minimize manual intervention while ensuring that the data is optimally configured for the machine learning models being tested.

Transformations Applied

- Gaussianization of Features: For neural network training, features are transformed using Scikit-learn’s QuantileTransformer to approximate a Gaussian distribution, thus normalizing data distribution and potentially enhancing model performance.

- Target Variable Transformation: In regression settings, heavy-tailed target variables (e.g., house prices) are log-transformed to normalize their distribution. Additionally, there's an option to Gaussianize the target variable for the model fitting phase, which is then inversely transformed for performance evaluation, again using Scikit-learn’s tools.

- Handling Categorical Features: For models that do not inherently process categorical data, features are encoded using Scikit-learn’s OneHotEncoder. This encoding transforms categorical variables into a format that neural networks and other models can process more effectively.
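The three transformations above might look like this with scikit-learn. It is a minimal sketch on synthetic data: the array names, the number of quantiles, and the random seeds are assumptions, and in a real pipeline the transformers would be fitted on the training split only.

```python
import numpy as np
from sklearn.preprocessing import OneHotEncoder, QuantileTransformer

rng = np.random.default_rng(0)
X_num = rng.lognormal(size=(500, 2))                 # skewed numerical features
X_cat = rng.choice(["a", "b", "c"], size=(500, 1))   # one categorical feature
y = rng.lognormal(mean=12.0, size=500)               # heavy-tailed target, e.g. prices

# Gaussianize numerical features (used for neural network training).
qt = QuantileTransformer(output_distribution="normal", n_quantiles=100, random_state=0)
X_gauss = qt.fit_transform(X_num)

# One-hot encode categorical features for models without native support.
encoder = OneHotEncoder(handle_unknown="ignore")
X_onehot = encoder.fit_transform(X_cat).toarray()

# Log-transform the heavy-tailed target; invert before computing metrics.
y_log = np.log(y)
y_back = np.exp(y_log)

X_ready = np.hstack([X_gauss, X_onehot])
print(X_ready.shape)
```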

Results and Analysis

The benchmarking results provide valuable insights into the performance of tree-based models and neural networks across a diverse range of tabular datasets. The findings highlight the strengths and weaknesses of each model type, shedding light on the factors that contribute to their relative performance.

Figure: Benchmark on medium-sized datasets, with only numerical features. Dotted lines correspond to the score of the default hyperparameters, which is also the first random search iteration. Each value corresponds to the test score of the best model (on the validation set) after a specific number of random search iterations, averaged on 15 shuffles of the random search order. The ribbon corresponds to the minimum and maximum scores on these 15 shuffles.

Figure: Benchmark on medium-sized datasets, with both numerical and categorical features. Dotted lines correspond to the score of the default hyperparameters, which is also the first random search iteration. Each value corresponds to the test score of the best model (on the validation set) after a specific number of random search iterations, averaged on 15 shuffles of the random search order. The ribbon corresponds to the minimum and maximum scores on these 15 shuffles.

Key Points:

- Hyperparameter Tuning: Even after extensive hyperparameter tuning, NNs do not match the performance of tree-based models on tabular data. This suggests that NNs may lack inductive biases that tree-based models naturally possess, such as handling mixed data types and non-linear relationships without extensive feature engineering.

- Categorical Variables: The gap appears both on datasets with only numerical features and on datasets with numerical and categorical features, which suggests that categorical variables are not the main weakness of NNs and that their architecture is poorly matched to tabular data irrespective of data type. For data scientists and machine learning engineers, this means that choosing the model type based on data characteristics is crucial.

Findings

Finding 1: NNs Are Biased Toward Overly Smooth Solutions

The first finding is that neural networks (NNs) exhibit a bias towards generating smoother function approximations compared to tree-based models. This bias is observed through experiments involving Gaussian kernel smoothing of the target function, which intentionally smooths out irregularities to assess how models adapt to these changes.

Key Insights:

- Gaussian Kernel Smoothing: The researchers apply a Gaussian kernel smoother to the targets of each train set with varying length-scale values, then compare how NNs and tree-based models perform as the target function becomes smoother.

- Impact on Model Performance: Tree-based models show a marked decrease in accuracy with increased smoothing (especially at smaller length scales), while neural networks are relatively unaffected. This indicates that NNs are naturally biased towards smoother function approximations, which may not capture the complex, high-frequency variations present in the actual data.

- Comparison with Tree-Based Models: Unlike NNs, tree-based models, which learn piece-wise constant functions, do not exhibit this smoothing bias. This difference highlights the inherent design and functional divergences between these model types, where tree-based models excel at capturing more granular and discontinuous patterns in the data.

- Literature Consistency: The findings align with existing literature (e.g., Rahaman et al., 2019) suggesting that NNs are predisposed towards low-frequency functions. They do not contradict studies reporting benefits from regularization and careful optimization on tabular data, since these techniques might help NNs approximate irregular patterns more effectively.

- Potential Solutions: The authors hint that implementing specific mechanisms like the ExU activation (from the Neural-GAM paper by Agarwal et al., 2021) or using periodic embeddings (Gorishniy et al., 2022) might allow NNs to better capture the higher-frequency components of the target function, potentially overcoming their inherent smoothing bias.
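The smoothing step in this experiment can be illustrated with a Nadaraya-Watson estimator. The sketch below assumes an isotropic Gaussian kernel controlled by a single length scale, which may differ from the exact kernel used in the paper, and it uses a synthetic one-dimensional square wave as an irregular target. The function name is hypothetical.

```python
import numpy as np


def smooth_targets(X, y, length_scale):
    """Replace each train target by a Gaussian-weighted average of all train targets."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    sq_dists = ((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1)
    weights = np.exp(-0.5 * sq_dists / length_scale**2)
    return weights @ y / weights.sum(axis=1)


# Irregular target: a square wave with ten periods on [0, 1].
X = np.linspace(0.0, 1.0, 200)[:, None]
y = np.sign(np.sin(20 * np.pi * X[:, 0]))

spread = {ls: smooth_targets(X, y, ls).std() for ls in (0.001, 0.02, 0.5)}
print(spread)
```

As the length scale grows, the smoothed target loses its high-frequency structure and its spread shrinks. A model that relies on sharp, piece-wise constant patterns has less to exploit on such a target.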

Finding 2: Uninformative Features Affect Neural Networks More Than Tree-Based Models

The second key finding of the paper reveals that neural networks (especially MLP-like architectures) are more negatively impacted by uninformative features in tabular datasets compared to tree-based models like Random Forests or Gradient Boosting Trees. This finding highlights a significant challenge for deep learning when working with real-world tabular data, which often contains many irrelevant or redundant features.

Key Insights:

- Tabular Data and Uninformative Features: Tabular datasets typically contain a mixture of important and uninformative (irrelevant or redundant) features. In many cases, a large portion of the features may not significantly contribute to the prediction task. The study conducted experiments by systematically removing features according to their importance (as ranked by Random Forests) to evaluate the impact of irrelevant features on model performance.

- Tree-Based Models' Robustness: Tree-based models, particularly Gradient Boosting Trees (GBT), are relatively unaffected by the presence of uninformative features. These models can efficiently prioritize the most informative features during training and ignore irrelevant ones. The performance of GBTs remained stable even when up to 50% of the least important features were removed, showing a high level of robustness in handling noisy features.

- Neural Networks' Sensitivity to Uninformative Features: In contrast, neural networks demonstrated a higher sensitivity to uninformative features. The performance gap between neural networks and tree-based models widened when uninformative features were added to the dataset, indicating that neural networks struggle to filter out these irrelevant features effectively. When a significant portion of uninformative features was removed, the performance of MLPs and ResNet architectures improved, but they still lagged behind tree-based models. This suggests that neural networks are not as naturally equipped to deal with noisy feature spaces, which are common in tabular data.

- Rotation Invariance and Feature Importance: One possible explanation for this finding is the inherent rotation invariance of neural networks, meaning that the model treats all features equally at the start and cannot easily distinguish between important and unimportant features. As a result, neural networks require more training and tuning to identify the most relevant features. In contrast, decision trees and their ensembles (such as Random Forests and GBTs) can directly evaluate feature importance, making them more adept at handling uninformative or redundant data.

- Experimental Validation: The study validated these insights through multiple experiments. In one, they observed that the removal of uninformative features narrowed the performance gap between neural networks and tree-based models. Conversely, when additional uninformative features were added, the performance of MLPs deteriorated faster than that of tree-based models, further emphasizing the neural networks' susceptibility to noisy data.
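The two manipulations used in these experiments, removing the least important features and adding uninformative ones, can be sketched as below. The helper names are hypothetical, the importance scores are made-up numbers standing in for a Random Forest ranking, and drawing the extra features from a standard Gaussian is an assumption of this sketch.

```python
import numpy as np


def add_uninformative_features(X, n_extra, rng):
    """Append noise columns that are independent of the target."""
    noise = rng.normal(size=(X.shape[0], n_extra))
    return np.hstack([X, noise])


def keep_most_important(X, importances, keep_fraction):
    """Keep the top fraction of columns ranked by an importance score."""
    n_keep = max(1, int(round(keep_fraction * X.shape[1])))
    top = np.sort(np.argsort(importances)[::-1][:n_keep])
    return X[:, top], top


rng = np.random.default_rng(0)
X = rng.normal(size=(100, 4))
importances = np.array([0.50, 0.05, 0.40, 0.05])  # hypothetical ranking scores

X_reduced, kept = keep_most_important(X, importances, keep_fraction=0.5)
X_noisy = add_uninformative_features(X, n_extra=3, rng=rng)
print(kept, X_reduced.shape, X_noisy.shape)
```

Training each model on `X_reduced` and `X_noisy` and comparing the scores with those obtained on `X` reproduces the shape of the experiment, if not its exact protocol.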

Finding 3: Data in Tabular Form Is Not Invariant to Rotation, and Neither Should Learning Procedures Be

The third key finding of the paper focuses on the concept of rotation invariance in learning algorithms, particularly in how neural networks handle tabular data. The authors found that data in tabular form typically carries intrinsic structure and meaning tied to specific features, and applying rotation to such data distorts this structure. Neural networks, particularly Multi-Layer Perceptrons (MLPs) and ResNets, exhibit rotation invariance, which negatively impacts their ability to effectively model tabular data. In contrast, tree-based models are not rotation-invariant, which gives them a significant advantage when working with tabular datasets.

Key Insights:

- The Problem of Rotation Invariance in Neural Networks: The learning procedures of neural networks such as MLPs and ResNets are rotation invariant. This means that if the features of a dataset are rotated (i.e., transformed by a linear map that mixes the features), the model's performance should not change. While this property might be beneficial in domains like image processing, where pixel values do not have specific, independent meanings, it is detrimental for tabular data. Tabular data is often structured with specific feature columns like "age," "weight," or "income," where each column carries a distinct, independent meaning. When neural networks treat these features as if they can be mixed or rotated, they lose important information about the inherent relationships between features.

- Tree-Based Models’ Advantage: Tree-based models, such as Random Forests and Gradient Boosting Trees (GBTs), are not rotation invariant. These models split on one feature at a time and make decisions based on the exact values of features without mixing them. This enables tree-based models to maintain the natural structure of the data and take advantage of the important information each feature carries without confusing it with others. In the experiments, random rotations were applied to the datasets to see how different models reacted. After random rotations, the performance of tree-based models declined more than that of neural networks. In fact, the performance ranking reversed, with neural networks slightly outperforming tree-based models. This shows that tree-based models capitalize on the natural structure of the data, which rotation-invariant neural networks ignore.

- Impact of Rotation on Model Performance: After applying random rotations to the data, the authors found that the performance of neural networks (especially ResNets) remained largely unchanged, reflecting their insensitivity to the original orientation of the data. However, tree-based models experienced a notable drop in performance after rotation, further indicating that they rely heavily on the natural feature orientation of the data. This experiment highlights that in domains where features are heterogeneous and carry distinct meanings, rotation invariance can be a major drawback for neural networks. It also suggests that neural networks need to preserve the original structure of the data rather than treating all features as equal and interchangeable.

- Relation to Uninformative Features: The study also linked rotation invariance to the issue of uninformative features (explored in Finding 2). Since rotation-invariant models such as MLPs and ResNets cannot naturally differentiate between informative and uninformative features, a rotation that mixes the two makes it harder to distinguish relevant patterns. Removing uninformative features before applying a rotation led to a smaller performance drop than rotating the full feature set.

- Empirical Evidence: When the authors removed the least important 50% of features from the datasets and then applied a rotation, the performance of tree-based models still dropped, but less sharply than when all features were kept. This suggests that tree-based models do suffer from feature mixing, but less so once uninformative features are out of the way.
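A random rotation of the feature space can be sketched with a QR decomposition. This is a common way to sample a random orthogonal matrix and is an assumption of this sketch rather than a description of the paper's code; the sampled matrix may include a reflection, which does not matter for the argument. The function name and data are hypothetical.

```python
import numpy as np


def random_rotation(n_features, rng):
    """Sample a random orthogonal matrix via QR decomposition."""
    A = rng.normal(size=(n_features, n_features))
    Q, R = np.linalg.qr(A)
    # Flip column signs so the result is uniformly distributed over orthogonal matrices.
    return Q * np.sign(np.diag(R))


rng = np.random.default_rng(0)
X = rng.normal(size=(200, 5))
Q = random_rotation(X.shape[1], rng)

# The same matrix must be applied to the train and test features.
X_rot = X @ Q
print(np.round(Q.T @ Q, 6))
```

The rotation preserves distances between samples, but every rotated column is now a mixture of the original columns. A dense first layer can absorb `Q` into its weights, whereas a tree that splits on one column at a time no longer sees "age" or "income" as separate axes.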

Discussion and Conclusion

Discussion:

This study systematically benchmarks the performance of tree-based models and neural networks on tabular data, uncovering several critical insights that highlight why tree-based models continue to outperform deep learning approaches in this domain. Despite the immense progress made in deep learning for tasks such as image, text, and audio, tabular data presents unique challenges that deep learning models are still not adequately equipped to handle.

One of the major reasons behind the underperformance of deep learning models, such as MLPs and Transformers, on tabular data is the mismatch between inductive biases. Tabular datasets often contain heterogeneous features, uninformative attributes, and irregular target functions, none of which are common in the domains where deep learning has traditionally excelled. Neural networks tend to struggle with these irregular patterns because they are inherently biased toward learning smoother, more continuous functions, which makes them unsuitable for the complex, non-smooth relationships typically found in tabular data.

The paper identifies three main challenges for neural networks in tabular learning:

- Handling uninformative features: Neural networks, especially MLPs, are more sensitive to irrelevant features compared to tree-based models, which can easily ignore unimportant information. This suggests a need for better feature selection or attention mechanisms in deep learning models.

- Data orientation and rotation invariance: Neural networks are rotationally invariant, meaning they treat all features equally without preserving the natural structure of the data. This is a major disadvantage for tabular data, where features often have specific and independent meanings.

- Learning irregular functions: Neural networks are biased toward smoother solutions and struggle to capture the irregular, non-smooth target functions typical of tabular data. This explains why tree-based models, which learn piecewise constant functions, perform better on such datasets.

These challenges highlight why tree-based models are still the preferred choice for most practitioners working with tabular data, despite the excitement surrounding deep learning methods.

The findings also reveal that while deep learning models can sometimes approach the performance of tree-based models after extensive hyperparameter tuning, they require significantly more computation and training time, which is another practical drawback for their application in real-world tabular data scenarios.

Conclusion:

In conclusion, the study provides compelling evidence that tree-based models remain state-of-the-art for tabular data, outperforming neural networks across a wide range of benchmarks. Despite numerous attempts to adapt deep learning architectures for tabular data, tree-based methods, particularly ensemble models like XGBoost and Random Forests, consistently show superior performance in terms of accuracy, robustness, and training efficiency. These findings suggest that while deep learning holds promise, current neural network architectures are not inherently suited to tabular data, and further research is required to address the following key challenges:

- Robustness to uninformative features: Deep learning models must improve their ability to ignore irrelevant features, which are common in tabular data.

- Preserving the natural orientation of the data: Rotation invariance in neural networks is a significant disadvantage in tabular datasets, where feature names and meanings are critical. Developing models that respect this inherent structure is crucial.

- Capturing irregular patterns in data: Neural networks need to become better at learning non-smooth and irregular functions, as these are frequently encountered in real-world tabular datasets.

This paper’s contribution of a robust benchmarking methodology and the provision of open datasets and hyperparameter search results offers a solid foundation for future research aimed at improving deep learning architectures for tabular data. The hope is that by addressing the unique challenges of tabular data, researchers can close the performance gap between deep learning and tree-based models, leading to the development of more versatile and powerful machine learning models.

References

- Borisov, V., Leemann, T., Seßler, K., Haug, J., Pawelczyk, M., & Kasneci, G. (2021). Deep Neural Networks and Tabular Data: A Survey. arXiv preprint arXiv:2110.01889.