This project develops a hand gesture recognition system for controlling a mouse pointer using real-time computer vision with Python and OpenCV, enabling actions like movement, clicking, and scrolling without traditional input devices.

It also explores voice-assisted control, integrating voice commands with mouse control through natural language processing (NLP), machine learning (ML), and computer vision. Voice input is processed in real time using deep learning models (e.g., RNNs, Transformers) to perform actions such as clicking, scrolling, and dragging.
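Once a speech model has produced a transcript, the remaining step is to map that text to a mouse action. The following is a minimal sketch of that mapping step only. It assumes the transcript arrives as a plain string, and the phrase table `COMMANDS` and the function `parse_command` are hypothetical names introduced for illustration; the source does not list the actual command vocabulary, and the deep learning transcription stage is not shown.

```python
import re
from typing import Optional

# Hypothetical phrase-to-action table (assumption: not taken from the project).
COMMANDS = {
    "double click": "double_click",
    "right click": "right_click",
    "click": "left_click",
    "scroll up": "scroll_up",
    "scroll down": "scroll_down",
    "drag": "drag_start",
    "drop": "drag_end",
}


def parse_command(transcript: str) -> Optional[str]:
    """Return the action name for the first matching phrase, or None."""
    # Keep only alphabetic words so punctuation does not block a match.
    words = re.findall(r"[a-z]+", transcript.lower())
    text = " " + " ".join(words) + " "
    # Try longer phrases first so "right click" is not read as "click".
    for phrase in sorted(COMMANDS, key=len, reverse=True):
        if f" {phrase} " in text:
            return COMMANDS[phrase]
    return None
```

For example, `parse_command("Please right click here")` yields `"right_click"`, while an unrelated sentence yields `None`, so the caller can ignore speech that is not a command.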

Applications include accessibility for people with disabilities, gaming, VR, and remote work. Challenges involve latency, background noise, and voice recognition accuracy. Future improvements aim for multi-modal input (gesture + voice), better accuracy, and enhanced security for voice data.

Overall, the technology promises to improve accessibility, productivity, and user experience in multiple fields.

In recent years, gesture recognition and motion detection technologies have gained significant momentum, revolutionizing human-computer interaction (HCI) across multiple industries. Driven by advancements in computer vision and the increasing demand for touch-free interfaces, these technologies are transforming how users interact with digital devices, moving beyond traditional input tools like keyboards, mice, and controllers.

Gesture recognition enables users to interact seamlessly with systems through natural hand movements, offering convenience, accessibility, and immersion. Its applications span sectors such as gaming, healthcare, automotive, and accessibility.

This specific project leverages a basic webcam combined with Python and OpenCV to develop a cost-effective, real-time gesture recognition system for mouse pointer control. Unlike expensive hardware solutions, it utilizes the processing capabilities of common devices to detect and interpret hand gestures. The system identifies hands, detects individual fingers, and maps complex motion patterns to corresponding computer commands, enabling actions like cursor movement, clicking, and scrolling.
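The mapping from a detected hand to pointer commands can be sketched as follows. This is an illustrative sketch under stated assumptions, not the project's implementation: it assumes an upstream detector already provides fingertip positions as normalized coordinates in the range 0 to 1 (with the frame already mirrored), and the names `CursorMapper` and `is_pinch`, the margin, the smoothing factor, and the pinch threshold are all hypothetical.

```python
from dataclasses import dataclass, field
from math import hypot
from typing import Optional, Tuple


@dataclass
class CursorMapper:
    """Maps a normalized fingertip position (0..1) to screen pixels."""
    screen_w: int
    screen_h: int
    margin: float = 0.1  # assumed: ignore the outer 10% of the camera frame
    alpha: float = 0.5   # assumed smoothing factor (1.0 disables smoothing)
    _prev: Optional[Tuple[float, float]] = field(
        default=None, init=False, repr=False
    )

    def update(self, nx: float, ny: float) -> Tuple[int, int]:
        span = 1.0 - 2.0 * self.margin
        # Rescale the active region of the frame to 0..1 and clamp.
        u = min(max((nx - self.margin) / span, 0.0), 1.0)
        v = min(max((ny - self.margin) / span, 0.0), 1.0)
        tx = u * (self.screen_w - 1)
        ty = v * (self.screen_h - 1)
        if self._prev is None:
            sx, sy = tx, ty
        else:
            # Exponential smoothing reduces jitter from noisy detections.
            px, py = self._prev
            sx = px + self.alpha * (tx - px)
            sy = py + self.alpha * (ty - py)
        self._prev = (sx, sy)
        return round(sx), round(sy)


def is_pinch(
    thumb: Tuple[float, float],
    index: Tuple[float, float],
    threshold: float = 0.05,  # assumed distance in normalized units
) -> bool:
    """Treat thumb and index fingertips closer than the threshold as a click."""
    return hypot(thumb[0] - index[0], thumb[1] - index[1]) < threshold
```

In a full pipeline, the pixel coordinates returned by `update` would be passed to whatever mouse-control call the system uses, and `is_pinch` would trigger a click. A lower `alpha` gives a steadier cursor at the cost of added lag.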

The system aims to deliver accurate recognition and responsive feedback, making interaction more natural and intuitive. By translating gestures into system commands, the project provides a touch-free alternative to traditional input devices. This design not only serves entertainment and productivity purposes but also addresses accessibility challenges, helping users with mobility impairments interact more independently with computers.

The benefits of this project are broad: it is cost-effective, requires no specialized hardware, and improves accessibility.

By combining gesture recognition technology with Python and OpenCV, this project showcases how accessible, affordable, and versatile solutions can replace conventional input devices. It demonstrates the potential to reshape user experiences in both professional and personal contexts, paving the way for broader adoption of touchless, intelligent interaction systems.

Overall, this work illustrates how modern computer vision, machine learning, and intuitive design can be combined into practical tools for human-computer interaction. Its adaptability makes it relevant across entertainment, accessibility, healthcare, and automotive domains, marking a step toward more natural, inclusive, and immersive ways of engaging with digital technology.

Hardware Requirements:

| Component | Description |
|---|---|
| Computer | Ensure the hardware has sufficient computational resources to run the assistant smoothly. |
| Microphone | Choose a quality microphone for accurate speech input recognition. |
| Camera | If implementing camera functionality, select a suitable camera compatible with the hardware and software setup. |

Use a sufficiently powerful computer, a high-quality microphone, and a compatible camera for reliable speech recognition and gesture-based interaction.

Software Requirements:

| Component | Description |
|---|---|
| Python and Necessary Libraries | Install Python and required libraries using package managers like pip. |
| Development Environment | Set up a development environment, such as Anaconda or a virtual environment for managing dependencies. |
| VoIP Service | If incorporating calling functionality, sign up for a VoIP service like Twilio and configure it for integration with the assistant. |

Install Python and the essential libraries, set up a VoIP service if calling functionality is needed, and configure a stable development environment for the AI assistant.

Libraries Required:

Gesture Control Testing

| Test Case | Expected Outcome |
|---|---|
| Hand gesture tracking for mouse control | The assistant should accurately track hand movements. |
| Click and right-click detection | AI should correctly identify and execute mouse actions. |
| Cursor movement accuracy | Cursor should follow hand gestures without significant lag. |
| Latency measurement | Response time should be minimal for smooth operation. |
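The latency test case above could be approached with a small timing harness like the one below. This is a sketch under assumptions: `measure_latency` is a hypothetical helper, and `fake_pipeline_step` is a stand-in that only sleeps; in a real test it would be replaced by one pass of capture, detection, and cursor update.

```python
import statistics
import time
from typing import Callable, Dict


def measure_latency(step: Callable[[], None], runs: int = 50) -> Dict[str, float]:
    """Time repeated calls to `step` and summarize the results in milliseconds."""
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        step()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    # Index of the 95th-percentile sample, clamped to the last element.
    p95_index = min(len(samples) - 1, int(0.95 * len(samples)))
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p95_ms": samples[p95_index],
        "max_ms": samples[-1],
    }


def fake_pipeline_step() -> None:
    """Placeholder for one frame of processing (assumption: ~2 ms of work)."""
    time.sleep(0.002)


if __name__ == "__main__":
    print(measure_latency(fake_pipeline_step, runs=20))
```

Reporting the median and 95th percentile alongside the mean helps reveal occasional slow frames that an average alone would hide.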

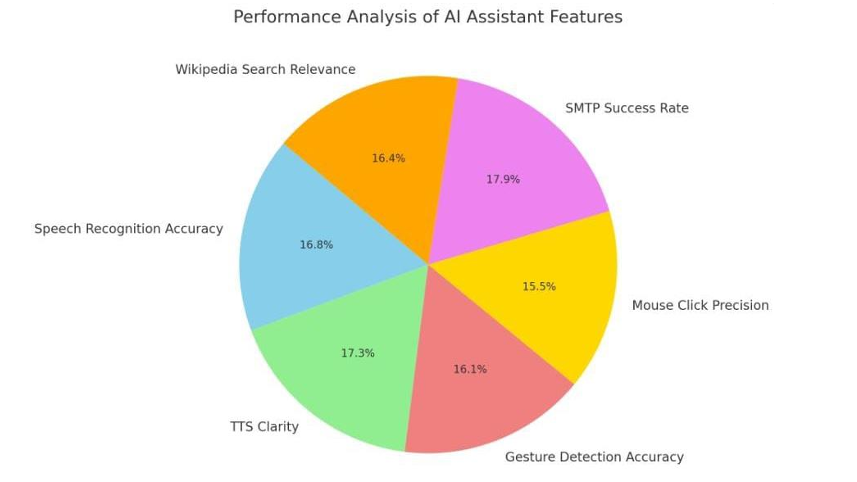

The pie chart shows the performance of key features: speech recognition, text-to-speech clarity, gesture detection, mouse click precision, SMTP success rate, and Wikipedia search relevance. The highest score is the SMTP success rate for automated email (98%), while mouse click precision under gesture control is the lowest (85%).

| Parameter | Metric | Result |
|---|---|---|
| Speech Recognition | Accuracy | 92% |
| Text-to-Speech (TTS) | Response Clarity | 95% |
| Gesture Tracking | Detection Accuracy | 88% |
| Gesture Control | Mouse Click Precision | 85% |
| Latency | Average Response Time | 200 ms |
| Automated Email | SMTP Success Rate | 98% |
| Wikipedia Search | Information Relevance | 90% |
| API Requests | Data Retrieval Speed | 150 ms |

The project demonstrates a motion detection system combined with an AI voice assistant, enabling hands-free, intuitive human-computer interaction with applications in healthcare, gaming, smart home automation, and accessibility. Challenges such as noise interference, latency, and privacy concerns remain, although ongoing advances in AI and machine learning continue to improve accuracy, efficiency, and security.

The developed hand gesture recognition system uses computer vision and machine learning to translate gestures into mouse commands, providing a cost-effective, accessible alternative to traditional input devices without requiring specialized hardware. This enhances user experience, promotes inclusivity, and supports use cases in accessibility, gaming, and healthcare.

Overall, the system successfully demonstrates the potential of real-time gesture recognition to bridge the gap between physical and touchless interaction, paving the way for more interactive, user-friendly, and versatile computing methods.