Abstract

As code-switching becomes more common on social media, the number of bilingual users and the volume of code-switched data are rapidly increasing. This presents unique challenges for sentiment analysis, which has traditionally focused on monolingual text. Sentiment analysis involves classifying the sentiment of comments, reviews, or tweets as positive, negative, or neutral. To address the scarcity of research on code-switched sentiment analysis, this project contributes the first code-switched corpus for Egyptian Arabic-English sentiment analysis (EESA), featuring 4,100 annotated YouTube comments. The project also implements various sentiment analysis models, including traditional neural models (BiLSTM-Attention and Hybrid-Transformer) and advanced language models (Gemini and GPT). The traditional models, using non-contextual, contextual, and character embeddings with additional word features, achieved an ensemble test F1-score of 92.54%. The advanced models, evaluated in zero-shot and fine-tuned configurations, showed significant potential: Gemini-1.5 and GPT-4o performed well as zero-shot models, and the fine-tuned GPT-4o model with sentiment cues achieved the highest test F1-score of 95.35%.

Introduction

The popularity of social media platforms has skyrocketed, resulting in a rapid rise in user numbers and a substantial amount of data being shared daily. These platforms have become vital for promoting products and services and gathering customer feedback. Opinions and reviews shared on social media significantly influence sales and business strategies. To categorize these opinions by sentiment, a robust sentiment analysis (SA) tool is essential. Sentiment analysis involves studying and analyzing people's opinions about products, services, organizations, individuals, events, and issues. It identifies sentiments in reviews, comments, or tweets and classifies them into positive, negative, or neutral.

Sentiment analysis faces several challenges, including sarcasm, comparative opinions, and differing perspectives between the author and the reader. Additionally, Arabic introduces complexity with its rich morphology, diverse dialects, and informal language common on social media platforms, such as slang, emoticons, and inconsistent spelling. Adding to these complexities is the phenomenon of code-switching (CS), which further complicates sentiment analysis tasks. CS refers to the practice of alternating between two or more languages within a conversation or text. Most SA research focuses on monolingual texts, leaving CS largely under-explored. Egyptian users frequently post Arabic-English code-switched content. To the best of our knowledge, no sentiment analysis work has been conducted on Egyptian Arabic-English code-switched data, and no datasets are available for this task.

The project contributions include:

- Introducing the EESA corpus, the first code-switched corpus for Egyptian Arabic-English sentiment analysis, featuring 4,100 YouTube comments.

- Implementing two groups of sentiment analysis models for code-switched text and comparing traditional neural models with advanced language models.

Sentiment analysis models were implemented for Egyptian Arabic-English code-switched text. These include traditional neural architectures such as BiLSTM-Attention and Hybrid-Transformer, as well as advanced language models such as Gemini and GPT. The traditional neural models were trained and tested on the EESA corpus using non-contextual, contextual, and character embeddings, supplemented with novel additional word features; their ensemble achieved a test F1-score of 92.54%. The advanced language models were evaluated on the same corpus both as zero-shot models and, where supported, in fine-tuned configurations. The fine-tuned GPT-4o with sentiment cues achieved the highest test F1-score of 95.35%.

Egyptian Arabic-English Code-Switched Corpus

This section describes the techniques used to collect, preprocess, and annotate Egyptian Arabic-English code-switched comments for the SA task.

Corpus Collection

All data was collected from YouTube. Due to the variety of Arabic dialects, a single dialect was chosen to ensure adequate training examples for the trained model. The selected dialect was Egyptian Arabic, as the annotation team possesses a strong command of Egyptian Arabic and English. A diverse corpus was curated from comments across domains such as Egyptian celebrities, movies, and series, adhering to ethical guidelines for private data protection and copyright compliance. The search queries included the top 90 actors, 30 Egyptian singers, 60 movies (2018-2022), and 46 series (2021-2022), all conducted in Arabic.

A total of 29,556 Arabic-English code-switched comments were extracted, identified by the presence of characters from the Unicode ranges for both Arabic and English. Two members of the annotation team manually filtered the comments, retaining only those written in Egyptian Arabic and English and excluding comparative opinions and inappropriate remarks. Of the 10,000 preprocessed comments reviewed, 4,770 were retained.
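The Unicode-range test can be sketched as follows. The exact ranges used for EESA are not stated, so this sketch assumes the basic Arabic block (U+0600 to U+06FF) and ASCII Latin letters; the function name `is_code_switched` is hypothetical.

```python
import re

# Assumed character classes: the basic Arabic block and ASCII Latin letters.
ARABIC_RE = re.compile(r"[\u0600-\u06FF]")
LATIN_RE = re.compile(r"[A-Za-z]")


def is_code_switched(comment: str) -> bool:
    """True if the comment has at least one character from each range."""
    return ARABIC_RE.search(comment) is not None and LATIN_RE.search(comment) is not None


comments = [
    "الفيلم ده really amazing",  # Arabic + English: kept
    "الفيلم ده حلو جدا",          # Arabic only: dropped
    "great movie",                # English only: dropped
]
candidates = [c for c in comments if is_code_switched(c)]
```

Such a filter over-approximates (the Arabic block also covers Arabic punctuation and other Arabic-script languages), which is why the manual filtering step is still needed.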

Corpus Annotation

Nine Egyptians participated in the annotation process and were divided into groups of three. Each of the 4,770 comments was labeled by three annotators. The annotation team followed annotation guidelines written from the author's perspective. Three sentiment classes were defined (positive, negative, and neutral), each with a definition and examples. The guidelines also included examples explaining the difference between the author's and the reader's perspectives, including one example of sarcasm. When annotators disagreed, they discussed the comment, and each annotator explained the reasoning behind their label. In some cases, a single dissenting annotator's reasoning persuaded the other two to change their labels willingly. A total of 133 comments with mixed sentiment were excluded.
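Agreement among the three annotators of each comment can be quantified with Cohen's kappa. Because Cohen's kappa is a two-rater statistic and the text does not say how it was applied to three annotators, this sketch assumes one common approach: computing it for each annotator pair and averaging (Fleiss' kappa is the usual multi-rater alternative). The labels below are made-up examples.

```python
from collections import Counter
from itertools import combinations


def cohen_kappa(a, b):
    """Cohen's kappa for two equally long label sequences."""
    n = len(a)
    observed = sum(x == y for x, y in zip(a, b)) / n
    count_a, count_b = Counter(a), Counter(b)
    expected = sum(count_a[k] * count_b[k] for k in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


def mean_pairwise_kappa(annotations):
    """Average Cohen's kappa over all annotator pairs (an assumed aggregation)."""
    pairs = list(combinations(annotations, 2))
    return sum(cohen_kappa(a, b) for a, b in pairs) / len(pairs)


# Hypothetical labels from three annotators for five comments.
ann1 = ["pos", "neg", "neu", "pos", "neg"]
ann2 = ["pos", "neg", "neu", "pos", "neg"]
ann3 = ["pos", "neg", "neu", "pos", "neu"]
kappa = mean_pairwise_kappa([ann1, ann2, ann3])
```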

The manual filtering process unintentionally kept some comments that should have been excluded. Comparative opinions and comments in non-Egyptian Arabic dialects were identified and removed during the discussions, leaving 4,131 comments. The inter-annotator agreement, measured by Cohen's kappa, is 0.98. Only comments with unanimous agreement were included in the corpus. The EESA corpus therefore contains 4,100 annotated Egyptian Arabic-English code-switched comments, distributed as 1,818 positive (44.34%), 1,294 neutral (31.56%), and 988 negative (24.10%). The corpus is divided into a 60% training set, a 20% development (dev) set, and a 20% test set. Stratified sampling was applied to preserve the class distribution of this imbalanced corpus across the three splits.
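A 60/20/20 stratified split can be sketched by shuffling and cutting each class separately. This uses only the standard library; the function name, seed, and per-class rounding are assumptions rather than the authors' procedure. The class counts are the EESA figures above, with integer ids standing in for comments.

```python
import random
from collections import defaultdict


def stratified_split(items, labels, ratios=(0.6, 0.2, 0.2), seed=42):
    """Split (item, label) pairs into train/dev/test, preserving class proportions."""
    by_label = defaultdict(list)
    for item, label in zip(items, labels):
        by_label[label].append(item)
    rng = random.Random(seed)
    train, dev, test = [], [], []
    for label, group in by_label.items():
        rng.shuffle(group)  # shuffle within each class, then cut
        n_train = round(len(group) * ratios[0])
        n_dev = round(len(group) * ratios[1])
        train += [(x, label) for x in group[:n_train]]
        dev += [(x, label) for x in group[n_train:n_train + n_dev]]
        test += [(x, label) for x in group[n_train + n_dev:]]  # remainder goes to test
    return train, dev, test


labels = ["positive"] * 1818 + ["neutral"] * 1294 + ["negative"] * 988
train, dev, test = stratified_split(list(range(len(labels))), labels)
print(len(train), len(dev), len(test))  # 2460 821 819
```

Rounding per class means the dev and test sizes can differ by a comment or two from an exact 20%.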

Traditional Neural Models

The traditional neural models comprise two architectures: BiLSTM-Attention and Hybrid-Transformer. Four preprocessing phases were applied to the EESA corpus to improve its quality for training, and additional word features were introduced to enhance model performance. Both models were trained and tested with LSTM and CNN character embedding layers, using Arabic FastText (AFT) for non-contextual embeddings and AraBERT-Twitter for contextual embeddings. Because a comment's polarity depends on the context of its positive and negative words, the AFT embeddings were concatenated with the contextual embeddings. The fixed FastText vectors give the same positive or negative word the same representation wherever it appears, while the contextual vectors preserve the information carried by its surroundings. We experimented with various Arabic and Arabic-English code-switched contextual embeddings; AraBERT-Twitter achieved the highest performance among them.
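A token representation of this kind can be sketched with NumPy: a character-level CNN (convolution over character embeddings followed by max-pooling) produces a character vector that is concatenated with the FastText and AraBERT-Twitter vectors. The sizes (300-d FastText, 768-d AraBERT base, 50 filters, kernel width 3) are assumptions, random arrays stand in for real lookups, and only the CNN variant of the character layer is shown.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed sizes: 300-d Arabic FastText, 768-d AraBERT-Twitter (base), 50 char-CNN filters.
FT_DIM, BERT_DIM = 300, 768
CHAR_VOCAB, CHAR_DIM, N_FILTERS, KERNEL = 100, 25, 50, 3

char_table = rng.normal(size=(CHAR_VOCAB, CHAR_DIM))            # character embeddings
conv_filters = rng.normal(size=(KERNEL * CHAR_DIM, N_FILTERS))  # flattened 1-D conv kernels


def char_cnn(char_ids):
    """Embed characters, apply a width-KERNEL 1-D convolution, then max-pool over positions."""
    x = char_table[char_ids]                                    # (n_chars, CHAR_DIM)
    if len(x) < KERNEL:                                         # pad short words to one window
        x = np.vstack([x, np.zeros((KERNEL - len(x), CHAR_DIM))])
    windows = np.stack([x[i:i + KERNEL].ravel() for i in range(len(x) - KERNEL + 1)])
    return np.maximum(windows @ conv_filters, 0.0).max(axis=0)  # ReLU, then (N_FILTERS,)


def token_vector(fasttext_vec, bert_vec, char_ids):
    """Concatenate non-contextual, contextual, and character-level parts of one token."""
    return np.concatenate([fasttext_vec, bert_vec, char_cnn(char_ids)])


# Random stand-ins for a FastText lookup and an AraBERT-Twitter hidden state.
vec = token_vector(rng.normal(size=FT_DIM), rng.normal(size=BERT_DIM), [5, 17, 42, 8])
print(vec.shape)  # (1118,)
```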

Preprocessing Phases

The following preprocessing phases were applied to the EESA corpus to improve the quality of the trained models. Each phase builds on the previous one.

1- Space Addition: Added spaces between words, dates, and numbers to ensure better segmentation and accurate representation.

2- Symbol Removal: Eliminated website links, newline characters, hashtag symbols, and Arabic diacritics to normalize text representations.

3- Character Normalization: Removed redundant characters and converted English tokens to lowercase for consistency and streamlined vocabulary.

4- Emotion Standardization: Removed repeated emoticons, normalized similar ones into a unified category, and added spaces for better representation.
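The four cumulative phases can be sketched as a chain of regular-expression passes. The text describes each phase only at a high level, so several choices here are assumptions: Phase 1 only separates digits from adjacent Arabic letters, Phase 3 shortens runs of more than `max_repeat` identical characters (a hypothetical threshold), and `EMOJI_GROUPS` is a hypothetical mapping of similar emoticons.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
DIACRITICS_RE = re.compile(r"[\u064B-\u0652\u0670]")  # tanween, harakat, shadda, sukun, dagger alef
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")
# Hypothetical mapping of similar emoticons onto one representative.
EMOJI_GROUPS = {"🤣": "😂", "😹": "😂", "💕": "❤", "💖": "❤", "♥": "❤"}


def add_spaces(text):
    """Phase 1: split digits glued to Arabic letters, e.g. '2022فيلم' -> '2022 فيلم'."""
    text = re.sub(r"(\d)([\u0621-\u064A])", r"\1 \2", text)
    return re.sub(r"([\u0621-\u064A])(\d)", r"\1 \2", text)


def remove_symbols(text):
    """Phase 2: drop links, newlines, hashtag symbols, and Arabic diacritics."""
    text = URL_RE.sub(" ", text).replace("\n", " ").replace("#", "")
    return DIACRITICS_RE.sub("", text)


def normalize_chars(text, max_repeat=2):
    """Phase 3: shorten character runs longer than max_repeat; lowercase English."""
    text = re.sub(r"(.)\1{%d,}" % max_repeat, r"\1" * max_repeat, text)
    return text.lower()


def standardize_emoticons(text):
    """Phase 4: unify similar emoticons, drop repeats, and space them out."""
    text = text.replace("\uFE0F", "")  # strip emoji variation selectors
    text = EMOJI_RE.sub(lambda m: EMOJI_GROUPS.get(m.group(), m.group()), text)
    text = re.sub(r"(%s)\1+" % EMOJI_RE.pattern, r"\1", text)  # repeated emoticon -> one
    text = EMOJI_RE.sub(lambda m: " %s " % m.group(), text)
    return " ".join(text.split())


def preprocess(text):
    # Each phase runs on the output of the previous one.
    for phase in (add_spaces, remove_symbols, normalize_chars, standardize_emoticons):
        text = phase(text)
    return text


print(preprocess("الفيلم ده حلووووو 😂🤣😂 #Egypt https://youtu.be/xyz\nBEST 2022فيلم"))
# الفيلم ده حلوو 😂 egypt best 2022 فيلم
```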

Additional Word Features

Each word embedding was extended with 19 novel binary features, enhancing the model's ability to capture nuanced linguistic patterns. A sentiment lexicon was constructed from the EESA corpus, containing 871 positive words, 520 positive compound phrases, 571 negative words, and 362 negative compound phrases. This lexicon was merged with NileULex, which comprises 5,953 words and phrases in Modern Standard Arabic and Egyptian Arabic. The positive, negative, and neutral features were derived from the merged lexicon.
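Because the lexicon contains compound phrases, multi-word entries should be matched before single words; otherwise "مش حلو" (not nice) would be tagged positive through "حلو". The sketch below assumes a greedy longest-match strategy and a plain phrase-to-polarity dictionary; `merge_lexicons`, `tag_phrases`, `max_len`, and the precedence rule on conflicts are all hypothetical.

```python
def merge_lexicons(nileulex, eesa):
    """Combine two phrase -> polarity dicts; on conflicts the EESA entry wins (an assumption)."""
    return {**nileulex, **eesa}


def tag_phrases(tokens, lexicon, max_len=4):
    """Greedy longest-match tagging of lexicon words and compound phrases.

    Returns per-token polarity tags ("pos", "neg", or "neu") and the matched phrases.
    """
    tags = ["neu"] * len(tokens)
    matches = []
    i = 0
    while i < len(tokens):
        for n in range(min(max_len, len(tokens) - i), 0, -1):  # longest span first
            phrase = " ".join(tokens[i:i + n])
            if phrase in lexicon:
                tags[i:i + n] = [lexicon[phrase]] * n
                matches.append((phrase, lexicon[phrase]))
                i += n
                break
        else:  # no entry starts at token i
            i += 1
    return tags, matches


lexicon = merge_lexicons({"حلو": "pos"}, {"مش حلو": "neg", "waste of time": "neg"})
tags, matches = tag_phrases("الفيلم مش حلو خالص waste of time".split(), lexicon)
```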

Lists of negation words, intensified tokens, and named entities were extracted from the EESA corpus to derive the negation, intensified, and named entity features. In addition, a compound phrase feature captures multi-word expressions conveying a specific sentiment; each identified compound phrase is assigned both the compound phrase feature and its polarity feature. The repeated character feature flags words with more than two consecutive repeated characters, and the contextual feature flags non-neutral words and phrases. The remaining features cover emoticons, text, numbers (Arabic and English), words (Arabic and English), letter case (lowercase, uppercase, mixed case), and others.
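A per-token feature extractor might look like the sketch below. It covers 16 illustrative flags rather than the full 19, which are not all spelled out, and the word lists are hypothetical stand-ins for the lists extracted from EESA. Since the Character Normalization phase lowercases English tokens, case flags are assumed to be computed on the original casing.

```python
import re

# Hypothetical word lists; the real lists were extracted from the EESA corpus.
NEGATIONS = {"مش", "مفيش", "not", "never"}
INTENSIFIERS = {"جدا", "خالص", "very", "so"}
NAMED_ENTITIES = {"محمد", "رمضان"}

ARABIC_WORD = re.compile(r"[\u0621-\u064A]+")
ENGLISH_WORD = re.compile(r"[A-Za-z]+")
REPEATED = re.compile(r"(.)\1\1")  # a character followed by two more copies of itself
EMOTICON = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def word_features(token, polarity, in_compound=False):
    """Binary features for one token (an illustrative subset of the 19).

    polarity: "pos", "neg" or "neu", e.g. from a lexicon lookup.
    """
    key = token.lower()
    english = ENGLISH_WORD.fullmatch(token) is not None
    flags = {
        "positive": polarity == "pos",
        "negative": polarity == "neg",
        "neutral": polarity == "neu",
        "contextual": polarity != "neu",
        "compound_phrase": in_compound,
        "negation": key in NEGATIONS,
        "intensified": key in INTENSIFIERS,
        "named_entity": key in NAMED_ENTITIES,
        "repeated_chars": REPEATED.search(token) is not None,
        "emoticon": EMOTICON.search(token) is not None,
        "arabic_word": ARABIC_WORD.fullmatch(token) is not None,
        "english_word": english,
        "number": token.isdigit(),  # also true for Arabic-Indic digits
        "lowercase": english and token.islower(),
        "uppercase": english and token.isupper(),
        "mixed_case": english and not token.islower() and not token.isupper(),
    }
    return {name: int(flag) for name, flag in flags.items()}
```

The resulting 0/1 values would be appended to the token's embedding vector.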

BiLSTM-Attention Model

The Bidirectional Long Short-Term Memory (BiLSTM) and self-attention layer are critical components of the BiLSTM-Attention model. The BiLSTM layer mitigates the vanishing gradient problem and captures long-term dependencies between words. The self-attention layer assigns different weights to words based on their importance. It can learn to assign more weight to positive and negative words, enhancing the model performance. The BiLSTM and Attention components empower the model by preserving context and identifying word importance. The model consists of an embedding layer, a BiLSTM layer, a self-attention layer, a dense layer, and a softmax output layer.
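The attention step can be sketched in NumPy using a common additive formulation: score each BiLSTM output, normalize the scores with a softmax, and take the weighted sum as the sentence vector. The exact attention variant and layer sizes are not specified, so the dimensions are assumptions, random arrays stand in for trained weights and real BiLSTM outputs, and the dense and softmax layers are collapsed into a single projection.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def attention_pool(H, W, b, u):
    """Additive self-attention over BiLSTM outputs.

    H: (seq_len, 2 * hidden), forward and backward states concatenated per token.
    Returns per-token weights (summing to 1) and the weighted sentence vector.
    """
    scores = np.tanh(H @ W + b) @ u  # (seq_len,) relevance score per token
    alpha = softmax(scores)
    return alpha, alpha @ H          # (seq_len,), (2 * hidden,)


seq_len, hidden, att_dim, n_classes = 7, 64, 32, 3
H = rng.normal(size=(seq_len, 2 * hidden))  # stand-in for real BiLSTM outputs
alpha, sentence = attention_pool(H, rng.normal(size=(2 * hidden, att_dim)),
                                 np.zeros(att_dim), rng.normal(size=att_dim))
W_dense = rng.normal(size=(2 * hidden, n_classes))
probs = softmax(sentence @ W_dense)  # positive / negative / neutral
```

After training, `alpha` is where larger weights on sentiment-bearing words would show up.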

Hybrid-Transformer Model

The Hybrid-Transformer model is novel in two respects: its modified positional embeddings and a post-embedding weight layer inserted after them. Rather than summing positional embeddings with the token embeddings, as in the standard Transformer, the model concatenates them in front of the word and character embeddings. This increases the embedding size of each token while leaving the word and character embeddings unchanged, so the non-contextual part remains identical for identical tokens. Each token embedding therefore contains positional, non-contextual, contextual, additional word feature, and character parts. On its own, the model cannot tell these parts apart during training, so the post-embedding weight layer was introduced. This layer assigns a different weight percentage to each embedding part, helping the model exploit the distinct information each part carries. The initial weights of the post-embedding layer are updated after each epoch.
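The concatenated embedding and the post-embedding weights can be sketched in NumPy. Several details are assumptions: sinusoidal positional encodings of a hypothetical size 16, random stand-ins for the other parts, and each part's scale initialized as 1 + pct/100 as one reading of "extra weight". In the model itself these scales would be trainable.

```python
import numpy as np

rng = np.random.default_rng(0)


def sinusoidal_positions(seq_len, dim):
    """Sinusoidal position encodings (an assumed choice; the text does not name one)."""
    pos = np.arange(seq_len)[:, None]
    i = np.arange(dim)[None, :]
    angle = pos / np.power(10000.0, (2 * (i // 2)) / dim)
    return np.where(i % 2 == 0, np.sin(angle), np.cos(angle))


def hybrid_embeddings(parts, weights_pct):
    """Concatenate embedding parts and scale each by its post-embedding weight.

    parts: [positional, non-contextual, contextual, word features, character],
    each of shape (seq_len, d_part). weights_pct: one percentage per part.
    """
    scales = [1.0 + p / 100.0 for p in weights_pct]  # hypothetical initialisation
    return np.concatenate([s * part for s, part in zip(scales, parts)], axis=-1)


seq_len = 6
parts = [
    sinusoidal_positions(seq_len, 16),       # positional (hypothetical size)
    rng.normal(size=(seq_len, 300)),         # Arabic FastText stand-in
    rng.normal(size=(seq_len, 768)),         # AraBERT-Twitter stand-in
    rng.integers(0, 2, size=(seq_len, 19)),  # 19 binary word features
    rng.normal(size=(seq_len, 50)),          # character embedding stand-in
]
X = hybrid_embeddings(parts, (2.5, 10, 55, 30, 2.5))  # Table 2's best combination
print(X.shape)  # (6, 1153)
```

Because the parts are concatenated rather than summed, the Transformer blocks downstream need a model width equal to the total size, 1153 in this sketch.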

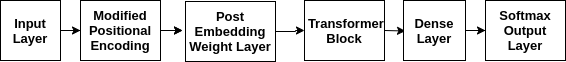

Figure 1: Architecture of the Hybrid-Transformer model.

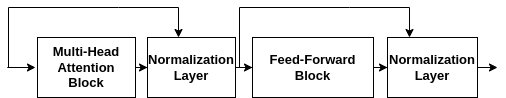

Figure 2: Design of the Transformer Block.

Advanced Language Models

Advanced language models such as Gemini and GPT have transformed the natural language processing (NLP) field. They are trained on large, diverse datasets spanning numerous languages, which lets them interpret informal text and respond effectively to users. They are particularly suitable for Arabic-English code-switched sentiment analysis because their tokenizers support both Arabic and English, removing the need for the additional preprocessing phases. Furthermore, they can operate as zero-shot models, performing sentiment analysis without a task-specific training dataset. This simplifies implementation and significantly reduces costs, since no labeled training data is needed.

Some Gemini and GPT models can also be fine-tuned for a specific task, further improving their performance. The fine-tuned models were trained on the entire training set. Gemini-1.5 was not available for fine-tuning at the time of this work, so Gemini-1.0 was the only Gemini model fine-tuned. As a novel step, the annotation guidelines given to the human annotators were also included in the prompt for the zero-shot models. In addition, sentiment cues were incorporated into some fine-tuned models during both training and testing.
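A zero-shot prompt that embeds the annotation guidelines, plus a parser for the model's reply, can be sketched as below. The guideline text is a hypothetical condensed version, since the full guidelines are not reproduced in the text. Sending the prompt to Gemini or GPT would go through the vendor's SDK, which is not shown. Falling back to "neutral" when no label is found is a design assumption.

```python
# Hypothetical condensed guidelines; the full text given to annotators is not reproduced here.
GUIDELINES = """\
Judge the sentiment from the author's perspective, not the reader's.
- positive: the author praises, supports, or is pleased with the subject.
- negative: the author criticizes or mocks the subject, including through sarcasm.
- neutral: the author states facts, asks questions, or expresses no clear opinion."""

LABELS = ("positive", "negative", "neutral")


def build_zero_shot_prompt(comment, guidelines=GUIDELINES):
    """Assemble a zero-shot prompt that embeds the annotation guidelines."""
    return (
        "Classify the sentiment of this Egyptian Arabic-English code-switched "
        "YouTube comment.\n\n"
        f"Annotation guidelines:\n{guidelines}\n\n"
        f"Comment: {comment}\n"
        "Answer with one word: positive, negative, or neutral."
    )


def parse_label(reply):
    """Return the first label mentioned in the model's reply, or 'neutral' if none is."""
    reply = reply.lower()
    hits = [(reply.find(label), label) for label in LABELS if label in reply]
    return min(hits)[1] if hits else "neutral"


prompt = build_zero_shot_prompt("الفيلم ده really amazing")
```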

The sentiment cues follow this template:

- Positive words or phrases: {positiveWordsList}

- Negative words or phrases: {negativeWordsList}

- Named entities: {namedEntitiesList}

- Words with repeated characters: {repeatedCharsWordsList}

- Intensified words: {intensifiedWordsList}

- Negation words: {negationWordsList}

Lists of negation words, intensified tokens, and named entities were extracted from the EESA corpus, along with a comprehensive sentiment lexicon.

Each term in curly braces is a placeholder for one linguistic feature category found in a comment. For instance, {positiveWordsList} holds positive sentiment words or phrases, while {negativeWordsList} holds negative ones. {namedEntitiesList} highlights specific entities, such as people, organizations, or locations. {repeatedCharsWordsList} captures words with repeated characters, commonly used to convey emphasis or emotion (e.g., "woooow"). {intensifiedWordsList} contains intensified words expressing stronger sentiment (e.g., "very"), and {negationWordsList} identifies words that negate sentiment (e.g., "not"). Together, these categories help the model assess the overall sentiment of a comment.
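A fine-tuning example with sentiment cues can be sketched by filling the template above and writing one JSONL line in the chat-message format (`{"messages": [...]}` with system, user, and assistant turns) that OpenAI's fine-tuning accepts. The system instruction, the user-message layout, and rendering empty categories as "none" are hypothetical choices; the cue lists in the example are made up.

```python
import json

CUE_TEMPLATE = """\
- Positive words or phrases: {positiveWordsList}
- Negative words or phrases: {negativeWordsList}
- Named entities: {namedEntitiesList}
- Words with repeated characters: {repeatedCharsWordsList}
- Intensified words: {intensifiedWordsList}
- Negation words: {negationWordsList}"""

SYSTEM = "Classify the comment's sentiment as positive, negative, or neutral."


def format_cues(cues):
    """Fill the cue template; an empty category is written as 'none' (an assumption)."""
    return CUE_TEMPLATE.format(**{key: ", ".join(words) or "none" for key, words in cues.items()})


def to_finetune_record(comment, label, cues):
    """One JSONL line in the chat-message format used for OpenAI fine-tuning."""
    user = f"Comment: {comment}\nSentiment cues:\n{format_cues(cues)}"
    return json.dumps(
        {"messages": [
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": user},
            {"role": "assistant", "content": label},
        ]},
        ensure_ascii=False,
    )


cues = {
    "positiveWordsList": ["حلو", "loved"],
    "negativeWordsList": [],
    "namedEntitiesList": [],
    "repeatedCharsWordsList": [],
    "intensifiedWordsList": ["جدا", "really"],
    "negationWordsList": [],
}
line = to_finetune_record("الفيلم ده حلو جدا really loved it", "positive", cues)
```

At test time the same user message would be built without the assistant turn, so the cues are present in both phases.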

Experiments and Results

This section compares the performance of the traditional neural models and the advanced language models. The traditional neural models were evaluated across the preprocessing phases with additional word features, and the Hybrid-Transformer model was further evaluated with different weight combinations in its post-embedding weight layer. Results for an ensemble combining the two traditional neural models are also reported. The advanced language models were evaluated as zero-shot models and, where supported, in fine-tuned configurations. All models were evaluated using the micro F1-score.
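For single-label, three-class classification, micro-averaged F1 pools true positives, false positives, and false negatives across classes. Every misclassified comment counts once as a false positive (for the predicted class) and once as a false negative (for the true class), so micro-F1 equals accuracy whenever every prediction is one of the labels. A small sketch with made-up labels:

```python
def micro_f1(y_true, y_pred, labels=("positive", "negative", "neutral")):
    """Micro-averaged F1: pool true/false positives and false negatives over classes."""
    tp = fp = fn = 0
    for label in labels:
        for t, p in zip(y_true, y_pred):
            tp += t == label and p == label
            fp += t != label and p == label
            fn += t == label and p != label
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


y_true = ["positive", "negative", "neutral", "positive", "neutral"]
y_pred = ["positive", "neutral", "neutral", "positive", "negative"]
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(micro_f1(y_true, y_pred), accuracy)  # both about 0.6
```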

Traditional Neural Models Results

Table 1 presents the results of models trained with additional word features across various preprocessing phases.

Table 1: The F1-score of all models that were trained with additional word features

| Preprocessing Phase | BiLSTM-Attention (Dev) | BiLSTM-Attention (Test) | Hybrid-Transformer (Dev) | Hybrid-Transformer (Test) |
|---|---|---|---|---|
| Space Addition | 91.69% | 89.61% | 90.22% | 90.34% |
| Symbol Removal | 91.93% | 89.98% | 90.10% | 87.90% |
| Character Normalization | 91.20% | 90.10% | 89.73% | 87.41% |
| Emotion Standardization | 92.91% | 91.44% | 90.95% | 91.20% |

Table 2 shows the results of the best Hybrid-Transformer model with different weight combinations in the post-embedding weight layer; only the top four combinations are shown for brevity. The combination (2.5,10,55,30,2.5) improved the test F1-score to 91.56%. This combination assigns extra weights of 2.5%, 10%, 55%, 30%, and 2.5% to the positional, non-contextual, contextual, additional word feature, and character embedding parts, respectively.

Table 2: Best Hybrid-Transformer with various post-embedding weight combinations

| Combinations | Dev | Test |
|---|---|---|
| (2.5,10,55,30,2.5) | 91.56% | 91.56% |
| (5,20,40,25,10) | 91.44% | 90.71% |
| (10,20,30,25,15) | 90.83% | 89.85% |
| (5,20,35,20,20) | 91.44% | 90.10% |

The ensemble combines the outputs of the two models in one of two ways. The first is average voting. The second feeds the output layers of both models (3 units each) into a hidden layer with 6 units, followed by a softmax output layer with 3 neurons. This second method was also varied by adding an extra 6-unit hidden layer in one version and removing the hidden layer in another. Table 3 presents the results of these ensemble techniques applied to the top BiLSTM-Attention and Hybrid-Transformer models. The ensemble with one hidden layer achieved the highest test F1-score of 92.54%.
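Both ensemble methods can be sketched in NumPy. Average voting averages the two models' class probabilities. The stacking head concatenates the 3 + 3 outputs, applies a 6-unit hidden layer, and ends in a 3-way softmax. The ReLU activation is an assumption, the text does not say which data the head was trained on, and the random weights here stand in for trained ones.

```python
import numpy as np

rng = np.random.default_rng(0)


def softmax(z):
    e = np.exp(z - z.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


def average_voting(p_a, p_b):
    """Average the two models' class probabilities and pick the top class."""
    return ((p_a + p_b) / 2).argmax(axis=-1)


def stacked_forward(p_a, p_b, W1, b1, W2, b2):
    """Concatenate 3 + 3 outputs, pass through a 6-unit hidden layer, then a 3-way softmax."""
    x = np.concatenate([p_a, p_b], axis=-1)  # (batch, 6)
    h = np.maximum(x @ W1 + b1, 0.0)         # ReLU: an assumed activation
    return softmax(h @ W2 + b2)              # (batch, 3)


p_bilstm = np.array([[0.7, 0.2, 0.1], [0.1, 0.3, 0.6]])
p_hybrid = np.array([[0.4, 0.5, 0.1], [0.2, 0.2, 0.6]])
votes = average_voting(p_bilstm, p_hybrid)
probs = stacked_forward(p_bilstm, p_hybrid,
                        rng.normal(size=(6, 6)), np.zeros(6),
                        rng.normal(size=(6, 3)), np.zeros(3))
```

The "no hidden layer" variant maps the 6 concatenated values directly to the 3-way softmax, and the "two hidden layers" variant adds a second 6-unit layer before it.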

Table 3: The F1-score of using different ensemble techniques

| Ensemble Approach | Dev | Test |
|---|---|---|
| Average voting | 92.05% | 92.18% |
| No hidden layer | 92.18% | 91.56% |
| One hidden layer | 92.30% | 92.54% |
| Two hidden layers | 92.05% | 92.30% |

Advanced Language Models Results

Table 4 presents the results of different Gemini and GPT models, including both zero-shot and fine-tuned configurations. The fine-tuned GPT-4o with sentiment cues achieved the highest test F1-score of 95.35%.

Table 4: The F1-score of the Zero-Shot and fine-tuned models

| Model | Dev | Test |
|---|---|---|
| Gemini-1.0-pro | 77.02% | 77.14% |
| Gemini-1.0-pro+annotation guidelines | 83.74% | 84.47% |
| Fine-tuned Gemini-1.0-pro | 89.36% | 88.63% |
| Gemini-1.5-pro-latest | 88.75% | 88.26% |
| Gemini-1.5-pro+annotation guidelines | 91.20% | 90.22% |
| GPT-4o | 86.92% | 85.57% |
| GPT-4o+annotation guidelines | 89.61% | 89.73% |
| Fine-tuned GPT-4o | 94.50% | 94.74% |
| Fine-tuned GPT-4o+sentiment cues | 94.87% | 95.35% |

Conclusion and Future Work

We introduced the EESA corpus, comprising 4,100 code-switched comments; it is the first corpus for the Egyptian Arabic-English code-switched SA task. A sentiment lexicon containing 2,324 positive and negative words and compound phrases was also constructed from the EESA corpus.

The sentiment analysis models fall into two groups: traditional neural models (BiLSTM-Attention and Hybrid-Transformer) and advanced language models (Gemini and GPT). The traditional neural models performed best with Arabic FastText and AraBERT-Twitter embeddings, further improved by the Emotion Standardization phase and the additional word features. The BiLSTM-Attention and Hybrid-Transformer models achieved test F1-scores of 91.44% and 91.56%, respectively, and an ensemble of the two with one hidden layer reached 92.54%. Among the zero-shot models, Gemini-1.5-pro with annotation guidelines reached the highest test F1-score of 90.22%. Overall, the fine-tuned GPT-4o model with sentiment cues achieved the highest test F1-score of 95.35%.

This project offers valuable insights for Egyptian bilingual users, enabling them to better monitor social media sentiment and make more informed decisions. Advanced language models can be adapted to other Arabic dialects and Arabic-English code-switched data through fine-tuning, broadening their applicability. Despite its substantial size, the EESA corpus is limited by class imbalance, so future work will explore data augmentation techniques to address it. Exploring various prompt engineering techniques for advanced language models also remains a promising avenue for improving generalization and adaptability in future sentiment analysis tasks.