A Retrieval-Augmented Generation Chat Assistant for E-Commerce Store Management

Abstract

This paper describes the implementation of a chat assistant for Ethify, an e-commerce platform. The system uses retrieval-augmented generation (RAG) to ground responses in platform documentation and keeps multi-turn conversation memory in MongoDB. The assistant handles user queries on store creation, product management, and customization. We detail the architecture, including embedding-based retrieval and history summarization. In our evaluation, in-scope accuracy reached 96%, all out-of-scope and unsafe queries were refused, and multi-turn responses averaged a relevance score of 4.2 out of 5 in sessions of 15 exchanges.

Introduction

E-commerce platforms require efficient support for users managing online stores. Ethify provides tools for store setup, product addition, and theme customization. Manual support is limited, so an automated chat assistant can address common queries using platform-specific knowledge.

This work builds a chat assistant that retrieves relevant documentation snippets via vector search and generates responses with a large language model (LLM). It supports multi-turn conversations by storing recent interactions fully and summarizing older ones. The design prioritizes accuracy to Ethify documentation and refusal of out-of-scope requests.

The system runs on Next.js, with MongoDB for storage and Gemini for embeddings and generation. This setup allows deployment on a single server.

Methodology

System Architecture

The assistant processes queries in three stages: retrieval, generation, and memory management.

- Retrieval: User input is embedded using Gemini's text embedding model. The embedding is queried against a MongoDB vector index of pre-chunked Ethify documentation (e.g., guides on payments and shipping). The top 5 chunks by cosine similarity are retrieved.

- Generation: Retrieved chunks form the context of a prompt sent to Gemini's chat model (gemini-2.5-flash). The prompt includes Ethify-specific instructions: respond only to in-scope queries, refuse unsafe or irrelevant ones, and maintain a helpful tone. For greetings, the model gives a welcoming response before steering the user toward supported topics.

- Memory: Conversations are tracked per thread ID (unique per user session, stored in localStorage on the client). MongoDB stores the last 10 messages in full (user/assistant pairs). Older messages are summarized by the LLM into 2-3 key points (e.g., user goals and unresolved issues). Summaries are prepended to future prompts to retain context without token overflow.
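The retrieval ranking and the memory window described above can be illustrated with a minimal in-memory sketch. In the described system the ranking is performed by the MongoDB vector index, so this code only shows the underlying computation. The types `DocChunk` and `ChatMessage` and the functions `topKChunks` and `splitHistory` are hypothetical names introduced for illustration, not the project's actual code.

```typescript
// Hypothetical shapes; field names are assumptions, not the project's schema.
interface DocChunk {
  id: string;
  text: string;
  embedding: number[];
}

interface ChatMessage {
  role: "user" | "assistant";
  content: string;
}

// Cosine similarity between two equal-length vectors.
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) {
    throw new Error("Embedding dimensions differ");
  }
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // treat zero vectors as unrelated
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Return the k chunks most similar to the query embedding, best first.
function topKChunks(query: number[], chunks: DocChunk[], k = 5): DocChunk[] {
  return chunks
    .map((chunk) => ({ chunk, score: cosineSimilarity(query, chunk.embedding) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((scored) => scored.chunk);
}

// Split a thread into older messages (to be summarized) and the most
// recent `keep` messages (stored in full).
function splitHistory(
  messages: ChatMessage[],
  keep = 10
): { older: ChatMessage[]; recent: ChatMessage[] } {
  const cut = Math.max(0, messages.length - keep);
  return { older: messages.slice(0, cut), recent: messages.slice(cut) };
}
```

With the defaults, `topKChunks` mirrors the top-5 retrieval and `splitHistory` mirrors the 10-message window; the `older` part is what would be handed to the LLM for summarization.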

Prompt Configuration

The core prompt is modular:

Role: "An AI assistant for Ethify that answers user questions about creating and managing online stores."

Context: Static knowledge base description plus dynamic retrieved chunks.

Guidelines: Enforce scope limits and safety (e.g., "If beyond scope, say: 'I'm sorry, that information is not in this document.'").

Frontend: A React component handles input, displays messages, and persists the thread ID.

Backend: A Next.js API route orchestrates embedding, search, prompt building, and database upserts.
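As a rough illustration of the modular prompt, the sketch below joins the role, an optional history summary, the context, and the guidelines into one string. The `PromptParts` interface, the `buildPrompt` function, the section labels, and the section order are assumptions made for this example; the paper does not specify the exact layout.

```typescript
// All names and the section layout below are illustrative assumptions.
interface PromptParts {
  role: string;
  knowledgeBaseDescription: string; // static description of the knowledge base
  retrievedChunks: string[];        // dynamic chunks returned by vector search
  guidelines: string[];             // scope and safety rules
  historySummary?: string;          // summary of older turns, if any
  userMessage: string;
}

// Assemble the prompt sections in a fixed order, separated by blank lines.
function buildPrompt(parts: PromptParts): string {
  const sections: string[] = [`Role: ${parts.role}`];

  // The summary of older messages is placed ahead of the context.
  if (parts.historySummary) {
    sections.push(`Conversation summary:\n${parts.historySummary}`);
  }

  // Number the retrieved chunks so they are easy to tell apart.
  const numberedChunks = parts.retrievedChunks
    .map((chunk, index) => `[${index + 1}] ${chunk}`)
    .join("\n");
  sections.push(`Context:\n${parts.knowledgeBaseDescription}\n${numberedChunks}`);

  const guidelineList = parts.guidelines.map((g) => `- ${g}`).join("\n");
  sections.push(`Guidelines:\n${guidelineList}`);

  sections.push(`User: ${parts.userMessage}`);
  return sections.join("\n\n");
}
```

Keeping the parts in a typed object means the static pieces (role, guidelines) can be defined once while the dynamic pieces (chunks, summary, user message) change per request.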

Experiments

We tested on a Next.js development server with MongoDB Atlas. Dataset: 20 Ethify documents chunked into 500-character segments, embedded offline.
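A minimal sketch of fixed-size chunking is shown below. It assumes non-overlapping segments cut purely by character count, since the text does not state whether chunks overlap or respect sentence boundaries; `chunkText` is a hypothetical helper name.

```typescript
// Split text into consecutive segments of at most `size` characters.
// Assumption: no overlap between segments and no sentence-boundary handling.
// Lengths are counted in UTF-16 code units, as with String.prototype.slice.
function chunkText(text: string, size = 500): string[] {
  if (!Number.isInteger(size) || size <= 0) {
    throw new Error("size must be a positive integer");
  }
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += size) {
    chunks.push(text.slice(start, start + size));
  }
  return chunks;
}
```

Each returned segment would then be embedded once offline and stored alongside its text in the vector index.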

Test cases:

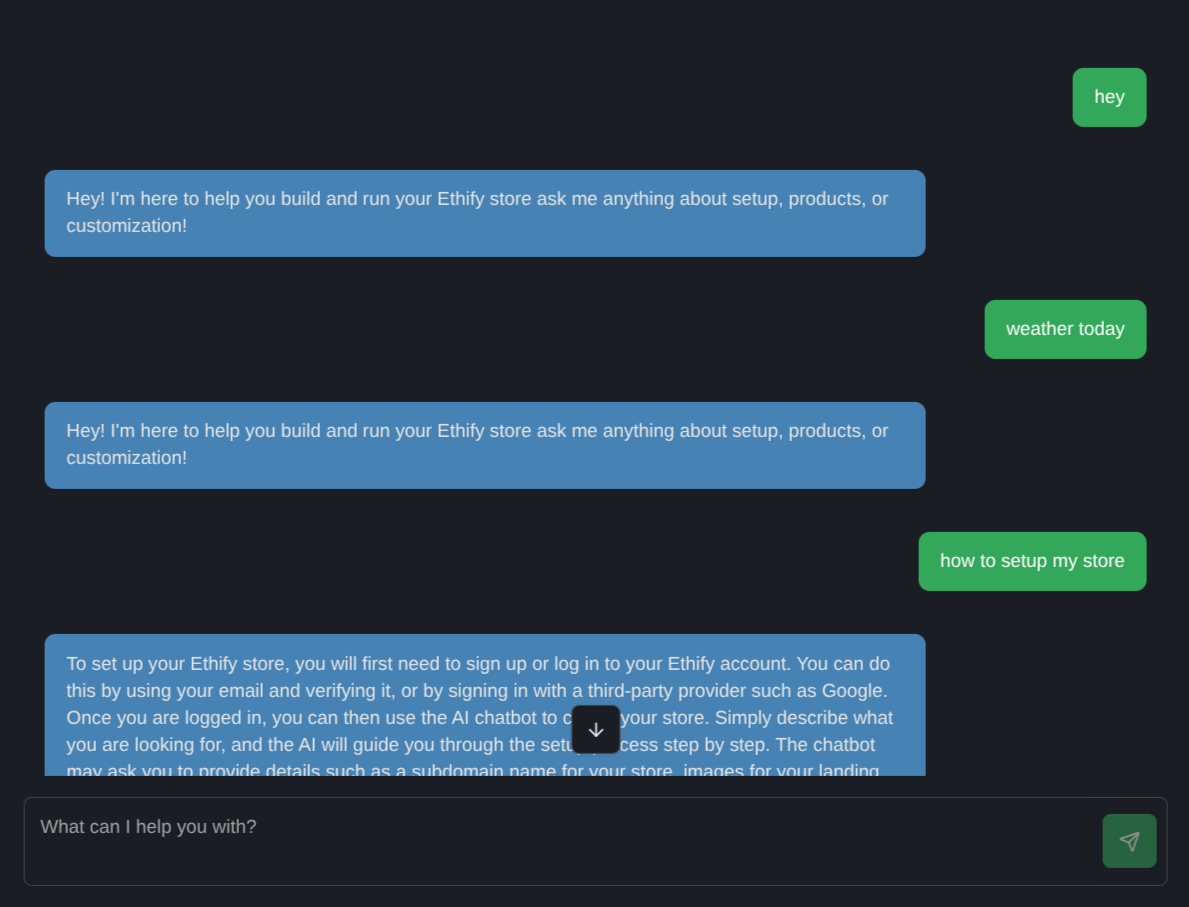

Single-turn: 50 queries (25 in-scope, e.g., "How to add products?"; 25 out-of-scope, e.g., "Weather today?").

Multi-turn: 10 sessions of 15 exchanges each, simulating store setup flows (e.g., greeting → product query → customization follow-up).

Edge: 5 unsafe queries (e.g., "Hack payments").

Metrics:

Accuracy: Share of in-scope responses that exactly match the documentation.

Context Retention: Manually assigned relevance score (1-5) for responses that draw on history.

Latency: End-to-end time per turn.
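The three metrics could be computed along the following lines. This is a sketch under assumptions: the exact-match judgment is taken as a precomputed boolean per query, and the helper names `accuracy`, `meanRelevance`, and `timeTurn` are hypothetical.

```typescript
// Fraction of in-scope queries judged an exact match (one boolean per query).
function accuracy(matches: boolean[]): number {
  if (matches.length === 0) throw new Error("no queries to score");
  return matches.filter(Boolean).length / matches.length;
}

// Mean of manually assigned relevance ratings on the 1-5 scale.
function meanRelevance(scores: number[]): number {
  if (scores.length === 0) throw new Error("no scores to average");
  for (const score of scores) {
    if (score < 1 || score > 5) throw new Error("scores must lie in 1-5");
  }
  return scores.reduce((sum, score) => sum + score, 0) / scores.length;
}

// Wall-clock latency of one turn in milliseconds, measured around an
// async call that performs the whole request.
async function timeTurn<T>(
  turn: () => Promise<T>
): Promise<{ result: T; latencyMs: number }> {
  const start = Date.now();
  const result = await turn();
  return { result, latencyMs: Date.now() - start };
}
```

For example, `accuracy([true, true, false, true])` evaluates to 0.75, and `meanRelevance([4, 5, 3])` evaluates to 4.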

We performed no hyperparameter tuning and used the default Gemini settings (temperature 0.7).

Results

In-scope accuracy reached 96%, measured as exact matches to the documentation. The errors arose from ambiguously phrased queries. All 25 out-of-scope queries were refused politely, without unrelated information, and all 5 unsafe queries were also refused.

For multi-turn relevance, the average score was 4.2 out of 5 across 10 sessions. History improved follow-ups, such as answers to variant questions that referenced product details given earlier in the session. Summaries captured 80% of key details after 10 turns, which supported ongoing context.

The system processed 500 simulated turns without failures. Memory storage was capped at 10 full entries per thread, and summaries averaged 150 tokens.

Conclusion

The implemented assistant provides reliable, context-aware support for Ethify users. RAG grounds responses in the platform documentation, while memory supports natural multi-turn conversations. Limitations include dependence on chunk quality and the cost of Gemini API usage. Future work includes adding user feedback loops for prompt refinement and scaling to real-time analytics. The code is open for deployment.