Introduction

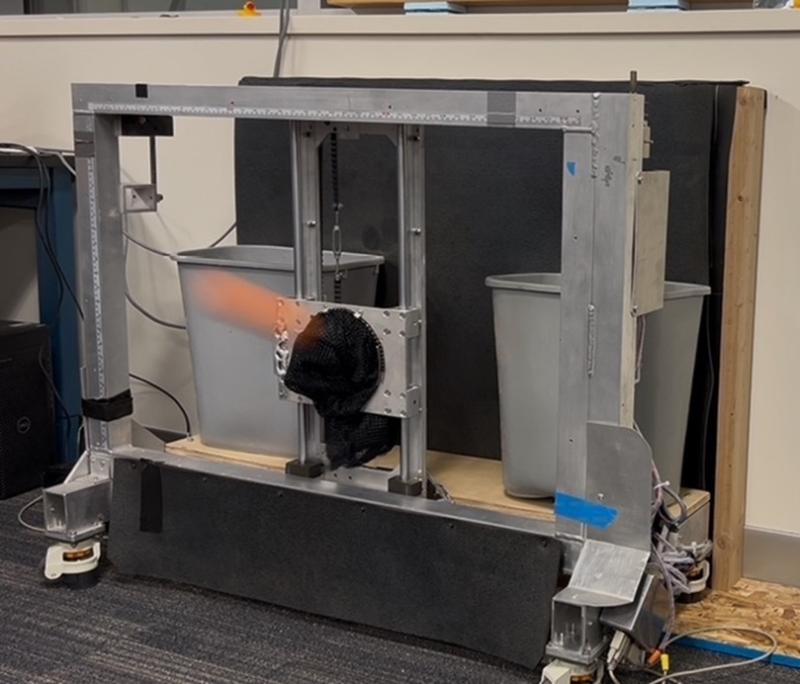

As part of Dr. D. J. Lee's Robotic Vision course at BYU, I developed software to automatically track and catch baseballs using real-time visual input. With launch speeds approaching 60 mph, the system had only a fraction of a second to detect, track, and predict the ball’s landing point. Despite this challenge, it caught 17 out of 20 throws; the remaining 3 narrowly missed, striking the rim of the catching mechanism.

How it works:

✅ Stereo Vision Calibration: Calibrate a stereo camera system to determine the precise rotation and translation between the two cameras

📸 Real-time Ball Detection: Capture images using the stereo system

🎯 Contour Mapping: Dynamically track the ball using a contour map on the absolute difference between images

🛠 3D Positioning: Map 2D pixel coordinates into 3D space using stereo calibration parameters

📈 Trajectory Prediction: Fit a 2nd-degree polynomial to the 3D points to estimate the ball’s flight path

🤖 Automated Catching: Position the catcher to intercept the ball at the predicted impact point

From the perspective of the cameras, here is an example of the tracking results:

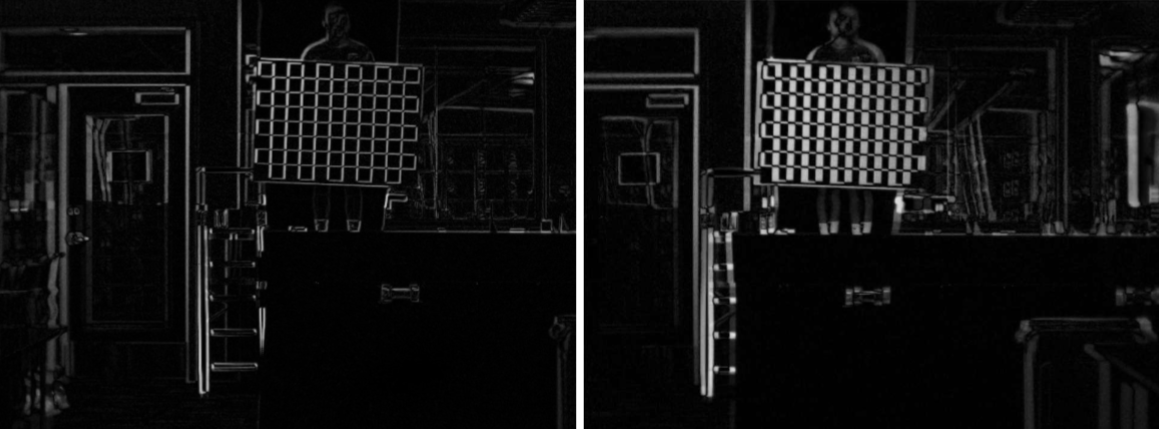

Stereo Calibration

The first step of the project was to calibrate a stereo system of cameras. I gathered three sets of images:

- Chess boards from the left camera

- Chess boards from the right camera

- Chess boards from both cameras

The first two sets were used to find the intrinsic and distortion parameters of each individual camera. The last set was used to find the extrinsic parameters (rotation matrix and translation vector) between the two cameras.

After the images were captured, I used OpenCV to calibrate each camera. I then used the function cv.stereoCalibrate() to obtain the rotation and translation between the cameras, along with the essential and fundamental matrices. Finally, I used the stereo calibration parameters to rectify each image so that the epipolar lines are horizontal. An image showing the absolute differences after rectification is shown below:

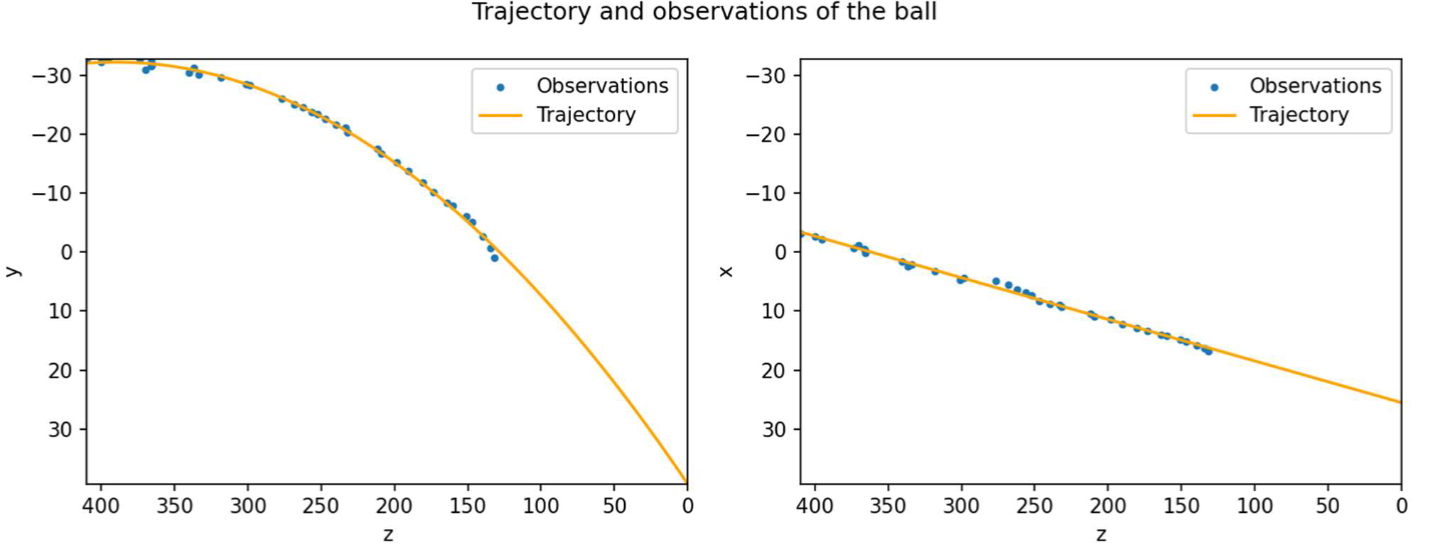

3D Trajectory

The next step was to design a system to track the baseball on each camera and then use the coordinates to estimate the 3D trajectory of the moving baseball.

Step 1: My first goal was to locate the baseball in each frame by finding its pixel coordinates as it traveled toward each camera. I used OpenCV to compute the absolute difference between images, then found the largest contour in the difference image that met a specified threshold. To reduce computation time and increase accuracy, I also defined a region of interest in each image based on the location of the baseball in the previous frame. An example output for one frame in this task is shown below. The red box is the region of interest, the green box is the contour, and the red dot is the estimated location of the baseball.

Step 2: Next, I used the pixel coordinates of the baseball from both cameras (in the same frame) to estimate its 3D position relative to the midpoint between the cameras. I first discarded all frames where the ball was detected by only one camera. Then, for each frame where both cameras detected the ball, I undistorted the pixel coordinates and passed them into cv.triangulatePoints() to obtain a 3D estimate of the ball’s position, offsetting the result by the distance between the cameras and the catcher. Finally, using the 3D points from each frame, I fit a line to predict the x-coordinate and a quadratic to predict the y-coordinate at the moment the ball reaches z = 0 (i.e., the point of impact). For results, see the image below:

Live Baseball Catcher

Finally, using the results from the previous two tasks, I wrote software to perform catches in real time. I incorporated the algorithm into a script that captures frames from both cameras, detects the ball’s position in each frame, reconstructs its 3D coordinates, and estimates its trajectory from the observed motion. Given this estimate, the script moves the baseball catcher to the predicted location. The prediction is repeated every 5 frames, so accuracy improves as more observations arrive.
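The re-estimation loop can be sketched as follows. This assumes the 3D points are expressed in a frame where the catcher sits at z = 0, and that x and y are fit as functions of z rather than time. `point_stream` and `move_catcher` are hypothetical stand-ins for the camera pipeline and the catcher's motion interface.

```python
import numpy as np


def predict_impact(points):
    """Predict (x, y) where the ball crosses z = 0 from (N, 3) 3D points."""
    pts = np.asarray(points, dtype=float)
    x, y, z = pts[:, 0], pts[:, 1], pts[:, 2]
    x_of_z = np.polyfit(z, x, 1)  # lateral motion: straight line
    y_of_z = np.polyfit(z, y, 2)  # vertical motion: parabola (gravity)
    return float(np.polyval(x_of_z, 0.0)), float(np.polyval(y_of_z, 0.0))


def run_catcher(point_stream, move_catcher, refit_every=5, min_points=3):
    """Accumulate 3D points and re-aim the catcher every `refit_every` frames.

    point_stream yields one (x, y, z) tuple per frame, or None when the ball
    was not seen by both cameras. move_catcher(x, y) is a hypothetical
    callback that commands the catcher.
    """
    track = []
    for frame_idx, point in enumerate(point_stream, start=1):
        if point is not None:
            track.append(point)
        # A quadratic needs at least 3 points before it can be fit.
        if frame_idx % refit_every == 0 and len(track) >= min_points:
            move_catcher(*predict_impact(track))
    return track
```

Refitting on the whole track each time means later predictions draw on more observations, which matches the idea of repeating the step to improve accuracy.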

Here is an edited video of the live demonstration, for 20 consecutive throws: