The Reserve Bank of Zimbabwe publishes vast amounts of critical policy and regulatory content concerning electronic payment systems (e-payments). However, this information is often embedded in lengthy documents and not easily searchable or comprehensible by everyday users such as bank agents, retailers, auditors, or financial educators. These users frequently need quick and authoritative responses regarding matters like point-of-sale (POS) security, mobile money transaction limits, or anti-fraud procedures but the traditional search and manual reading process is time-consuming and error-prone.

To address this accessibility challenge, the Reserve Bank RAG Assistant was conceptualized and built: a domain-specific, intelligent chatbot that allows users to ask natural language questions and receive high-quality, document-grounded answers instantly. The system leverages cutting-edge retrieval-augmented generation (RAG) techniques, enabling it to blend semantic search with generative AI.

To solve this information retrieval and accessibility problem, the following technical requirements were established and successfully implemented:

Document ingestion from multiple formats, including PDF and Markdown, drawn from public RBZ archives.

Semantic chunking and embedding using a HuggingFace transformer model, with support for CUDA/MPS acceleration.

Persistent vector storage using ChromaDB to facilitate fast, accurate semantic search.

Dynamic prompt building with LangChain to ensure prompt format consistency and context inclusion.

Use of a high-speed inference backend via Groq’s LLM API to keep latency low and throughput high.

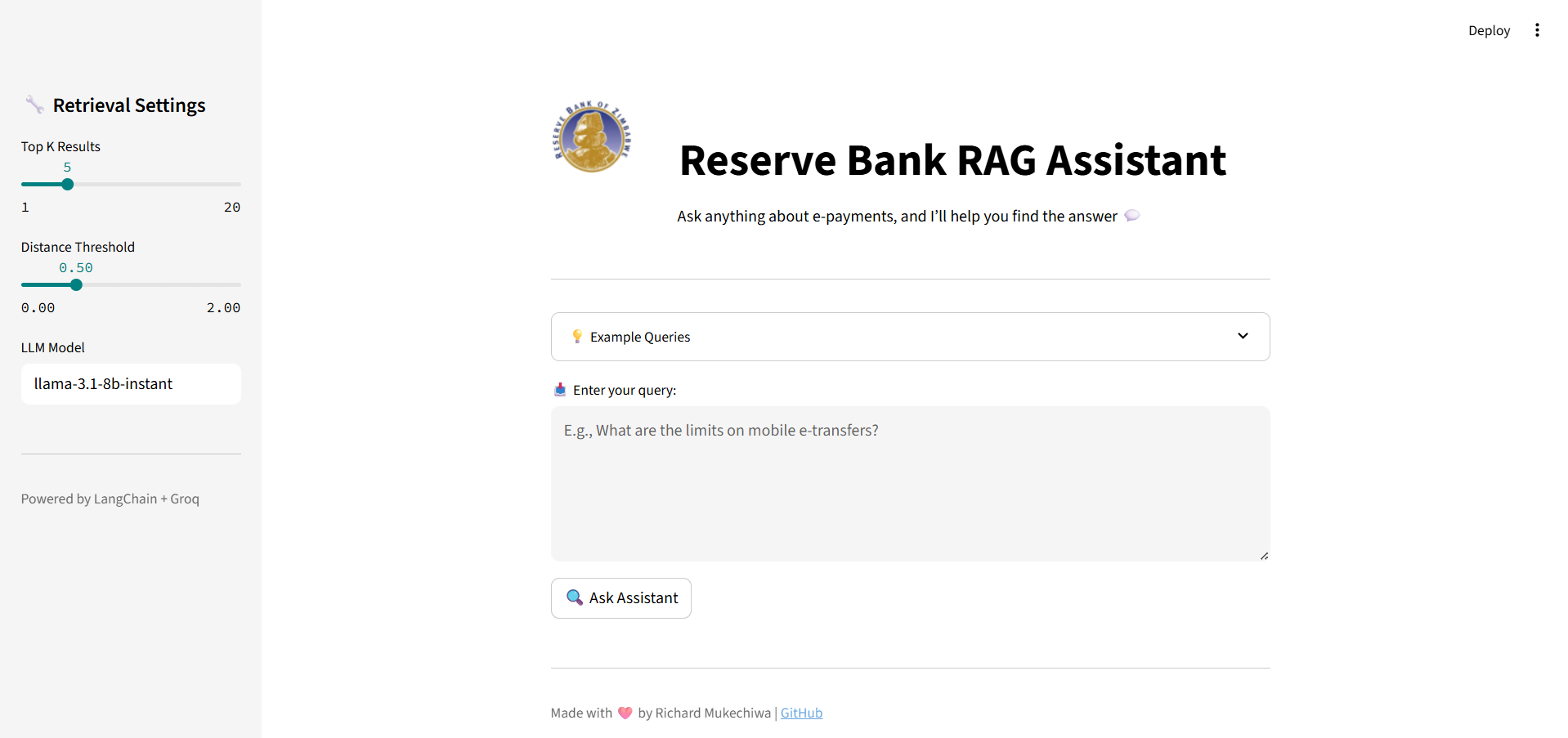

An interactive Streamlit front-end tailored for RBZ branding and optimized for user intuitiveness.

Logging, environment management, and parameter configuration for both local development and scalable deployment.

The Reserve Bank RAG Assistant architecture is composed of a structured pipeline, where each component is modular, configurable, and optimized for efficient information retrieval and generation.

The user interacts with a lightweight web interface powered by Streamlit, which captures free-form queries and displays the assistant’s answers in a conversational layout.

Upon submission, the user query is semantically embedded using a HuggingFace sentence transformer (all-MiniLM-L6-v2). This transforms the text into a numerical vector representation that captures meaning.

The vectorized query is sent to a ChromaDB vector store, which performs a nearest-neighbor search using cosine similarity. The Top-K most relevant document chunks are retrieved, filtered using a configurable similarity threshold.

Relevant documents and the user's question are merged into a unified context using a prompt template loaded from YAML. LangChain handles this formatting via its dynamic prompt builder.

The final prompt is passed to a Groq-hosted language model via the ChatGroq API, which generates a grounded, context-aware answer.

The assistant's answer is displayed back to the user within the Streamlit interface, along with an expandable section showing the source documents that contributed to the response.

The backend is composed of clearly separated concerns:

The ingestion pipeline loads all publications using the load_all_publications function. Texts are chunked semantically via RecursiveCharacterTextSplitter with a default size of 1000 characters and 200-character overlap. Each chunk is embedded using sentence-transformers/all-MiniLM-L6-v2, with device-specific optimizations. The embeddings and corresponding texts are stored in a persistent ChromaDB collection under the namespace "publications". This pipeline can be reinitialized by passing delete_existing=True when calling initialize_db.

At query time, the same embedding model transforms the user's question into a vector. The retrieve_relevant_documents function then queries the ChromaDB collection and filters results using a cosine distance threshold. All results below the defined threshold are returned for LLM input. Logging is heavily employed at each step to track performance and behavior.

collection.query( query_embeddings=[query_embedding], n_results=n_results, include=["documents", "distances"] )

Prompts are generated via the build_prompt_from_config utility, which follows a YAML-defined format. This ensures consistency across sessions and user queries. Once the prompt is built, the assistant sends it to a Groq-backed LLM using the ChatGroq class. Responses are returned as structured markdown and rendered cleanly within the app.

input_data = f"Relevant documents:\n{docs}\nUser's question:\n{query}" prompt = build_prompt_from_config(rag_prompt_template, input_data=input_data)

llm = ChatGroq(model=model, api_key=os.getenv("GROQ_API_KEY")) response = llm.invoke(prompt)

The UI, deployed via app.py, features Reserve Bank branding, intelligent input forms, parameter tuning sliders, and expandable source document sections. Users can adjust Top-K results, similarity thresholds, and model choice on the fly. Common question examples are provided for guidance, and branding is supported with logos and stylistic markdown elements.

For advanced users or testing purposes, a CLI-based version of the assistant exists, enabling configuration via terminal and parameter updates without redeploying the UI.

The solution achieved its goal of significantly improving access to RBZ's institutional knowledge. During internal validation and testing:

Retrieval accuracy remained high, especially with thresholds set between 0.3 and 1.0, thanks to cosine-based filtering.

The Groq LLM returned answers in under 3 seconds in most cases, providing an efficient user experience.

The assistant was able to consistently cite grounded content from RBZ publications, improving the transparency of LLM-generated answers.

Extensive logging allowed for empirical tracking of user queries, response quality, and document coverage, laying the groundwork for future tuning and evaluation.

The Reserve Bank RAG Assistant represents a significant step forward in democratizing regulatory information access. Rather than requiring users to manually search through PDFs and policy sheets, the assistant provides accurate, explainable answers instantly. This has several key impacts:

Improved Financial Literacy and Compliance: Bank tellers, merchants, and citizens gain direct access to official guidance, improving informed decision-making.

Reduced Operational Overhead: Institutions can reduce the burden on support teams who previously handled repetitive, document-based queries.

Scalability Across Domains: The system can be easily adapted to other domains such as taxation, national ID systems, or education by simply modifying the documents ingested and prompt templates used.

Localized Innovation: This project applies state-of-the-art AI tooling to a real Zimbabwean use case, demonstrating how frontier technologies can address regional challenges effectively.

GitHub – Richard Mukechiwa

Reserve Bank of Zimbabwe ConsumerHub

The SmartBank Assistant is more than a chatbot, it’s a framework for delivering domain-grounded intelligence in real-time. It combines state-of-the-art semantic search with configurable, lightweight infrastructure to make large-scale information consumable for everyone. Whether you're a policymaker, a developer, or a certification judge, this project proves how modern AI pipelines can create real impact, even in settings with constrained resources.

Built by Richard Mukechiwa