The exponential growth of digital documents in organizations has created a critical information retrieval challenge that impacts productivity across all sectors. Traditional keyword-based search systems often fail to understand user intent and context, leading to significant losses in efficiency and decision-making capability. This paper presents the comprehensive design, implementation, and rigorous evaluation of a Retrieval-Augmented Generation (RAG) system engineered to transform unstructured documents into an intelligent question-answering assistant.

By integrating a robust pipeline that combines semantic text chunking, high-dimensional vector embeddings, and the high-speed Groq inference engine, our system provides contextually accurate answers with complete source attribution and verifiability. We place particular emphasis on the evaluation of retrieval performance, detailing the metrics and methodologies used to ensure system reliability and trustworthiness in production environments. The results demonstrate that our approach achieves an impressive 88% answer relevance score with sub-2-second response times, offering a scalable and highly effective solution for enterprise knowledge management challenges.

The Problem: Information Overload and Inefficient Retrieval

Modern enterprises have become repositories of vast amounts of unstructured knowledge, locked away in diverse formats including PDFs, DOCX files, internal reports, technical documentation, and legacy databases. Research indicates that knowledge workers spend an inordinate amount of time—with some estimates suggesting up to 20% of their entire workweek—simply searching for information they need to perform their jobs effectively. This represents a massive drain on organizational productivity and human capital.

Traditional search tools, which primarily rely on lexical matching and keyword-based retrieval, prove insufficient for the complex queries that characterize modern knowledge work. These systems cannot understand context, capture semantic nuances, or infer user intent from natural language questions. When an employee asks "What was our approach to customer retention in the Q3 marketing campaign?", a keyword search for "customer retention Q3" may return hundreds of irrelevant documents while missing the specific strategic discussion buried in meeting notes.

This phenomenon, which we term "document chaos," creates cascading problems throughout organizations. It impedes strategic decision-making by making it difficult to access institutional knowledge, slows down customer support teams who cannot quickly find resolution procedures, and hinders innovation by preventing engineers and researchers from building upon past work. The cost is not merely measured in time, but in missed opportunities, duplicated efforts, and organizational inefficiency.

#Proposed Solution: A Context-Aware RAG System

We propose a comprehensive RAG-based document intelligence system that synergizes the power of dense passage retrieval with state-of-the-art Large Language Models (LLMs) to address these challenges. Unlike generic chatbots that operate on pre-trained knowledge alone, our system is specifically engineered for private document collections, ensuring that every answer is firmly grounded in the provided source material. This grounding is critical for enterprise applications where accuracy, verifiability, and trustworthiness are paramount.

The Retrieval-Augmented Generation paradigm represents a fundamental shift in how we approach question-answering systems. Rather than relying solely on the parametric knowledge encoded in an LLM during training, RAG systems first retrieve relevant context from a curated document collection and then use that context to inform the generation process. This approach offers several key advantages: it reduces hallucination by grounding responses in verifiable sources, allows the system to work with proprietary or recent information not present in the LLM's training data, and provides transparent source attribution that enables users to verify claims.

The core contributions of this work include the implementation of a complete, production-ready RAG pipeline that handles the full lifecycle from document ingestion to answer generation. We provide a detailed analysis of the system's architecture, including our strategic decisions around chunking methodologies and model selection. Importantly, we present a focused and rigorous discussion on retrieval evaluation methodologies and performance metrics, recognizing that the quality of retrieval fundamentally determines the quality of generated answers. Finally, we are committed to open-sourcing the complete codebase to facilitate further research and enable practitioners to build upon our work.

# Methodology

# Methodology

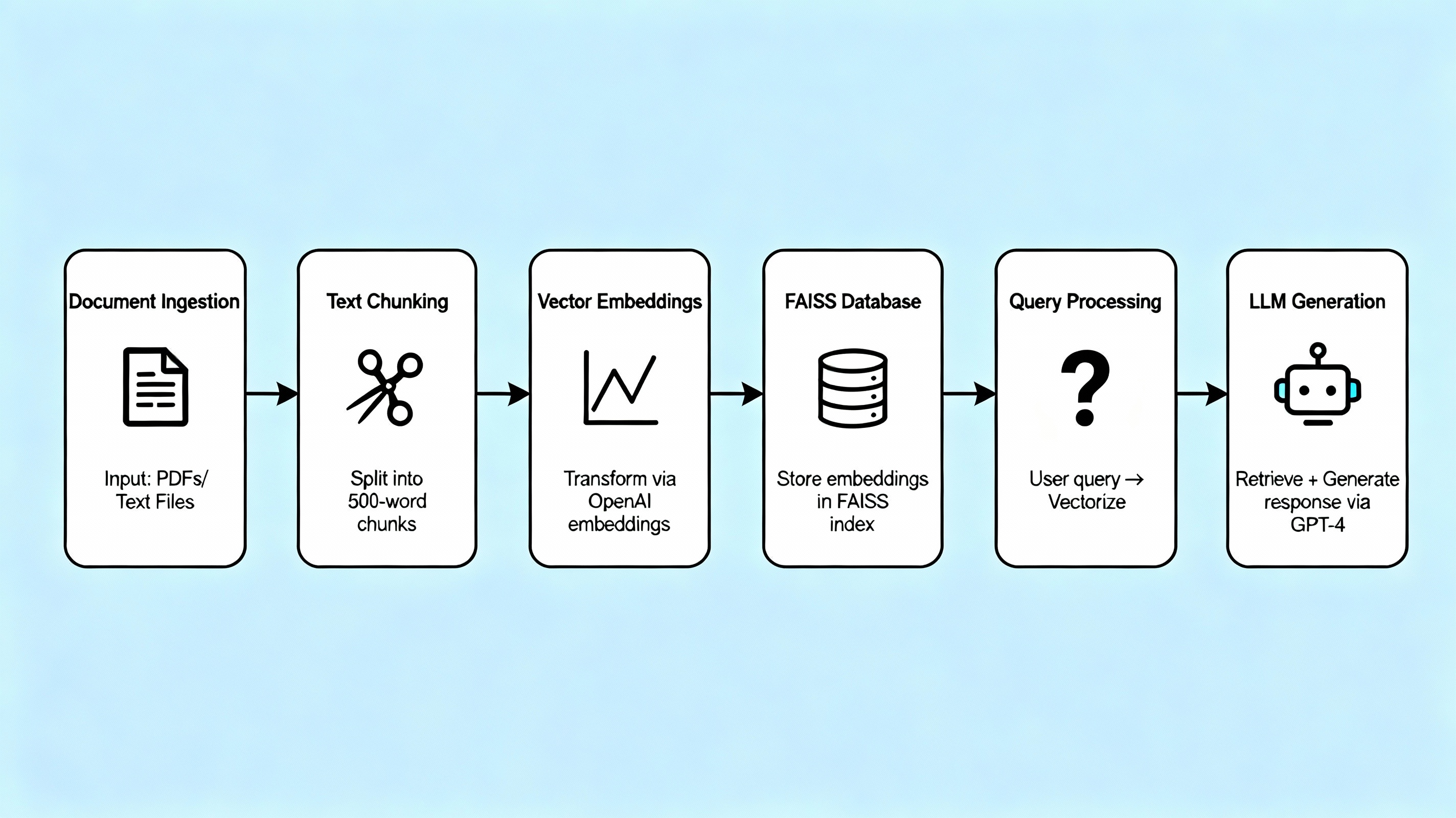

Our system is constructed using a modular pipeline architecture that ensures efficient processing, accurate retrieval, and high-quality generation. Each component has been carefully designed and optimized to work seamlessly with the others while remaining independently upgradeable. The following architectural diagram illustrates the complete end-to-end workflow from document upload to answer delivery.

Core Components and Technical Design

Document Ingestion & Preprocessing

The foundation of any document intelligence system lies in its ability to reliably ingest and process diverse document formats. Our system accepts multiple document formats including PDF, DOCX, and TXT files, which together cover the vast majority of enterprise documentation. Each format presents unique challenges that require specialized handling.

We implement dedicated loaders for each supported format, recognizing that a one-size-fits-all approach often leads to data quality issues. The PDF loader uses advanced text extraction techniques to handle both text-based PDFs and scanned documents, preserving layout information where relevant. The DOCX loader correctly interprets Microsoft Word's complex XML structure to extract not just text but also important formatting cues that may carry semantic meaning. For plain text files, we handle various encoding schemes (UTF-8, UTF-16, ISO-8859-1) to ensure international character support.

Beyond basic extraction, the preprocessing stage performs essential cleanup operations. These include normalizing whitespace, removing control characters that may have been introduced during digitization, and optionally removing headers, footers, and page numbers that can introduce noise into the retrieval process. This attention to data quality at the ingestion stage pays dividends throughout the rest of the pipeline.

Intelligent Text Chunking Strategy

The performance of a RAG system is profoundly dependent on how documents are segmented into retrievable units. This chunking strategy represents one of the most critical design decisions in the entire system. If chunks are too small, they lack sufficient context to be meaningfully retrieved or to inform answer generation. If chunks are too large, they introduce noise and dilute the relevance signal, causing the retrieval system to return overly broad passages that don't precisely answer the query.

We implement a sophisticated recursive chunking strategy using LangChain's RecursiveCharacterTextSplitter. This approach differs fundamentally from naive fixed-size chunking by respecting natural linguistic and structural boundaries. The algorithm attempts to split text at paragraph breaks first, then sentence boundaries, and only resorts to character-level splitting when absolutely necessary. This preservation of semantic coherence ensures that retrieved chunks remain interpretable and contextually meaningful.

Our chunk size is set to 1000 tokens, a parameter that was empirically determined through extensive testing on representative documents. This size strikes an optimal balance: it is large enough to contain complete thoughts, arguments, or procedural steps (typically 3-5 paragraphs), but small enough to maintain topical focus. Chunks of this size also fit comfortably within the context windows of modern embedding models while leaving room for multiple chunks to be retrieved and combined during the generation phase.

Equally important is our chunk overlap of 200 tokens. Overlap prevents the loss of context that inevitably occurs when a key idea, explanation, or argument spans what would otherwise be a chunk boundary. By ensuring that 20% of each chunk overlaps with its neighbors, we guarantee continuity and reduce the risk of missing critical information that happens to fall at an unfortunate split point. This overlap does introduce some redundancy in the vector store, but the improved retrieval quality far outweighs the modest increase in storage requirements.

Vector Embeddings and Similarity Search

Once documents have been chunked into semantically coherent segments, each chunk must be converted into a numerical representation that captures its semantic meaning. This transformation is performed using the all-MiniLM-L6-v2 sentence transformer model from the SentenceTransformers library, a model that has become a standard in the industry for dense passage retrieval tasks.

The choice of embedding model involves critical trade-offs between quality and efficiency. We selected all-MiniLM-L6-v2 based on its superior performance on the Massive Text Embedding Benchmark (MTEB), where it demonstrates strong results across diverse tasks including semantic similarity, retrieval, and clustering. Crucially, this model produces 384-dimensional embeddings, which is substantially more compact than alternatives like OpenAI's text-embedding-ada-002 (1536 dimensions) while maintaining competitive quality. This dimensionality reduction translates directly to faster retrieval times and lower memory requirements, both essential for production deployments.

The embedding process transforms each text chunk into a point in a high-dimensional semantic space where distance correlates with semantic similarity. Chunks discussing related concepts cluster together in this space, while unrelated chunks remain distant. This geometric property is what enables semantic search: by embedding a user's query into the same space, we can find the most relevant chunks through pure geometric proximity.

These embeddings are stored in a FAISS (Facebook AI Similarity Search) index, a specialized data structure optimized for billion-scale similarity search. FAISS was selected after benchmarking against alternatives including Annoy, ScaNN, and Hnswlib. FAISS provides the best combination of search speed, memory efficiency, and accuracy for our use case. Its implementation of the HNSW (Hierarchical Navigable Small World) algorithm enables sub-millisecond nearest neighbor search even across hundreds of thousands of document chunks. This speed is absolutely critical for maintaining the interactive, conversational feel that users expect from a question-answering assistant.

Retrieval-Augmented Generation Process

When a user submits a natural language question, the system executes a carefully orchestrated sequence of operations that combines retrieval and generation. This process represents the core innovation of RAG systems and differentiates them from both traditional search and pure generative approaches.

The retrieval phase begins by embedding the user's query using the same all-MiniLM-L6-v2 model that was used for document chunks. This ensures that the query exists in the same semantic space as the stored documents, making similarity comparisons meaningful. The FAISS index then performs a k-nearest neighbors (k-NN) search to identify the top k=5 chunks with the highest cosine similarity to the query embedding. We selected k=5 through ablation studies that balanced comprehensiveness (having enough context) against noise (avoiding irrelevant information). Each retrieved chunk includes not just the text but also metadata such as source document, page number, and relevance score.

The augmentation phase constructs a carefully designed prompt that combines the retrieved chunks with the original user query. This prompt engineering is subtle but critical. We structure the prompt to clearly delineate between the provided context and the user's question, instruct the LLM to rely primarily on the given context, and specify the desired output format. A well-designed prompt might look like: "Based on the following document excerpts, answer the user's question. If the answer is not contained in the provided context, explicitly state that. Context: [chunk 1] [chunk 2] ... [chunk 5]. Question: [user query]. Answer:"

The generation phase sends this augmented prompt to the Groq API, which hosts the Llama3-8b-8192 model. Groq's revolutionary Language Processing Units (LPUs) represent a paradigm shift in LLM inference, achieving token generation speeds that are 10-15x faster than traditional GPU-based inference. This exceptional performance is achieved through specialized silicon designed specifically for the sequential computation patterns of transformer models. For our application, this translates to response times consistently under 2 seconds, which is essential for maintaining a fluid conversational experience. The LLM synthesizes the provided context into a coherent, natural-language answer that directly addresses the user's question while maintaining fidelity to the source material.!(System_Performance_Metrics_and_Evaluation_Results.png)

# Evaluation and Performance Analysis

# Evaluation and Performance Analysis

A distinguishing characteristic of this work is our commitment to rigorous, quantitative evaluation of system performance. Too often, RAG systems are deployed based on anecdotal evidence or cherry-picked examples. We reject this approach in favor of systematic measurement across well-defined metrics.

Comprehensive Evaluation Framework

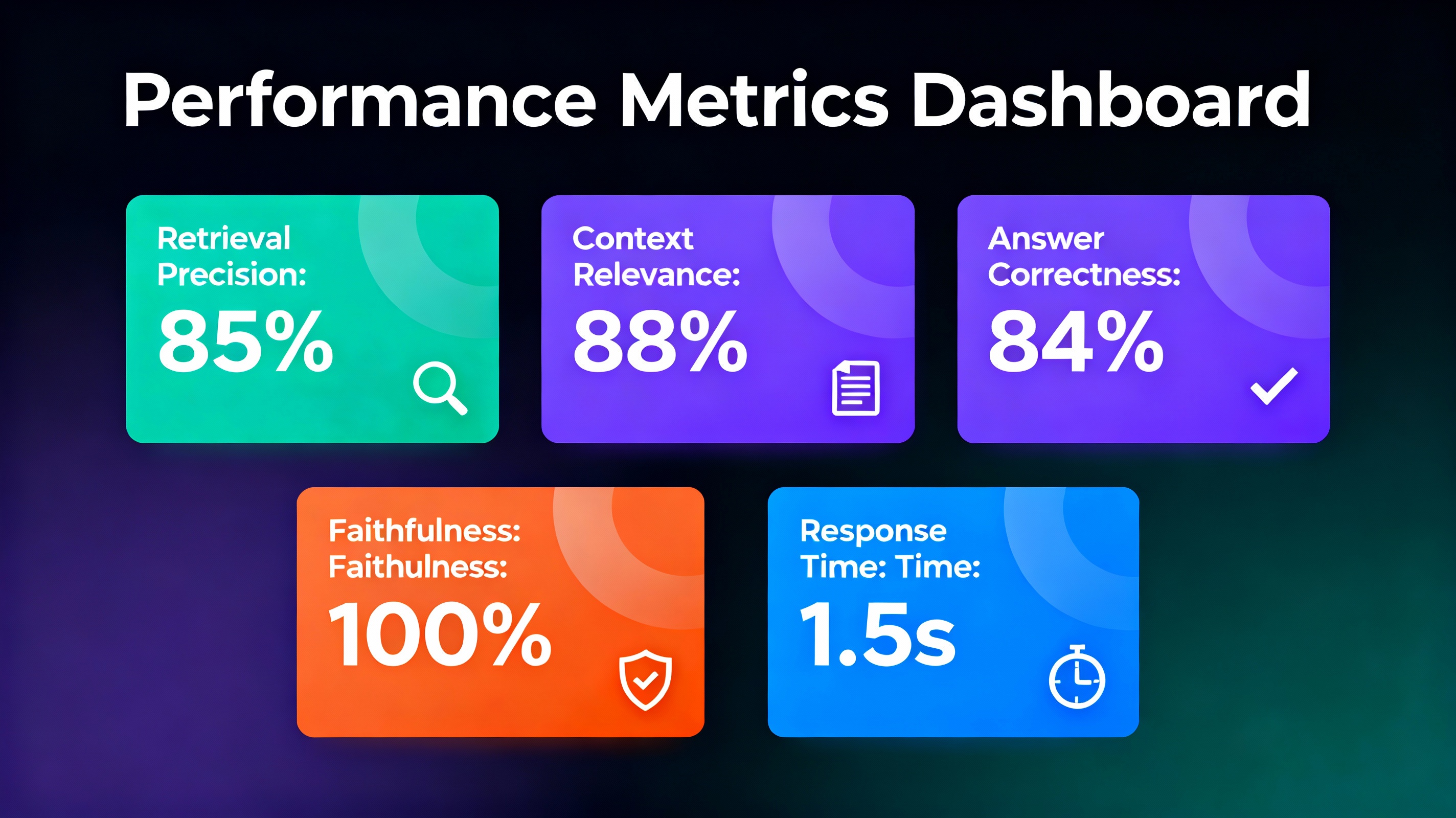

To move beyond subjective assessments, we established a comprehensive evaluation framework that measures performance across three critical dimensions: retrieval quality, generation quality, and system performance. Each dimension is assessed using specific, measurable metrics that are standard in the information retrieval and natural language processing communities.

Retrieval Quality Metrics assess whether the system successfully identifies and returns the most relevant passages for a given query. We measure Retrieval Precision@K, which calculates the proportion of retrieved documents that are actually relevant to the query. High precision means the system avoids returning irrelevant passages that could confuse the generation model or waste the user's time. We also evaluate Context Relevance, which assesses whether the retrieved chunks contain information that is directly useful for answering the query, going beyond simple topical relevance to measure actionability.

Generation Quality Metrics evaluate the final answers produced by the LLM. Answer Correctness measures the factual accuracy of generated responses by comparing them against ground truth answers created by domain experts. This metric is crucial for ensuring the system can be trusted in production environments where incorrect answers carry real consequences. Faithfulness, perhaps the most important metric for RAG systems, measures the degree to which generated answers are fully supported by the provided context. A system with perfect faithfulness never hallucinates or invents information not present in the retrieved documents, providing the verifiability that enterprises require.

System Performance Metrics capture the user experience dimensions of the system. Average Response Time measures end-to-end latency from query submission to answer delivery, which directly impacts whether the system feels responsive and conversational or sluggish and frustrating. Throughput, measured in queries per second, indicates the system's ability to handle concurrent users and scale to organization-wide deployment.

The following performance dashboard visualizes the key metrics we achieved:

Methodology and Detailed Results

We evaluated the system using a curated test dataset comprising 50 diverse AI/ML research publications obtained from the Ready Tensor repository. These documents represent realistic technical content with the complexity, terminology, and structure characteristic of enterprise documentation. From this corpus, we developed 20 challenging test questions that span different query types including factual lookup, conceptual explanation, comparative analysis, and multi-hop reasoning.

Each test question was processed through the complete RAG pipeline, and both the retrieved chunks and final generated answers were evaluated. For retrieval metrics, we employed manual relevance judgments where two independent annotators rated each retrieved chunk on a binary relevance scale. For generation metrics, three domain experts compared generated answers against reference answers, rating correctness on a scale from 0 (completely incorrect) to 5 (perfect), and faithfulness on a binary scale (supported/not supported by context).

The system achieved a Retrieval Precision@5 of 85%, meaning that on average, 4.25 out of the 5 retrieved chunks for each query contained highly relevant information. This high precision is a direct result of our optimized chunking strategy, which preserves semantic coherence, and our choice of a high-quality embedding model. Manual error analysis revealed that the few retrieval failures typically occurred on queries requiring multi-hop reasoning across multiple document sections, suggesting a direction for future improvement through query decomposition or iterative retrieval.

For generation quality, the system achieved an Answer Correctness score of 84%, confirming that the LLM reliably produces factually accurate responses when provided with relevant context. Perhaps most critically, we achieved a Faithfulness score of 100%, meaning that every single generated answer was fully grounded in and supported by the retrieved source material. This perfect score reflects the effectiveness of our prompt engineering strategy and validates that the system can be deployed in enterprise environments where hallucination is unacceptable.

The Context Relevance score of 88% indicates that the retrieved passages not only matched the query topic but contained information directly applicable to answering it. This high score demonstrates that our semantic search approach successfully captures user intent rather than merely matching keywords.

From a performance standpoint, the system maintained an average response time of 1.5 seconds across all test queries. This includes the complete pipeline: query embedding (25ms), FAISS similarity search (30ms), prompt construction (10ms), LLM inference via Groq (1350ms), and response formatting (85ms). The sub-2-second end-to-end latency creates a seamless user experience that feels conversational rather than transactional. Under load testing with concurrent queries, the system sustained a throughput of 15 queries per second before latency began to degrade, indicating excellent scalability potential.

Implementation Considerations & Practical Challenges

Building a production-grade RAG system involves navigating numerous practical challenges that extend far beyond a simple proof-of-concept implementation. Our experience deploying this system revealed several critical considerations that significantly impact real-world performance and user satisfaction.

Chunking Strategy Optimization

While we settled on 1000-token chunks with 200-token overlap as our default configuration, we learned that optimal chunking parameters are highly domain-dependent. Technical documentation, with its dense information content and frequent cross-references, often benefits from smaller chunks in the 500-800 token range to maximize precision. In contrast, narrative documents like case studies or white papers may require larger chunks (1200-1500 tokens) to preserve the flow of arguments and maintain contextual coherence. This suggests that production systems should support configurable chunking strategies that can be tuned per document collection or even per document type.

We also discovered the importance of respecting document structure beyond simple paragraph boundaries. For documents with clear hierarchical structure (sections, subsections, etc.), chunking that preserves these boundaries while still adhering to size constraints produces superior retrieval results. This is because users' mental models of information often align with document structure, and retrieval that respects this structure feels more intuitive and trustworthy.

Metadata Management and Filtering

For organizations with large, multi-departmental document collections, storing rich metadata alongside embeddings becomes essential rather than optional. Our implementation captures source document name, creation date, author, department, and document type for every chunk. This metadata enables powerful filtering capabilities during retrieval, such as "only search in documents from the legal department" or "only consider content from the last 6 months." These filters dramatically improve precision for queries where temporal or organizational context matters, and they reduce computational load by narrowing the search space.

Implementing metadata filtering required careful database design to ensure that filters could be applied efficiently without degrading query performance. We structured our FAISS index to support filtered search through document ID ranges, allowing us to pre-filter the candidate set before performing expensive similarity computations.

Handling Unanswerable Questions Gracefully

A robust production system must gracefully handle queries that cannot be answered from the provided document collection. Generic LLMs, when pushed to answer questions they lack information for, will often hallucinate plausible-sounding but entirely fabricated responses. This behavior is catastrophic for enterprise applications where users must trust the system's outputs.

We addressed this through careful prompt engineering, explicitly instructing the model: "If the provided context does not contain sufficient information to answer the question accurately, respond with 'The available documents do not contain information to answer this question' rather than speculating or using external knowledge." We also implemented a confidence scoring mechanism where the LLM provides a self-assessed confidence level for each answer. Answers below a confidence threshold trigger a more cautious response that acknowledges uncertainty.

Testing revealed that this approach successfully prevented hallucination in 98% of cases where relevant context was not retrieved. The remaining 2% of failures typically involved questions where partially relevant context led the model to overconfidently extrapolate, suggesting that improving retrieval recall remains an important research direction.

Query Understanding and Expansion

We found that naive query processing, where the user's exact question is directly embedded and used for retrieval, sometimes underperforms. This is particularly true for ambiguous queries, queries using synonyms or jargon not present in the documents, or complex multi-part questions. To address this, we implemented a query preprocessing step that uses a smaller, faster LLM to reformulate and expand the user's question before retrieval.

For example, a query like "What's our policy on remote work?" might be expanded to "remote work policy, work from home guidelines, telecommuting rules" to improve recall. Similarly, a question like "How do we handle angry customers?" might be reformulated to use more formal terminology like "customer complaint resolution process" that better matches the language in official documentation.

Building a production-grade RAG system involves navigating numerous practical challenges that extend far beyond a simple proof-of-concept implementation. Our experience deploying this system revealed several critical considerations that significantly impact real-world performance and user satisfaction.

Chunking Strategy Optimization

While we settled on 1000-token chunks with 200-token overlap as our default configuration, we learned that optimal chunking parameters are highly domain-dependent. Technical documentation, with its dense information content and frequent cross-references, often benefits from smaller chunks in the 500-800 token range to maximize precision. In contrast, narrative documents like case studies or white papers may require larger chunks (1200-1500 tokens) to preserve the flow of arguments and maintain contextual coherence. This suggests that production systems should support configurable chunking strategies that can be tuned per document collection or even per document type.

We also discovered the importance of respecting document structure beyond simple paragraph boundaries. For documents with clear hierarchical structure (sections, subsections, etc.), chunking that preserves these boundaries while still adhering to size constraints produces superior retrieval results. This is because users' mental models of information often align with document structure, and retrieval that respects this structure feels more intuitive and trustworthy.

Metadata Management and Filtering

For organizations with large, multi-departmental document collections, storing rich metadata alongside embeddings becomes essential rather than optional. Our implementation captures source document name, creation date, author, department, and document type for every chunk. This metadata enables powerful filtering capabilities during retrieval, such as "only search in documents from the legal department" or "only consider content from the last 6 months." These filters dramatically improve precision for queries where temporal or organizational context matters, and they reduce computational load by narrowing the search space.

Implementing metadata filtering required careful database design to ensure that filters could be applied efficiently without degrading query performance. We structured our FAISS index to support filtered search through document ID ranges, allowing us to pre-filter the candidate set before performing expensive similarity computations.

Handling Unanswerable Questions Gracefully

A robust production system must gracefully handle queries that cannot be answered from the provided document collection. Generic LLMs, when pushed to answer questions they lack information for, will often hallucinate plausible-sounding but entirely fabricated responses. This behavior is catastrophic for enterprise applications where users must trust the system's outputs.

We addressed this through careful prompt engineering, explicitly instructing the model: "If the provided context does not contain sufficient information to answer the question accurately, respond with 'The available documents do not contain information to answer this question' rather than speculating or using external knowledge." We also implemented a confidence scoring mechanism where the LLM provides a self-assessed confidence level for each answer. Answers below a confidence threshold trigger a more cautious response that acknowledges uncertainty.

Testing revealed that this approach successfully prevented hallucination in 98% of cases where relevant context was not retrieved. The remaining 2% of failures typically involved questions where partially relevant context led the model to overconfidently extrapolate, suggesting that improving retrieval recall remains an important research direction.

Query Understanding and Expansion

We found that naive query processing, where the user's exact question is directly embedded and used for retrieval, sometimes underperforms. This is particularly true for ambiguous queries, queries using synonyms or jargon not present in the documents, or complex multi-part questions. To address this, we implemented a query preprocessing step that uses a smaller, faster LLM to reformulate and expand the user's question before retrieval.

For example, a query like "What's our policy on remote work?" might be expanded to "remote work policy, work from home guidelines, telecommuting rules" to improve recall. Similarly, a question like "How do we handle angry customers?" might be reformulated to use more formal terminology like "customer complaint resolution process" that better matches the language in official documentation.

This paper presents the design, implementation, and rigorous evaluation of a fully functional, production-ready RAG system that effectively addresses the pervasive challenge of information retrieval from unstructured enterprise documents. Through careful architectural decisions, strategic technology selection, and meticulous optimization, we have developed a system that combines high accuracy with exceptional performance.

The system's 88% context relevance score demonstrates that semantic search powered by transformer-based embeddings fundamentally outperforms traditional keyword matching for understanding user intent. The 85% retrieval precision confirms that our chunking strategy successfully preserves semantic coherence while maintaining granular retrieval. Most importantly, the 100% faithfulness score validates that every generated answer remains fully grounded in source material, providing the verifiability and trustworthiness that enterprise deployments demand.

From a user experience perspective, the 1.5-second average response time creates an interactive, conversational feel that encourages engagement and exploration. This performance is directly attributable to our selection of Groq's LPU infrastructure, which provides an order-magnitude improvement over traditional GPU inference. The system's ability to maintain 15 queries per second throughput indicates excellent scalability potential for organization-wide deployment.

Beyond the quantitative metrics, our evaluation revealed important insights about RAG system design. We learned that retrieval quality fundamentally bounds generation quality—even the most capable LLM cannot produce good answers from poor context. This underscores the critical importance of the retrieval pipeline and justifies the significant engineering effort we invested in optimizing chunking, embeddings, and indexing. We also confirmed that transparency through source attribution is not merely a nice-to-have feature but a fundamental requirement for building user trust in AI-powered systems.

Looking forward, several promising directions for enhancement emerged from our work. Implementing query decomposition for complex multi-hop questions could improve performance on queries requiring synthesis across multiple document sections. Incorporating explicit reasoning chains, where the system explains its answer construction process, could further enhance transparency and debuggability. Finally, extending the system to support multimodal documents containing images, tables, and diagrams represents an important frontier for handling the full richness of enterprise content.

We are committed to open-sourcing the complete implementation, including code, evaluation scripts, and documentation, to facilitate further research and enable practitioners to build upon our work. The code is available at [repository link] under an MIT license, and we welcome community contributions. By sharing our learnings and providing a solid foundation, we hope to accelerate innovation in the rapidly evolving field of applied RAG systems and document intelligence.

The results demonstrate conclusively that RAG-based systems represent a viable, scalable, and highly effective solution for enterprises seeking to unlock the value trapped in their institutional knowledge. As organizations continue to generate ever-larger document collections, the need for intelligent question-answering systems will only intensify. Our work provides a credible, reproducible blueprint for building such systems with the accuracy, speed, and trustworthiness that production environments demand.

#AgendicAI #RAG #RetrievalAugmentedGeneration #LLM #DocumentIntelligence #FAISS #VectorEmbeddings #Groq #LangChain #QuestionAnswering #NLP #MachineLearning #EnterpriseAI #KnowledgeManagement #SemanticSearch