With the advancement of large language models (LLMs) and Retrieval-Augmented Generation (RAG) techniques, it is now possible to build intelligent systems capable of reading complex legal documents and answering natural language questions based on their content.

With this in mind, we developed RAG for Legal Documents, an open-source system for legal text analysis. It lets users upload PDF files, generate semantic embeddings with state-of-the-art models, index them with FAISS, and answer questions using LLMs served through Groq's low-latency inference API.

In this project, you'll learn:

- How to structure a full RAG pipeline using Python, LangChain, and FAISS
- How to use Docling to extract and split legal documents into meaningful chunks
- How to build an interactive Streamlit interface for real-time querying
- The limitations and opportunities of applying RAG to the legal domain

This article is intended for developers, AI enthusiasts, and legal professionals who want to understand how AI can transform the way we interact with legal information.

The RAG for Legal Documents project also serves as an accessible and practical starting point for building personal AI assistants capable of understanding and interacting with contracts, legal opinions, court rulings, and other complex legal texts.

The system is built as a pipeline of seven stages:

1. Legal Document Ingestion and Structured Extraction with Docling
2. (Optional) Chunk Splitting for Optimization
3. Embedding Generation using the E5 model
4. Vector Indexing with FAISS
5. Semantic Retrieval using Maximum Marginal Relevance (MMR)
6. LLM Integration with Groq + LLaMA3
7. Interactive User Interface with Streamlit

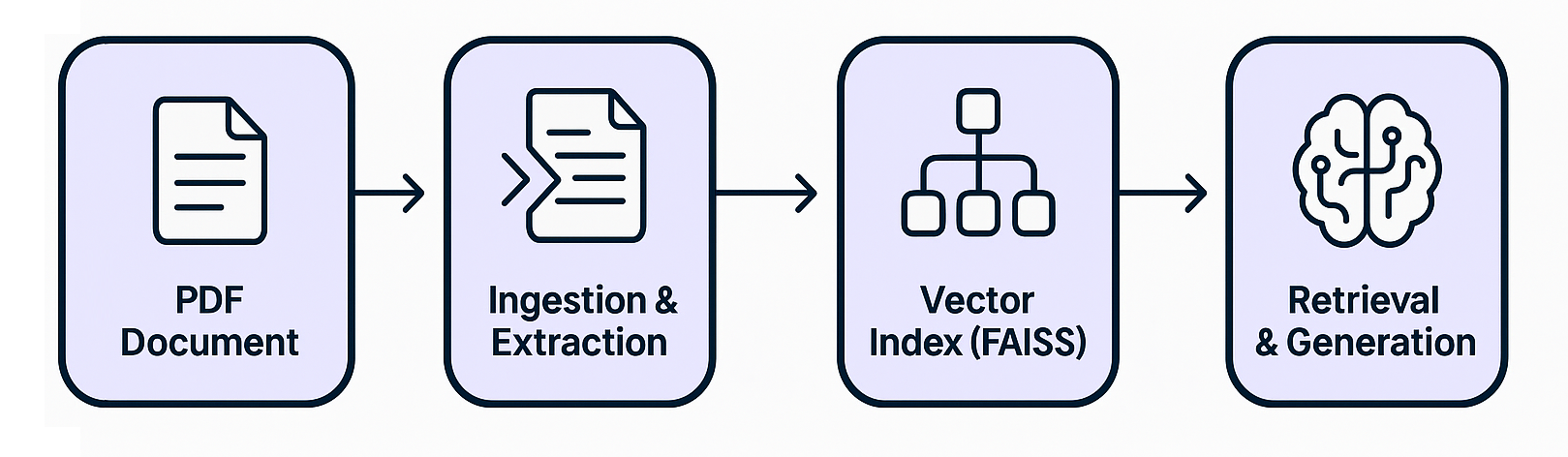

The pipeline begins by loading legal documents using DoclingLoader, which extracts structured content from PDF files—retaining document structure such as titles, sections, and tables.

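```python
from langchain_docling import DoclingLoader
from langchain_docling.loader import ExportType

# ExportType.DOC_CHUNKS yields one LangChain Document per Docling chunk,
# so chunk boundaries follow the structure of the original PDF
loader = DoclingLoader(file_path="document.pdf", export_type=ExportType.DOC_CHUNKS)
documents = loader.load()
```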

Splitting the Docling output further with a text splitter is supported but optional in this project. DoclingLoader already produces well-structured chunks, so manual chunking is unnecessary in most cases.

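```python
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Optional: re-split the Docling chunks into pieces of roughly 300 characters,
# with 100 characters of overlap between consecutive pieces
splitter = RecursiveCharacterTextSplitter(chunk_size=300, chunk_overlap=100)
chunks = splitter.split_documents(documents)
```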

⚠️ Note: The above step is commented out by default in the source code to preserve the original structure extracted by Docling.
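The following sketch makes `chunk_size` and `chunk_overlap` concrete by showing simplified, dependency-free fixed-window character chunking. It does not reproduce how `RecursiveCharacterTextSplitter` works internally. That class first tries to split on separators such as paragraph breaks and newlines before it falls back to smaller units. The function name `sliding_window_chunks` is hypothetical. Both parameters are counted in characters, which matches the splitter's default length function (`len`).

```python
def sliding_window_chunks(text: str, chunk_size: int = 300, chunk_overlap: int = 100) -> list[str]:
    """Split text into fixed-size character windows; consecutive windows share chunk_overlap chars."""
    if not 0 <= chunk_overlap < chunk_size:
        raise ValueError("chunk_overlap must be >= 0 and smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


sample = "".join(chr(ord("a") + i % 26) for i in range(700))  # 700 characters of filler text
for chunk in sliding_window_chunks(sample):
    print(len(chunk), chunk[:5])
```

With the project's values, each window advances 200 characters, so every pair of consecutive chunks shares 100 characters. Any clause of up to 100 characters that straddles a boundary therefore still appears intact in at least one chunk.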

We use the intfloat/multilingual-e5-large model to create semantic embeddings and index them using FAISS for fast and scalable vector retrieval.

```python
from langchain_huggingface import HuggingFaceEmbeddings
from langchain_community.vectorstores import FAISS

# Multilingual E5 model used to embed every chunk
embeddings = HuggingFaceEmbeddings(model_name="intfloat/multilingual-e5-large")

# Embed all chunks, build the FAISS index, and persist it to disk
vectorstore = FAISS.from_documents(documents, embeddings)
vectorstore.save_local("index_path")
```
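By default, LangChain's `FAISS.from_documents` builds an exact (flat) index. It compares the query against every stored vector using Euclidean distance. The pure-Python sketch below shows that brute-force search. It uses toy 3-dimensional vectors in place of the 1,024-dimensional E5 embeddings. `flat_search` is a hypothetical helper; FAISS performs the same comparison in optimized native code.

```python
def squared_l2(a: list[float], b: list[float]) -> float:
    """Squared Euclidean distance; it ranks vectors the same way as plain Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def flat_search(index: list[list[float]], query: list[float], k: int) -> list[tuple[int, float]]:
    """Exact k-nearest-neighbour search: score every stored vector and keep the k closest."""
    scored = [(i, squared_l2(vec, query)) for i, vec in enumerate(index)]
    scored.sort(key=lambda pair: pair[1])  # smaller distance means more similar
    return scored[:k]


# Toy 3-d "embeddings" standing in for three indexed chunks
index = [[0.0, 0.0, 1.0], [1.0, 0.0, 0.0], [0.9, 0.1, 0.0]]
print(flat_search(index, [1.0, 0.0, 0.0], k=2))
```

The E5 models were trained with `query: ` and `passage: ` prefixes on their inputs, and the `intfloat/multilingual-e5-large` model card recommends adding them. Check this detail when you adapt the pipeline, because the snippet above embeds the raw chunk text.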

We use Meta's LLaMA 3 8B model (`llama3-8b-8192`, whose name reflects its 8,192-token context window), served through the Groq API, to generate responses with low latency.

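```python
import streamlit as st
from langchain_groq import ChatGroq

# A low temperature keeps answers focused and less random
llm = ChatGroq(
    temperature=0.1,
    model_name="llama3-8b-8192",
    api_key=st.secrets["GROQ_API_KEY"],  # read from Streamlit secrets (.streamlit/secrets.toml)
)
```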

To balance relevance and diversity in the retrieved context, the retriever uses Maximum Marginal Relevance (MMR).

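```python
retriever = vectorstore.as_retriever(
    search_type="mmr",
    search_kwargs={
        "k": 20,             # number of chunks finally returned
        "fetch_k": 100,      # candidates fetched by similarity before MMR re-ranking
        "lambda_mult": 0.8,  # 1.0 = pure relevance, 0.0 = maximum diversity
    },
)
```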
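The retriever above first fetches the `fetch_k=100` most similar chunks. MMR then picks `k=20` of them, one at a time. At each step it chooses the candidate that maximizes `lambda_mult * sim(query, chunk) - (1 - lambda_mult) * max sim(chunk, already_selected)`. The minimal pure-Python sketch below implements that greedy selection with cosine similarity on toy vectors. `mmr` is a hypothetical helper, and LangChain's internal implementation differs in its details.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mmr(query: list[float], candidates: list[list[float]], k: int, lambda_mult: float = 0.8) -> list[int]:
    """Greedily pick up to k candidate indices by Maximum Marginal Relevance."""
    relevance = [cosine(query, c) for c in candidates]
    selected: list[int] = []
    remaining = list(range(len(candidates)))
    while remaining and len(selected) < k:
        def score(i: int) -> float:
            # Redundancy = similarity to the closest chunk already selected
            redundancy = max((cosine(candidates[i], candidates[j]) for j in selected), default=0.0)
            return lambda_mult * relevance[i] - (1 - lambda_mult) * redundancy

        best = max(remaining, key=score)  # ties resolve to the earliest candidate
        selected.append(best)
        remaining.remove(best)
    return selected


query = [1.0, 0.3]
candidates = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]  # chunk 1 duplicates chunk 0
print(mmr(query, candidates, k=2, lambda_mult=1.0))  # [0, 1]
print(mmr(query, candidates, k=2, lambda_mult=0.5))  # [0, 2]
```

With `lambda_mult=1.0`, MMR reduces to plain similarity ranking and returns the duplicate chunk. Lowering the value penalizes redundancy, so the dissimilar chunk is chosen instead. The project's value of 0.8 leans toward relevance while still discouraging near-duplicate passages.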

The prompt guides the LLM to act as a legal assistant, answering objectively based only on the context from the documents.

```text
You are a specialized legal assistant. Carefully analyze the following context extracted from legal documents and respond objectively, without adding external information...
```
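The retrieved chunks and the user's question must be combined into a single prompt for the LLM. The sketch below shows one way to do this with plain string formatting. Only the opening instruction is quoted from the project's prompt, whose full text is abbreviated above. The `Context:`/`Question:` layout, the numbered chunk markers, and the `build_prompt` name are illustrative assumptions.

```python
# Opening instruction quoted from the project's prompt; everything after it is a hypothetical layout
PROMPT_TEMPLATE = """You are a specialized legal assistant. Carefully analyze the following context extracted from legal documents and respond objectively, without adding external information.

Context:
{context}

Question: {question}

Answer:"""


def build_prompt(chunks: list[str], question: str) -> str:
    """Fill the template with numbered context chunks and the user's question."""
    # Number the chunks so the model (and the reader) can point back to a specific passage
    context = "\n\n".join(f"[{i}] {chunk.strip()}" for i, chunk in enumerate(chunks, start=1))
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())


print(build_prompt(
    ["Clause 1: The term is 12 months.", "Clause 2: Either party may terminate with 30 days' notice."],
    "What is the contract term?",
))
```

Numbering the chunks is a design choice. It lets you ask the model to cite the passage that supports each statement, which helps when answers must be checked against the source text.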

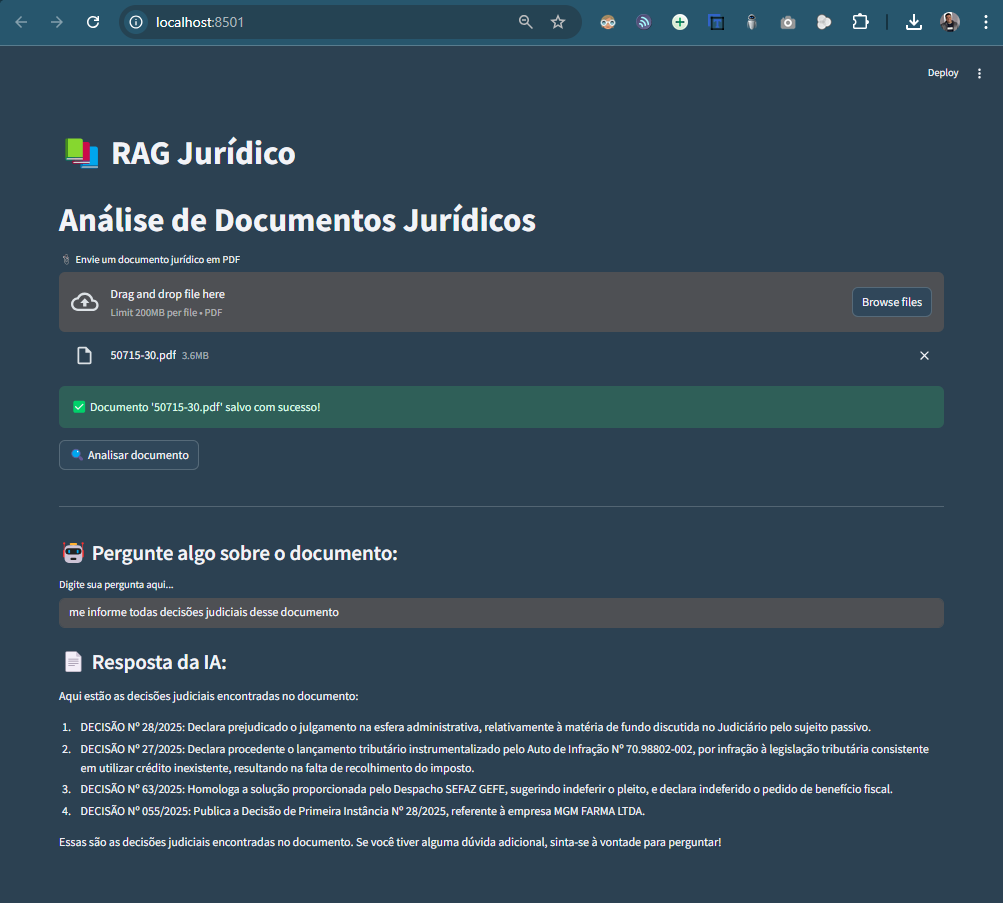

The Streamlit interface provides:

- PDF document upload
- Automatic document processing and FAISS indexing
- A real-time question-answering interface
- Persistent chat history

The code is organized into three modules:

- `app.py`: Streamlit frontend and session control
- `rag_pipeline.py`: core logic (loading, embedding, indexing, retrieval)
- `utils.py`: helper functions (token counting, hashing, formatting, logging)

This modular approach allows:

✅ Easy domain adaptation (e.g., contracts, technical manuals, audit reports)

✅ Integration with other APIs or vector databases

✅ Customization for enterprise or institutional use cases
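`utils.py` is described as including hashing helpers. One common use of hashing is to fingerprint each uploaded PDF, so that an index already built for identical content can be reloaded instead of recomputed. This is shown here as an assumption, not as the project's actual code. The helper names and the `indexes/<hash>` directory layout below are hypothetical.

```python
import hashlib
from pathlib import Path


def file_fingerprint(data: bytes) -> str:
    """SHA-256 hex digest of the file's bytes: identical content gives an identical fingerprint."""
    return hashlib.sha256(data).hexdigest()


def index_dir_for(data: bytes, root: str = "indexes") -> Path:
    """Hypothetical layout: one saved FAISS index directory per distinct file content."""
    return Path(root) / file_fingerprint(data)[:16]


def needs_indexing(data: bytes, root: str = "indexes") -> bool:
    """True if no index directory exists yet for this exact content."""
    return not index_dir_for(data, root).exists()


pdf_bytes = b"%PDF-1.7 example contract bytes"
print(index_dir_for(pdf_bytes))
print(needs_indexing(pdf_bytes))
```

In a Streamlit app, `uploaded_file.getvalue()` returns the raw bytes of a file from `st.file_uploader`. If `needs_indexing` returns `False`, you can reopen the saved index with `FAISS.load_local` instead of re-embedding the document.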

The RAG for Legal Documents project has demonstrated how generative AI and Retrieval-Augmented Generation (RAG) can be used to make working with legal documents more efficient, accessible, and accurate.

With a modular architecture and modern technologies, the system allows users to query complex legal documents in natural language and receive contextual answers—even without advanced technical or legal knowledge.

The system is particularly useful for:

- Law firms that handle large volumes of contracts.
- Public institutions that want to provide better access to legal opinions, decrees, and court decisions.
- Corporate teams that need to quickly interpret legal clauses.
- Compliance or audit teams looking to automate initial reviews.

Additionally, the simple Streamlit interface ensures accessibility for non-technical users, while the Groq API with LLaMA3 delivers fast and relevant responses.

Possible future improvements include:

- Implementing LangGraph for multi-step reasoning workflows.
- Integrating multiple legal sources (APIs, databases, etc.).
- Adding conversational memory and advanced agent behavior.
- Adapting the system to other languages or legal domains.

This repository is open-source and intended as a practical starting point for anyone interested in building AI-powered legal assistants. Feel free to clone it, extend it, and apply it to your organization or personal project.

I’m excited to share this open-source project with the global AI community and contribute to the growing ecosystem of practical, real-world applications for legal intelligence.

Feel free to connect with me on LinkedIn. I'm always open to exchanging ideas, collaborating, and learning from others who are passionate about AI and innovation.