Abstract

This project implements a Retrieval-Augmented Generation (RAG) powered assistant using LangChain, designed to answer questions and generate summaries based on custom document sets. By integrating document ingestion, vector-based retrieval, and LLM responses, the assistant delivers accurate, context-aware outputs—ideal for use cases like legal research or academic analysis. Built with a modular Python architecture, it's scalable, customizable, and currently in active development with plans for UI enhancements and broader deployment.

Whether you're a law student preparing briefs or a practitioner navigating complex case files, this assistant streamlines research by combining:

- LLM-powered responses tailored to your documents

- Vector-based retrieval for pinpoint accuracy

- Modular architecture for easy customization and scaling

Introduction

This project implements a Retrieval-Augmented Generation (RAG) assistant for legal case documents, powered by a large language model (LLM) and built with LangChain. The assistant answers questions and produces summaries based on custom documents. It combines document ingestion, vector-based retrieval (Chroma), and LLM responses to deliver accurate, context-aware answers.

Methodology

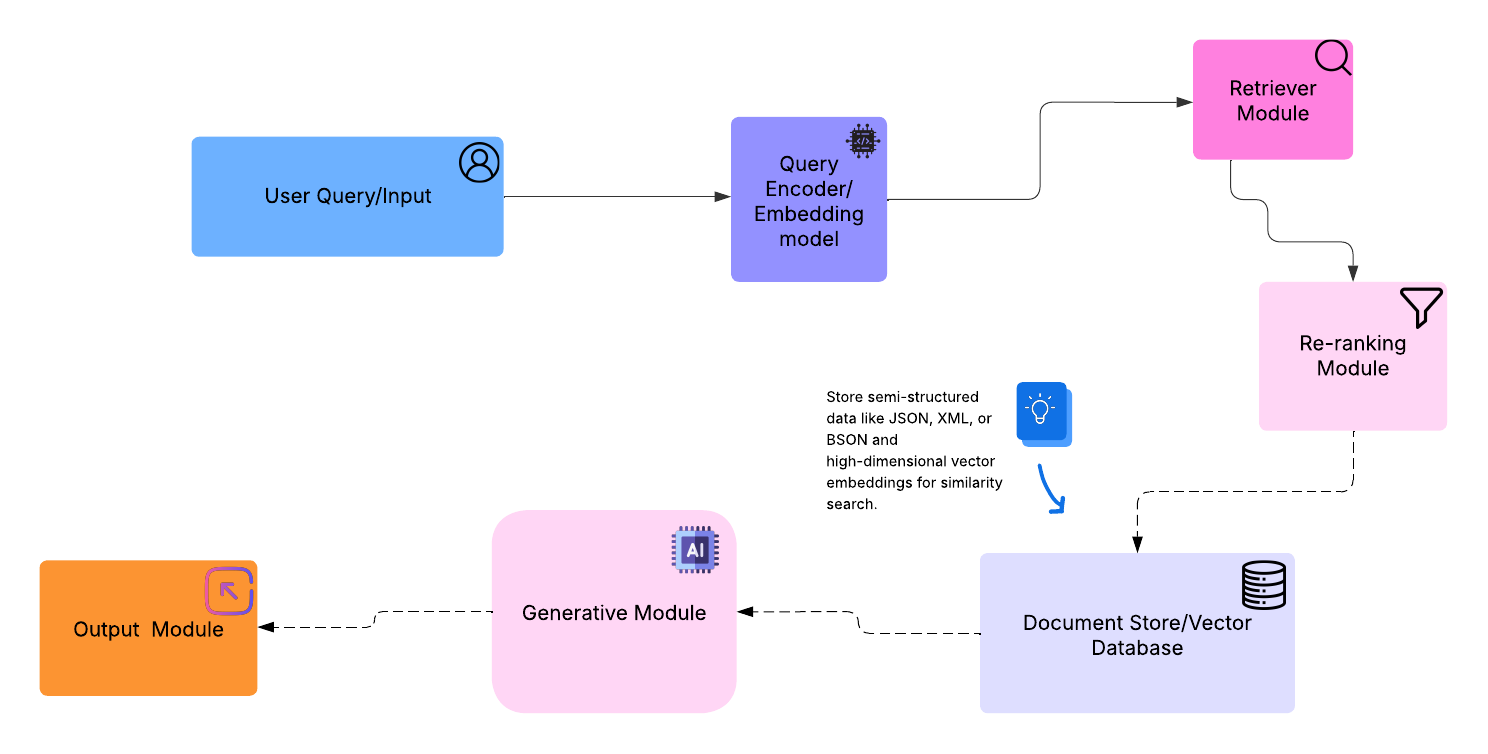

The figure above gives a visual breakdown of a typical Retrieval-Augmented Generation (RAG) architecture.

Document Ingestion

Legal Brief Companion supports ingestion of multiple document formats including .pdf, .docx, .txt, and .md. The ingestion pipeline is modular, transparent, and optimized for legal workflows.

Ingestion Workflow

1. File Loader

- Uses LangChain’s UnstructuredFileLoader, PyMuPDFLoader, and TextLoader depending on file type.

- Converts documents into plain text while preserving semantic structure (e.g., headings, paragraphs).

2. Text Chunking

- Applies RecursiveCharacterTextSplitter to break text into manageable chunks (default: 500 characters with 50-character overlap).

- Ensures semantic continuity across chunk boundaries for better retrieval accuracy.

3. Embedding Generation

- Uses sentence-transformers/all-MiniLM-L6-v2 to convert chunks into dense vector representations.

- Embeddings are cached and stored in ChromaDB for fast, persistent retrieval.

4. Metadata Tagging

- Each chunk is tagged with source filename, page number (if applicable), and timestamp.

- Enables traceability and citation toggling in responses.

5. Persistence

- Vector store is saved in chroma_db/ for reuse across sessions.

- Supports incremental ingestion and re-indexing without reprocessing entire corpora.
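The chunking and metadata tagging steps above can be sketched in plain Python. This is a minimal illustration, not the project's code: it uses a simple fixed-size sliding window in place of LangChain's RecursiveCharacterTextSplitter (which additionally prefers to split on paragraph and sentence separators), and the function names `chunk_text` and `tag_chunks`, as well as the record layout, are assumptions made for the example.

```python
from datetime import datetime, timezone


def chunk_text(text, chunk_size=500, overlap=50):
    """Split text into fixed-size windows that share `overlap` characters."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


def tag_chunks(chunks, source, page=None):
    """Attach source, page, and an ingestion timestamp to each chunk."""
    timestamp = datetime.now(timezone.utc).isoformat()
    return [
        {
            "chunk_id": f"{source}_chunk_{i:02d}",  # hypothetical id scheme
            "source": source,
            "text": chunk,
            "metadata": {"page": page, "timestamp": timestamp},
        }
        for i, chunk in enumerate(chunks, start=1)
    ]


records = tag_chunks(chunk_text("x" * 1200), source="example.txt", page=1)
print(len(records), [len(r["text"]) for r in records])
```

With the defaults, a 1,200-character input yields three chunks of 500, 500, and 300 characters, and each chunk starts with the last 50 characters of the previous one.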

Example Ingestion Output

    {
      "chunk_id": "doc_001_chunk_03",
      "source": "case_law_ethiopia_2022.pdf",
      "text": "The court held that...",
      "embedding": [0.123, -0.456, ...],
      "metadata": {
        "page": 3,
        "timestamp": "2025-10-21T09:00:00Z"
      }
    }

Modularization Tips

- Swap loaders based on domain (e.g., PDFMinerLoader for scanned rulings).

- Add preprocessing hooks for redaction or anonymization.

- Enable ingestion logging for audit trails in legal workflows.

- Integrate citation toggles to surface source metadata in responses.
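As one way to picture the preprocessing hooks mentioned above, the sketch below chains small text-to-text functions before chunking. The regular expressions and the names `redact_emails`, `redact_phones`, and `preprocess` are hypothetical and deliberately simple; real redaction rules for legal documents would need to be far more careful.

```python
import re

# Hypothetical patterns for the example; real redaction rules would be
# defined per jurisdiction and document type.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s-]{7,}\d")


def redact_emails(text):
    """Replace anything that looks like an email address."""
    return EMAIL.sub("[REDACTED EMAIL]", text)


def redact_phones(text):
    """Replace digit runs that look like phone numbers."""
    return PHONE.sub("[REDACTED PHONE]", text)


def preprocess(text, hooks):
    """Run each hook in order; every hook maps text to text."""
    for hook in hooks:
        text = hook(text)
    return text


clean = preprocess(
    "Contact counsel at a.lawyer@example.com or +251 11 555 0100.",
    hooks=[redact_emails, redact_phones],
)
print(clean)
```

Because every hook has the same signature, hooks can be added, removed, or reordered per deployment without touching the rest of the ingestion pipeline.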

Prompting Strategy & Hallucination Mitigation

Legal Brief Companion uses a layered prompting approach to ensure responses are accurate, grounded, and context-aware. This is especially important in legal workflows, where hallucinations can lead to misinformation or misinterpretation.

Prompt Construction

System Prompt: Defines assistant behavior, tone, and citation expectations.

User Query: Captured verbatim and embedded using sentence-transformers/all-MiniLM-L6-v2.

Context Injection: Retrieved document chunks are appended with metadata (source, page, timestamp).

Prompt Template:

You are a legal assistant. Answer the following question using only the provided context.

If the context is insufficient, say so. Cite sources when possible.

Context:

[retrieved_chunks]

Question:

[user_query]

This structure discourages unsupported speculation and encourages citation-backed responses.
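A minimal sketch of how the template could be filled in Python follows. The helper names `format_chunk` and `build_prompt` are assumptions, and the chunk dictionaries are assumed to follow the layout shown in the example ingestion output (`source`, `text`, `metadata.page`).

```python
PROMPT_TEMPLATE = (
    "You are a legal assistant. Answer the following question using only "
    "the provided context.\n"
    "If the context is insufficient, say so. Cite sources when possible.\n\n"
    "Context:\n{context}\n\n"
    "Question:\n{question}"
)


def format_chunk(chunk):
    """Render one retrieved chunk with its source metadata as a header."""
    page = chunk.get("metadata", {}).get("page")
    if page is None:
        location = chunk["source"]
    else:
        location = f"{chunk['source']}, page {page}"
    return f"[{location}]\n{chunk['text']}"


def build_prompt(question, chunks):
    """Inject the retrieved chunks and the verbatim query into the template."""
    context = "\n\n".join(format_chunk(c) for c in chunks)
    return PROMPT_TEMPLATE.format(context=context, question=question)


prompt = build_prompt(
    "What precedent did the court rely on?",
    [
        {
            "source": "case_law_ethiopia_2022.pdf",
            "text": "The court held that...",
            "metadata": {"page": 3},
        }
    ],
)
print(prompt)
```

Placing the source and page in a header above each chunk gives the model something concrete to cite, which is what makes the "cite sources when possible" instruction workable.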

Hallucination Mitigation Techniques

| Technique | Description |
|---|---|
| Context-only grounding | LLM is instructed to answer only from retrieved chunks. |
| Chunk relevance filtering | Top-k chunks are scored for semantic similarity before inclusion. |
| Citation toggles | Metadata (filename, page) is optionally surfaced in the response. |
| Fallback logic | If the retrieved context is insufficient, the assistant says so instead of guessing. |
| Model selection | Groq is preferred for deterministic, low-latency responses. Gemini fallback planned. |
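Chunk relevance filtering and fallback logic can be combined in a few lines. The sketch below is illustrative only: the threshold `min_score=0.3`, the limit `k=4`, the fallback wording, and the `generate` callable (a stand-in for the actual LLM call) are all assumptions, not values taken from the project.

```python
FALLBACK_MESSAGE = (
    "The provided context does not contain enough information to answer."
)


def filter_relevant(scored_chunks, k=4, min_score=0.3):
    """Keep at most k chunks whose similarity score reaches min_score.

    scored_chunks: iterable of (chunk_text, similarity) pairs, where a
    higher similarity means a closer match to the query.
    """
    kept = [pair for pair in scored_chunks if pair[1] >= min_score]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:k]


def answer_or_fallback(question, scored_chunks, generate):
    """Call the LLM only when at least one chunk passes the filter."""
    relevant = filter_relevant(scored_chunks)
    if not relevant:
        return FALLBACK_MESSAGE
    context = "\n\n".join(text for text, _ in relevant)
    return generate(question, context)


# Stub generator so the example runs without an API key.
def echo_generate(question, context):
    return f"Answer to {question!r} based on {len(context)} context characters"


print(answer_or_fallback("Who appealed?", [("unrelated text", 0.05)], echo_generate))
```

Returning the fallback before the model is called means a query with no relevant context never reaches the LLM, so there is nothing for it to speculate about.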

Example Response Behavior

Query: “What precedent did the court rely on?”

Retrieved Context: Includes case summary from page 4 of case_law_ethiopia_2022.pdf

Response: “The court relied on the precedent established in State v. Abebe, as noted on page 4 of the uploaded document.”

If no relevant precedent is found:

“The provided context does not include any cited precedent.”

Modularization Tips

- Add a toggle for “strict context mode” vs. “suggestive mode” for exploratory queries.

- Log prompt inputs and outputs for auditability in sensitive workflows.

- Consider integrating citation tracebacks for courtroom-grade transparency.

Memory Handling & Reasoning Mechanism

Legal Brief Companion is designed to reason over domain-specific content with contextual precision. While it does not persist long-term memory across sessions, it uses session-level memory and retrieval-based reasoning to simulate continuity and support multi-turn interactions.

Reasoning Mechanism

The assistant uses a retrieval-augmented reasoning loop that combines semantic search with prompt-based logic:

1. Query Embedding

- User input is embedded using sentence-transformers/all-MiniLM-L6-v2.

2. Context Retrieval

- Top-k relevant chunks are retrieved from ChromaDB using cosine similarity.

3. Prompt Construction

- Retrieved context is injected into a structured prompt template that guides the LLM to reason only from available evidence.

4. LLM Response Generation

- Groq generates a response that is coherent, grounded, and optionally cites source metadata.

5. Session Memory Simulation

- Previous queries and responses are cached in memory for multi-turn context chaining.
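To make the retrieval step concrete, here is a brute-force version of top-k search by cosine similarity. It is a sketch under stated assumptions: a vector store such as ChromaDB performs this search through its own index rather than a linear scan, the three-dimensional vectors are toy values (all-MiniLM-L6-v2 produces 384-dimensional embeddings), and the names `cosine_similarity` and `top_k` are chosen for the example.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # treat a zero vector as unrelated to everything
    return dot / (norm_a * norm_b)


def top_k(query_vec, index, k=3):
    """Rank (chunk_id, vector) pairs by similarity and keep the best k."""
    scored = [(cid, cosine_similarity(query_vec, vec)) for cid, vec in index]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy index: chunk ids mapped to made-up embedding vectors.
index = [
    ("ruling", [1.0, 0.0, 0.0]),
    ("precedent", [0.6, 0.8, 0.0]),
    ("costs", [0.0, 0.0, 1.0]),
]
print(top_k([0.0, 1.0, 0.0], index, k=2))
```

The query vector here points mostly in the same direction as the "precedent" chunk, so that chunk ranks first; chunks with a similarity of zero share no direction with the query at all.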

Example Reasoning Flow

Turn 1: “Summarize the ruling in case A.” → Retrieves the ruling from page 3 of case_A.pdf → Generates a summary.

Turn 2: “Did the court cite precedent?” → Uses cached context from Turn 1 plus new retrieval → Responds with citation details.

Modularization Tips

- Add a context window manager to control how many past turns are included in each prompt.

- Use retrieval chaining to simulate reasoning across multiple documents or jurisdictions.

- Integrate source tracebacks to show which chunks influenced the final response.

- Consider adding a reasoning log for auditability in legal workflows.
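The context window manager suggested above could be as small as a bounded queue of past turns. The class name `SessionMemory`, the default of three turns, and the history format are assumptions made for this sketch.

```python
from collections import deque


class SessionMemory:
    """Keep only the most recent turns for inclusion in the next prompt."""

    def __init__(self, max_turns=3):
        # A deque with maxlen silently discards the oldest turn when full.
        self._turns = deque(maxlen=max_turns)

    def add_turn(self, question, answer):
        self._turns.append((question, answer))

    def as_history(self):
        """Format the retained turns as plain text for the prompt."""
        return "\n".join(
            f"User: {q}\nAssistant: {a}" for q, a in self._turns
        )


memory = SessionMemory(max_turns=2)
memory.add_turn("Summarize the ruling in case A.", "The court held that...")
memory.add_turn("Did the court cite precedent?", "Yes, on page 4.")
memory.add_turn("Who appealed?", "The context does not say.")
print(memory.as_history())
```

Capping the number of turns keeps the prompt within the model's context limit; since the memory lives in a Python object, it disappears when the session ends, matching the session-level behavior described earlier.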

Technical Architecture

Core Components

| Component | Tool used |
|---|---|
| Embeddings | sentence-transformers/all-MiniLM-L6-v2 |
| Vector Database | ChromaDB |
| Language Model | Groq |
| Development | Python, Streamlit (UI) |

Key Features

- Supports multiple document formats: .pdf, .docx, .txt, .md

- Uses ChromaDB for vector storage and retrieval

- Embeddings generated via Hugging Face’s sentence-transformers

- Compatible with OpenAI, Groq, and Google Gemini LLMs

- Modular and API-ready architecture

- Environment-driven model selection via .env file
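Environment-driven model selection might look like the following sketch. The variable names (`LLM_PROVIDER`, `GROQ_API_KEY`, `OPENAI_API_KEY`, `GOOGLE_API_KEY`) and the default of `groq` are assumptions for illustration; the project's actual .env keys are not documented here. A package such as python-dotenv can load the .env file into the process environment before this code runs.

```python
import os

# Hypothetical mapping from provider name to the variable holding its key.
SUPPORTED_PROVIDERS = {
    "groq": "GROQ_API_KEY",
    "openai": "OPENAI_API_KEY",
    "gemini": "GOOGLE_API_KEY",
}


def select_provider(env=None):
    """Return (provider, api_key) based on environment variables."""
    env = os.environ if env is None else env
    provider = env.get("LLM_PROVIDER", "groq").lower()
    if provider not in SUPPORTED_PROVIDERS:
        raise ValueError(f"Unsupported LLM_PROVIDER: {provider!r}")
    key_name = SUPPORTED_PROVIDERS[provider]
    api_key = env.get(key_name)
    if not api_key:
        raise RuntimeError(f"{key_name} is not set")
    return provider, api_key


# Passing a dict instead of os.environ keeps the example self-contained.
provider, _ = select_provider({"LLM_PROVIDER": "groq", "GROQ_API_KEY": "example-key"})
print(provider)
```

Failing fast on a missing key or unknown provider surfaces configuration mistakes at startup rather than on the first user query.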

Pipeline Overview:

How It Works

- Document Loading: Reads and converts documents into plain text.

- Vector Embedding: Splits text into chunks, embeds them, and stores them in ChromaDB.

- Querying: Retrieves relevant chunks and sends them with the query to the selected LLM for response generation.

- Retriever: Converts user queries into vector embeddings using sentence-transformers/all-MiniLM-L6-v2, then searches the ChromaDB index for the top-k relevant document chunks.

- Generator: Combines the query and retrieved chunks and sends them to Groq to generate a coherent, fact-grounded response.

Example Interaction

User Query: “Could you summarize this case?”

Response: A concise, context-aware summary grounded in the uploaded legal document.

Project Structure Highlights

- LEGAL_BRIEF_COMPANION/app.py: Main logic for document loading and LLM pipeline

- LEGAL_BRIEF_COMPANION/retrieval: Handles embeddings and retrieval

- data/: Folder for source documents

- chroma_db/: Persistent vector database

- .env: API keys and model configs

Installation & Usage

Requirements:

- Python 3.8+

- Streamlit

- ChromaDB

- Sentence Transformers

- Groq API Key

Setup Instructions

- Clone the repo and create a virtual environment.

- Install dependencies from requirements.txt, or via Poetry using pyproject.toml.

- Add API keys and model settings in a .env file.

- Run the assistant using poetry run streamlit run app.py

Limitations & Trade-offs

- Retrieval precision may degrade with vague queries

- Performance depends on document quality (e.g., OCR accuracy)

- Currently optimized for English-language legal texts

Future Directions

- UI modularization for courtroom-grade deployment (users upload the case documents they want to work with)

- Citation toggles and source traceability

- Gemini integration for multilingual support

- Fine-tuning for jurisdiction-specific legal language

Conclusion

Legal Brief Companion demonstrates the power of modular design in building domain-specific AI assistants. By integrating Retrieval-Augmented Generation (RAG) with LangChain, ChromaDB, and Groq, the assistant delivers context-aware, citation-ready responses tailored to legal workflows.

This project showcases:

- A reproducible ingestion pipeline for multi-format legal documents

- Vector-based retrieval for precision and traceability

- Prompt engineering and hallucination mitigation for courtroom-grade reliability

- Session-level memory and reasoning mechanisms for multi-turn interactions

- UI modularization plans for user-driven document selection and citation toggling

License

MIT License: free to use, modify, and extend.