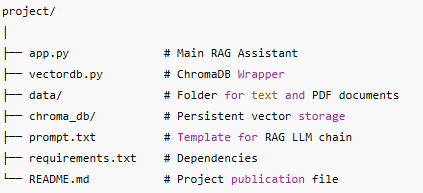

RAG-Based AI Assistant Using ChromaDB and Multi-Model LLM Integration

Abstract

This project presents a Retrieval-Augmented Generation (RAG) Assistant that combines local knowledge retrieval with reasoning from advanced large language models (LLMs).

The system integrates multiple providers — OpenAI, Groq, and Google Gemini — ensuring flexibility, cost-efficiency, and fault tolerance.

At its core, the assistant uses ChromaDB as a persistent vector database to store and retrieve semantically meaningful document chunks.

Users can query their custom knowledge base or ask general questions directly to the LLM, enabling both factual precision and open-domain intelligence.

System Overview

The RAGAssistant class serves as the main orchestrator of the system. It:

Detects available API keys and dynamically selects an LLM provider (OpenAI, Groq, or Gemini).

Loads and processes text and PDF documents automatically from the data/ directory.

Uses LangChain’s ChatPromptTemplate and StrOutputParser to create a flexible LLM chain.

Provides two modes of interaction:

/chat: for general conversational queries.

Standard input: for RAG-based, knowledge-grounded responses.
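The key detection and mode routing described above can be sketched as follows. This is a minimal illustration rather than the project's actual code: the environment variable names (`OPENAI_API_KEY`, `GROQ_API_KEY`, `GOOGLE_API_KEY`), the priority order, and the helper names `select_provider` and `route_input` are all assumptions, as is the idea that `/chat` can carry the message on the same line.

```python
import os
from typing import Mapping, Optional, Tuple

# Assumed environment variable names, checked in this (assumed) priority order.
PROVIDER_KEYS = (
    ("openai", "OPENAI_API_KEY"),
    ("groq", "GROQ_API_KEY"),
    ("gemini", "GOOGLE_API_KEY"),
)


def select_provider(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the first provider whose API key is set and non-empty."""
    env = os.environ if env is None else env
    for provider, key_name in PROVIDER_KEYS:
        if env.get(key_name, "").strip():
            return provider
    raise RuntimeError("No API key found for OpenAI, Groq, or Gemini.")


def route_input(user_input: str) -> Tuple[str, str]:
    """Split raw CLI input into (mode, text).

    Input starting with "/chat" goes to the general chat mode; anything
    else is treated as a knowledge-grounded (RAG) query.
    """
    text = user_input.strip()
    if text == "/chat" or text.startswith("/chat "):
        return "chat", text[len("/chat"):].strip()
    return "rag", text
```

Passing the environment as an argument keeps the selection logic easy to test without touching real credentials.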

The VectorDB class encapsulates ChromaDB operations and text preprocessing. It:

Initializes a persistent ChromaDB collection (./chroma_db).

Splits text into manageable chunks using RecursiveCharacterTextSplitter.

Extracts and attaches detailed metadata (file name, creation date, page number, etc.).

Adds text chunks to the ChromaDB collection with unique IDs and traceable metadata.

Handles semantic search using Chroma’s internal similarity-based query engine.
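The chunking, metadata, and ID steps listed above can be illustrated with a small standard-library sketch. It uses a plain sliding window with overlap as a simplified stand-in for RecursiveCharacterTextSplitter, which additionally prefers to split on separators such as paragraph breaks. The function names, the ID scheme, and the metadata fields shown here are hypothetical.

```python
import hashlib
from typing import Dict, List


def chunk_text(text: str, chunk_size: int = 800, overlap: int = 100) -> List[str]:
    """Split text into fixed-size windows that overlap by `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("require chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks


def build_records(text: str, source: str, page: int = 1, **chunk_kwargs) -> Dict[str, list]:
    """Build parallel lists of ids, documents, and metadatas for one document page."""
    ids, documents, metadatas = [], [], []
    for index, chunk in enumerate(chunk_text(text, **chunk_kwargs)):
        # Hypothetical ID scheme: source, page, chunk index, and a short content hash.
        digest = hashlib.sha1(chunk.encode("utf-8")).hexdigest()[:8]
        ids.append(f"{source}-p{page}-c{index}-{digest}")
        documents.append(chunk)
        metadatas.append({"source": source, "page": page, "chunk_index": index})
    return {"ids": ids, "documents": documents, "metadatas": metadatas}
```

The returned dictionary uses the same keyword names (`ids`, `documents`, `metadatas`) that a Chroma collection's `add` method accepts, so it could be passed along as `collection.add(**records)`.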

Key Features

🔁 Multi-LLM Flexibility: Automatically selects between OpenAI, Groq, and Google Gemini APIs based on available credentials.

📄 Cross-Format Document Support: Reads both .txt and .pdf files, extracting structured content and metadata.

💾 Persistent Knowledge Base: Stores embeddings locally using ChromaDB, so the index can be reused across sessions without re-ingesting the documents.

⚙️ Modular Architecture: Clear separation between ingestion, retrieval, and generation logic for easier maintenance.

💬 Interactive CLI Interface: Guides users through query and chat operations with color-coded feedback.

Workflow Summary

Document Ingestion

Files in the data/ directory are automatically read, parsed, and categorized.

Text Chunking & Metadata Extraction

Each document is split into chunks (≈500–1000 characters), and metadata is attached for contextual recall.

Vectorization & Storage

Each chunk is embedded, and ChromaDB stores the resulting vectors persistently for future retrieval.

Query Handling

User queries are embedded into the same vector space as the stored chunks, and the most similar chunks are retrieved.

Response Generation

Relevant chunks are passed to the LLM, which synthesizes a natural-language answer via a structured RAG prompt.
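The query handling and response generation steps can be sketched in plain Python. This is an illustration of the idea, not Chroma's internal query engine: cosine similarity is assumed here, whereas the metric Chroma actually uses depends on how the collection is configured. The toy vectors, the prompt wording, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(
    query_vec: Sequence[float],
    stored: List[Tuple[str, Sequence[float]]],
    k: int = 2,
) -> List[str]:
    """Return the texts of the k stored chunks most similar to the query."""
    ranked = sorted(
        stored,
        key=lambda item: cosine_similarity(query_vec, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_rag_prompt(question: str, chunks: List[str]) -> str:
    """Assemble a structured prompt that grounds the answer in retrieved chunks."""
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )
```

In the real pipeline the retrieved chunks would come from a ChromaDB query and the assembled prompt would be sent to the selected LLM; numbering the chunks in the context makes it easier to trace an answer back to its source.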

Applications

🤖 Intelligent Knowledge Assistants for research, legal, or educational domains.

🧠 Domain-Specific Chatbots that answer from custom document sets.

🔍 Research Retrieval Tools that combine vector similarity with natural reasoning.

🏢 Enterprise Knowledge Systems where secure, local document retrieval is essential.

Future Scope

Integration with custom embedding models for domain adaptation.

Development of a web-based interface (GUI) for non-technical users.

Addition of multi-user session handling and access control.

Real-time document synchronization and background reindexing.

Technical Highlights

| Component | Technology Used | Description |
| --- | --- | --- |
| LLM Interface | LangChain + OpenAI / Groq / Google Gemini | Dynamically selects available API key |
| Vector Database | ChromaDB | Persistent vector storage and retrieval |
| Text Chunking | LangChain RecursiveCharacterTextSplitter | Overlap-based document segmentation |
| Document Parsing | PyPDF2 | Page-level PDF parsing with metadata |
| Orchestration | Custom Python Classes | Modular, extendable RAG pipeline |

Conclusion

The RAG-Based AI Assistant exemplifies how retrieval and generation can merge into a unified AI workflow — combining the precision of vector search with the fluency of large language models.

Its modular structure, local persistence, and multi-provider compatibility make it ideal for both academic learning and enterprise deployment.

The project demonstrates a practical understanding of retrieval-augmented AI systems, aligning with Ready Tensor’s goal of advancing real-world machine intelligence capabilities.