Abstract

This project explores the task of grocery product classification using images captured with smartphones under natural conditions. The primary objective is to develop a robust machine learning pipeline that can effectively categorize grocery items into predefined classes. To accomplish this, two distinct methodologies were employed:

- A neural network was built and trained from scratch to perform the classification task.

- A pretrained neural network was fine-tuned using transfer learning to adapt it to the dataset at hand.

The dataset used in this project comprises 43 product categories covering fruits, vegetables, and packaged goods. The work aims to provide a reliable and scalable approach to automated grocery product recognition, with potential applications in retail and inventory management.

Methodology

Dataset

The dataset is the backbone of this project and consists of high-quality images of grocery products in realistic settings. The images were captured under varied lighting conditions, which gives the dataset diversity and complexity. They are also augmented with rotations and crops to improve model performance.

First Neural Network from scratch

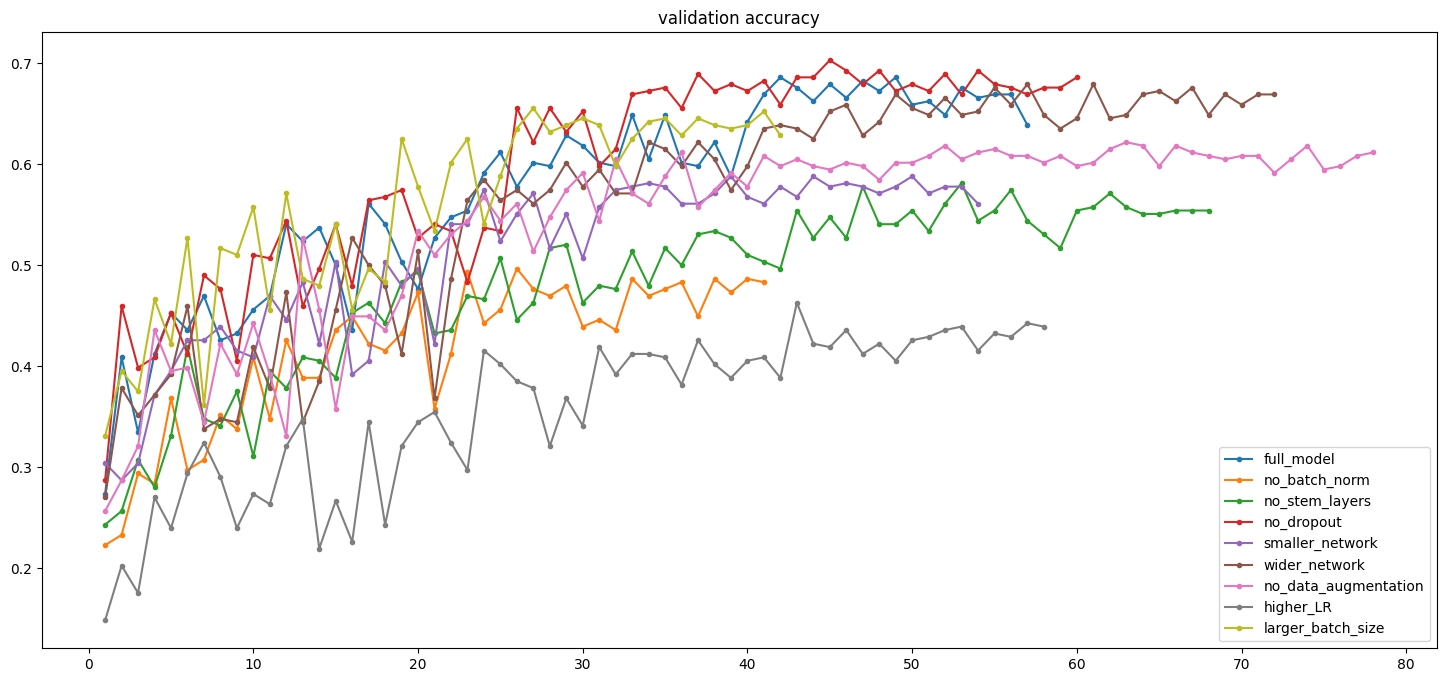

In the first approach, a VGG-inspired Convolutional Neural Network (CNN) was designed and implemented from scratch to classify the product images. The architecture was designed to extract meaningful features from the input images, which are then used to predict the corresponding product class. Each component needed to build the model is described and analysed, including how it affects overall performance. The figure above shows how accuracy progressed with each successive training configuration.

The effects of each design choice are explained in detail in the attached notebook.
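For readers without the notebook at hand, a VGG-inspired network of this kind might look like the sketch below. The exact architecture is not given in this report, so the channel widths, the number of convolutions per block, the use of batch normalization and dropout, and the class name `SmallVGG` are all assumptions made for illustration.

```python
import torch
from torch import nn

NUM_CLASSES = 43  # number of product categories stated in the report


def vgg_block(in_channels: int, out_channels: int, num_convs: int) -> nn.Sequential:
    """Stack of 3x3 convolutions followed by 2x2 max pooling, in the VGG style."""
    layers = []
    for i in range(num_convs):
        layers += [
            nn.Conv2d(in_channels if i == 0 else out_channels, out_channels,
                      kernel_size=3, padding=1),
            nn.BatchNorm2d(out_channels),  # assumption: not part of the original VGG design
            nn.ReLU(inplace=True),
        ]
    layers.append(nn.MaxPool2d(kernel_size=2, stride=2))  # halves the spatial size
    return nn.Sequential(*layers)


class SmallVGG(nn.Module):
    """Hypothetical compact VGG-style classifier; widths are illustrative."""

    def __init__(self, num_classes: int = NUM_CLASSES, widths=(32, 64, 128)):
        super().__init__()
        blocks, in_ch = [], 3
        for width in widths:
            blocks.append(vgg_block(in_ch, width, num_convs=2))
            in_ch = width
        self.features = nn.Sequential(*blocks)
        # Global average pooling keeps the parameter count low compared with
        # the large fully connected layers of the original VGG.
        self.classifier = nn.Sequential(
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),
            nn.Dropout(0.3),
            nn.Linear(in_ch, num_classes),
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))
```

Because of the adaptive pooling layer, the sketch accepts inputs of different spatial sizes and always returns one logit per class.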

Fine-Tuning ResNet18

The second approach leveraged transfer learning by employing the pretrained version of ResNet18 from PyTorch’s model zoo. This method involved using a network that had already been trained on a large dataset, such as ImageNet, and fine-tuning its parameters to adapt it to the grocery classification task. By doing so, the pretrained model's rich feature representations, learned from a diverse set of images, could be repurposed for this specific application. During the fine-tuning process, the final layers of the network were modified to match the number of classes in the grocery dataset, while earlier layers were either frozen or adjusted to retain the pretrained knowledge.

This process was repeated with two sets of hyperparameters: those used for the first approach, and a set tuned specifically for fine-tuning, inspired by the paper "Rethinking the hyperparameters for fine-tuning" (Li, Hao, et al., 2020). The results are shown in the following table (further details are in the notebook).

| | validation accuracy | validation loss |
|---|---|---|
| base_hyperparameters | 0.847973 | 0.625091 |
| tweak_hyperparameters | 0.915541 | 0.308289 |
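Validation accuracy and loss figures like those in the table are typically computed with a loop such as the one below. This is a sketch under assumptions: the report does not name the loss function, so cross-entropy is assumed, and `evaluate` is a hypothetical helper rather than the notebook's own code.

```python
import torch
from torch import nn


@torch.no_grad()
def evaluate(model: nn.Module, loader, device: str = "cpu"):
    """Return (accuracy, mean loss) of `model` over all batches in `loader`."""
    model.eval()
    # Sum per-sample losses so the final mean is exact even if the last batch is smaller.
    criterion = nn.CrossEntropyLoss(reduction="sum")  # assumed loss function
    total_loss, correct, count = 0.0, 0, 0
    for images, labels in loader:
        images, labels = images.to(device), labels.to(device)
        logits = model(images)
        total_loss += criterion(logits, labels).item()
        correct += (logits.argmax(dim=1) == labels).sum().item()
        count += labels.size(0)
    return correct / count, total_loss / count
```

Any iterable of `(images, labels)` batches works as `loader`, including a `torch.utils.data.DataLoader` built on the validation split.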

Results

The custom CNN provided a solid baseline for the classification task and performed well given its low number of parameters. However, its results also pointed to areas where deeper feature extraction could yield improvements. The fine-tuned ResNet18 achieved significantly higher accuracy and robustness across product categories, reaching a validation accuracy of 0.916 with the tuned hyperparameters. Transfer learning let the model draw on pretrained knowledge, which reduced the need for extensive training on the grocery dataset.

These findings suggest that leveraging pretrained models can significantly enhance performance and efficiency, especially when working with specialized datasets. There are several avenues for future research and development based on this project. One potential direction is to expand the dataset further, either by collecting more images or by employing data augmentation techniques to generate synthetic variations of the existing images. This could help improve the model's robustness and ability to handle edge cases.