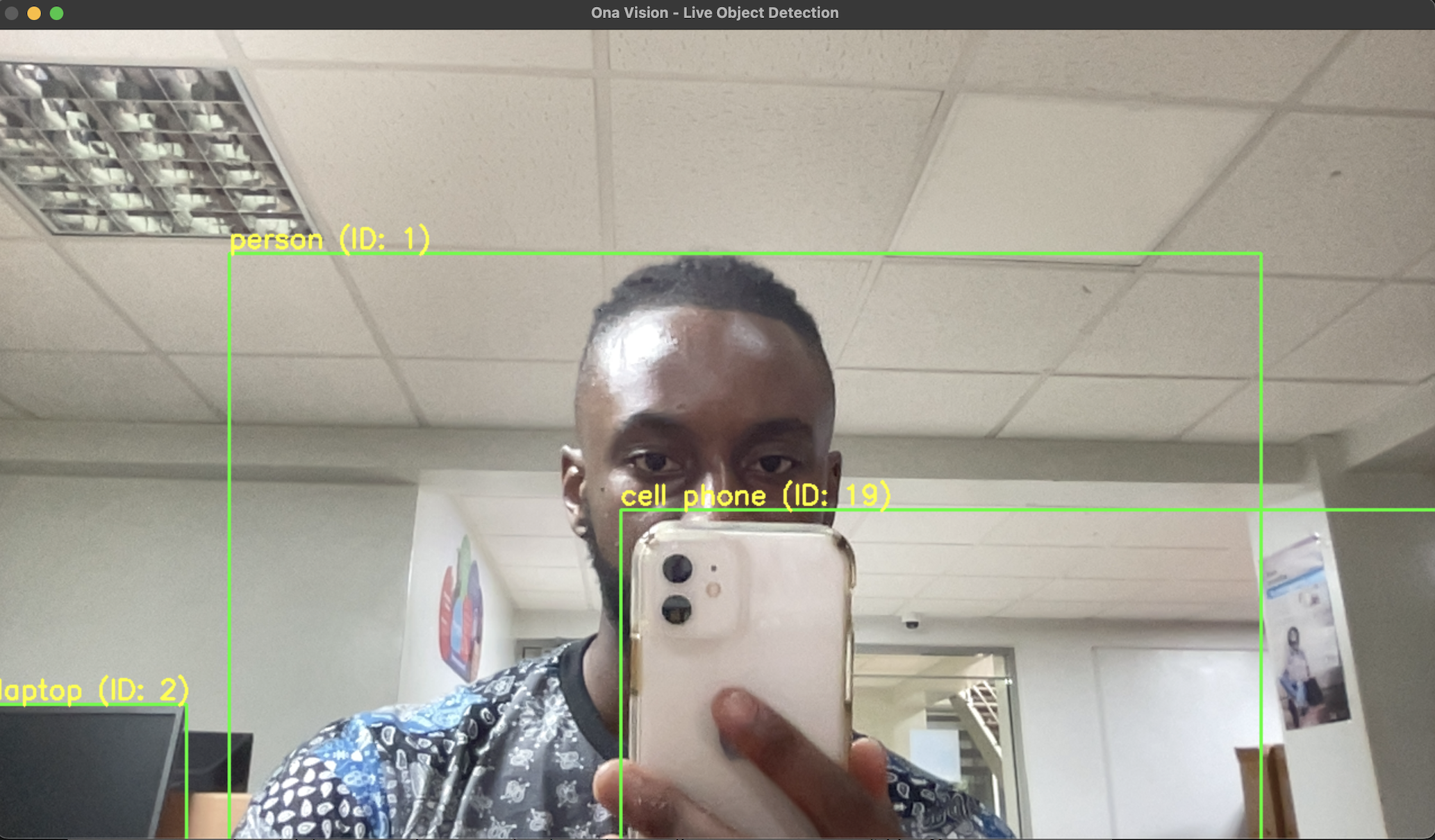

Ona Vision 🎯 — Real-Time Object Detection & Monitoring with YOLOv8, DeepSORT, and MLOps

Overview

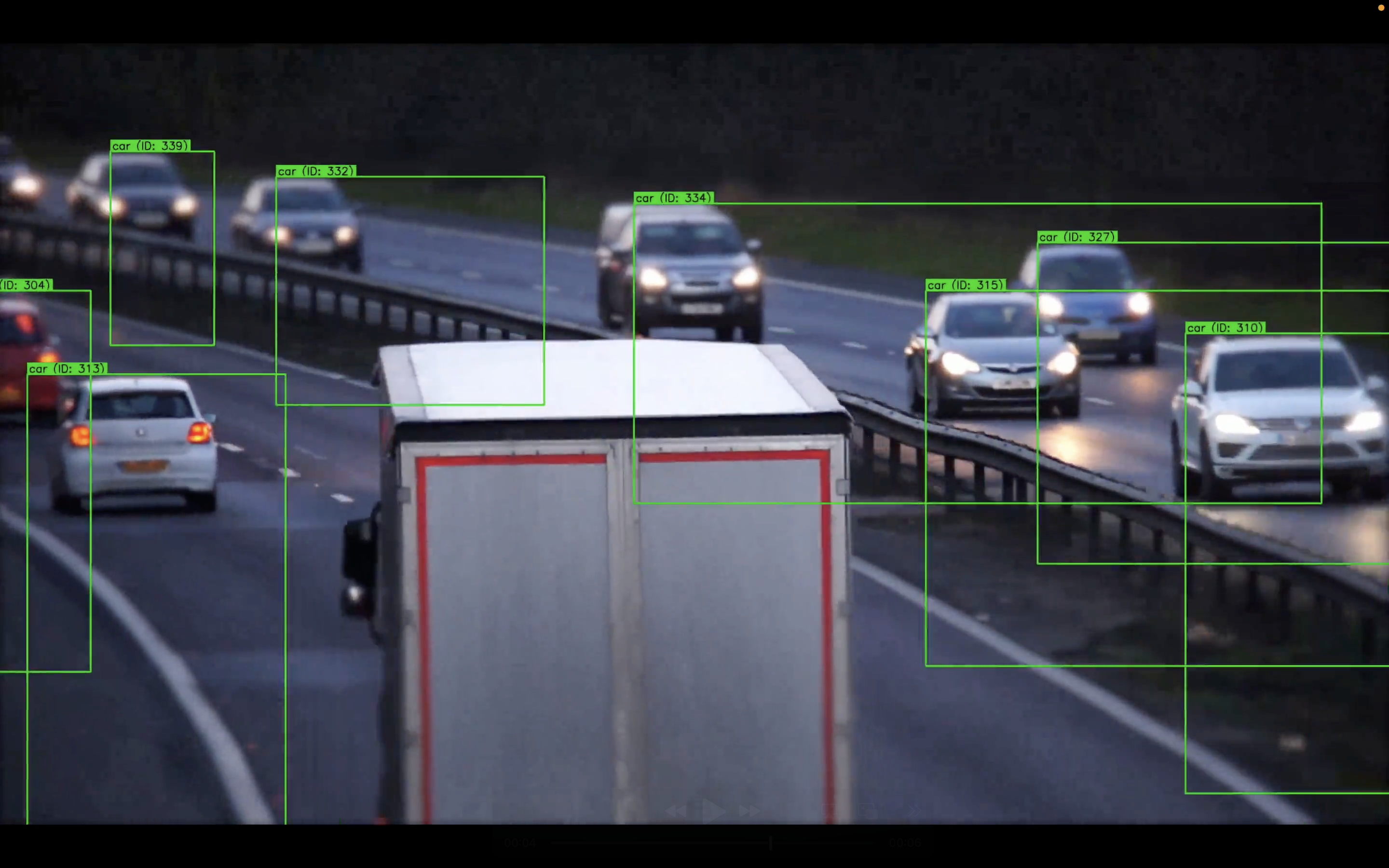

Ona Vision is a real-time object detection and tracking system built using YOLOv8 and DeepSORT. It captures live video, detects objects, tracks them across frames, and streams processed video over the network. The system integrates observability using Prometheus to monitor performance metrics like FPS, inference time, and resource usage.

Designed as a full MLOps pipeline, Ona Vision covers model training, deployment, scaling, and monitoring. It leverages Docker, Kubernetes, and GitOps principles to ensure scalability and reliability for production environments.

The Inspiration

Ona Vision was inspired by a personal experience where I lost my AirPods and had to manually sift through hours of CCTV footage—an inefficient and frustrating process. This project aims to automate and simplify video surveillance by enabling real-time detection and tracking of specific objects, making environments safer and security systems smarter.

Key Features

- State-of-the-art detection with YOLOv8

- Multi-object tracking using DeepSORT for consistent IDs and reliable re-identification

- Real-time video processing and network streaming with Python sockets

- Observability and monitoring via Prometheus (FPS, inference time, CPU/memory usage)

- Web UI dashboard built with Flask for easy visualization and control
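To make the "consistent IDs" idea concrete, here is a minimal sketch of frame-to-frame ID assignment. It is not DeepSORT: DeepSORT combines Kalman-filter motion prediction with appearance embeddings, while this simplified stand-in only matches boxes greedily by overlap (IoU). The class name `GreedyIouTracker`, the `(x1, y1, x2, y2)` box format, and the 0.3 threshold are assumptions made for illustration.

```python
from itertools import count


def iou(a, b):
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix2 - ix1) * max(0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Hypothetical, simplified tracker: greedy IoU matching only."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}              # track_id -> box seen in the previous frame
        self._next_id = count(1)

    def update(self, boxes):
        """Assign a track ID to each box; returns IDs in input order."""
        unmatched = dict(self.tracks)  # previous tracks not yet claimed
        new_tracks, ids = {}, []
        for box in boxes:
            best_id, best_iou = None, self.iou_threshold
            for track_id, prev_box in unmatched.items():
                score = iou(box, prev_box)
                if score >= best_iou:
                    best_id, best_iou = track_id, score
            if best_id is None:
                best_id = next(self._next_id)   # no overlap: start a new track
            else:
                del unmatched[best_id]          # each track matches at most once
            new_tracks[best_id] = box
            ids.append(best_id)
        self.tracks = new_tracks  # tracks missing from this frame are dropped
        return ids
```

Because unmatched tracks are dropped immediately, this sketch cannot re-identify an object after an occlusion. Handling that case is what the motion and appearance models in DeepSORT are for.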

How It Works

- The server captures frames from the webcam and runs YOLOv8 for object detection.

- Bounding boxes and labels are added to the frame.

- The processed frame is serialized and sent to the client over a socket connection.

- The client receives and displays the video in real-time.

- Prometheus metrics are collected in the background, tracking performance and inference statistics.
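The "serialize and send" step above can be sketched as follows. This is one common approach, not necessarily the one used in the repository: each serialized frame is prefixed with a 4-byte length header so the client knows where one frame ends and the next begins. The function names `send_frame` and `recv_frame` and the header layout are assumptions; the payload is treated as opaque bytes (for example, a JPEG-encoded frame).

```python
import socket
import struct

# Assumed framing: 4-byte big-endian unsigned length, then the payload.
HEADER = struct.Struct("!I")


def send_frame(sock: socket.socket, payload: bytes) -> None:
    """Send one serialized frame, prefixed with its length."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer than requested."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed mid-frame")
        buf.extend(chunk)
    return bytes(buf)


def recv_frame(sock: socket.socket) -> bytes:
    """Receive one length-prefixed frame and return its payload."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)
```

The length prefix matters because TCP is a byte stream: without it, a large frame can arrive split across several `recv()` calls, or two small frames can arrive merged into one.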

Architecture Diagram

    flowchart TD
        A[Webcam] --> B[Server: Capture Frames]
        B --> C[YOLOv8: Object Detection]
        C --> D[Add Bounding Boxes & Labels]
        D --> E[Serialize Processed Frame]
        E --> F[Send Frame over Socket]
        F --> G[Client: Receive & Display Video]
        B --> H[Prometheus Metrics Collection]
        H --> I[Performance & Inference Stats]
        style A fill:#dbe9ff,stroke:#333,stroke-width:1px,color:#000
        style B fill:#a3c4f3,stroke:#333,stroke-width:1px,color:#000
        style C fill:#8ccf7e,stroke:#333,stroke-width:1px,color:#000
        style D fill:#a3c4f3,stroke:#333,stroke-width:1px,color:#000
        style E fill:#a3c4f3,stroke:#333,stroke-width:1px,color:#000
        style F fill:#a3c4f3,stroke:#333,stroke-width:1px,color:#000
        style G fill:#f7c978,stroke:#333,stroke-width:1px,color:#000
        style H fill:#f4dbb7,stroke:#333,stroke-width:1px,color:#000
        style I fill:#f4dbb7,stroke:#333,stroke-width:1px,color:#000

Setup and Usage

    pip install -r requirements.txt
    python main.py      # Start detection server
    python client.py    # Display real-time video stream
    cd ui
    python app.py       # Launch Flask web UI at http://localhost:5000

Then visit http://localhost:5000 in your browser.

Observability Metrics

| Metric | Description |
|---|---|
| FPS | Frames per second for performance monitoring |
| Inference Time | Time taken for YOLOv8 inference per frame |
| CPU Usage | System CPU utilization during processing |
| Memory Usage | System memory consumption during processing |
| Detection Confidence | Average confidence of detected objects per frame |
| Class-wise Object Count | Tracks the number of detected objects per class |
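As a rough illustration of how several of these values could be computed before being handed to Prometheus, here is a minimal standard-library sketch. The names `FrameStats` and `summarize_detections`, the 30-frame window, and the `(class_name, confidence)` detection format are assumptions for this example, not the project's actual code.

```python
import time
from collections import Counter, deque
from typing import Optional


class FrameStats:
    """Rolling FPS and mean inference time over the last `window` frames."""

    def __init__(self, window: int = 30):
        self.timestamps = deque(maxlen=window)       # frame completion times (s)
        self.inference_times = deque(maxlen=window)  # per-frame inference (s)

    def record(self, inference_seconds: float, now: Optional[float] = None) -> None:
        """Record one processed frame; `now` can be injected for testing."""
        self.timestamps.append(time.perf_counter() if now is None else now)
        self.inference_times.append(inference_seconds)

    @property
    def fps(self) -> float:
        if len(self.timestamps) < 2:
            return 0.0
        elapsed = self.timestamps[-1] - self.timestamps[0]
        # N timestamps span N - 1 frame intervals.
        return (len(self.timestamps) - 1) / elapsed if elapsed > 0 else 0.0

    @property
    def mean_inference_ms(self) -> float:
        if not self.inference_times:
            return 0.0
        return 1000 * sum(self.inference_times) / len(self.inference_times)


def summarize_detections(detections):
    """Return (average confidence, per-class counts) for one frame.

    `detections` is a list of (class_name, confidence) pairs.
    """
    counts = Counter(name for name, _ in detections)
    avg = sum(conf for _, conf in detections) / len(detections) if detections else 0.0
    return avg, dict(counts)
```

With the `prometheus_client` library, values like these would typically be published by calling `set()` on a `Gauge`, with a label per class for the object counts.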

Repo

You can visit my repository at the link below.

https://github.com/josiah-mbao/Ona-Vision

To see the system in action, click the link below. The demo uses video footage from Pixabay and runs inference on Google Colab T4 GPUs.

🎥 Click here to view the demo video

Use Cases and Examples

Ona Vision is designed to provide real-time object detection and tracking for a variety of scenarios, making it a versatile tool for industries and individuals alike. Here are some example use cases:

Security and Surveillance

- Automate monitoring of restricted areas to detect unauthorized persons or objects

- Real-time tracking of suspicious activities in retail stores or public spaces

- Assist security personnel by highlighting detected objects and tracking their movement

Asset Tracking

- Monitor valuable equipment or assets in warehouses and construction sites

- Track the movement of vehicles or packages in logistics centers

Traffic and Transportation

- Detect and track vehicles or pedestrians at intersections for traffic analysis

- Monitor parking lots to identify available spots or detect unauthorized parking

Retail Analytics

- Analyze customer movement patterns and dwell times within stores

- Detect product interactions or shelf stock-outs

Smart Home and Personal Use

- Real-time detection of family members, pets, or personal belongings

- Enhance home security systems with intelligent object detection

Vision & Future Work

Ona Vision was born from a real and frustrating experience—losing my AirPods and spending hours scrubbing through CCTV footage at my university, only to walk away with nothing. That process felt deeply inefficient and made me wonder: With so many cameras deployed in campuses, businesses, and public spaces, are we really using that video data to its full potential?

The Vision

My long-term vision for Ona Vision is to enrich video data with insights and make it searchable—imagine being able to ask, "Show me all instances of a person picking up an AirPod on Tuesday between 2–4 PM." This would transform how we interact with surveillance footage, turning it into an intelligent, searchable asset instead of just a passive recording.
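A very small sketch of what "searchable" could mean in practice, assuming detections are logged as structured records. The `Detection` fields and the `search` function are hypothetical, and this covers only the object-type and time-window part of the example query; recognizing an action such as "picking up" would need more than per-frame detection.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Detection:
    """Hypothetical log record for one tracked object in one frame."""
    timestamp: datetime
    label: str       # detected class name, e.g. "person"
    track_id: int    # ID assigned by the tracker
    camera: str      # which feed the detection came from


def search(log, label, start, end):
    """Return detections of `label` with start <= timestamp < end."""
    return [d for d in log if d.label == label and start <= d.timestamp < end]


# Example: every "person" detection between 2 PM and 4 PM on a Tuesday.
log = [
    Detection(datetime(2024, 1, 2, 13, 59), "person", 1, "lobby"),
    Detection(datetime(2024, 1, 2, 14, 30), "person", 1, "lobby"),
    Detection(datetime(2024, 1, 2, 15, 10), "backpack", 2, "lobby"),
    Detection(datetime(2024, 1, 2, 16, 0), "person", 3, "lobby"),
]
hits = search(log, "person", datetime(2024, 1, 2, 14), datetime(2024, 1, 2, 16))
```

A linear scan like this is only reasonable for small logs. At the scale of many cameras, the same query would more likely run against a database indexed by timestamp and label.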

Potential Improvements

To move Ona Vision toward that goal, I’m focusing on a few key areas:

- Access to Scalable Compute

  Currently, I rely on Google Colab for inference. I’m in the process of enabling billing for my GCP account, but GPU compute costs remain a key challenge. Long-term, exploring more cost-effective edge inference or using spot instances could help.

- More Robust MLOps Pipeline

  I want to expand the current system to include:

  - Experiment tracking and model versioning

  - Model drift detection and automatic retraining workflows

  - A flexible API to let users select models based on their use-case or budget

- Batch Video Processing

  Real-time detection is powerful, but batch processing pre-recorded video is far more affordable and often sufficient for forensic or analytics use-cases. Building support for offline processing pipelines is a top priority.

- Edge Deployment on Raspberry Pi or Jetson Nano

  Running Ona Vision on edge devices like the Raspberry Pi or NVIDIA Jetson Nano would allow for local, low-latency inference without relying on the cloud. This is ideal for privacy-sensitive environments (like homes or schools), or areas with limited internet connectivity. Lightweight YOLO variants or quantized models can make real-time detection feasible even on constrained hardware.

- Cloud Integration to Store Detection Data

  Integrating with cloud platforms like Google Cloud, AWS, or Azure will enable long-term storage of inference results, logs, and video clips. This would support features like:

  - Historical data analysis

  - Detection alerts via webhooks or email

  - Centralized dashboards across multiple camera feeds

  Cloud storage would also enable collaboration, auditing, and future training of improved models using collected data.

- Web-Based Visualization Using Flask or FastAPI

  While the current Flask UI offers basic monitoring, I aim to extend this into a full-featured dashboard with:

  - Real-time video feeds with detection overlays

  - Historical detection logs searchable by timestamp, object type, or location

  - Metrics visualization (e.g., FPS, object counts over time, GPU usage)

  - User controls to toggle models or configure detection zones

  This would make Ona Vision more accessible for non-technical users and easier to manage at scale.

License

This project is open-source under the Apache 2.0 License.

Author

Josiah Mbao – Software Engineer | MLOps Developer