Abstract

NewsGuard AI is a lightweight, real-time fake news detection system delivered through a browser extension. The system is powered by a fine-tuned BERT (Bidirectional Encoder Representations from Transformers) model trained on the FakeNewsNet dataset. The model is deployed as a RESTful API using Flask and hosted on Render, enabling seamless interaction with the browser extension frontend. Users can input or paste news content directly into the extension, which then communicates with the backend API to classify the content as either Fake or Real, along with confidence scores.

The model achieves an accuracy of 97.37%, demonstrating strong predictive performance on the classification task. The project showcases how transformer-based NLP models can be effectively integrated into real-world applications to promote media literacy and combat misinformation online. By offering an accessible, responsive, and easy-to-use interface, NewsGuard AI empowers users to critically evaluate the credibility of online content in real time.

Introduction

The rapid spread of misinformation through digital media has raised significant concerns about the credibility of online content. As social platforms and news aggregators become primary sources of information, distinguishing between real and fake news has become a critical challenge. Manual fact-checking methods are not scalable, making automated solutions essential for real-time credibility assessment.

NewsGuard AI addresses this challenge by combining the power of transformer-based language models with accessible browser technology. The system leverages BERT (Bidirectional Encoder Representations from Transformers), a state-of-the-art NLP model, fine-tuned on the FakeNewsNet dataset. By deploying the model as a Flask API and integrating it into a browser extension, users can evaluate the authenticity of news content directly within their browsing environment.

This project demonstrates a complete machine learning pipeline—from data preparation and model training to deployment and frontend integration—making it an ideal example of how transformer models can be applied in real-world use cases. With an achieved accuracy of 97.37%, NewsGuard AI serves as a robust foundation for building practical, user-facing AI applications that promote media literacy and counter online misinformation.

Dataset

This project utilizes the FakeNewsNet dataset, a well-established benchmark for fake news detection that aggregates labeled news articles from multiple verified sources. The dataset includes subsets from GossipCop and PolitiFact, representing fake and real news respectively.

The primary text feature used for classification is the title field, which contains the news headline. This choice is based on the premise that headlines often carry sufficient cues for determining news authenticity.

The preprocessing pipeline involves:

- Loading the CSV files gossipcop_fake.csv, gossipcop_real.csv, politifact_fake.csv, and politifact_real.csv.

- Assigning binary labels manually based on source: GossipCop entries are labeled as Fake (label = 1) and PolitiFact entries as Real (label = 0).

- Cleaning the text by removing special characters, extra spaces, and converting all text to lowercase to normalize input.

- Combining all processed data into a single dataset.

- Splitting the dataset into training and testing sets with an 80:20 ratio, maintaining class distribution through stratified random sampling.

This results in clean CSV files whose training and testing splits share the same class proportions; they serve as input for subsequent model training and evaluation.
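The cleaning and splitting steps above can be sketched with the standard library alone. This is an illustrative sketch, not the project's actual preprocessing code (which may well use pandas and scikit-learn): the function names `clean_title` and `stratified_split`, the seed value, and the toy rows are all assumptions made for the example.

```python
import random
import re
from collections import defaultdict


def clean_title(title: str) -> str:
    """Lowercase, replace special characters, and collapse extra whitespace."""
    lowered = title.lower()
    no_special = re.sub(r"[^a-z0-9\s]", " ", lowered)
    return re.sub(r"\s+", " ", no_special).strip()


def stratified_split(rows, test_fraction=0.2, seed=42):
    """Split (text, label) rows so each label keeps the same share in both sets."""
    by_label = defaultdict(list)
    for row in rows:
        by_label[row[1]].append(row)

    rng = random.Random(seed)  # hypothetical seed, fixed for reproducibility
    train, test = [], []
    for label in sorted(by_label):
        group = by_label[label][:]
        rng.shuffle(group)
        n_test = round(len(group) * test_fraction)
        test.extend(group[:n_test])
        train.extend(group[n_test:])
    return train, test


# Toy rows standing in for the CSV contents: (title, label), 1 = Fake, 0 = Real.
raw_rows = [(f"Headline #{i}!!  Shocking  NEWS", 1) for i in range(10)]
raw_rows += [(f"Report {i}: Budget approved.", 0) for i in range(20)]

cleaned = [(clean_title(title), label) for title, label in raw_rows]
train_rows, test_rows = stratified_split(cleaned)
print(len(train_rows), len(test_rows))
```

Splitting each label group separately is what keeps the class proportions identical across the two sets; a plain random split would only match them approximately.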

Modeling & Training

The project employs the BERT (Bidirectional Encoder Representations from Transformers) model, chosen for its proven effectiveness in natural language understanding tasks, including text classification and fake news detection.

Key steps in modeling and training include:

- Data Preprocessing: The news headlines were tokenized using the BERT tokenizer (bert-base-uncased) with padding and truncation to a maximum sequence length of 512 tokens. This standardizes input length for the model.

- Model Architecture: A pre-trained BERT model (bert-base-uncased) was fine-tuned with a classification head adapted for binary classification (fake vs. real).

- Training Setup: The model was trained using the Hugging Face Trainer API with the following hyperparameters:

  - Batch size: 8 (both training and evaluation)

  - Number of epochs: 3

  - Weight decay: 0.01

  - Evaluation and checkpoint saving performed at each epoch

- Dataset Sampling: To optimize training time while maintaining performance, only 10% of the original FakeNewsNet training and testing datasets were sampled randomly with a fixed seed for reproducibility.

- Checkpoint Saving: The best model checkpoint was saved after training and subsequently used for inference in the deployed backend API.

This fine-tuning process enabled the model to achieve a high classification accuracy, making it suitable for real-time fake news detection.
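The 10% sampling step described above can be illustrated as follows. This is a minimal sketch under stated assumptions: the helper name `sample_fraction` and the seed value 42 are hypothetical, since the article says only that a fixed seed was used.

```python
import random


def sample_fraction(rows, fraction=0.1, seed=42):
    """Return a reproducible random subset containing `fraction` of the rows."""
    rng = random.Random(seed)  # a fresh generator, so repeated calls agree
    n_keep = max(1, int(len(rows) * fraction))
    return rng.sample(rows, n_keep)


# Toy data standing in for the cleaned headlines.
headlines = [f"headline {i}" for i in range(200)]
subset_a = sample_fraction(headlines)
subset_b = sample_fraction(headlines)
print(len(subset_a), subset_a == subset_b)
```

Creating the generator inside the function, rather than seeding the global one, means the same subset comes back no matter what other random calls the training script makes.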

Model Training Code

The model was fine-tuned using the Hugging Face Transformers library. Below is an excerpt illustrating dataset preparation and training setup:

```python
from transformers import BertTokenizer, BertForSequenceClassification, Trainer, TrainingArguments
from torch.utils.data import Dataset
import torch


class FakeNewsDataset(Dataset):
    def __init__(self, texts, labels, tokenizer, max_length=512):
        self.texts = texts
        self.labels = labels
        self.tokenizer = tokenizer
        self.max_length = max_length

    def __len__(self):
        # Required so the Trainer's DataLoader knows the dataset size.
        return len(self.texts)

    def __getitem__(self, idx):
        # Tokenize one headline, padding/truncating to a fixed length.
        encoding = self.tokenizer(
            self.texts[idx],
            padding='max_length',
            truncation=True,
            max_length=self.max_length,
            return_tensors='pt'
        )
        return {
            # squeeze(0) drops the batch dimension added by return_tensors='pt'.
            "input_ids": encoding["input_ids"].squeeze(0),
            "attention_mask": encoding["attention_mask"].squeeze(0),
            "labels": torch.tensor(self.labels[idx], dtype=torch.long)
        }


tokenizer = BertTokenizer.from_pretrained("bert-base-uncased")
model = BertForSequenceClassification.from_pretrained("bert-base-uncased", num_labels=2)

training_args = TrainingArguments(
    output_dir="./bert_model",
    num_train_epochs=3,
    per_device_train_batch_size=8,
    per_device_eval_batch_size=8,  # batch size 8 for evaluation, as listed above
    weight_decay=0.01,             # weight decay, as listed above
    eval_strategy="epoch",
    save_strategy="epoch",
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,  # Defined earlier
    eval_dataset=test_dataset,    # Defined earlier
)

trainer.train()
```

For the complete training code and dataset preprocessing, please refer to the project repository:

https://github.com/nallarahul/NewsGuard-AI

Backend API Deployment

The trained BERT model is exposed via a RESTful API implemented using Flask, a lightweight and widely used Python web framework. This backend serves as the bridge between the frontend browser extension and the machine learning model, enabling real-time fake news detection.

Key Components:

- Flask Application (app.py): The Flask app initializes by loading the fine-tuned BERT model and tokenizer from the Hugging Face Model Hub. The model is loaded into memory once at startup to optimize inference speed.

- API Endpoint (/predict): A POST endpoint /predict accepts JSON payloads containing the news text to be analyzed. The text is first tokenized using the BERT tokenizer with appropriate truncation and padding to ensure compatibility with the model’s input requirements.

- Inference Process: After tokenization, the text is passed through the BERT model to generate logits, which are converted to probabilities using the softmax function. The class with the highest probability is selected as the prediction (Fake or Real), and confidence scores for both classes are returned in the response.

- Cross-Origin Resource Sharing (CORS): CORS is enabled via the Flask-CORS extension, allowing secure communication between the backend API and the browser extension frontend, even though they may be hosted on different domains.

- Deployment on Render: The API is deployed on Render, a cloud-based platform for hosting web applications and services. Render provides automatic scaling, uptime guarantees, and manages server infrastructure, ensuring the API is reliably accessible via a public URL.

This architecture provides a responsive, scalable, and secure backend service that integrates seamlessly with the frontend, allowing users to receive instant feedback on news authenticity directly within their browsers.
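The inference step, turning logits into a label and confidence scores, can be sketched without loading the model. This is not the code from app.py: the helper names `softmax` and `build_response` are hypothetical, the label order follows the dataset labeling described earlier (0 = Real, 1 = Fake), and rounding the confidences to one decimal percentage is an assumption based on the response format the API returns.

```python
import math

LABELS = ("Real", "Fake")  # assumed order: index 0 = Real, index 1 = Fake


def softmax(logits):
    """Convert raw logits to probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]


def build_response(logits):
    """Build a /predict-style payload from one pair of logits."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return {
        "prediction": LABELS[best],
        "confidence": {
            label: round(p * 100, 1) for label, p in zip(LABELS, probs)
        },
    }


# Example logits, made up for illustration only.
print(build_response([-1.2, 0.6]))
```

In the deployed service the logits would come from the fine-tuned model's forward pass; everything after that point is plain arithmetic like the above.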

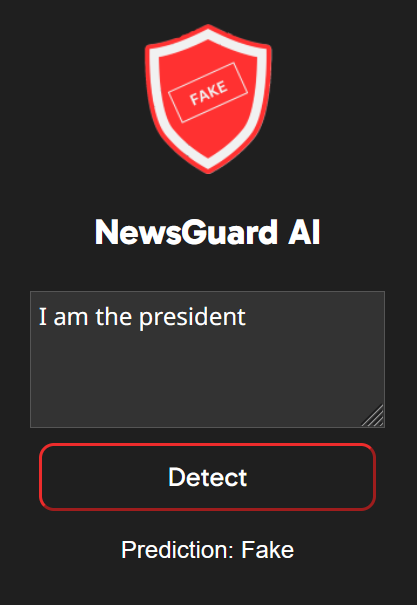

Frontend - Browser Extension

The frontend is implemented as a browser extension designed to provide users with seamless, real-time fake news detection while browsing.

Key Features and Technologies:

- Technologies Used: The extension is built using standard web technologies including HTML for structure, CSS for styling, and JavaScript for functionality.

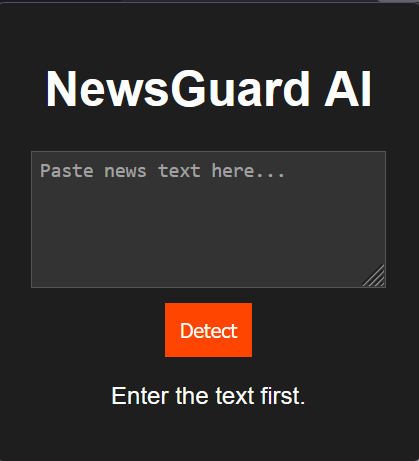

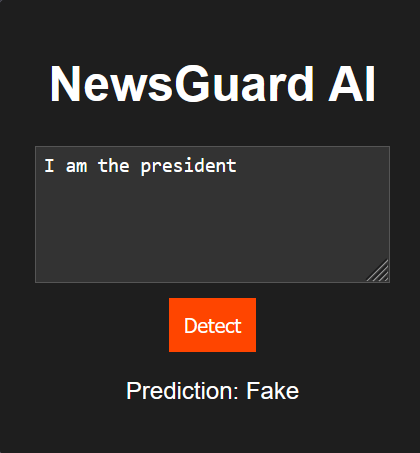

- User Interface: The extension presents a simple interface where users can input or paste news text for verification. Upon clicking the “Detect” button, the input text is sent to the backend API for analysis.

- API Integration: JavaScript’s Fetch API is used to send asynchronous POST requests to the Flask backend’s /predict endpoint, transmitting the user’s input text in JSON format. The API endpoint is:

  https://newsguard-backend.onrender.com/predict

  Requests are sent in the following format:

  ```json
  { "text": "Your news article text here" }
  ```

  The response format:

  ```json
  { "prediction": "Fake", "confidence": { "Fake": 85.6, "Real": 14.4 } }
  ```

- Response Handling: The extension receives the JSON response containing the predicted label (Fake or Real) and confidence scores. It then updates the user interface dynamically to display the prediction result.

- User Experience Flow:

  - Users open the extension popup while browsing news articles or social media.

  - They paste or type the news snippet into the text box.

  - Upon triggering detection, the extension shows a loading indicator, then displays the prediction once received.

  - Clear feedback messages guide users in case of errors or empty input.

- Cross-Origin Considerations: The extension’s requests to the backend API respect CORS policies enabled in the Flask server, ensuring secure cross-domain communication.

This approach ensures a lightweight, responsive user experience, allowing users to verify news authenticity without leaving their browsing context.
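The request and response handling described above can be mirrored in a small Python client, which is also a convenient way to exercise the API outside the browser. This is a sketch, not the extension's code (the extension uses JavaScript's Fetch API); Python is used here only to stay consistent with the rest of the code in this article. The function names, the error message, the whitespace trimming, and the 30-second timeout are assumptions.

```python
import json
import urllib.request

API_URL = "https://newsguard-backend.onrender.com/predict"


def build_request(text: str) -> urllib.request.Request:
    """Create the POST request; reject empty input before any network call."""
    if not text or not text.strip():
        raise ValueError("Please enter some news text.")  # hypothetical message
    payload = json.dumps({"text": text.strip()}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_response(body: bytes) -> str:
    """Turn the JSON response into a one-line message for display."""
    result = json.loads(body)
    label = result["prediction"]
    score = result["confidence"][label]
    return f"{label} ({score}% confidence)"


def detect(text: str, timeout: float = 30.0) -> str:
    """Send the text to the API and return the formatted prediction."""
    with urllib.request.urlopen(build_request(text), timeout=timeout) as resp:
        return parse_response(resp.read())


# Offline check using the documented response format; no network call is made.
sample = b'{"prediction": "Fake", "confidence": {"Fake": 85.6, "Real": 14.4}}'
print(parse_response(sample))
```

Calling `detect("some headline")` would perform the actual request. Keeping request building and response parsing in separate functions lets both be checked without the backend being reachable.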

Results & Evaluation

The fine-tuned BERT model achieved an accuracy of 97.37% on the test set sampled from the FakeNewsNet dataset, indicating strong performance in distinguishing fake news headlines from real ones.

Evaluation Metrics:

- Accuracy: The proportion of correctly classified news headlines over the total number of samples.
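As a concrete reading of the accuracy definition, the sketch below computes it from true and predicted labels. The same counts also yield precision, recall, and F1, which this article lists among possible future additions to the evaluation. The function name `classification_metrics` and the toy labels are hypothetical; they are not the project's predictions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy plus precision, recall, and F1 for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    accuracy = correct / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


# Toy labels only (1 = Fake, 0 = Real).
y_true = [1, 1, 1, 0, 0, 0, 0, 0]
y_pred = [1, 1, 0, 0, 0, 0, 0, 1]
print(classification_metrics(y_true, y_pred))
```

Accuracy alone can look strong when one class dominates the test set, which is why the per-class metrics are worth reporting alongside it.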

Performance Highlights:

- The model demonstrated reliable classification on diverse news topics, effectively capturing linguistic cues indicative of fake news.

- Confidence scores returned by the API offer transparency on prediction certainty, enhancing user trust in the extension’s outputs.

Limitations:

- Some misclassifications occur, particularly with ambiguous or satirical headlines where contextual nuances are subtle.

- The model’s performance depends heavily on the quality and representativeness of the training data subset.

Example Predictions:

| News Text (Headline) | Prediction | Confidence (Fake / Real) |
|---|---|---|
| "Breaking: Celebrity endorses miracle cure" | Fake | 92.5% / 7.5% |
| "Government announces new environmental policy" | Real | 4.3% / 95.7% |

These results underscore the model’s effectiveness while highlighting areas for further refinement, such as incorporating more varied datasets and contextual features.

Conclusion & Future Work

This project successfully demonstrates the application of a fine-tuned BERT model for real-time fake news detection through a browser extension. Leveraging the FakeNewsNet dataset, the model achieved high accuracy, enabling effective classification of news headlines as fake or real. The integration of the model with a Flask backend API and a responsive frontend extension provides a practical tool for users to verify news authenticity seamlessly while browsing.

What Went Well:

- Effective use of transfer learning with BERT for text classification.

- Clean and efficient preprocessing pipeline ensuring quality input data.

- Robust backend API deployment on Render, facilitating scalable and reliable model inference.

- Intuitive browser extension interface with real-time interaction and clear feedback.

Future Improvements:

- Expand dataset coverage to include more sources and multi-lingual news to improve generalizability.

- Incorporate additional contextual features such as full article content, source credibility, and metadata.

- Enhance the model evaluation with additional metrics like Precision, Recall, and F1-score for a more comprehensive performance assessment.

- Explore lightweight transformer models or distillation techniques to reduce inference latency and resource consumption.

- Implement continuous learning pipelines to update the model as new data becomes available, adapting to evolving misinformation patterns.

This work lays a foundation for further development of accessible tools combating misinformation, emphasizing transparency, scalability, and user engagement.

References

[1] Shu, Kai, et al. “FakeNewsNet: A Data Repository with News Content, Social Context and Dynamic Information for Fake News Research.” arXiv preprint arXiv:1809.01286 (2018). https://github.com/KaiDMML/FakeNewsNet

[2] Devlin, Jacob, et al. “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” arXiv preprint arXiv:1810.04805 (2018). https://arxiv.org/abs/1810.04805

[3] Hugging Face. “Transformers Library.” https://huggingface.co/transformers/

[4] Render. “Render - Cloud Platform for Hosting Applications.” https://render.com/

[5] Flask. “Flask Web Framework.” https://flask.palletsprojects.com/