This publication demonstrates the design and implementation of a multi-agent AI report generation system using LangGraph, Groq API, and Tavily Search.

The goal is to automate analytical report writing with coordinated AI agents that plan, research, write, reflect, and refine content, producing structured, source-backed reports without human intervention.

Readers will learn:

For: AI engineers, LangChain/LangGraph developers, automation researchers, and data professionals.

Use Cases:

Traditional LLM systems can generate text, but they often lack memory, structure, and self-evaluation.

This system addresses that by building an autonomous pipeline of agents capable of:

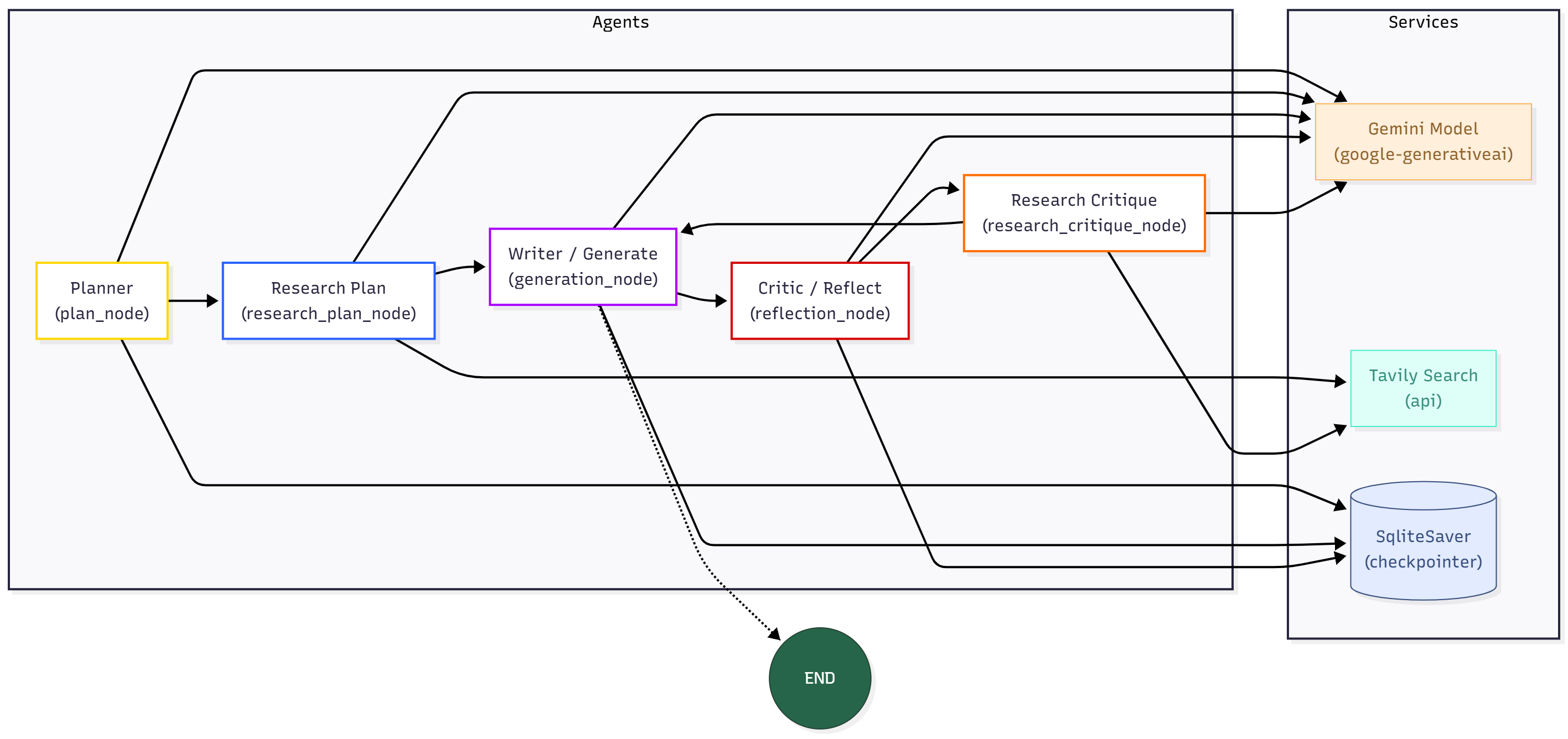

The system is structured as a LangGraph workflow, where each node represents a specific agent behavior.

State persistence and safety are handled through SQLite checkpoints.

The workflow ensures agents pass structured data (JSON) for machine tasks and plain text for human output.
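One way to picture the JSON versus plain-text split is a small parser at the boundary between agents. The sketch below is an illustration under assumptions, not the project's code: `parse_agent_output` is a hypothetical helper, and the two sample replies are made up. It tries to read a model reply as a JSON object and falls back to treating it as plain text.

```python
import json
from typing import Any, Dict


def parse_agent_output(raw: str) -> Dict[str, Any]:
    """Classify a model reply as structured (JSON object) or plain text.

    Hypothetical helper for illustration; not part of the project.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        # Not valid JSON: treat it as human-readable report text.
        return {"kind": "text", "payload": raw.strip()}
    if isinstance(data, dict):
        # A JSON object can be consumed directly by the next agent.
        return {"kind": "json", "payload": data}
    # Valid JSON but not an object (a bare number or string): keep it as text.
    return {"kind": "text", "payload": raw.strip()}


plan_reply = '{"queries": ["first search query", "second search query"]}'
draft_reply = "A paragraph of report text meant for the reader."

print(parse_agent_output(plan_reply)["kind"])   # json
print(parse_agent_output(draft_reply)["kind"])  # text
```

Keeping the fallback explicit means a malformed machine reply degrades to text instead of raising an error in the middle of a run.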

Core Workflow:

```python
from langgraph.graph import StateGraph, END

builder = StateGraph(AgentState)

# Register one node per agent behavior.
builder.add_node("planner", plan_node)
builder.add_node("research_plan", research_plan_node)
builder.add_node("file_fetch", file_tool_node)
builder.add_node("compute_stats", compute_stats_node)
builder.add_node("generate", generation_node)
builder.add_node("reflect", reflection_node)
builder.add_node("research_critique", research_critique_node)

builder.set_entry_point("planner")

# After each draft, either stop or send the draft to reflection.
builder.add_conditional_edges("generate", should_continue, {END: END, "reflect": "reflect"})

# Linear path from planning to the first draft.
builder.add_edge("planner", "research_plan")
builder.add_edge("research_plan", "file_fetch")
builder.add_edge("file_fetch", "compute_stats")
builder.add_edge("compute_stats", "generate")

# Revision loop: reflect -> research_critique -> generate.
builder.add_edge("reflect", "research_critique")
builder.add_edge("research_critique", "generate")

# memory is the checkpointer that persists state between steps.
graph = builder.compile(checkpointer=memory)
```

Each node updates or consumes fields from a shared state dictionary:

```python
from typing import List, TypedDict


class AgentState(TypedDict):
    task: str
    plan: str
    draft: str
    critique: str
    content: List[str]
    revision_number: int
    max_revisions: int
```
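To make "updates or consumes" concrete: a LangGraph node receives the current state and returns a dictionary containing only the fields it changes, which the framework merges back into the shared state. The sketch below imitates that merge with a plain dictionary update. `generation_node_stub` is a hypothetical stand-in with no model call, and incrementing `revision_number` inside the generation step is an assumption, not something confirmed here.

```python
def generation_node_stub(state: dict) -> dict:
    """Stand-in for a generation node: shows the shape of a partial update only."""
    draft = f"Report on {state['task']} using {len(state['content'])} sources"
    return {
        "draft": draft,
        # Assumption: each generation pass counts as one revision.
        "revision_number": state.get("revision_number", 0) + 1,
    }


state = {
    "task": "example topic",
    "plan": "",
    "draft": "",
    "critique": "",
    "content": ["source A", "source B"],
    "revision_number": 0,
    "max_revisions": 2,
}

# A plain dict merge imitates how returned keys overwrite the matching
# state fields when no custom reducer is defined for them.
update = generation_node_stub(state)
state = {**state, **update}

print(state["draft"])            # Report on example topic using 2 sources
print(state["revision_number"])  # 1
```

Fields the node does not return (`task`, `plan`, `content`, and so on) pass through unchanged, which is what lets each agent stay focused on its own part of the state.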

| Agent | Functionality |
|---|---|
| Reader | Handles JSON/text input, cleans and routes tasks |
| Analyzer | Extracts goals, keywords, and constraints |
| Researcher | Uses Tavily API for real-time verified web search |
| Writer | Generates text using Groq; adaptive JSON/text output |
| Reflector | Evaluates factual accuracy and style |
| Finalizer | Merges reports, ensures proper citations |

| Tool | Role |
|---|---|
| LangGraph | Defines stateful agent workflow |
| Groq API | Handles reasoning and generation |
| Tavily API | Provides web-based factual information |
| SQLite | Persists memory checkpoints |
| Gradio | Interactive interface for report requests |

Environment Setup:

```bash
pip install -r requirements.txt
python chat_interface.py
```

Access at http://127.0.0.1:7860

The system includes built-in quality assurance through the Reflector Agent.

Each draft goes through reflection before the next revision is generated, and the loop stops once revision_number reaches the configured max_revisions.

The should_continue() function enforces this limit, which prevents infinite loops.

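```python
def should_continue(state):
    # Stop once the revision budget is spent; otherwise route to reflection.
    if state.get("revision_number", 0) >= state.get("max_revisions", 0):
        print("✅ Revision limit reached")
        return END
    return "reflect"
```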
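To see why this check bounds the loop, the cycle can be simulated without LangGraph. The sketch below is a dependency-free illustration under assumptions: `END` is a local stand-in for `langgraph.graph.END`, `run_loop` is a hypothetical driver, and the counter is assumed to increase by one on every generation pass.

```python
END = "__end__"  # local stand-in for langgraph.graph.END


def should_continue(state: dict) -> str:
    # Same comparison as the check above; "reflect" matches the conditional edge mapping.
    if state.get("revision_number", 0) >= state.get("max_revisions", 0):
        return END
    return "reflect"


def run_loop(max_revisions: int) -> list:
    """Walk the generate -> reflect -> research_critique cycle and record visited nodes."""
    state = {"revision_number": 0, "max_revisions": max_revisions}
    visited = []
    while True:
        visited.append("generate")
        state["revision_number"] += 1  # assumption: each generation pass bumps the counter
        if should_continue(state) == END:
            return visited
        visited.extend(["reflect", "research_critique"])


print(run_loop(2))
# ['generate', 'reflect', 'research_critique', 'generate']
```

Because the counter only increases and the comparison uses `>=`, the loop ends after at most `max_revisions` generation passes, and after a single pass when `max_revisions` is 0 or missing.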

This architecture is portable to:

Memory persistence ensures recoverability even if an execution is interrupted.
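The idea behind checkpoint-based recovery can be shown with the standard library alone. The sketch below is not LangGraph's checkpointer and does not reproduce its schema. It uses plain `sqlite3` with a hypothetical `checkpoints` table and hypothetical helpers (`open_store`, `save_checkpoint`, `load_latest`) to show how saving a snapshot after each step lets a run resume from the last completed one.

```python
import json
import sqlite3


def open_store(path: str) -> sqlite3.Connection:
    """Open the database and make sure the (hypothetical) checkpoint table exists."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS checkpoints ("
        "thread_id TEXT, step INTEGER, state TEXT, "
        "PRIMARY KEY (thread_id, step))"
    )
    return conn


def save_checkpoint(conn: sqlite3.Connection, thread_id: str, step: int, state: dict) -> None:
    """Store a JSON snapshot of the state after a step."""
    conn.execute(
        "INSERT OR REPLACE INTO checkpoints VALUES (?, ?, ?)",
        (thread_id, step, json.dumps(state)),
    )
    conn.commit()


def load_latest(conn: sqlite3.Connection, thread_id: str):
    """Return (step, state) for the most recent snapshot, or None if there is none."""
    row = conn.execute(
        "SELECT step, state FROM checkpoints "
        "WHERE thread_id = ? ORDER BY step DESC LIMIT 1",
        (thread_id,),
    ).fetchone()
    return None if row is None else (row[0], json.loads(row[1]))


conn = open_store(":memory:")  # a file path instead would survive a process restart
save_checkpoint(conn, "report-1", 1, {"plan": "outline", "revision_number": 0})
save_checkpoint(conn, "report-1", 2, {"plan": "outline", "draft": "v1", "revision_number": 1})

# After an interruption, a run would pick up from the last saved step.
print(load_latest(conn, "report-1"))
```

Keying snapshots by a thread identifier keeps separate report requests from overwriting each other's progress.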

If you like what we are building here and want to support the project, the easiest way is to hit the ⭐ Star button on GitHub.

Your support helps us improve this multi-agent AI system and keep building useful tools for research and report generation.

Try running the system locally and extend it, for example by adapting prompts.py to fit your domain.