MIT License

MongoDB RAG System Powered by Llama-3.1-8B-Instant

- ChatGroq

- Flask

- LangChain

- LangGraph

- LLMs

- MongoDB

- Render


📖 Overview

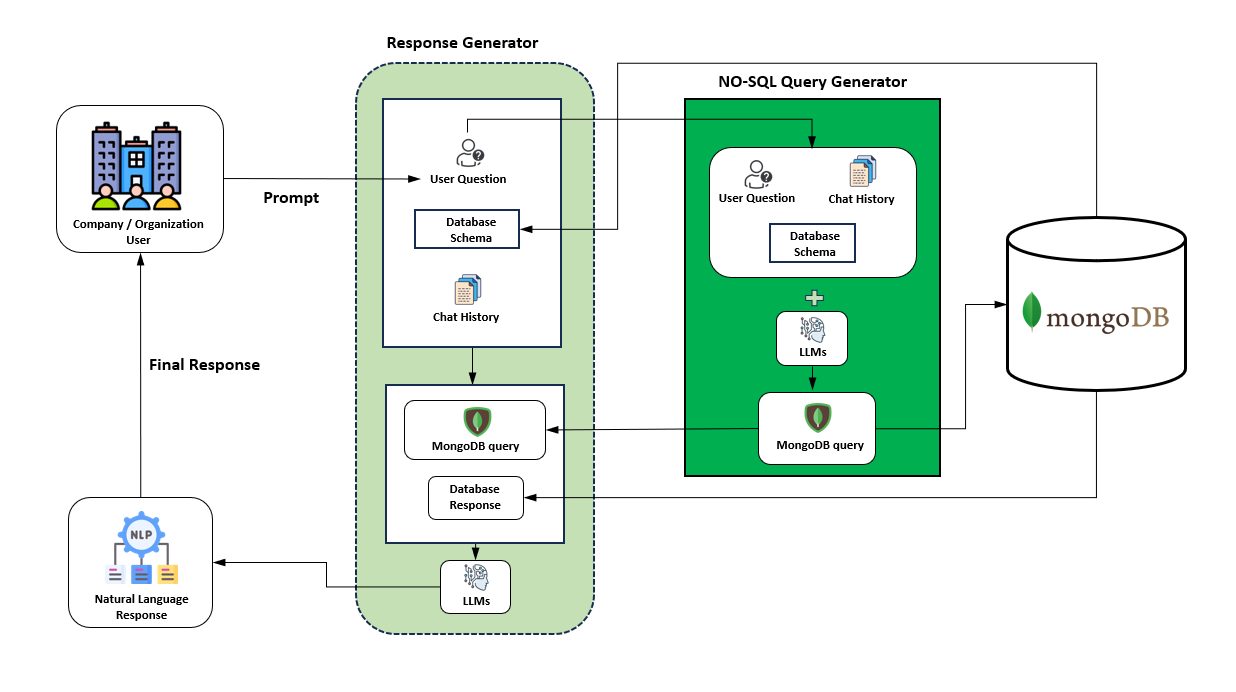

Mongodb-RAG is a Python project that implements a Retrieval-Augmented Generation (RAG) system. It retrieves relevant documents from MongoDB and passes them as context to the `llama-3.1-8b-instant` LLM, which generates the response. The project demonstrates context-aware response generation by combining document retrieval from MongoDB with LLM-based generation.
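To make the retrieve-then-generate flow concrete, here is a minimal, self-contained sketch. It is an illustration under stated assumptions, not the project's code: an in-memory list stands in for the MongoDB collection, documents are ranked by simple keyword overlap (the project's actual retrieval may work differently), and `retrieve_top_k` and `build_context` are hypothetical helper names.

```python
from __future__ import annotations

import re

# Hypothetical stand-in for documents stored in a MongoDB collection.
DOCUMENTS = [
    {"_id": 1, "text": "MongoDB stores data as flexible JSON-like documents."},
    {"_id": 2, "text": "Flask is a lightweight web framework for Python."},
    {"_id": 3, "text": "A MongoDB replica set provides redundancy and high availability."},
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve_top_k(query: str, docs: list[dict], k: int = 2) -> list[dict]:
    """Rank documents by how many query words they share; return the best k."""
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(d["text"])), d) for d in docs]
    # Keep only documents with at least one overlapping word, best first.
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:k]]


def build_context(docs: list[dict]) -> str:
    """Join retrieved documents into one context string for the LLM prompt."""
    return "\n".join(f"- {d['text']}" for d in docs)


if __name__ == "__main__":
    hits = retrieve_top_k("How does MongoDB store data?", DOCUMENTS)
    print(build_context(hits))
```

In a real deployment the ranking would be done by MongoDB itself (for example with a text or vector index); the in-memory version only shows the shape of the data passed on to the LLM.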

✨ Features

- Retrieval Augmented Generation (RAG): Combines MongoDB-based retrieval with LLM-powered answer generation.

- MongoDB Integration: Stores documents in MongoDB and retrieves them as context for each query.

- LLM Generation: Uses `llama-3.1-8b-instant` for low-latency responses.

- Web Deployment Ready: Deployable to Render as a web service.

- Clean Python Codebase: Modular Python scripts for easy understanding and customization.

📁 Folder Structure

```text
├── __pycache__/
├── .gitignore
├── app.py
├── index.py
├── mongodb_database.py
├── query_generator.py
└── requirements.txt
```

- app.py: Main application logic (entry point for web deployment).

- index.py: Likely includes web server/router code.

- mongodb_database.py: MongoDB connection and data handling logic.

- query_generator.py: Core RAG logic for generating and retrieving responses.

- requirements.txt: Python package dependencies.
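For the MongoDB side, one common way to fetch candidate documents is a `$text` search. The sketch below only builds the arguments for a pymongo `find()` call, so it runs without a database. It assumes the collection has a text index on a `text` field, and `build_text_search` is a hypothetical helper, not taken from `mongodb_database.py`.

```python
from __future__ import annotations


def build_text_search(query: str, k: int = 5) -> dict:
    """Build pymongo find() arguments for a MongoDB full-text search.

    Assumes the collection has a text index, e.g. created with
    collection.create_index([("text", "text")]).
    """
    if not query.strip():
        raise ValueError("query must not be empty")
    return {
        # $text matches documents against the collection's text index.
        "filter": {"$text": {"$search": query}},
        # Project the relevance score so results can be sorted by it.
        "projection": {"text": 1, "score": {"$meta": "textScore"}},
        # Highest textScore first.
        "sort": [("score", {"$meta": "textScore"})],
        "limit": k,
    }


# With a live pymongo collection (not executed here):
#   args = build_text_search("replica set")
#   cursor = (collection.find(args["filter"], args["projection"])
#             .sort(args["sort"]).limit(args["limit"]))
```

Keeping query construction separate from the connection makes the retrieval logic testable without a running MongoDB instance.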

🚀 Getting Started

✅ Prerequisites

- Python 3.8+

- MongoDB instance (local or Atlas)

- (Optional) Node.js and the Render CLI for deployment

⚙️ Installation

- Clone the repository: `git clone https://github.com/YUGESHKARAN/Mongodb-RAG.git`, then `cd Mongodb-RAG`.

- Install dependencies: `pip install -r requirements.txt`

- Configure environment: set your MongoDB URI and any other required environment variables.

- Run the application: `python app.py`
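The configuration step can be made explicit by reading settings from environment variables at startup and failing fast when one is missing. This is a sketch: `MODEL_API_KEY` is the variable named in the Render deployment notes, while `MONGODB_URI` and the `load_settings` helper are hypothetical names; check `app.py` and `mongodb_database.py` for the names the project actually reads.

```python
from __future__ import annotations

import os
from collections.abc import Mapping

# MODEL_API_KEY appears in the deployment notes; MONGODB_URI is an
# assumed name for the MongoDB connection string.
REQUIRED_VARS = ("MONGODB_URI", "MODEL_API_KEY")


def load_settings(env: Mapping[str, str] | None = None) -> dict[str, str]:
    """Return the required settings, raising if any are missing or empty."""
    env = os.environ if env is None else env
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED_VARS}
```

Accepting an optional mapping instead of always reading `os.environ` keeps the helper easy to test with a plain dict.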

☁️ Deployment

To deploy on Render:

- Create a new Web Service on Render.

- Connect your GitHub repository.

- Set your build and start commands (typically, `pip install -r requirements.txt` and `python app.py`).

- Add your environment variables (e.g., `MODEL_API_KEY`, MongoDB URI) in the Render dashboard.

- Deploy!
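A Render web service must listen on all interfaces and on the port Render supplies through the `PORT` environment variable. A minimal sketch of resolving that address, assuming `app.py` starts Flask's built-in server; the `resolve_bind_address` helper and the local fallback port 5000 are assumptions.

```python
from __future__ import annotations

import os
from collections.abc import Mapping


def resolve_bind_address(env: Mapping[str, str] | None = None) -> tuple[str, int]:
    """Return (host, port) for the web server.

    Render injects PORT for web services; locally we fall back to 5000
    (an assumed default that matches Flask's usual development port).
    """
    env = os.environ if env is None else env
    port = int(env.get("PORT", "5000"))
    # 0.0.0.0 accepts connections from outside the container, which Render needs.
    return "0.0.0.0", port


# In app.py (assumed structure), the Flask app could then be started with:
#   host, port = resolve_bind_address()
#   app.run(host=host, port=port)
```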

🌐 Usage

- Access the service via the URL provided by Render after deployment.

- The system retrieves relevant documents from MongoDB and generates responses using the `llama-3.1-8b-instant` LLM.
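The generation step typically places the retrieved documents into the prompt so the model answers from that context. The sketch builds a list of `(role, content)` message tuples, a format LangChain chat models such as `ChatGroq` accept in `invoke`. The prompt wording and the `build_messages` helper are assumptions, not the project's actual prompt.

```python
from __future__ import annotations

# Assumed prompt template; the project's real prompt may differ.
SYSTEM_PROMPT = (
    "Answer the question using only the context below. "
    "If the context does not contain the answer, say you don't know.\n\n"
    "Context:\n{context}"
)


def build_messages(question: str, retrieved_texts: list[str]) -> list[tuple[str, str]]:
    """Combine retrieved document texts and the user question into chat messages."""
    context = "\n".join(f"- {t}" for t in retrieved_texts) or "(no documents found)"
    return [
        ("system", SYSTEM_PROMPT.format(context=context)),
        ("human", question),
    ]


# With langchain-groq installed and a Groq API key configured (not executed here):
#   from langchain_groq import ChatGroq
#   llm = ChatGroq(model="llama-3.1-8b-instant")
#   answer = llm.invoke(build_messages(question, texts)).content
```

Instructing the model to admit when the context lacks an answer is a common way to reduce answers that are not grounded in the retrieved documents.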

📊 Monitoring LLM Logs

- LangSmith is used to monitor LLM logs, operations, token usage, costs, and responses.
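LangSmith tracing for LangChain and LangGraph is usually switched on through environment variables rather than code changes. The sketch sets the `LANGCHAIN_TRACING_V2`, `LANGCHAIN_API_KEY`, and `LANGCHAIN_PROJECT` variables that LangChain reads; newer SDK versions also accept `LANGSMITH_`-prefixed equivalents. The project name is a placeholder, and how this repository actually configures LangSmith is not specified.

```python
from __future__ import annotations

import os
from collections.abc import MutableMapping


def enable_langsmith_tracing(
    api_key: str,
    project: str = "mongodb-rag",  # placeholder project name (assumption)
    env: MutableMapping[str, str] | None = None,
) -> None:
    """Set the environment variables LangChain reads to send traces to LangSmith.

    Call this early, before the first LLM call, so runs are traced.
    """
    env = os.environ if env is None else env
    env["LANGCHAIN_TRACING_V2"] = "true"
    env["LANGCHAIN_API_KEY"] = api_key
    env["LANGCHAIN_PROJECT"] = project
```

On Render, the same three variables can be set in the dashboard instead of in code.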

🤝 Contributing

Contributions, suggestions, and improvements are welcome! Open an issue or pull request.

🙌 Acknowledgements

Maintained by YUGESHKARAN.