When Anthropic introduced the Model Context Protocol (MCP), it didn’t just give developers a new tool; it gave them a glimpse of tomorrow. MCP reimagined how AI models connect to data, memory, and tools, turning what used to be isolated systems into something that feels almost alive: aware, adaptive, and context-rich.

“MCP, a year tomorrow” isn’t about time; it’s about trajectory. The protocol feels like a message sent from the future, showing us what intelligent systems could look like when context becomes limitless. Today, developers use it to make models smarter. Tomorrow, it might define how AIs think, collaborate, and build alongside us.

Anthropic introduced the Model Context Protocol (MCP) in late 2024 as part of a quiet yet fundamental shift in how large language models interact with the world around them. The goal wasn’t just to make models smarter; it was to make them contextually aware.

Before MCP, developers had to rely on scattered methods (APIs, plugin systems, or external databases) to give their models access to information. These solutions worked in isolation but couldn’t scale together. The problem was simple but profound: AI lacked continuity of context. A model could process text beautifully, but it couldn’t remember, connect, or collaborate effectively.

The Anthropic team built MCP to solve exactly that. The protocol established a universal way for models to access and share context across tools, apps, and data sources. With it, developers could create extensions that enabled AI to see, recall, and reason seamlessly in real time.

In less than a year, the impact has been massive. MCP has inspired a new wave of agentic systems, more innovative developer tools, and even interoperable AI workspaces. What started as a bridge between models and context has quickly become one of the defining layers of the modern AI stack: the quiet infrastructure of tomorrow’s intelligent systems.

The Model Context Protocol (MCP) is built around a simple yet powerful idea: creating a shared, dynamic context layer between AI models and the tools they use. To make that work, MCP relies on four key components:

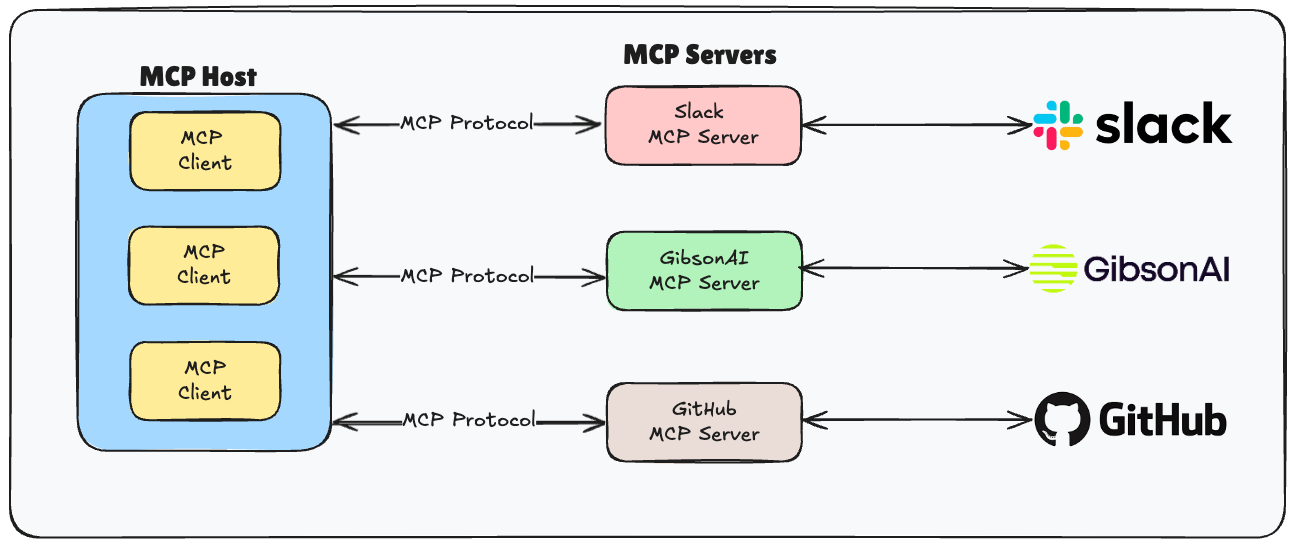

The MCP Server is the context provider. It exposes structured information, such as files, APIs, databases, or external systems, to the model and translates those resources into standardised context objects that models can understand.

The MCP Client acts as the bridge. It connects your model (for instance, OpenAI’s GPT models) to an MCP server, fetching context, instructions, and data as needed. A host application that uses several servers runs one client per server.

Tools are what make your model truly “agentic.” They define actions your AI can take, from querying an API to running a computation. Each tool can be registered on the MCP Server and accessed via the protocol.

MCP uses a schema to define what context actually means in a task: what data is shared, how it’s structured, and how models should interpret it. Messages are exchanged as JSON-RPC 2.0, and tool inputs are described with JSON Schema, which keeps the protocol interoperable across models and environments.
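To make the schema idea concrete, here is a minimal sketch in plain Python of how a tool might be described and called. The tool itself (`get_weather`) and the helper `make_tool_call` are hypothetical; the message shape (a tool entry with `name`, `description`, and a JSON Schema `inputSchema`, called through a JSON-RPC 2.0 `tools/call` request) follows the MCP specification, but treat this as an illustration rather than a reference implementation.

```python
import json

# A tool as an MCP server might advertise it in a `tools/list` response.
# "get_weather" is a hypothetical example; the keys follow the MCP spec.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {  # plain JSON Schema describing the arguments
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "units": {"type": "string", "enum": ["metric", "imperial"]},
        },
        "required": ["city"],
    },
}

# Map the JSON Schema primitive types used here to Python types.
_JSON_TYPES = {"string": str, "number": (int, float), "integer": int, "boolean": bool}


def make_tool_call(request_id: int, tool: dict, arguments: dict) -> str:
    """Build a JSON-RPC 2.0 `tools/call` request after a minimal schema check.

    Only required keys, primitive types, and enums are checked; a real
    client or server would use a full JSON Schema validator.
    """
    schema = tool["inputSchema"]
    for key in schema.get("required", []):
        if key not in arguments:
            raise ValueError(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = schema["properties"].get(key)
        if spec is None:
            raise ValueError(f"unknown argument: {key}")
        if not isinstance(value, _JSON_TYPES[spec["type"]]):
            raise TypeError(f"{key} should be a {spec['type']}")
        if "enum" in spec and value not in spec["enum"]:
            raise ValueError(f"{key} must be one of {spec['enum']}")
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }
    return json.dumps(message)


print(make_tool_call(1, WEATHER_TOOL, {"city": "Lagos", "units": "metric"}))
```

Because the schema travels with the tool, any client can check arguments before sending them, whichever model produced the call.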

At first glance, an MCP server might sound like any other server. It receives requests, processes data, and returns a response. But that’s where the similarity ends. Traditional servers are designed for delivery, while MCP servers are designed for understanding.

In a conventional setup, a server hosts an API, handles requests, and returns static or dynamic data. The interaction is one-directional. You ask for something, it responds, and the conversation ends there. The server doesn’t know who you are, what you’re building, or how the data it provides will be used. It simply serves.

MCP servers, however, play a different game. They don’t just return data; they offer context: structured, interpretable, and dynamic information that an AI model can use to make decisions, reason, or act. Instead of one-off exchanges, they maintain a living, contextual thread with the client, which in this case is an AI system or agent.

While traditional servers focus on serving data, MCP servers serve meaning. They know how to collaborate with models, interpret schema-based context, and even adapt to changing states across multiple tools.

In short, traditional servers power the web.

MCP servers will power the next generation of intelligent, context-aware AI systems.
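At the protocol level, that “living, contextual thread” is a session: the client opens it with an `initialize` request, and the server keeps state for the lifetime of the connection. The stdlib-only sketch below dispatches a few MCP methods (`initialize`, `tools/list`, `tools/call`) for one session. It is not the official MCP SDK; the `ServerSession` class and its `echo` tool are hypothetical, and real servers also handle a transport (stdio or HTTP), capability negotiation, and errors far more thoroughly.

```python
import json
from typing import Optional


class ServerSession:
    """A hypothetical, in-memory MCP server session (not the official SDK)."""

    PROTOCOL_VERSION = "2024-11-05"  # one published MCP protocol revision

    def __init__(self) -> None:
        self.initialized = False
        self.client_info = None
        self.history = []  # per-session state that later calls can build on

    def handle(self, raw: str) -> Optional[str]:
        """Handle one JSON-RPC 2.0 message and return the JSON reply, if any."""
        req = json.loads(raw)
        if "id" not in req:
            return None  # notifications such as notifications/initialized get no reply
        method, params = req["method"], req.get("params", {})
        if method == "initialize":
            self.initialized = True
            self.client_info = params.get("clientInfo")
            result = {
                "protocolVersion": self.PROTOCOL_VERSION,
                "capabilities": {"tools": {}},
                "serverInfo": {"name": "demo-server", "version": "0.1.0"},
            }
        elif not self.initialized:
            # Design choice of this sketch: refuse work before the handshake.
            return self._error(req["id"], -32600, "session not initialized")
        elif method == "tools/list":
            result = {"tools": [{
                "name": "echo",
                "description": "Echo text and count calls in this session.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"text": {"type": "string"}},
                    "required": ["text"],
                },
            }]}
        elif method == "tools/call":
            if params.get("name") != "echo":
                return self._error(req["id"], -32602, f"unknown tool: {params.get('name')}")
            text = params["arguments"]["text"]
            self.history.append(text)
            reply = f"{text} (call #{len(self.history)} in this session)"
            result = {"content": [{"type": "text", "text": reply}], "isError": False}
        else:
            return self._error(req["id"], -32601, f"method not found: {method}")
        return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})

    @staticmethod
    def _error(req_id, code: int, message: str) -> str:
        return json.dumps({"jsonrpc": "2.0", "id": req_id,
                           "error": {"code": code, "message": message}})


# Usage: handshake first, then two calls that share session state.
session = ServerSession()
session.handle(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "initialize",
                           "params": {"protocolVersion": "2024-11-05", "capabilities": {},
                                      "clientInfo": {"name": "demo-client", "version": "0.1.0"}}}))
session.handle(json.dumps({"jsonrpc": "2.0", "method": "notifications/initialized"}))
for i in (2, 3):
    call = {"jsonrpc": "2.0", "id": i, "method": "tools/call",
            "params": {"name": "echo", "arguments": {"text": "hello"}}}
    print(session.handle(json.dumps(call)))
```

Because `history` lives on the session object, the second `echo` call sees the first. That per-connection state is what separates this from a stateless request handler.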

If you’ve ever built an AI system, you know the pain of context limits. Models forget things, APIs don’t sync, and every new integration feels like duct-taping intelligence together. That’s the exact frustration the Model Context Protocol (MCP) was designed to end.

MCP gives developers a common language to connect their models, tools, and data without having to hack context together. It lets your AI system see what’s happening across different environments, remember what matters, and act in real time.

But beyond the technical beauty, here’s why MCP actually matters:

First, it makes your AI systems scalable. Instead of building custom connectors for every tool or source, you define them once, and any MCP-compatible model can use them. That means less overhead, fewer integration headaches, and faster iteration.

Second, it makes your AI systems smarter. By treating context as a first-class citizen, MCP allows models to reason dynamically, pulling only the data that’s relevant at that moment. No retraining, no fine-tuning, just real-time awareness.

Third, it unlocks collaboration. Multiple agents can now share context through MCP, enabling systems that think and work together, like a team of specialised models solving problems in sync.

In short, you should use MCP because it does what every developer has wanted AI to do from the beginning: stay aware, connected, and continuously evolving.
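The second point, pulling only the data that’s relevant at that moment, maps onto how MCP separates listing context from reading it: a client can call `resources/list` to see lightweight descriptions (a URI, a name, a MIME type) and then `resources/read` only for the item it needs. The sketch below mimics that split in plain Python. The `notes://` URIs, the note texts, and both helper functions are hypothetical; only the method names and result keys are taken from the MCP specification.

```python
# Hypothetical backing store: full documents keyed by URI.
_DOCUMENTS = {
    "notes://roadmap": "Q1: ship the MCP integration. Q2: add multi-agent support.",
    "notes://standup": "Blocked on API keys for the staging environment.",
}


def list_resources() -> dict:
    """Shape of a `resources/list` result: metadata only, no document bodies."""
    return {
        "resources": [
            {"uri": uri, "name": uri.split("//", 1)[1], "mimeType": "text/plain"}
            for uri in _DOCUMENTS
        ]
    }


def read_resource(uri: str) -> dict:
    """Shape of a `resources/read` result: the body of one requested resource."""
    if uri not in _DOCUMENTS:
        raise KeyError(f"unknown resource: {uri}")
    return {"contents": [{"uri": uri, "mimeType": "text/plain", "text": _DOCUMENTS[uri]}]}


# The client scans cheap metadata, then fetches only what the task needs.
names = [r["name"] for r in list_resources()["resources"]]
blocker = read_resource("notes://standup")["contents"][0]["text"]
print(names, "->", blocker)
```

The roadmap note never leaves the server here, because the task only needed the standup note.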

To see how easy it is to connect external tools to your AI agent, below is a `config.json` entry you can use to integrate the GibsonAI MCP server into your IDE agent:

```json
{
  "mcpServers": {
    "gibson": {
      "command": "uvx",
      "args": ["--from", "gibson-cli@latest", "gibson", "mcp", "run"]
    }
  }
}
```
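An MCP client reads a configuration like this and, for each entry under `mcpServers` that specifies a `command`, starts that command as a subprocess and exchanges JSON-RPC messages with it over standard input and output. The sketch below only parses the JSON and builds the argument list a client would launch; it does not start anything. The helper name `server_commands` is hypothetical.

```python
import json
import shlex

CONFIG = """
{
  "mcpServers": {
    "gibson": {
      "command": "uvx",
      "args": ["--from", "gibson-cli@latest", "gibson", "mcp", "run"]
    }
  }
}
"""


def server_commands(config_text: str) -> dict:
    """Return {server_name: argv list} for every command-based server in the config."""
    config = json.loads(config_text)
    commands = {}
    for name, entry in config.get("mcpServers", {}).items():
        # A client would hand this argv to something like subprocess.Popen
        # with stdin/stdout pipes, then speak JSON-RPC over those pipes.
        commands[name] = [entry["command"], *entry.get("args", [])]
    return commands


for name, argv in server_commands(CONFIG).items():
    print(name, "->", shlex.join(argv))
```

Adding another server is a matter of adding another key under `mcpServers`; the client code stays the same.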

While the idea of “context protocols” may sound abstract, MCP is already demonstrating its power in real systems. Here’s how developers and companies are applying it today:

Tools like the Cursor IDE and Claude’s new MCP integration let AI models read project files, interpret codebases, and even modify them directly via MCP connections. This turns the AI from a static helper into a context-aware coding partner that understands your entire repository.

Businesses are using MCP to connect LLMs to private data, CRMs, analytics tools, and knowledge bases without compromising security. Instead of dumping data into the model, MCP allows the LLM to fetch context only when needed. Imagine an AI that can answer,

“What were last quarter’s top-performing campaigns?”

by securely querying live business data through MCP.
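On the server side, answering that question comes down to a tool that runs a narrow, parameterised query and returns only the rows asked for. The sketch below uses Python’s built-in `sqlite3` with an in-memory table standing in for live business data. The `campaigns` table, its columns, the placeholder rows, and the `top_campaigns` tool are all hypothetical; the return value follows the MCP tool-result shape (a `content` list of text items plus `isError`). A real deployment would also need authentication and access control, which this sketch omits.

```python
import json
import sqlite3

# Placeholder rows standing in for a real analytics store (hypothetical data).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE campaigns (name TEXT, quarter TEXT, revenue REAL)")
db.executemany(
    "INSERT INTO campaigns VALUES (?, ?, ?)",
    [
        ("Autumn Launch", "2024-Q4", 1200.0),
        ("Holiday Promo", "2024-Q4", 5400.0),
        ("Referral Push", "2024-Q4", 3100.0),
        ("Summer Sale", "2024-Q3", 9000.0),
    ],
)


def top_campaigns(quarter: str, limit: int = 2) -> dict:
    """Hypothetical MCP tool: return the top campaigns by revenue for one quarter.

    The query is parameterised, so the model supplies values rather than SQL,
    and only the requested rows leave the database.
    """
    rows = db.execute(
        "SELECT name, revenue FROM campaigns WHERE quarter = ? "
        "ORDER BY revenue DESC LIMIT ?",
        (quarter, limit),
    ).fetchall()
    payload = [{"name": name, "revenue": revenue} for name, revenue in rows]
    return {"content": [{"type": "text", "text": json.dumps(payload)}], "isError": False}


print(top_campaigns("2024-Q4"))
```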

Researchers are integrating MCP with data pipelines and notebooks so that AI models can query datasets, run analyses, and summarise results on demand. It’s a new way to blend reasoning with live computation, particularly useful for academic projects and data science workflows.

From task managers to note-taking apps, developers are experimenting with MCP-enabled copilots that can reference your documents, schedules, and reminders contextually without sending your entire digital life to the cloud.

Some teams are using MCP to enable multiple AI agents to coordinate around shared data, each with a role (e.g., planner, executor, verifier). MCP acts as the communication bridge, ensuring they all share the same context in real time.
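One way to picture that bridge is a single server holding a shared context that several agents read and write through tools. The sketch below is a hypothetical illustration: `SharedContextServer`, its `post_note` and `read_notes` tools, and the planner/executor/verifier flow are invented for this example. In a real setup each agent would reach the server through its own MCP client connection rather than calling it in-process.

```python
import json


class SharedContextServer:
    """Hypothetical MCP server that holds one shared context for several agents."""

    def __init__(self) -> None:
        self.notes = []  # the shared context every agent reads and writes

    def call_tool(self, name: str, arguments: dict) -> dict:
        """Run a tool and return an MCP-style tool result."""
        if name == "post_note":
            self.notes.append({"role": arguments["role"], "text": arguments["text"]})
            text = f"stored note #{len(self.notes)}"
        elif name == "read_notes":
            text = json.dumps(self.notes)
        else:
            raise ValueError(f"unknown tool: {name}")
        return {"content": [{"type": "text", "text": text}], "isError": False}


def read_board(server: SharedContextServer) -> list:
    """Helper an agent might use to decode the shared notes."""
    return json.loads(server.call_tool("read_notes", {})["content"][0]["text"])


server = SharedContextServer()

# Planner proposes a step.
server.call_tool("post_note", {"role": "planner", "text": "Summarise Q4 campaign revenue"})

# Executor sees the planner's note and reports back on the same board.
task = read_board(server)[-1]["text"]
server.call_tool("post_note", {"role": "executor", "text": f"done: {task}"})

# Verifier checks that every planned step has a matching "done" note.
board = read_board(server)
planned = [n["text"] for n in board if n["role"] == "planner"]
finished = [n["text"].removeprefix("done: ") for n in board if n["role"] == "executor"]
print("verified" if set(planned) <= set(finished) else "incomplete")
```

No agent passes messages to another directly; each one only reads and writes the shared context, which is the coordination pattern described above.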

The truth is, MCP isn’t just a protocol; it’s a preview of what’s coming.

Right now, it sits quietly behind the scenes, powering a new generation of AI systems that can reason, recall, and interact with the world around them. But the deeper you look, the more it feels like the foundation of something bigger: a shift from prompt-based AI to context-native intelligence.

In the near future, MCP could evolve into the backbone of multi-agent ecosystems, where multiple AIs, each with unique skills and memories, collaborate through shared context instead of shared prompts. Imagine an ecosystem where one agent analyses real-time financial data, another interprets user behaviour, and a third writes recommendations, all seamlessly synchronised via MCP.

Beyond that, we’ll likely see autonomous orchestration systems that manage their own tools, APIs, and environments, dynamically updating their own context. MCP could make AI not just responsive, but self-directing, capable of learning what to connect to next without human prompting.

The most exciting vision lies in a persistent, living context: an AI that doesn’t just remember a chat or a session, but builds a continuous relationship with you. It learns your tools, your data, and your patterns, and it is always there, constantly evolving. That’s what “a year tomorrow” truly means: not time passing, but time arriving.

MCP isn’t a reflection of what AI was; it’s a revelation of what AI is becoming.

As developers, we’ve spent years trying to teach machines how to understand us. But with the Model Context Protocol, it feels like we’re finally learning how to meet them halfway, giving AI not just information, but awareness. MCP turns code into conversation, systems into collaborators, and data into living memory.

So when we say “MCP, a year tomorrow,” we’re not talking about a milestone; we’re talking about momentum. The kind that doesn’t look back. The type that quietly redefines how intelligence connects, grows, and remembers.

Tomorrow isn’t coming. With MCP, it’s already here.