This publication details the development of a fully local, Retrieval-Augmented Generation (RAG) question-answering assistant. The system leverages the LangChain framework to orchestrate a pipeline that uses a local Mistral Large Language Model (LLM) served by Ollama and an in-memory FAISS vector database. By processing a custom corpus of AI and Machine Learning publications, the tool provides accurate, context-aware answers without relying on external cloud APIs, ensuring data privacy and offline functionality. This work serves as a practical guide for developers and researchers looking to build and deploy self-contained, RAG-powered applications.

The proliferation of powerful Large Language Models (LLMs) has revolutionized how we interact with information. However, many state-of-the-art models are accessible only through cloud-based APIs, raising concerns about data privacy, cost, and dependency on internet connectivity. The challenge, therefore, is to harness the power of these models in a secure, private, and self-contained environment.

This project addresses that challenge by creating the Local-RAG Assistant, a tool that runs entirely on a user's local machine. Its purpose is to provide a blueprint for building applications that can reason over a private or custom set of documents. By using open-source tools like Ollama, LangChain, and FAISS, this assistant demonstrates a practical approach to democratizing powerful AI technology. This publication will walk through the methodology, present the results of the working application, and discuss its implications for developers in the AI space.

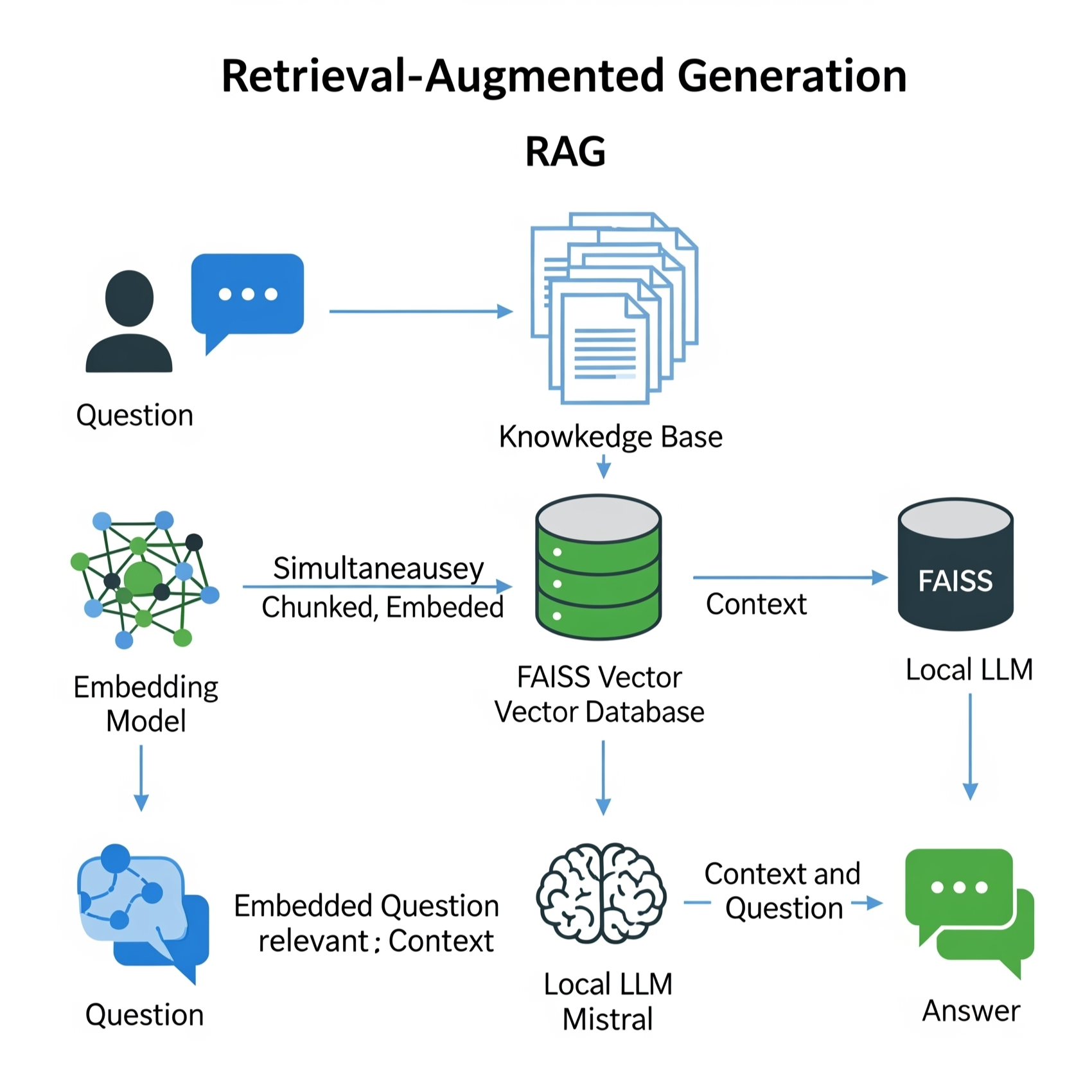

The assistant is built upon the Retrieval-Augmented Generation (RAG) architecture, which enhances the reliability of LLM responses by grounding them in a specific set of documents. The entire workflow is orchestrated using the LangChain framework and can be broken down into the following components.

The first step involves creating a knowledge base from the provided project_1_publications.json file. The text from each publication is loaded and then split into smaller, overlapping chunks of 1,000 characters using LangChain's RecursiveCharacterTextSplitter. This chunking strategy is essential for fitting the context within the LLM's prompt limits while maintaining semantic cohesion between the pieces.

Once the documents are chunked, they must be converted into a machine-readable format. We use the OllamaEmbeddings class to generate vector embeddings for each text chunk using the locally run Mistral model. These embeddings are high-dimensional vectors that capture the semantic meaning of the text.

These vectors are then stored in a FAISS (Facebook AI Similarity Search) database. FAISS is a highly efficient, in-memory vector store that allows for rapid similarity searches, which is the core mechanism for retrieving relevant context for a given query.

The core logic of the assistant is managed by a LangChain retrieval chain. When a user submits a question, the following occurs:

The assistant's knowledge is sourced from the project_1_publications.json file, a curated collection of articles from the Ready Tensor platform.

title, username, and publication_description, which serves as the primary text for the knowledge base.Before installation, please ensure your system meets the following requirements:

ollama pull mistral.Follow these steps to set up the assistant:

git clone https://github.com/gourishankar27/local_RAG_agent_system.git cd local_RAG_agent_system

pip install -r requirements.txt

Start the interactive assistant from your terminal:

python assistant.py

The application will first index the documents (a one-time process) and will then prompt you to ask a question.

Performance was evaluated qualitatively by testing the assistant's ability to answer questions accurately based on the knowledge base.

Performance Characteristics:

Example Results:

Query:

What are the core components of an AI agent?

Response: The provided context mentions two key components of an AI agent: Learning Logic Models (LLMs) and knowledge graphs. Learning Logic Models allow AI agents to make decisions and determine their course of action, while a knowledge graph represents a digital mirror of relationships in the real world, enabling powerful queries that would be complex or impossible with traditional databases. Auto-encoders are mentioned as a type of neural network used for unsupervised learning, but they are not explicitly stated as core components of an AI agent. However, it is important to note that the context does not provide a comprehensive list of all possible components of an AI agent, and more research might reveal additional elements.

This tool serves as a strong proof-of-concept but has several limitations:

Future work could address these limitations by:

The final application is a command-line interface that successfully answers questions based on the content within the AI/ML publications. The assistant demonstrates a strong ability to extract and synthesize information accurately.

Example Interactions:

Query 1:

What’s the role of memory in LangGraph?

Response:

The provided context does not explicitly explain the role of memory in LangGraph as it only discusses ChatGPT's use of memory. However, considering that Langchain-OpenAI and knowledge graphs share similarities with how ChatGPT functions (by using connections between entities), we can infer that memory in LangGraph would play a role in enabling the platform to remember relationships between various entities (films, actors, directors, etc.) and use this information to answer complex queries efficiently.

For a more accurate answer, it would be best to consult documentation or research about Langchain-OpenAI specifically.

Query 2:

How do I define a custom agent?

Response:

In the provided context, there is no information about defining a custom agent for a specific task such as a game or a chatbot. However, if we are talking about a Neural Network model (like NBeats), here's how you might define a custom agent:

- Identify the problem you want to solve with your custom agent. This could be anything from playing a game like chess or Go, to controlling a robot, to generating text in a chatbot.

- Choose an appropriate architecture for your model based on the problem you're trying to solve. For instance, if it's a sequence-to-sequence task like text generation, you might choose an LSTM or Transformer architecture. If it's a game with a large state space, you might consider using a deep reinforcement learning model.

- Prepare your dataset and labels for training the agent. This might involve collecting data from the environment, cleaning the data, and possibly preprocessing it in some way to make it suitable for the neural network.

- Train the model on your dataset using an appropriate loss function and optimizer. This might involve splitting your data into a training set and a validation set, and using techniques like cross-validation to ensure that your model is learning effectively from the data.

- Test your trained agent on new data to evaluate its performance. This could involve running simulations, playing games, or generating text. You'll want to measure some metrics of performance to determine how well your custom agent is doing compared to other possible solutions.

- Iterate on your model by fine-tuning hyperparameters, adding layers to the network, changing the loss function or optimizer, or collecting more data to improve its performance if necessary.

This process can be complex and time-consuming, but with careful design and experimentation, it's possible to create a custom agent that is effective at solving the problem you've defined.

Exiting assistant. Goodbye!

Query 3:

Can you explain what QLoRA is?

Response:

I'm sorry for any confusion, but there was no mention of QLoRA in the provided context. It appears that the conversation revolves around the topics of reproducibility, knowledge graphs, and graph databases, as well as CLIP (Contrastive Language-Image Pretraining). If you have more information or a different context where QLoRA is mentioned, I'd be happy to help explain it!

This project successfully demonstrates the creation of a self-contained, RAG-powered question-answering assistant. By leveraging open-source tools like Ollama, LangChain, and FAISS, it is possible to build powerful, private AI applications that run on local consumer hardware. This approach not only ensures data privacy but also opens the door for developers and researchers to create highly customized tools without incurring API costs.

While the current implementation is a simple command-line tool, it establishes a robust foundation. Future work could include adding conversational memory to handle follow-up questions, developing a more user-friendly interface with Streamlit or Gradio, or wrapping the functionality in a FastAPI for integration with other applications.