🚀 LLM Evaluation Chatbot

🌐 Overview

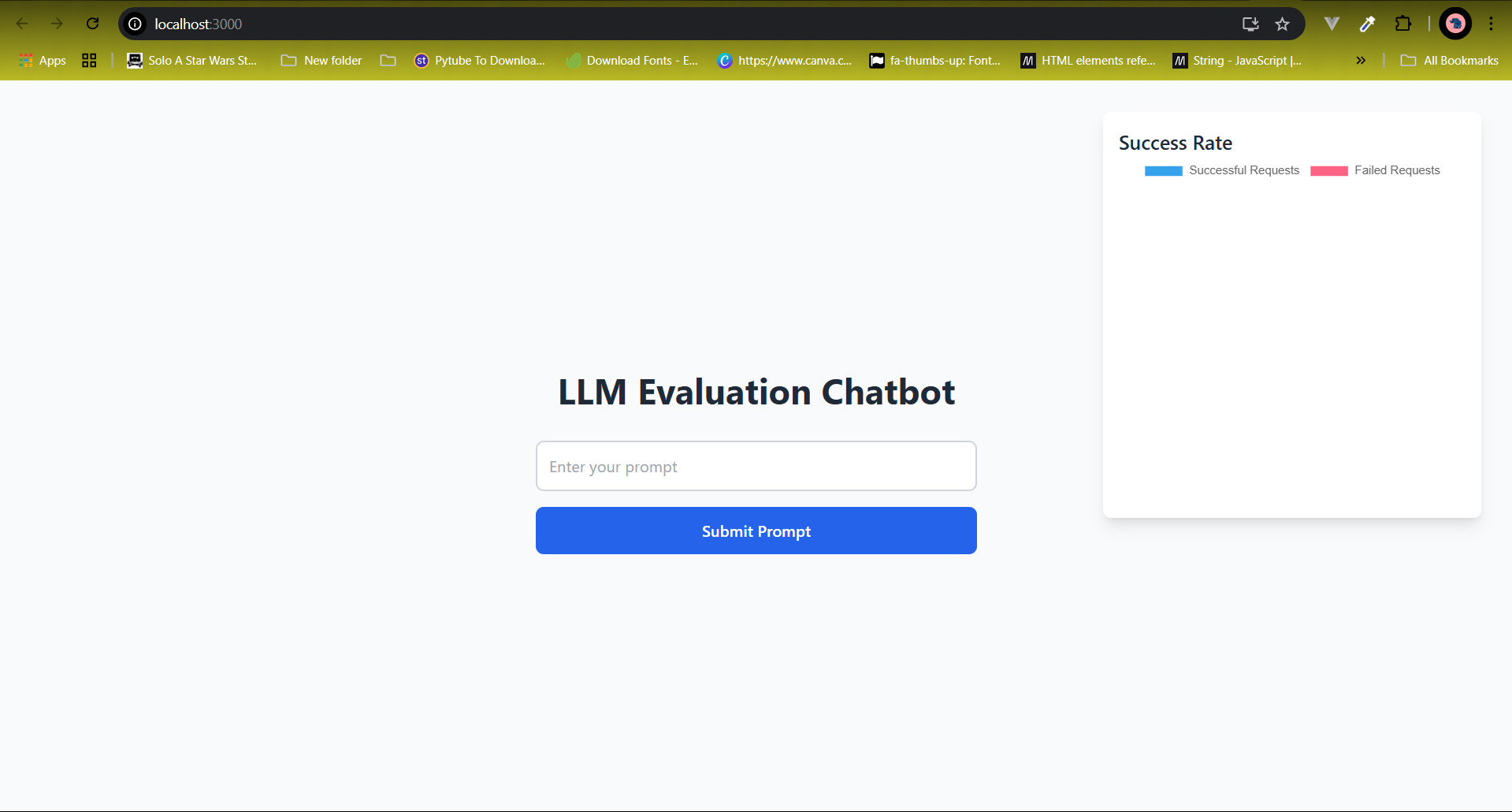

The LLM Evaluation Chatbot is an interactive web application for evaluating responses from large language models (LLMs) in real time. Users submit prompts, receive model-generated responses, and rate each response. The collected ratings make it possible to analyze and benchmark model performance, which is essential for understanding how effective and well aligned each LLM is.

Try it with Hugging Face-hosted models such as GPT-2, DistilGPT-2, and BERT-base-uncased.

🎯 Key Features

- 📝 Prompt Submission: Simple interface to input queries and receive AI-generated responses.

- 💬 Real-time Response: Fetches model responses from the Hugging Face Inference API as soon as a prompt is submitted.

- ⭐ User Ratings: Collects user feedback on response quality (e.g., 1-5 stars).

- 📊 Interactive Data Visualization: Visualizes rating trends and statistics with dynamic charts.

- 🔄 Model Switching: Configure and switch between different LLMs via `.env` settings.

- ⚙️ Full Stack Architecture: Combines React.js (frontend), TypeScript/Express (backend), and SQLite (database).
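The ratings and charts features imply an aggregation step between the stored ratings and the visualization. The following is a minimal sketch of such a step, not the repository's code: it assumes each stored evaluation has a `model` name and an integer `rating` from 1 to 5, and the names `RatingRecord`, `ModelSummary`, `summarizeRatings`, and `toBarChartData` are hypothetical. It groups ratings by model, computes a count, mean, and star histogram, and reshapes the means into the `labels`/`datasets` structure that Chart.js expects.

```typescript
// Hypothetical record shape; the repository's actual schema may differ.
interface RatingRecord {
  model: string; // e.g. "gpt2"
  rating: number; // assumed integer star rating from 1 to 5
}

interface ModelSummary {
  model: string;
  count: number;
  mean: number;
  histogram: number[]; // histogram[i] = number of (i + 1)-star ratings
}

// Groups ratings by model and computes count, mean, and a 1-5 star histogram.
// Non-integer or out-of-range ratings are skipped rather than counted.
function summarizeRatings(records: RatingRecord[]): ModelSummary[] {
  const byModel = new Map<string, ModelSummary>();
  for (const { model, rating } of records) {
    if (!Number.isInteger(rating) || rating < 1 || rating > 5) continue;
    let summary = byModel.get(model);
    if (summary === undefined) {
      summary = { model, count: 0, mean: 0, histogram: [0, 0, 0, 0, 0] };
      byModel.set(model, summary);
    }
    summary.histogram[rating - 1] += 1;
    // Running mean update: no separate sum needs to be stored.
    summary.count += 1;
    summary.mean += (rating - summary.mean) / summary.count;
  }
  // Map preserves insertion order, so models appear in first-seen order.
  return Array.from(byModel.values());
}

// Reshapes summaries into the { labels, datasets } object Chart.js uses
// for a bar chart of mean rating per model.
function toBarChartData(summaries: ModelSummary[]) {
  return {
    labels: summaries.map((s) => s.model),
    datasets: [{ label: "Mean rating", data: summaries.map((s) => s.mean) }],
  };
}
```

The returned object could be passed as `data` to a Chart.js bar chart, e.g. `new Chart(canvas, { type: "bar", data: toBarChartData(summaries) })`. Skipping out-of-range ratings, rather than throwing, keeps one bad row from breaking the whole chart; rejecting invalid ratings is better done when they are written.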

🛠️ Technologies Used

✅ Frontend

- React.js — Dynamic component-based user interface.

- Axios — For efficient API communication.

- Chart.js — Interactive data visualization.

✅ Backend

- Node.js & Express.js — Lightweight REST API with TypeScript.

- TypeScript — Type-safe backend development.

- Hugging Face API — Integration for language model responses.
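As a rough sketch of the Hugging Face integration (not the project's actual `server.ts`), the backend can POST the prompt as `{ "inputs": ... }` to the model URL with a bearer token and normalize the JSON reply. The token variable name `HF_API_TOKEN` and the helpers `FetchLike`, `buildInferenceRequest`, `extractText`, and `queryModel` are hypothetical. Two response shapes matter here: GPT-2 and DistilGPT-2 are text-generation models that return `[{ "generated_text": ... }]`, while `bert-base-uncased` is a masked language model served as a fill-mask task, so its prompt must contain a `[MASK]` token and it returns a ranked list of candidate completions rather than free-form text.

```typescript
// Minimal fetch-compatible signature so the sketch does not depend on DOM typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Builds the POST request for the Hugging Face Inference API.
// The token is assumed to come from a variable such as HF_API_TOKEN (hypothetical name).
function buildInferenceRequest(prompt: string, token: string) {
  return {
    method: "POST",
    headers: {
      Authorization: `Bearer ${token}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ inputs: prompt }),
  };
}

// Normalizes the two response shapes relevant to this README:
// - text-generation (gpt2, distilgpt2): [{ generated_text: "..." }]
// - fill-mask (bert-base-uncased): [{ sequence: "...", score: ... }, ...]
//   where the first candidate is the highest-scoring one.
function extractText(payload: unknown): string {
  if (!Array.isArray(payload) || payload.length === 0) {
    throw new Error(`Unexpected response: ${JSON.stringify(payload)}`);
  }
  const first = payload[0] as { generated_text?: unknown; sequence?: unknown };
  if (typeof first.generated_text === "string") return first.generated_text;
  if (typeof first.sequence === "string") return first.sequence;
  throw new Error(`Unrecognized response item: ${JSON.stringify(first)}`);
}

// Sends the prompt to the model URL (e.g. the value of HF_API_URL) and
// returns the generated or filled-in text.
async function queryModel(
  apiUrl: string,
  prompt: string,
  token: string,
  fetchFn: FetchLike,
): Promise<string> {
  const res = await fetchFn(apiUrl, buildInferenceRequest(prompt, token));
  if (!res.ok) {
    // e.g. 503 while the model is still loading on the Inference API
    throw new Error(`Inference API returned HTTP ${res.status}`);
  }
  return extractText(await res.json());
}
```

On Node 18 or later, the built-in global `fetch` should satisfy `FetchLike`, so a route handler could call `queryModel(process.env.HF_API_URL!, prompt, process.env.HF_API_TOKEN!, fetch)`. Injecting the fetch function keeps the helper testable without network access.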

✅ Database

- SQLite — Lightweight local storage for user prompts, responses, and ratings.
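A possible layout for that local storage is sketched below. The `evaluations` table, its columns, and the `Evaluation` type and `buildInsert` helper are assumptions for illustration, not the repository's schema. The sketch validates the 1-5 rating before writing and passes user text as bound `?` parameters instead of concatenating it into SQL.

```typescript
// Hypothetical table layout; the repository's actual schema may differ.
// rating is nullable so a response can be stored before the user rates it.
const CREATE_EVALUATIONS_TABLE = `
CREATE TABLE IF NOT EXISTS evaluations (
  id         INTEGER PRIMARY KEY AUTOINCREMENT,
  model      TEXT    NOT NULL,
  prompt     TEXT    NOT NULL,
  response   TEXT    NOT NULL,
  rating     INTEGER CHECK (rating BETWEEN 1 AND 5),
  created_at TEXT    NOT NULL DEFAULT (datetime('now'))
)`;

interface Evaluation {
  model: string;
  prompt: string;
  response: string;
  rating: number | null; // null until the user rates the response
}

// Validates an evaluation and returns SQL with `?` placeholders plus its
// parameters, so user-supplied text is never spliced into the SQL string.
function buildInsert(e: Evaluation): { sql: string; params: (string | number | null)[] } {
  if (!e.model.trim() || !e.prompt.trim()) {
    throw new Error("model and prompt must be non-empty");
  }
  if (e.rating !== null && (!Number.isInteger(e.rating) || e.rating < 1 || e.rating > 5)) {
    throw new Error(`rating must be an integer from 1 to 5, got ${e.rating}`);
  }
  return {
    sql: "INSERT INTO evaluations (model, prompt, response, rating) VALUES (?, ?, ?, ?)",
    params: [e.model, e.prompt, e.response, e.rating],
  };
}
```

With the `sqlite3` npm package the pair could be executed as `db.run(sql, params)`; with `better-sqlite3`, as `db.prepare(sql).run(...params)`. The `CHECK` constraint repeats the rating rule in the database itself, so invalid rows are rejected even if another code path skips validation.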

✅ Developer Tools

- ESLint & Prettier — Code linting and formatting.

- GitHub Actions — Continuous integration (CI) workflows for running unit and integration tests.

🚀 Installation & Usage

⚙️ Backend Setup

```bash
# Clone the repository
git clone https://github.com/PhilJotham14/llm-evaluation-chatbot.git
cd llm-evaluation-chatbot/backend

# Install backend dependencies
npm install

# Run the backend server
npx ts-node server.ts
```

⚙️ Frontend Setup

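```bash
# In a second terminal (the backend server keeps running in the first),
# starting from the backend directory
cd ../frontend

# Install frontend dependencies
npm install

# Run the frontend app
npm start
```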

🌐 Open http://localhost:3000 to use the application.

Change the model by setting `HF_API_URL` in your `.env` file, for example:

```
HF_API_URL=https://api-inference.huggingface.co/models/gpt2
```

✳️ Alternative models supported: `bert-base-uncased`, `distilgpt2`.
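The backend presumably reads `HF_API_URL` at startup; the sketch below shows one defensive way to do that. The GPT-2 fallback, the `resolveModelConfig` helper, the `ModelConfig` type, and the `SUPPORTED_MODELS` list are assumptions based on the models named in this README. The helper extracts the model id from the `/models/<id>` path and flags models outside that list instead of rejecting them.

```typescript
// Assumed default and allow-list, based on the models named in this README.
const DEFAULT_HF_API_URL = "https://api-inference.huggingface.co/models/gpt2";
const SUPPORTED_MODELS = ["gpt2", "distilgpt2", "bert-base-uncased"];

interface ModelConfig {
  apiUrl: string;
  modelId: string;
  supported: boolean;
}

// Resolves the model endpoint from an environment map such as process.env
// (after .env has been loaded). Falls back to GPT-2 if HF_API_URL is unset or blank.
function resolveModelConfig(env: Record<string, string | undefined>): ModelConfig {
  const apiUrl = env.HF_API_URL?.trim() || DEFAULT_HF_API_URL;
  const marker = "/models/";
  const start = apiUrl.indexOf(marker);
  if (start === -1) {
    throw new Error(`HF_API_URL should end in /models/<model-id>, got "${apiUrl}"`);
  }
  // Keep everything after /models/ (ids may include a namespace, e.g. "org/name")
  // and drop any trailing slashes.
  const modelId = apiUrl.slice(start + marker.length).replace(/\/+$/, "");
  if (modelId === "") {
    throw new Error(`HF_API_URL is missing a model id: "${apiUrl}"`);
  }
  return { apiUrl, modelId, supported: SUPPORTED_MODELS.includes(modelId) };
}
```

Calling `resolveModelConfig(process.env)` after the `.env` file has been loaded (for example with the `dotenv` package) yields the URL to call plus a `supported` flag that could drive a startup warning.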

🎯 Purpose and Agentic AI Relevance

The LLM Evaluation Chatbot supports agentic AI research by collecting human-in-the-loop feedback on LLM outputs. The collected ratings help assess model alignment, helpfulness, and user satisfaction, which in turn informs the design of more aligned, human-centered AI systems, a core goal of agentic AI innovation.

🔑 Notices & Extensions

Model Customization: Switch models through the `HF_API_URL` setting to compare evaluations across different LLMs.

Future Enhancements: Possible extensions include integrating larger models (e.g., GPT-4) and alignment datasets.

User Data Privacy: Ratings and prompts are stored locally in SQLite. Prompts are still sent to the Hugging Face Inference API to generate responses.

📂 Repository

📌 GitHub Repo: https://github.com/PhilJotham14/llm-evaluation-chatbot

📞 Contact

For collaboration or inquiries:

📧 Email: p.jothamokiror@gmail.com