Overview

This project implements a Monte Carlo Tree Search (MCTS) algorithm designed to enhance the creative capabilities of large language models (LLMs). The system employs a tree-based exploration strategy to iteratively refine LLM responses, optimizing quality through multiple feedback-driven iterations.

Inspired by decision-making algorithms used in games and planning, this approach adapts MCTS to creative contexts such as short story generation, AI-user conversations, and content fine-tuning. Structured feedback loops combined with quality metrics drive systematic improvement of outputs.

This framework is particularly useful for tasks that require high-quality text, such as blog post creation, social media marketing, story creation and more.

Key Features

- Tree-Based Exploration:

- Each response is treated as a node in a tree. New variations are generated as child nodes.

- Nodes are evaluated for quality using a combination of LLM-based scoring, metric-driven evaluation, and historical feedback analysis.

- Feedback and Refinement:

- Nodes receive structured feedback in JSON format, identifying key issues such as coherence, readability, or creativity gaps.

- Responses are iteratively refined based on feedback and historical trends (e.g., recurring issues in prior nodes).

- Objective Metrics:

- The system uses a diverse range of metrics to evaluate responses, including:

- Perplexity: Measures fluency.

- Coherence: Assesses logical flow and consistency.

- Diversity: Evaluates lexical richness and originality.

- Readability: Gauges clarity using Flesch Reading Ease scores.

- Entity/Term Consistency: Checks that named entities and key terms are used consistently, as a proxy for factual accuracy.

- Sentiment Consistency: Checks that the emotional tone stays consistent.

- These metrics are dynamically weighted based on the task type (e.g., creative vs. mathematical reasoning).

- Local LLM Integration:

- The system interfaces with local LLM models to generate and refine responses.

- Context-specific instruction sets (e.g., Alpaca, Vicuna, Llama3) tailor prompts to the task.

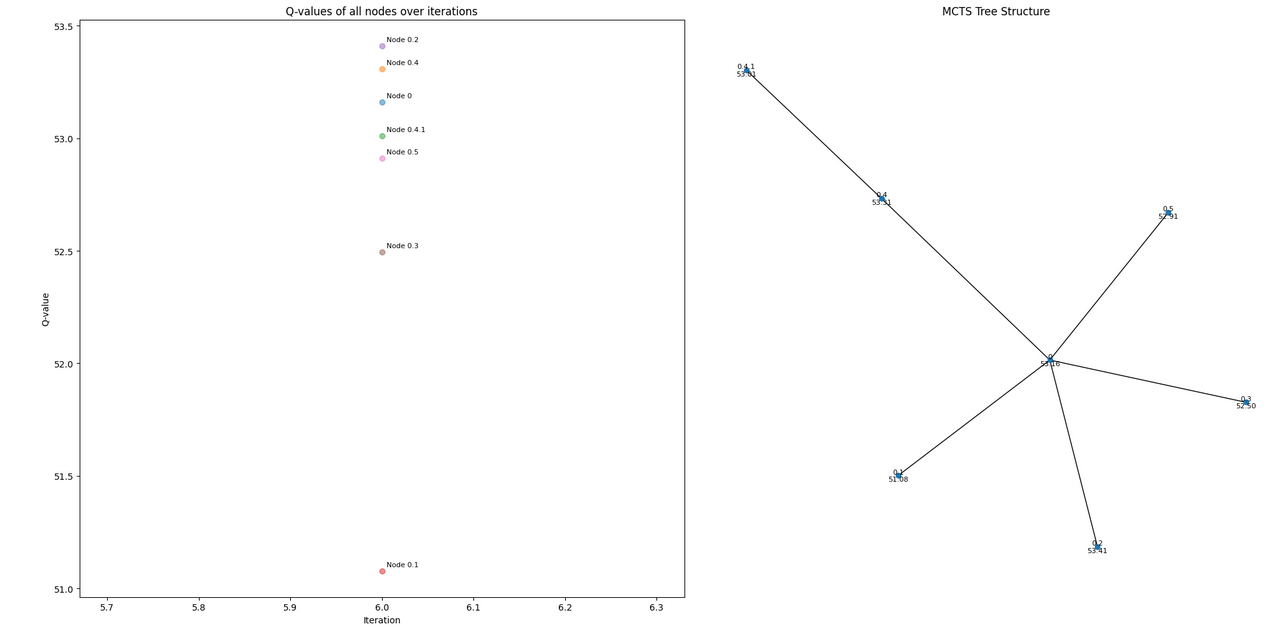

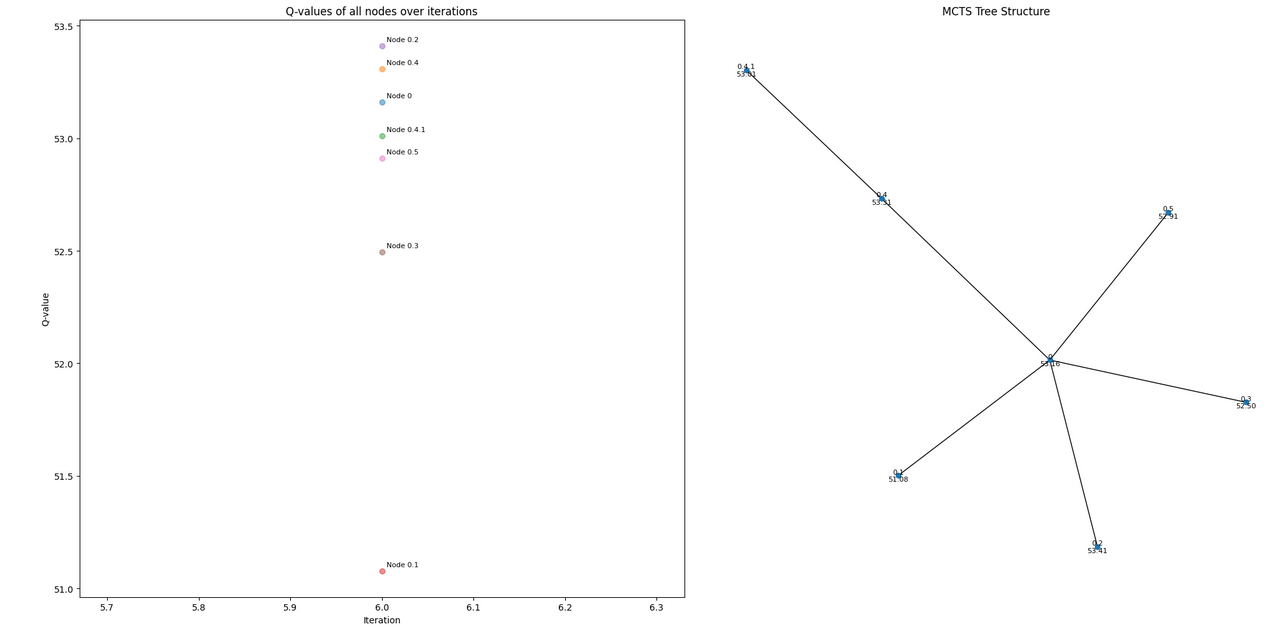

- Visualization:

- Real-time visualizations of the MCTS tree structure and node evaluations help track progress.

- Q-values (quality scores) are plotted over iterations, making it easy to identify high-performing nodes.

- Save and Resume:

- Supports saving the search state to a JSON file (every XX iterations) and loading it back to resume a run. The saved file also makes a full analysis of the MCTS process possible.
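A minimal sketch of the save-and-resume idea, assuming the tree can be flattened into plain dictionaries. The field names (`nodes`, `q_value`, `visits`), the helper names, and the checkpoint interval are hypothetical and are not taken from the project's actual file format.

```python
import json
import os
import tempfile


def save_state(tree: dict, path: str) -> None:
    """Write the whole search state to a JSON file (hypothetical layout)."""
    with open(path, "w", encoding="utf-8") as fh:
        json.dump(tree, fh, indent=2)


def load_state(path: str) -> dict:
    """Read a previously saved search state back into memory."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def maybe_checkpoint(tree: dict, iteration: int, every: int, path: str) -> bool:
    """Save only when the iteration number is a multiple of `every`."""
    if iteration % every == 0:
        save_state(tree, path)
        return True
    return False


# Illustrative state: a root node and one refined child.
tree = {
    "query": "Write a short story about a cat finding an owner",
    "iteration": 2,
    "nodes": [
        {"id": 0, "parent": None, "q_value": 62.0, "visits": 2},
        {"id": 1, "parent": 0, "q_value": 71.5, "visits": 1},
    ],
}

checkpoint_path = os.path.join(tempfile.gettempdir(), "mcts_state_example.json")
saved = maybe_checkpoint(tree, iteration=2, every=2, path=checkpoint_path)
restored = load_state(checkpoint_path)
```

Because the state is plain JSON, the same file can be opened in a notebook or any JSON viewer to inspect how Q-values evolved during a run.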

Applications

Current Implementation

- Creative Writing:

- Generate and refine short stories or creative content.

- Identify and address common issues like lack of coherence, repetitive language, or weak narrative structure.

- Mathematical Reasoning (Preliminary):

- Solve step-by-step problems by improving logical reasoning and accuracy.

- Evaluate consistency in mathematical expressions and solutions.

Future Potential

- Translation:

- Apply MCTS to refine translations, ensuring both linguistic accuracy and contextual relevance.

- Example: Iteratively improving technical translations for domain-specific content. (Not implemented yet)

- Domain-Specific Knowledge:

- Tailor LLM outputs to specialized fields like medicine, engineering, or law.

- Example: Enhancing factual accuracy and terminology in scientific writing. (Not implemented yet)

- Fine-Tuning via Direct Preference Optimization (DPO):

- Use MCTS to guide LLM fine-tuning by aligning outputs with desired preferences and styles.

- Applicable for model training pipelines.

How It Works

1. Initialization

- The process begins with a query (e.g., "Write a short story about a cat finding an owner").

- A root node is created, containing the initial response generated by the LLM.

2. Tree Expansion

- Nodes are expanded by generating new variations of the response (child nodes).

- Each child represents a refinement or alternative based on feedback and metrics.

3. Simulation

- Each node undergoes evaluation using:

- Structured feedback: Identifies areas for improvement (e.g., coherence, creativity).

- Quality metrics: Computes scores for various attributes (e.g., diversity, readability).

- LLM-based scoring: Uses the model's own evaluation capabilities to assign scores.

4. Backpropagation

- Scores are propagated back up the tree to update parent nodes.

- Q-values are adjusted to represent the quality of a node and its descendants.

5. Best Path Selection

- The algorithm identifies the best-performing path in the tree (i.e., the sequence of nodes leading to the highest-quality response).
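The five steps above can be sketched as a single loop. This is an illustration under stated assumptions, not the project's implementation: `refine` and `evaluate` are hypothetical stand-ins for the LLM refinement call and the combined feedback/metric scoring, Q-values are kept as running means on a 0 to 100 scale, and the best path is found by greedily following the highest-Q child.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Node:
    response: str
    parent: Optional["Node"] = None
    children: List["Node"] = field(default_factory=list)
    visits: int = 0
    q_value: float = 0.0  # running mean of rewards, 0-100


def refine(response: str, step: int) -> str:
    """Hypothetical stand-in for an LLM call that rewrites a response."""
    return f"{response} [refined {step}]"


def evaluate(response: str) -> float:
    """Hypothetical stand-in for feedback, metrics, and LLM-based scoring."""
    return min(100.0, 50.0 + 10.0 * response.count("[refined"))


def select(node: Node, max_children: int, c: float = 1.4) -> Node:
    """Descend while nodes are fully expanded, using a UCB-style score."""
    while len(node.children) >= max_children:
        node = max(
            node.children,
            key=lambda ch: ch.q_value / 100.0
            + c * math.sqrt(math.log(node.visits) / ch.visits),
        )
    return node


def backpropagate(node: Optional[Node], reward: float) -> None:
    """Update visit counts and running-mean Q-values up to the root."""
    while node is not None:
        node.visits += 1
        node.q_value += (reward - node.q_value) / node.visits
        node = node.parent


def best_path(root: Node) -> List[Node]:
    """Follow the highest-Q child at each level (an assumed policy)."""
    path = [root]
    while path[-1].children:
        path.append(max(path[-1].children, key=lambda ch: ch.q_value))
    return path


def run(query: str, iterations: int = 5, max_children: int = 2):
    root = Node(response=f"draft for: {query}")           # 1. initialization
    backpropagate(root, evaluate(root.response))
    for step in range(iterations):
        parent = select(root, max_children)
        child = Node(refine(parent.response, step), parent=parent)  # 2. expansion
        parent.children.append(child)
        reward = evaluate(child.response)                 # 3. simulation
        backpropagate(child, reward)                      # 4. backpropagation
    return root, best_path(root)                          # 5. best path


root, path = run("a cat finding an owner", iterations=5)
```

Every new child is scored as soon as it is created, so no child ever has zero visits when the UCB-style term divides by `ch.visits`.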

Technical Details

Monte Carlo Tree Search Components

- Node Representation:

- Each node stores:

- Query and response.

- Feedback and refined response.

- Quality metrics (Q-values, rewards).

- Parent-child relationships.

- Selection:

- Nodes are selected using a UCB (Upper Confidence Bound) strategy:

- Balances exploration (unvisited nodes) and exploitation (nodes with high Q-values).

- Expansion:

- New child nodes are created until the maximum children limit is reached.

- Each child represents a refined version of the parent response.

- Simulation:

- Nodes are evaluated using feedback and metrics to simulate their performance.

- Backpropagation:

- Updates Q-values for all ancestor nodes based on the child's performance.
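The selection rule can be illustrated with the standard UCB1 formula. The source only states that a UCB strategy is used, so the exact formula, the exploration constant `c`, and the rescaling of Q-values from 0 to 100 down to 0 to 1 are all assumptions made for this sketch.

```python
import math


def ucb_score(q_value: float, visits: int, parent_visits: int,
              c: float = 1.4, q_max: float = 100.0) -> float:
    """UCB1-style score: exploitation term plus exploration bonus."""
    if visits == 0:
        return math.inf  # unvisited children are always tried first
    exploit = q_value / q_max
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def pick_child(children, parent_visits: int, c: float = 1.4):
    """Return the child dictionary with the highest UCB score."""
    return max(
        children,
        key=lambda ch: ucb_score(ch["q_value"], ch["visits"], parent_visits, c),
    )


children = [
    {"name": "A", "q_value": 85.0, "visits": 10},  # strong, well explored
    {"name": "B", "q_value": 70.0, "visits": 1},   # weaker, barely explored
    {"name": "C", "q_value": 0.0, "visits": 0},    # never evaluated
]

first = pick_child(children, parent_visits=11)
second = pick_child(children[:2], parent_visits=11)
greedy = pick_child(children[:2], parent_visits=11, c=0.0)
```

With these numbers the unvisited node C wins first; once only A and B remain, the exploration bonus favors the rarely visited B, while setting `c=0.0` reduces the rule to pure exploitation and picks A.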

Feedback Mechanism

- Feedback is generated in JSON format, highlighting issues such as:

- Coherence gaps: "Plot lacks logical progression."

- Readability issues: "Sentence structure is overly complex."

- Creativity improvements: "Add more vivid imagery."
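As an illustration, feedback of this kind might be parsed and turned into a refinement prompt as follows. The JSON schema (`issues`, `category`, `comment`) and the helper names are hypothetical; the source only states that feedback is structured JSON covering issues such as coherence, readability, and creativity.

```python
import json

ALLOWED_CATEGORIES = {"coherence", "readability", "creativity"}

# Example payload in an assumed schema, using the comments quoted above.
raw_feedback = """
{
  "issues": [
    {"category": "coherence", "comment": "Plot lacks logical progression."},
    {"category": "readability", "comment": "Sentence structure is overly complex."},
    {"category": "creativity", "comment": "Add more vivid imagery."}
  ]
}
"""


def parse_feedback(raw: str) -> dict:
    """Group non-empty comments by category, dropping unknown categories."""
    data = json.loads(raw)
    grouped: dict = {}
    for issue in data.get("issues", []):
        category = issue.get("category")
        comment = issue.get("comment", "").strip()
        if category in ALLOWED_CATEGORIES and comment:
            grouped.setdefault(category, []).append(comment)
    return grouped


def to_refinement_prompt(grouped: dict) -> str:
    """Render grouped feedback as instructions for the next refinement."""
    lines = [
        f"- ({category}) {comment}"
        for category, comments in grouped.items()
        for comment in comments
    ]
    return "Revise the response to address:\n" + "\n".join(lines)


feedback = parse_feedback(raw_feedback)
prompt = to_refinement_prompt(feedback)
```

Validating categories before building the prompt keeps malformed or off-topic model output from leaking into the next refinement step.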

Evaluation Metrics

- Perplexity: Measures fluency from the likelihood a language model assigns to the text (lower perplexity indicates more fluent text).

- Coherence: Evaluates logical flow using cosine similarity of sentence embeddings.

- Diversity: Assesses lexical variety using n-gram entropy and type-token ratios.

- Readability: Uses Flesch Reading Ease to measure clarity.

- Entity Consistency: Checks factual consistency by tracking named entities across the text.

- Sentiment Consistency: Checks that the emotional tone stays stable across sentences.

- LLM auto-evaluation: The LLM scores its own output.
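Some of these metrics need no model at all. The sketch below shows one possible way to compute the diversity and readability metrics with the standard library only. The tokenizer and the vowel-group syllable counter are rough assumptions, so the resulting Flesch scores are approximate; the function names are illustrative.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase word tokenizer (a simplifying assumption)."""
    return re.findall(r"[a-z']+", text.lower())


def type_token_ratio(text: str) -> float:
    """Unique words divided by total words."""
    tokens = tokenize(text)
    return len(set(tokens)) / len(tokens) if tokens else 0.0


def ngram_entropy(text: str, n: int = 2) -> float:
    """Shannon entropy (in bits) of the n-gram distribution."""
    tokens = tokenize(text)
    grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
    if not grams:
        return 0.0
    total = len(grams)
    counts = Counter(grams)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def count_syllables(word: str) -> int:
    """Crude estimate: number of vowel groups, at least one."""
    return max(1, len(re.findall(r"[aeiouy]+", word)))


def flesch_reading_ease(text: str) -> float:
    """Flesch Reading Ease: higher scores mean easier text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = tokenize(text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (206.835
            - 1.015 * (len(words) / len(sentences))
            - 84.6 * (syllables / len(words)))


simple = flesch_reading_ease("The cat sat. The dog ran.")
dense = flesch_reading_ease(
    "Notwithstanding considerable meteorological unpredictability, "
    "international organizations deliberated extensively."
)
```

Perplexity and embedding-based coherence are left out here because they depend on a language model and a sentence-embedding model, respectively.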

Each metric can be enabled or disabled individually, and weights can be assigned to prioritize one metric over the others. The combined score (ranging from 0 to 100) is then used to assist in selecting the best node to expand.
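One way to read this paragraph is as a weighted average over the enabled metrics. The sketch below assumes every metric has already been normalized to the 0 to 100 range; the metric names, the weight values, and the `combined_score` helper are illustrative rather than the project's actual configuration.

```python
from typing import Dict, Optional, Set


def combined_score(scores: Dict[str, float],
                   weights: Dict[str, float],
                   enabled: Optional[Set[str]] = None) -> float:
    """Weighted mean of the enabled metrics, clamped to 0-100."""
    active = [
        name for name in scores
        if (enabled is None or name in enabled) and weights.get(name, 0.0) > 0
    ]
    total_weight = sum(weights[name] for name in active)
    if total_weight == 0:
        return 0.0
    value = sum(scores[name] * weights[name] for name in active) / total_weight
    return max(0.0, min(100.0, value))


# Hypothetical per-metric scores for one node, each already on a 0-100 scale.
scores = {"coherence": 80.0, "diversity": 60.0, "readability": 70.0, "llm_eval": 90.0}

# Hypothetical weights for a creative task.
creative_weights = {"coherence": 2.0, "diversity": 2.0, "readability": 1.0, "llm_eval": 3.0}

all_metrics = combined_score(scores, creative_weights)
subset = combined_score(scores, creative_weights, enabled={"coherence", "llm_eval"})
```

Dividing by the sum of active weights keeps the result on the same 0 to 100 scale regardless of how many metrics are switched on.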

Importance Sampling

- Nodes with higher rewards are prioritized for expansion, ensuring efficient exploration of promising paths.
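A possible reading of this prioritization is to sample the node to expand with probability proportional to its reward. The sketch below is an interpretation, not the project's confirmed method: the `temperature` parameter, the reward floor, and the node names are assumptions introduced for illustration.

```python
import random
from collections import Counter
from typing import Dict


def expansion_probabilities(rewards: Dict[str, float],
                            temperature: float = 1.0,
                            floor: float = 1e-6) -> Dict[str, float]:
    """Turn rewards into sampling probabilities (reward-proportional)."""
    raw = {
        name: max(reward, floor) ** (1.0 / temperature)
        for name, reward in rewards.items()
    }
    total = sum(raw.values())
    return {name: weight / total for name, weight in raw.items()}


def sample_node(rewards: Dict[str, float], rng: random.Random,
                temperature: float = 1.0) -> str:
    """Draw one node name according to its expansion probability."""
    probs = expansion_probabilities(rewards, temperature)
    names = list(probs)
    return rng.choices(names, weights=[probs[n] for n in names], k=1)[0]


rewards = {"node_a": 85.0, "node_b": 60.0, "node_c": 15.0}
probs = expansion_probabilities(rewards)

rng = random.Random(0)  # seeded so repeated runs draw the same sequence
draws = Counter(sample_node(rewards, rng) for _ in range(2000))
```

Sampling rather than always taking the top node keeps some chance of revisiting weaker branches; a `temperature` below 1.0 sharpens the preference for high-reward nodes, and a value above 1.0 flattens it.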

Visualization

The system includes a real-time visualization tool that displays:

- Tree Structure:

- Nodes and edges representing the MCTS structure.

- Q-values and other metrics annotated on nodes.

- Q-Value Progression:

- A scatter plot showing Q-values of all nodes over iterations.

Getting Started

Basic usage:

    query = "Write a short story about..."

    mcts_sr_algorithm(
        query,
        topic="creative",
        iterations=5,
        qvalue_threshold=80,
        importance_sampling=True,
    )

Example: Write a short story about a cat finding an owner.

- Iteration 1: Initial response lacks coherence and creativity.

Whiskers had been on her own for as long as she could remember. She scavenged for food and shelter, always keeping to herself. But as the days went by, she began to feel the loneliness belonging to her. She longed for a warm lap to snuggle up in, a gentle hand to pet her soft fur, and a loving voice to whisper sweet nothings in her ear.

One crisp autumn morning, Whiskers decided to explore the small town she lived in. She had heard rumors of a cozy little bakery on Main Street, where the aroma of freshly baked bread wafted out into the streets. As she padded along the sidewalk, her ears perked up at the sweet scent, and her tail twitched with excitement.

As she pushed open the door, a bell above it rang out, announcing her arrival. The baker, Mrs. Jenkins, looked up from her work and smiled. Whiskers was immediately smitten with the kind, round-faced woman. She padded over to the counter, rubbing against Mrs. Jenkins' legs in search of attention.

Mrs. Jenkins laughed and reached out to pet Whiskers, who began to purr contentedly. "Ah, a stray cat," she said to herself. "Just what I need." She scooped Whiskers up gently and cradled her in her arms, showering her with affection.

From that moment on, Whiskers was hooked. She followed Mrs. Jenkins everywhere, purring loudly as the baker petted her, fed her treats, and even built her a cozy little bed in the bakery. The townspeople soon grew accustomed to seeing the pair, and children would giggle with delight as Whiskers chased after scraps of bread on the floor.

As winter rolled in, Whiskers would snuggle up beside Mrs. Jenkins on cold days, her fur a warm blanket against the chill. On spring mornings, she'd watch with wonder as the snow melted away, revealing a world full of new sights and smells.

Whiskers knew she had found her forever home. And as she drifted off to sleep each night, surrounded by the comforting sights and smells of the bakery, she knew she would never have to face the loneliness again. She was exactly where she belonged, with the warm and loving Mrs. Jenkins by her side.

- Iteration 5: Best-performing response identified with a Q-value of 85. The refined response shows improved narrative flow and vivid details.

Whiskers had been roaming the streets for as long as she could remember. She scavenged for food and shelter, always keeping to herself. But as the days went by, she began to feel the ache of loneliness. She longed for a warm place to call her own, a gentle touch to soothe her fur, and a loving voice to calm her worries. The winds howled through the autumn nights, and Whiskers huddled in her makeshift bed, her ears peeled for the sound of footsteps.

One crisp morning, Whiskers decided to explore the small town she lived in. The sun cast long shadows across the pavement as she padded along Main Street, her ears perked up at the aroma of freshly baked bread wafting from the cozy little bakery. The scent was intoxicating, a mix of warm spices and sweet vanilla that made her tail twitch with excitement. She pushed open the door, and the bell above it rang out in greeting.

Mrs. Jenkins, the baker, looked up from her work and smiled. Whiskers was taken aback by the kind, round-faced woman. She padded over to the counter, rubbing against Mrs. Jenkins' legs in search of attention. Mrs. Jenkins laughed and reached out to pet Whiskers, her gentle touch sending shivers down Whiskers' spine. "Ah, a stray cat," she said to herself. "Just what I need." She scooped Whiskers up gently and cradled her in her arms, showering her with affection.

From that moment on, Whiskers was hooked. She followed Mrs. Jenkins everywhere, purring loudly as the baker petted her, fed her treats, and even built her a cozy little bed in the bakery. But despite her newfound sense of security, Whiskers' fear of animal control still lingered. She watched the streets anxiously, her ears tuned to the sound of sirens.

One fateful day, animal control showed up at the bakery. Whiskers' heart skipped a beat as she cowered behind Mrs. Jenkins. But the baker refused to let them take Whiskers away, and a fierce argument ensued. Mrs. Jenkins stood firm, her voice calm but resolute. "You're a part of this family now, little one," she whispered to Whiskers. "We're not going to let you go." The authorities left empty-handed, and Whiskers purred with relief as Mrs. Jenkins cradled her in her arms once more.

As the seasons changed, Whiskers and Mrs. Jenkins grew closer still. Whiskers learned to recognize Mrs. Jenkins' gentle touch and soothing voice, and her independence began to fade. Mrs. Jenkins, for her part, found comfort in Whiskers' companionship, and their bond became a source of strength and solace. They sat together on the bakery's windowsill, watching the sun set over the rooftops, Whiskers' purrs a gentle accompaniment to the sweet scent of freshly baked bread.

Significance

This project bridges the gap between decision-making algorithms and language model optimization. By adapting MCTS to creative contexts, it enables:

- Systematic refinement of LLM outputs.

- Objective evaluation using diverse metrics.

- Scalability to various domains (e.g., creative writing, mathematical reasoning).

Benefits

- Enhanced Output Quality: Iterative feedback loops improve creativity and coherence, even with relatively weak local models (for example, a quantized 8B Llama 3.1).

- Fine-Tuning Framework: Provides a robust framework for aligning LLM outputs with user preferences.

- Cross-Domain Applications: Adaptable to tasks requiring precision, such as translation or scientific writing.

- vLLM-ready: A vLLM API (provided) speeds up the entire process, using n-gram decoding and structured output for faster, more accurate generation at no additional memory cost.

Future Directions

- Domain-Specific Fine-Tuning:

- Train models for specialized tasks using MCTS-guided optimization.

- Integration with RLHF (Reinforcement Learning from Human Feedback): Combine MCTS with RLHF pipelines to further enhance LLM outputs.

Conclusion

This project demonstrates the potential of Monte Carlo Tree Search to elevate the quality and creativity of large language models. By combining structured feedback, rigorous evaluation, and iterative refinement, it offers a powerful framework for optimizing LLM outputs across diverse applications.