The purpose of this project is to move an agentic AI research assistant from an experimental setup into a production-ready environment, with robust testing and deployment pipelines. The assistant automates two critical tasks for researchers: generating research abstracts from a title and category, and summarizing webpage content.

By building on the prior prototype Previous Publication

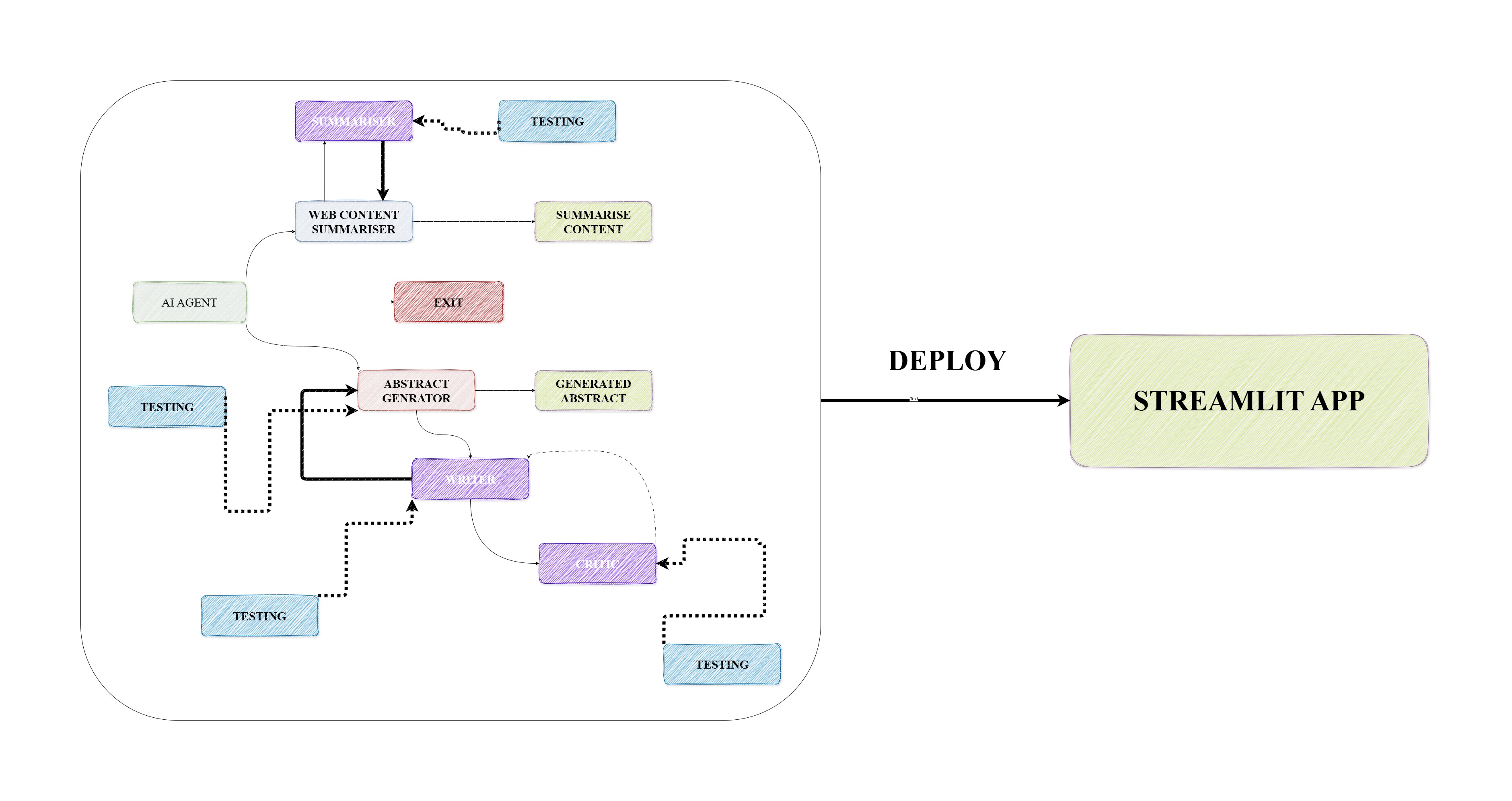

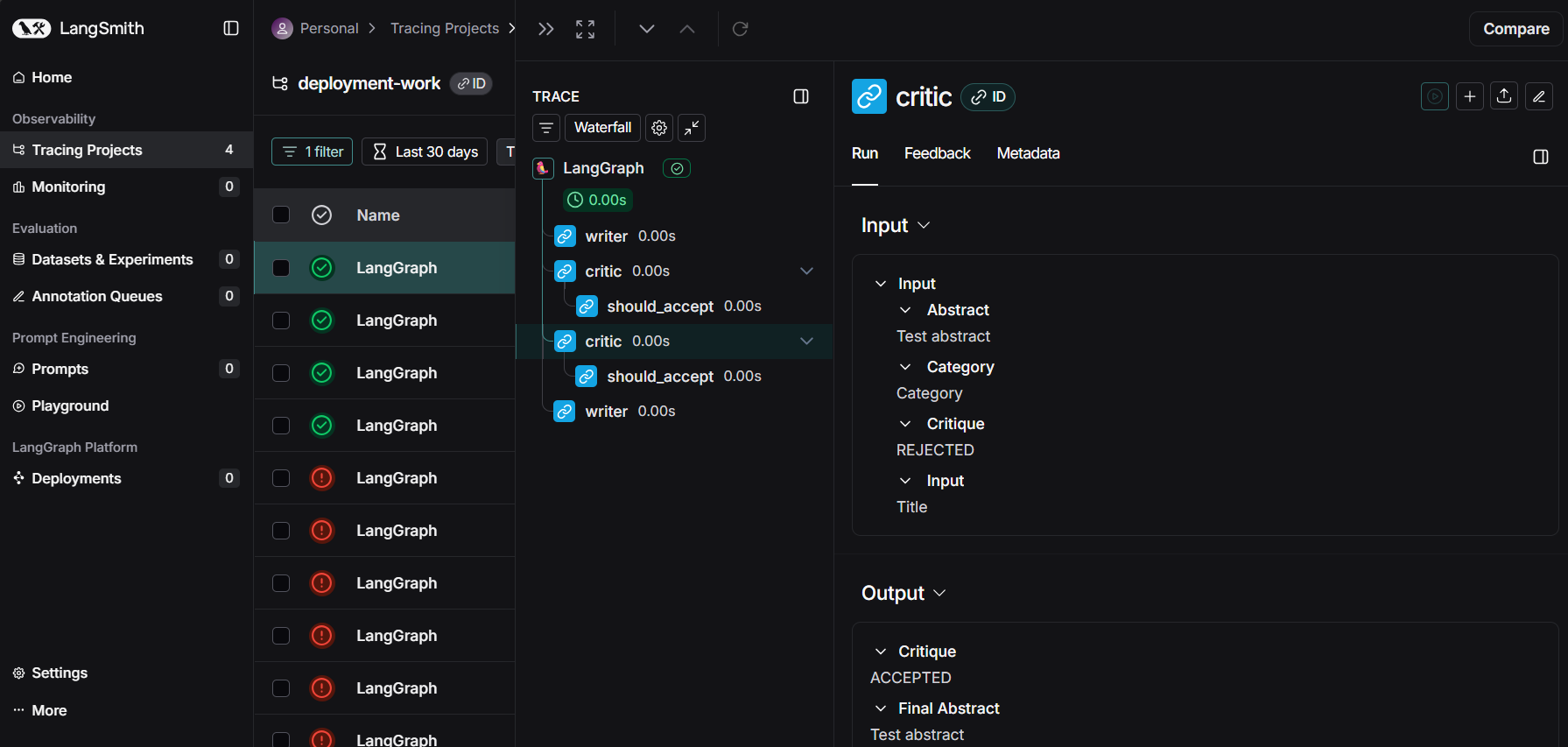

, via extending it with a stronger focus on testing, reliability, and deployment readiness. By combining LangGraph for agent orchestration, LangSmith for workflow tracing, and LLM-powered agents from HuggingFace, the project provides a modular, reproducible, and user-friendly tool that can be easily deployed via Streamlit.

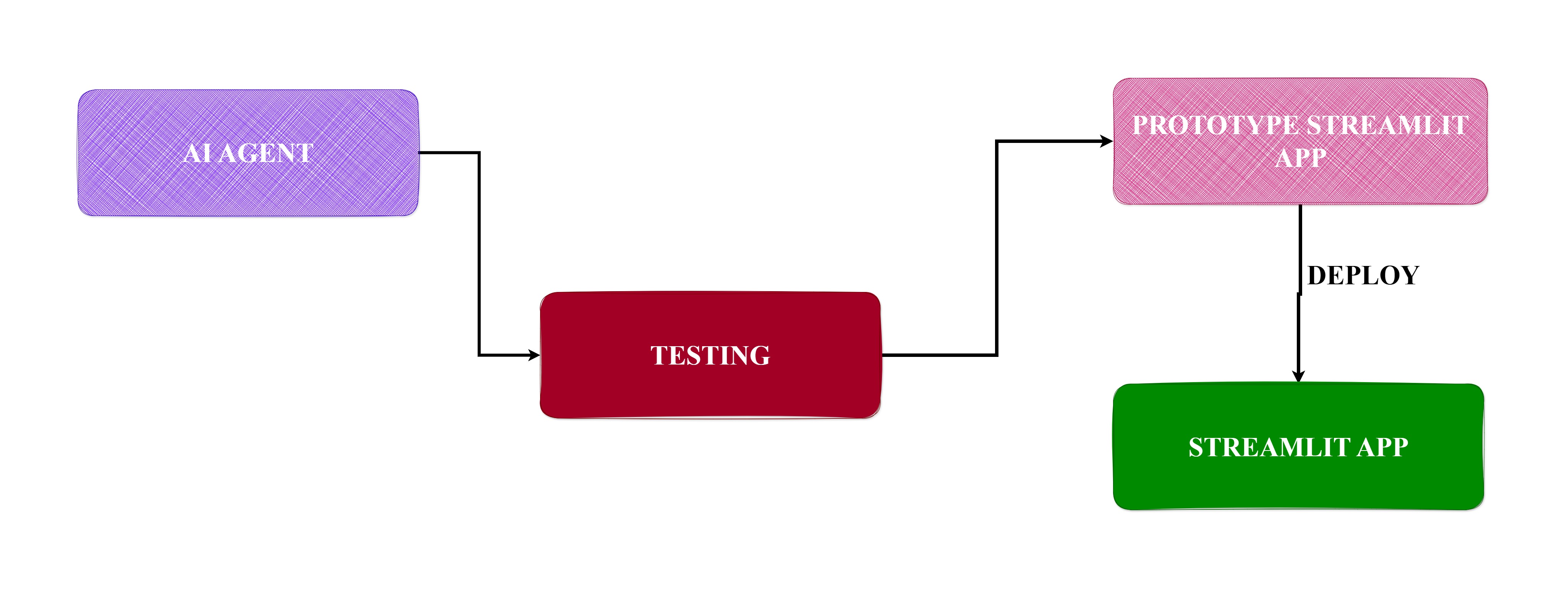

The methodology remains the same as in the previous project, with the addition of testing with Pytest and pytest-mock and, finally, deployment on Streamlit.

Testing

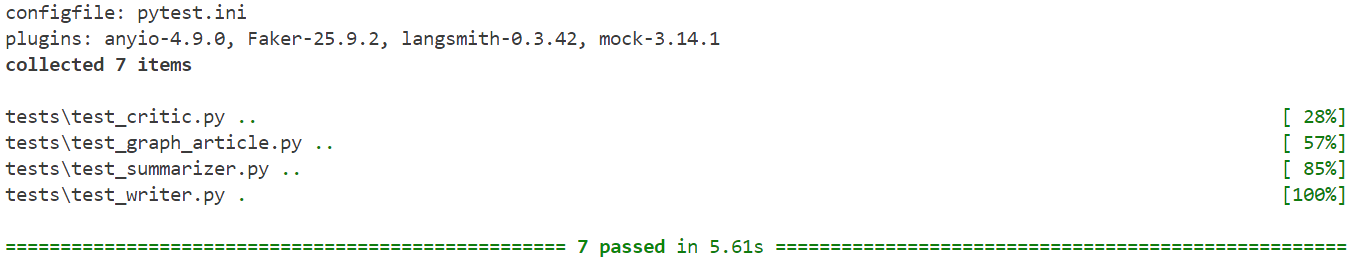

The test framework for the project was designed to ensure the correctness, robustness, and reliability of each modular component within the agentic research assistant. It begins with test_writer.py, which validates the writer node responsible for producing research abstracts from a given title and category. By mocking the underlying language model chain, this test isolates the generation logic from any external dependencies and confirms that the resulting abstract is stored in the state’s "abstract" field.
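A test of this kind could look roughly like the sketch below. The `writer_node` function, its `chain` argument, and the `title`/`category` state keys are illustrative assumptions, not the repository's actual signatures; only the "abstract" field comes from the description above. The standard library's `unittest.mock` is used here in place of the pytest-mock `mocker` fixture so the example runs on its own.

```python
from unittest.mock import MagicMock


def writer_node(state: dict, chain) -> dict:
    """Hypothetical writer node: asks the chain for an abstract and stores it."""
    abstract = chain.invoke({"title": state["title"], "category": state["category"]})
    return {**state, "abstract": abstract}


def test_writer_stores_abstract():
    # Mock the LLM chain so no model or network call is made.
    fake_chain = MagicMock()
    fake_chain.invoke.return_value = "A mocked abstract."

    state = {"title": "Graph Neural Networks", "category": "cs.LG"}
    result = writer_node(state, fake_chain)

    # The generated text must land in the "abstract" field of the state.
    assert result["abstract"] == "A mocked abstract."
    fake_chain.invoke.assert_called_once_with(
        {"title": "Graph Neural Networks", "category": "cs.LG"}
    )
```

Passing the chain in as an argument is a choice made for this sketch; if the real node imports its chain at module level, the same effect can be achieved by patching that name instead.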

The evaluation process continues with test_summarizer.py, which focuses on the web content summarizer node. Here, the aim is to confirm that, given valid input content, the summarizer produces a coherent and relevant summary. The test also verifies graceful fallback behavior, ensuring that the node returns the default message "No content to summarize" when no input text is available.
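The two summarizer cases can be sketched as follows. The `summarizer_node` function and the `content`/`summary` state keys are hypothetical names chosen for illustration; the fallback message is the one quoted above.

```python
from unittest.mock import MagicMock

FALLBACK_MESSAGE = "No content to summarize"


def summarizer_node(state: dict, chain) -> dict:
    """Hypothetical summarizer node with a fallback for empty input."""
    content = (state.get("content") or "").strip()
    if not content:
        # Skip the LLM entirely when there is nothing to summarize.
        return {**state, "summary": FALLBACK_MESSAGE}
    return {**state, "summary": chain.invoke({"content": content})}


def test_summarizer_with_content():
    fake_chain = MagicMock()
    fake_chain.invoke.return_value = "Short summary."
    result = summarizer_node({"content": "Some long page text."}, fake_chain)
    assert result["summary"] == "Short summary."


def test_summarizer_without_content():
    fake_chain = MagicMock()
    result = summarizer_node({"content": ""}, fake_chain)
    assert result["summary"] == FALLBACK_MESSAGE
    # The mocked chain must not be called on the fallback path.
    fake_chain.invoke.assert_not_called()
```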

More complex interactions are tested in test_graph_article.py, which validates the orchestration of the article generation workflow graph. This workflow models a writer–critic loop, where the writer produces an abstract and the critic evaluates its quality. Two key scenarios are tested: one in which the critic immediately accepts the first abstract, and another in which the initial draft is rejected and revised before final approval. These tests confirm that the workflow transitions correctly between nodes and adheres to the intended decision-making process.
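The two scenarios can be illustrated with a plain-Python stand-in for the graph. This is not the LangGraph implementation itself: `run_article_loop`, the `max_rounds` limit, and the boolean critic verdict are assumptions made to keep the sketch self-contained. The point is how a mock's `side_effect` list scripts a "reject, then accept" sequence.

```python
from unittest.mock import MagicMock


def run_article_loop(state: dict, writer, critic, max_rounds: int = 3) -> dict:
    """Plain-Python stand-in for the writer-critic graph (not LangGraph itself)."""
    for _ in range(max_rounds):
        state = {**state, "abstract": writer(state)}
        if critic(state["abstract"]):  # True means the critic accepts the draft
            return {**state, "final_abstract": state["abstract"]}
    return state  # no draft was accepted within max_rounds


def test_accepts_first_draft():
    writer = MagicMock(return_value="draft 1")
    critic = MagicMock(return_value=True)
    result = run_article_loop({"title": "T", "category": "C"}, writer, critic)
    assert result["final_abstract"] == "draft 1"
    assert writer.call_count == 1


def test_revises_after_rejection():
    # side_effect yields one value per call: first draft rejected, second accepted.
    writer = MagicMock(side_effect=["draft 1", "draft 2"])
    critic = MagicMock(side_effect=[False, True])
    result = run_article_loop({"title": "T", "category": "C"}, writer, critic)
    assert result["final_abstract"] == "draft 2"
    assert writer.call_count == 2
```

Against the real graph, the same mocks would be injected into the writer and critic nodes and the assertions made on the state returned by the compiled graph.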

The critic's evaluation logic itself is isolated and tested in test_critic.py. By mocking the critic’s evaluation mechanism, this test ensures that approval or rejection decisions are correctly reflected in the output state: final_abstract is preserved on acceptance and removed on rejection.
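A minimal sketch of that state handling is shown below. The `critic_node` function and its injected `evaluate` callable are hypothetical; the real critic presumably wraps an LLM call, which is what the mock replaces here.

```python
from unittest.mock import MagicMock


def critic_node(state: dict, evaluate) -> dict:
    """Hypothetical critic node: keeps or clears final_abstract based on a verdict."""
    new_state = dict(state)
    if evaluate(state["abstract"]):
        new_state["final_abstract"] = state["abstract"]
    else:
        # Rejection: make sure no stale final_abstract survives.
        new_state.pop("final_abstract", None)
    return new_state


def test_critic_accepts():
    evaluate = MagicMock(return_value=True)
    result = critic_node({"abstract": "Good draft."}, evaluate)
    assert result["final_abstract"] == "Good draft."


def test_critic_rejects():
    evaluate = MagicMock(return_value=False)
    state = {"abstract": "Weak draft.", "final_abstract": "Weak draft."}
    result = critic_node(state, evaluate)
    assert "final_abstract" not in result
    evaluate.assert_called_once_with("Weak draft.")
```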

To maintain test speed and reproducibility, conftest.py provides a set of fixtures that replace real API calls and LLM executions with mocked responses. This design helps prevent network calls, avoids rate limits, and ensures that results are consistent regardless of execution environment. Together, these tests form a comprehensive safety net, validating both individual components and their collaborative behavior, while keeping the development process efficient and predictable.
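A conftest.py along these lines might resemble the following sketch. The helper `make_fake_chain` and the fixture names are assumptions for illustration and are not taken from the repository; the mocked responses are placeholder strings.

```python
import pytest
from unittest.mock import MagicMock


def make_fake_chain(response: str) -> MagicMock:
    """Build a stand-in chain whose invoke() always returns `response`."""
    chain = MagicMock(name="fake_chain")
    chain.invoke.return_value = response
    return chain


@pytest.fixture
def fake_writer_chain():
    # Hypothetical fixture: replaces the writer's LLM chain in tests.
    return make_fake_chain("A mocked abstract.")


@pytest.fixture
def fake_summarizer_chain():
    # Hypothetical fixture: replaces the summarizer's LLM chain in tests.
    return make_fake_chain("A mocked summary.")
```

Any test in the same directory can then request `fake_writer_chain` or `fake_summarizer_chain` as a parameter, and pytest supplies a fresh mock for each test without touching the network.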

Deployment

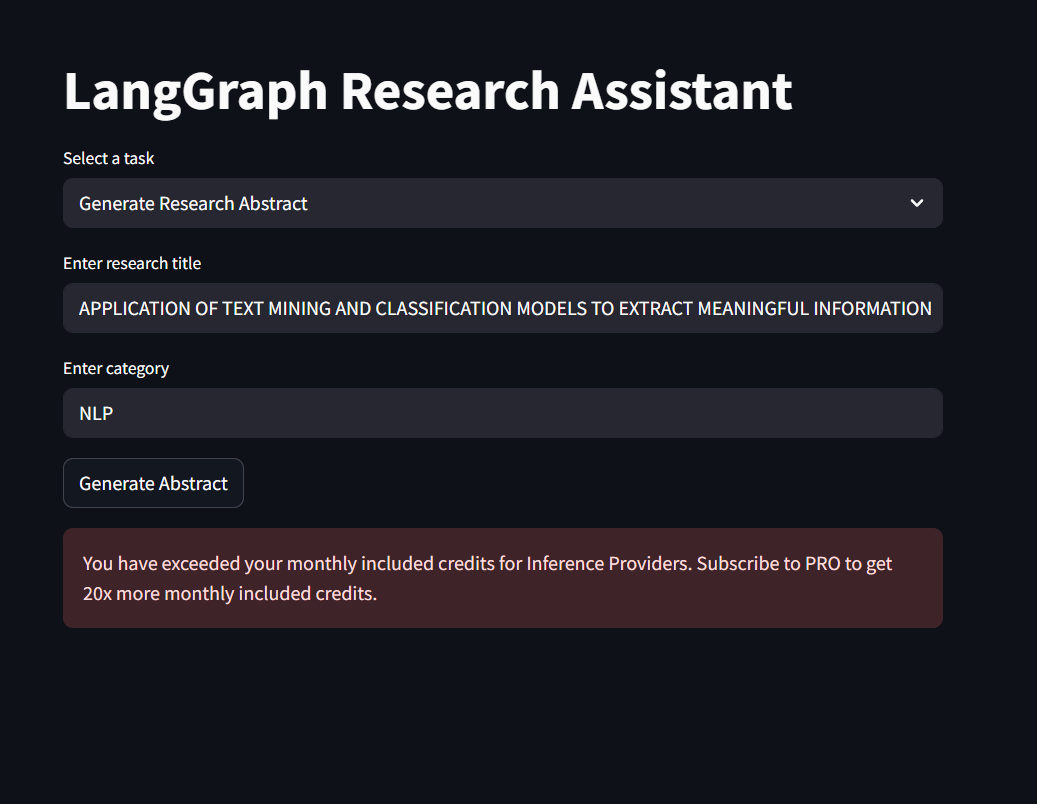

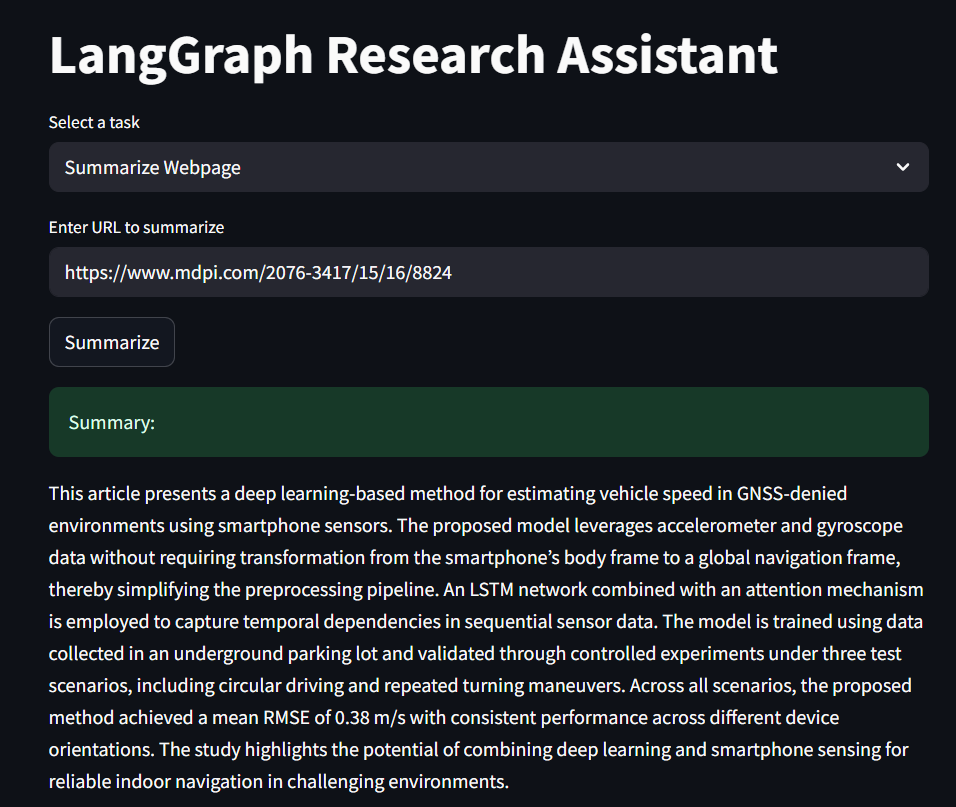

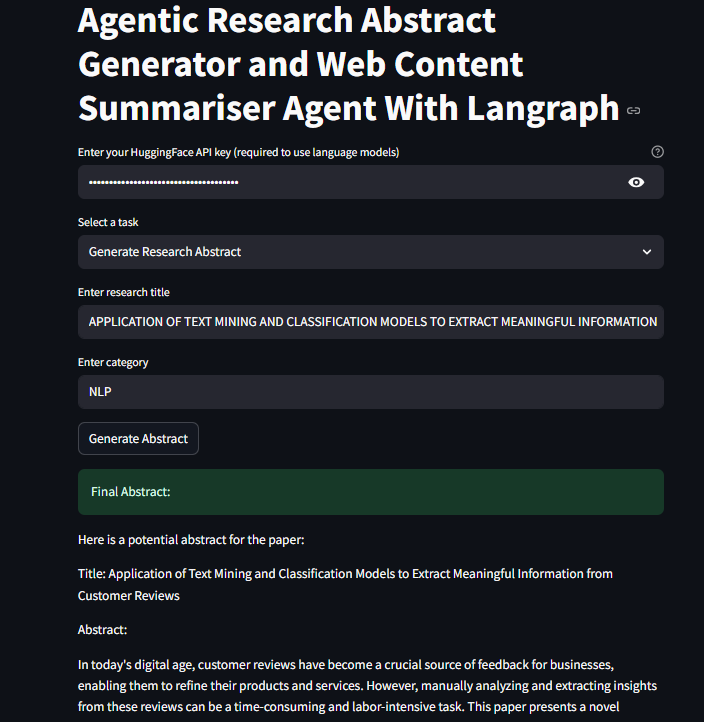

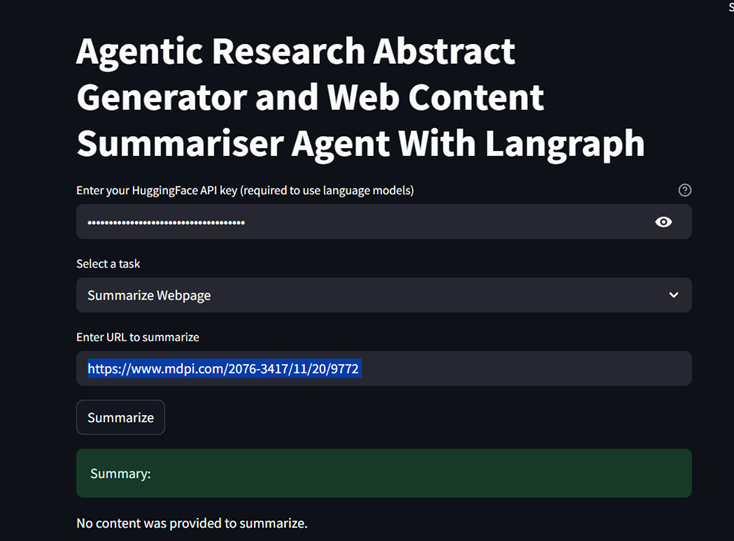

The streamlit_app.py file serves as the main user interface for the project, allowing users to interact with two core functionalities: generating research abstracts and summarizing webpage content. Built with Streamlit, it provides input fields for a HuggingFace API key, research title, category, or URL, and routes requests to the appropriate LangGraph workflow—either article_graph for the writer–critic abstract generation loop or web_graph for web content summarization. The app handles user input validation, API authentication, error messaging, and result display, offering a simple, interactive way to leverage the system’s language model and summarization capabilities directly from a browser.
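The validation and routing step can be sketched independently of the Streamlit widgets. In the sketch below, `route_request`, the `mode` values, the error messages, and the state keys are all hypothetical; the only assumption about the graphs is that they expose an `invoke(state)` method, as compiled LangGraph graphs do. Mocks stand in for article_graph and web_graph so the example runs without any API key or model.

```python
from unittest.mock import MagicMock


def route_request(mode: str, api_key: str, inputs: dict, article_graph, web_graph) -> dict:
    """Hypothetical helper: validate the form values and invoke the matching graph."""
    if not api_key:
        return {"error": "Please provide a HuggingFace API key."}
    if mode == "abstract":
        if not inputs.get("title") or not inputs.get("category"):
            return {"error": "Please provide both a title and a category."}
        return article_graph.invoke(
            {"title": inputs["title"], "category": inputs["category"]}
        )
    if mode == "summary":
        if not inputs.get("url"):
            return {"error": "Please provide a URL."}
        return web_graph.invoke({"url": inputs["url"]})
    return {"error": f"Unknown mode: {mode}"}


# Usage with mocked graphs standing in for article_graph and web_graph.
article_graph = MagicMock()
article_graph.invoke.return_value = {"final_abstract": "A mocked abstract."}
web_graph = MagicMock()
web_graph.invoke.return_value = {"summary": "A mocked summary."}

result = route_request(
    "abstract", "hf_dummy_key", {"title": "T", "category": "C"}, article_graph, web_graph
)
```

In a Streamlit app, the values passed to such a helper would come from the input widgets, and the returned dictionary would be rendered either as an error message or as the result.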

The project can be replicated on any system configuration. Create a new folder and clone the GitHub repo using:

git clone https://github.com/daniau23/agentic_researcher.git

Once cloned, copy the environment_file.yml file from the yml_file folder into the newly created folder and follow the installation instructions in the previous project's GitHub repo to install all dependencies needed for the cloned project. After this, the tests can be run with the pytest command, and the Streamlit app can then be started with the streamlit run streamlit_app.py command.

In conclusion, this project delivers a production-ready agentic AI system that integrates research abstract generation and webpage summarization into a modular, test-driven, and user-friendly Streamlit application. Through comprehensive Pytest-based testing, the system ensures reliability, robustness, and maintainability, while the use of tools like LangGraph, LangSmith, and BeautifulSoup enables seamless orchestration and deployment. Despite challenges in LLM testing, Selenium integration, and deployment configuration, the final implementation offers an accessible, browser-based interface for researchers and knowledge workers to efficiently generate structured abstracts and concise web content summaries.

This project is licensed under the MIT License. See the LICENSE file for more details.