This project presents a multi-agent system that evaluates a candidate's public GitHub profile against a provided job description (JD). Leveraging LangGraph for agent orchestration, the system automates the extraction of required skills from JDs, analyzes GitHub repositories for tech stack compatibility and activity, and generates a comprehensive evaluation report. The approach demonstrates the power of agentic workflows in automating technical profile screening and recommendation tasks.

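Technical hiring often requires manual screening of candidates' open-source contributions to assess their fit for a role. This project automates the process by combining LLM-based skill extraction from the job description, repository and commit data from the GitHub APIs, and LangGraph agent orchestration.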

Manual technical profile screening is time-consuming, inconsistent, and prone to bias. Existing solutions lack automated, agentic workflows for matching open-source contributions to job requirements.

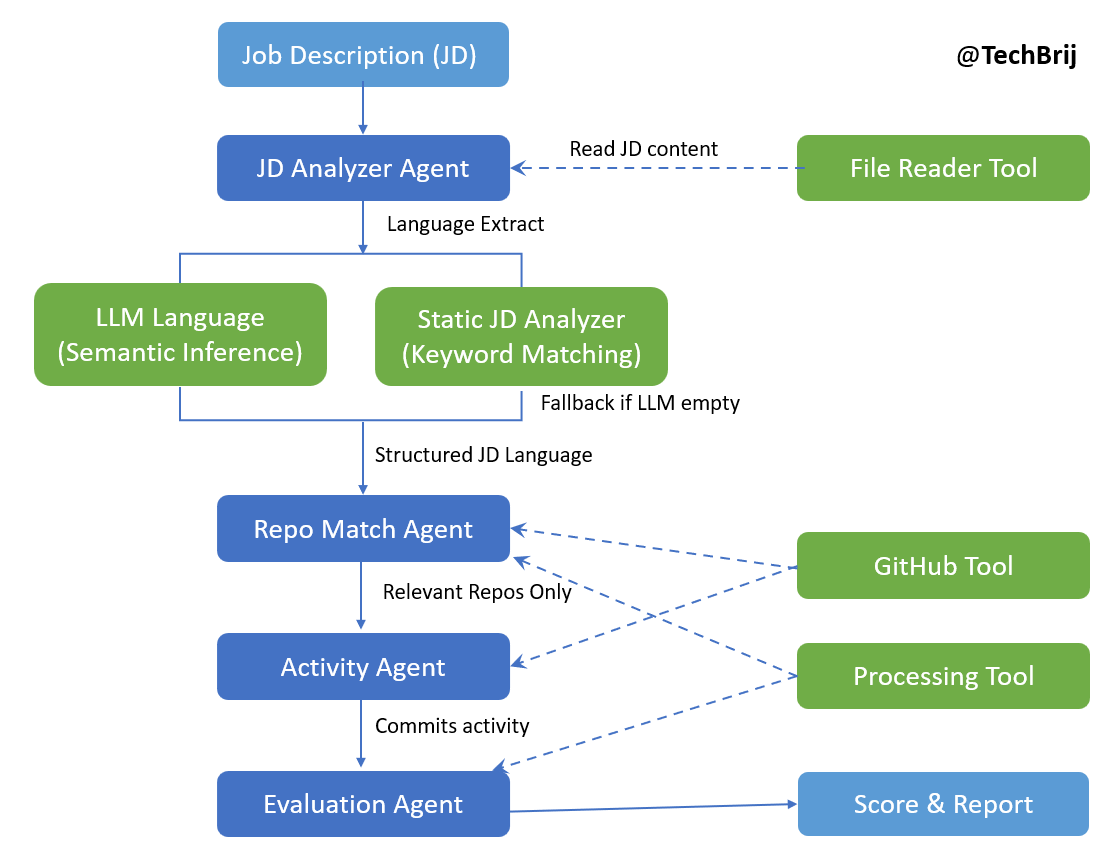

The system is composed of four main agents, each responsible for a distinct stage in the evaluation pipeline:

| Agent Name | Role & Functionality |
|---|---|
| JD Analyzer Agent | Extracts programming languages and skills from the job description using an LLM, with a static analysis fallback. |
| Repo Match Agent | Matches the candidate's GitHub repositories to the required skills. |
| Activity Agent | Analyzes commit activity in the relevant repositories over the past year. |
| Evaluation Agent | Scores the match and generates a human-readable evaluation report. |

The workflow is orchestrated as shown in the image.
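The static analysis fallback of the JD Analyzer Agent is not detailed here. As a rough illustration only, a keyword-based fallback might look like the sketch below. The `KNOWN_LANGUAGES` list and the `extract_languages_static` function are hypothetical names chosen for this example and are not taken from the project code.

```python
import re

# Hypothetical list of languages to look for; the project's real list may differ.
KNOWN_LANGUAGES = ["Python", "JavaScript", "TypeScript", "Java", "C#", "C++", "SQL"]


def extract_languages_static(jd_text: str) -> list:
    """Return the known languages mentioned in the JD text (case-insensitive)."""
    found = []
    for lang in KNOWN_LANGUAGES:
        # Reject matches that are part of a longer token, so that
        # "Java" does not match inside "JavaScript".
        pattern = r"(?<![A-Za-z0-9+#])" + re.escape(lang) + r"(?![A-Za-z0-9+#])"
        if re.search(pattern, jd_text, flags=re.IGNORECASE):
            found.append(lang)
    return found


jd = "Senior AI/Python Engineer with SQL and JavaScript experience"
print(extract_languages_static(jd))
```

A fallback of this kind only recognizes languages it already knows about, which is one reason to prefer the LLM path when it is available.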

The job description is read from a text file (data/job-description.txt).

The final fit score is calculated in four stages, executed sequentially:

Repository Language Match Score: The LLM returns a structured list of JD languages with weights, and the GitHub APIs return repository information. The agent finds the relevant repositories based on the JD languages and sums the weights of all languages found. Value: 0 to 1. If all JD languages are found, the score is 1.

Activity Scoring: The agent counts the commits made to the relevant repositories over the last year. The minimum expected number of commits for the JD is defined in the configuration. If the commit count is below the expectation, the score is the ratio of actual to expected commits; if it is equal to or greater than the expectation, the score is 1.

Advanced Scoring: The agent checks how many of the relevant repositories are starred and use GitHub Issues, as an indication that they are actively managed. Value: 0 to 1.

Overall Score = Repo Match Score × 0.5 + Activity Score × 0.3 + Advanced Score × 0.2
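The scoring stages above can be sketched in a few lines of Python. This is a minimal illustration, not the project's actual code: the function names are hypothetical, the JD weights are assumed to sum to 1, and the advanced score is taken as an input because its exact formula is not specified here.

```python
from typing import Dict, Iterable


def repo_match_score(jd_weights: Dict[str, float], repo_languages: Iterable[str]) -> float:
    """Sum the weights of the JD languages found in the candidate's repositories."""
    found = {lang.lower() for lang in repo_languages}
    score = sum(weight for lang, weight in jd_weights.items() if lang.lower() in found)
    return min(score, 1.0)  # assumes the weights sum to 1; capped defensively


def activity_score(commits_last_year: int, expected_min_commits: int) -> float:
    """Ratio of actual to expected commits, capped at 1."""
    if expected_min_commits <= 0:
        return 1.0  # no expectation configured, so any activity satisfies it
    return min(commits_last_year / expected_min_commits, 1.0)


def overall_score(repo_match: float, activity: float, advanced: float) -> float:
    """Weighted sum with the 0.5 / 0.3 / 0.2 weights given above."""
    return repo_match * 0.5 + activity * 0.3 + advanced * 0.2


# Illustrative values only, not measured results.
match = repo_match_score({"Python": 0.6, "SQL": 0.4}, ["python", "JavaScript"])
activity = activity_score(commits_last_year=10, expected_min_commits=20)
print(round(overall_score(match, activity, advanced=1.0), 2))  # 0.65
```

In this example only Python is matched (0.6), half of the expected commits are present (0.5), and the advanced score is set to 1.0, giving 0.6 × 0.5 + 0.5 × 0.3 + 1.0 × 0.2 = 0.65.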

Result: The final result status is based on the score percentage:

| Score Range | Match Level |
|---|---|
| > 80% | Excellent |
| 50% – 80% | Good |
| < 50% | Partial Match |
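The mapping in the table can be expressed as a small helper. This is a sketch with a hypothetical function name, assuming the score is given as a fraction between 0 and 1 and that a score of exactly 80% falls in the "Good" band, as the table's ranges suggest.

```python
def match_level(score: float) -> str:
    """Map an overall score in [0, 1] to the match level from the table."""
    if score > 0.8:
        return "Excellent"
    if score >= 0.5:
        return "Good"
    return "Partial Match"


for value in (0.85, 0.80, 0.48):
    print(value, match_level(value))
```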

pytest for running tests

Clone the repository

git clone <repo-url>

cd multiagent-aaidc-project2

Create Virtual Environment

python -m venv venv

Activate the virtual environment

On Windows:

venv\Scripts\activate

On macOS/Linux:

source venv/bin/activate

Install dependencies

pip install -r requirements.txt

Configure environment variables

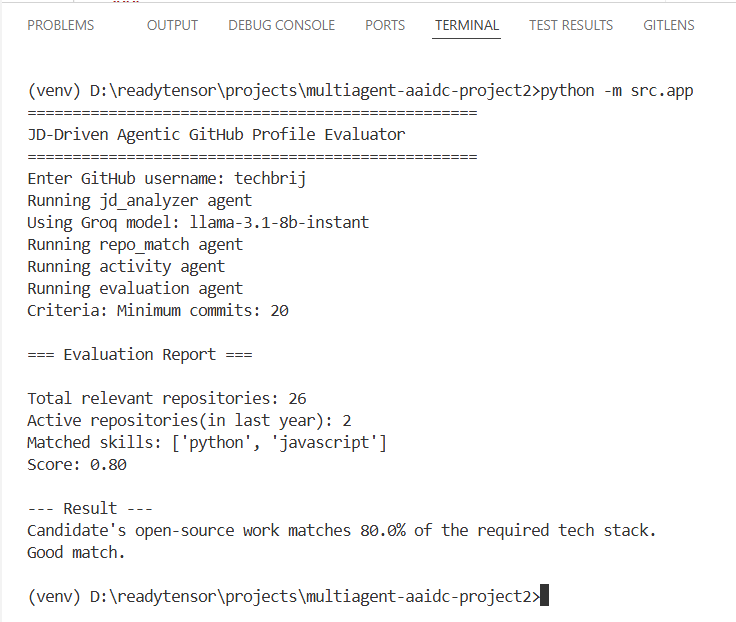

Copy .env.example to .env and set your API keys (Groq) and the other configuration parameters:

    GROQ_API_KEY=your_groq_api_key
    GROQ_MODEL=llama-3.1-8b-instant
    # Activity criteria
    EXPECTED_MIN_COMMITS_IN_LAST_ONE_YEAR=20
    # Maximum number of repositories to fetch
    MAX_REPOS_TO_FETCH=50
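For illustration, the settings shown above could be read in Python roughly as follows. This is a sketch under assumptions: `load_settings` is a hypothetical helper, the fallback defaults simply mirror the example values, and it presumes the `.env` file has already been loaded into the process environment (for example by python-dotenv's `load_dotenv()`).

```python
import os
from typing import Mapping


def load_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Read the project settings from an environment-like mapping."""
    return {
        "groq_api_key": env.get("GROQ_API_KEY", ""),
        "groq_model": env.get("GROQ_MODEL", "llama-3.1-8b-instant"),
        # Numeric settings arrive as strings and are converted explicitly.
        "expected_min_commits": int(env.get("EXPECTED_MIN_COMMITS_IN_LAST_ONE_YEAR", "20")),
        "max_repos_to_fetch": int(env.get("MAX_REPOS_TO_FETCH", "50")),
    }


settings = load_settings({"GROQ_API_KEY": "your_groq_api_key", "MAX_REPOS_TO_FETCH": "10"})
print(settings["max_repos_to_fetch"], settings["expected_min_commits"])
```

Passing the mapping as a parameter keeps the helper easy to test without touching the real environment.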

You can either place your JD file in the data folder and update the path in src/app.py, or overwrite the data/job-description.txt file.

Run the main application:

python -m src.app

Store API keys in .env and never commit it to the repository.

python-dotenv is used for configuration.

In this project, performance depends on several factors, especially API response times, the number of repositories, and LLM latency.

To assess the performance of our workflow, we developed a dedicated test class using the pytest framework.

The complete LangGraph pipeline was tested for different GitHub usernames, one at a time, ensuring that all agents executed.

Tool outputs were manually validated for relevance and completeness.

Application logs were inspected to verify state transitions and error-free execution.

Run the following command to execute the test:

pytest tests/test_performance.py -s

Note: Set the username and JD path according to your requirements.

Infrastructure: Windows 11, 32 GB RAM, 2TB SSD, i7-13650HX

Job Description: data/job-description.txt

| GitHub Username | Total Relevant Repos | Active Repos | Matched Skills | Score | JD Agent (s) | Repo Match Agent (s) | Activity Agent (s) | Evaluation Agent (s) |
|---|---|---|---|---|---|---|---|---|
| techbrij | 28 | 2 | 3 | 0.85 | 3.1009 | 4.2833 | 0.0007 | 0.0000 |
| ytdl-org | 2 | 2 | 1 | 0.80 | 6.0655 | 3.2459 | 0.0010 | 0.0000 |
| psf | 18 | 9 | 2 | 0.92 | 1.1972 | 16.5992 | 0.0016 | 0.0000 |
| httpie | 13 | 1 | 2 | 0.65 | 0.9489 | 1.0527 | 0.0013 | 0.0000 |
| Textualize | 21 | 8 | 2 | 0.91 | 1.3543 | 6.8981 | 0.0011 | 0.0000 |
| StephenCleary | 2 | 1 | 1 | 0.48 | 1.3852 | 1.1011 | 0.0005 | 0.0000 |

The agent columns show the time, in seconds, that each agent took to complete its operations for the given user.

The project uses the JD language requirements and commit count as criteria. Based on these criteria, the accuracy is greater than 80%. More criteria can be added to improve the quality of the results.

The Evaluation Agent only formats and displays the report, so it takes approximately 0 seconds.

Some usernames were run multiple times, and the results varied by 5-10%.
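Per-agent timings like those in the table can be collected with a small wrapper around each agent call. The sketch below is one possible approach and not necessarily how tests/test_performance.py measures time. The `timed` helper and the stand-in agent are hypothetical.

```python
import time
from typing import Any, Callable, Dict, Tuple

State = Dict[str, Any]


def timed(agent: Callable[[State], State], state: State) -> Tuple[State, float]:
    """Run one agent on the state and return its result and elapsed seconds."""
    start = time.perf_counter()
    result = agent(state)
    return result, time.perf_counter() - start


def fake_agent(state: State) -> State:
    """Stand-in agent used only to demonstrate the wrapper."""
    return {**state, "done": True}


result, seconds = timed(fake_agent, {"username": "example-user"})
print(f"{seconds:.4f}s", result)
```

`time.perf_counter()` is a monotonic, high-resolution clock, which makes it suitable for the sub-millisecond durations reported for the later agents.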

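A hiring manager provides a job description for a Senior AI/Python Engineer. The system extracts the required languages and skills from the JD, matches them against the candidate's GitHub repositories, analyzes commit activity over the past year, and generates a scored evaluation report.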

This project is maintained as part of the ReadyTensor Agentic AI Developer Certification Program and is intended as an educational and reference implementation of a multi-agent system.

While using this project, it is recommended to monitor API usage and error rates. The project should be updated whenever its dependencies, the LLM, or the APIs change.

The project is released under the MIT License, allowing free use, modification, and distribution with proper attribution. Full license text is available in the repository.

This project showcases a complete, multi-agent architecture using LangGraph, custom tools, and structured LLM reasoning. It automates the extraction of required skills from JDs, analyzes GitHub repositories for tech stack compatibility and activity, and generates a comprehensive evaluation report. It reflects strong engineering practices in agentic AI design, tool integration, and workflow orchestration.