Abstract

This project presents a Retrieval-Augmented Generation (RAG) system that enables intelligent question-answering over multi-format document collections. By combining semantic vector search with large language models (LLMs), the assistant provides accurate, context-aware responses from uploaded documents in .txt, .pdf, and .docx formats.

Motivation

Conventional chatbots struggle to answer questions about domain-specific or proprietary content. This project addresses that challenge by creating a searchable knowledge base directly from user-provided documents. Organizations and individuals can therefore build custom AI assistants that deliver accurate, source-backed responses tailored to their unique content.

System Architecture

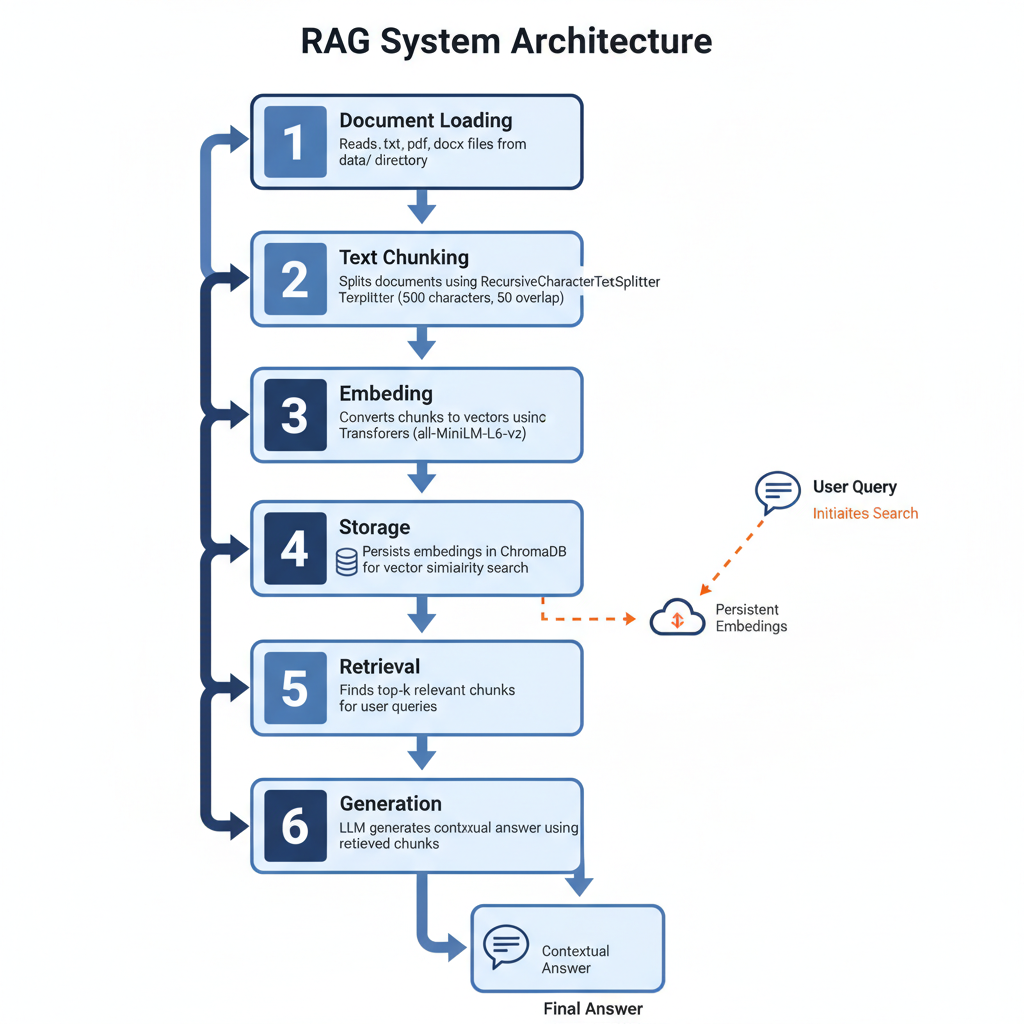

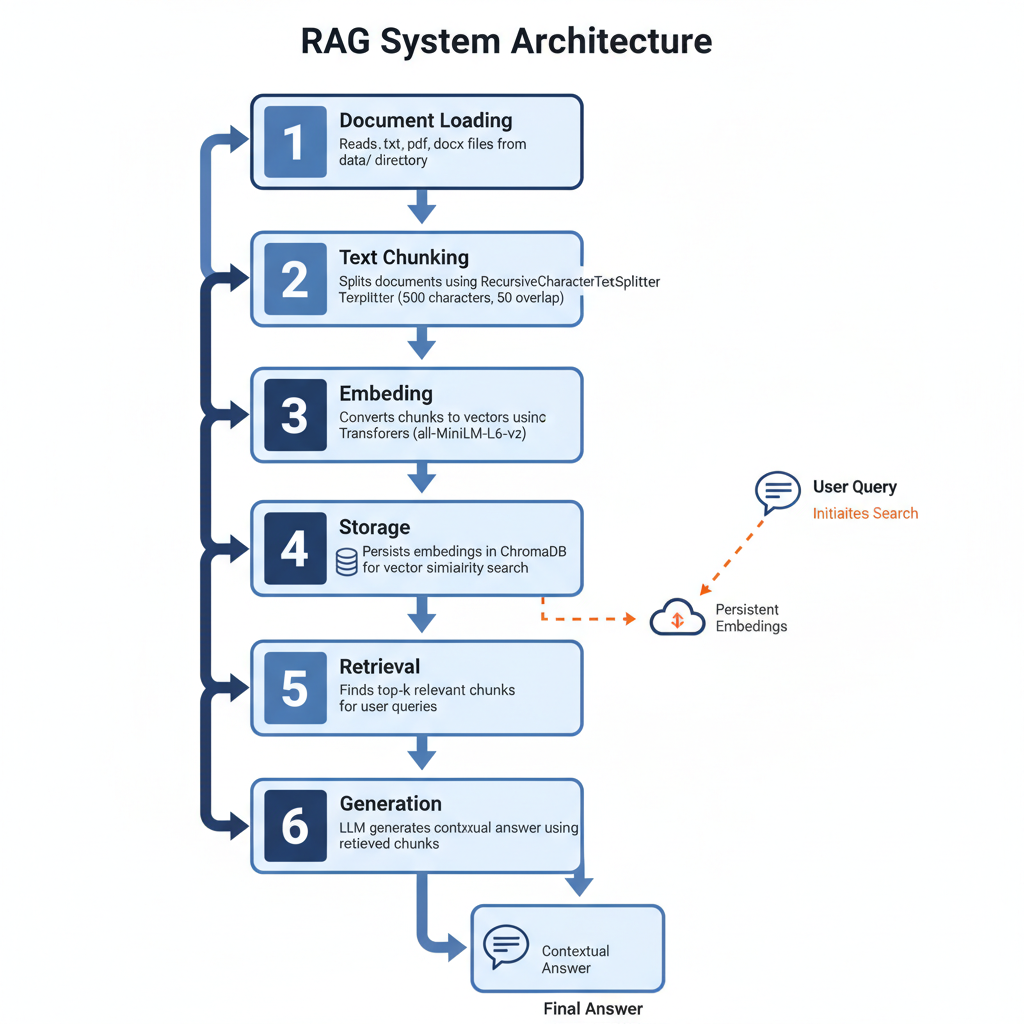

The system implements a complete RAG pipeline:

- Document Loading – Reads .txt, .pdf, and .docx files from the data/ directory

- Text Chunking – Splits documents using RecursiveCharacterTextSplitter (chunk size of 500 characters, 50-character overlap)

- Embedding – Converts chunks to vectors using Sentence Transformers (all-MiniLM-L6-v2)

- Storage – Persists embeddings in ChromaDB for vector similarity search

- Retrieval – Finds the top-k most relevant chunks for each user query

- Generation – The LLM generates a contextual answer from the retrieved chunks
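The chunking step can be illustrated with a short, dependency-free sketch. It is a simplified fixed-width splitter, not LangChain's RecursiveCharacterTextSplitter: it reuses the stated parameters (500-character chunks, 50-character overlap) but cuts at exact character offsets, whereas RecursiveCharacterTextSplitter(chunk_size=500, chunk_overlap=50) first tries to break on separators such as blank lines, newlines, and spaces. The function name chunk_text is hypothetical.

```python
def chunk_text(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> list[str]:
    """Split text into fixed-width windows; consecutive windows share chunk_overlap characters.

    Simplified illustration only: unlike RecursiveCharacterTextSplitter, this does not
    look for paragraph, line, or word boundaries.
    """
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # each window starts this many characters after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks
```

With these settings each window starts 450 characters after the previous one, so text near a cut point appears in both neighbouring chunks and a short passage split by one boundary stays intact in the other chunk.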

Technical Implementation

Core Technologies

- Framework: LangChain for orchestration

- Vector Database: ChromaDB (persistent)

- Embeddings: Sentence Transformers

- LLM Integration: OpenAI GPT, Llama via Groq, Google Gemini

- Document Processing: PyPDF2, python-docx
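Supporting several LLM providers implies some rule for choosing one at runtime. A minimal sketch, assuming the provider is picked by whichever API key is configured: the priority order and the environment variable names below (OPENAI_API_KEY, GROQ_API_KEY, GOOGLE_API_KEY) are assumptions about this project's configuration, not taken from its code.

```python
import os
from typing import Mapping, Optional

# Assumed priority order and key names; the project's real configuration may differ.
PROVIDERS = [
    ("openai", "OPENAI_API_KEY"),
    ("groq", "GROQ_API_KEY"),
    ("google", "GOOGLE_API_KEY"),
]


def select_provider(env: Optional[Mapping[str, str]] = None) -> str:
    """Return the first provider whose API key is set to a non-blank value."""
    env = os.environ if env is None else env  # pass a dict to override, e.g. in tests
    for name, key_var in PROVIDERS:
        if env.get(key_var, "").strip():
            return name
    raise RuntimeError(
        "No LLM API key found; set one of: " + ", ".join(var for _, var in PROVIDERS)
    )
```

In a LangChain application the returned name could then be mapped to a chat model class such as ChatOpenAI, ChatGroq, or ChatGoogleGenerativeAI; failing fast with a clear message also covers the "missing keys" case.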

Key Features

- Question answering across multiple document formats without task-specific fine-tuning

- Persistent vector storage for reproducibility

- Flexible support for multiple LLM providers

- Interactive CLI for experimentation

- Configurable similarity thresholds
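Top-k retrieval combined with a similarity threshold can be sketched with plain cosine similarity over precomputed vectors. This is an illustration under stated assumptions: the defaults k=3 and threshold=0.3 are hypothetical, and in the project the vectors would come from all-MiniLM-L6-v2 and be searched by ChromaDB. ChromaDB reports distances rather than similarities (squared L2 by default), so a real threshold has to be expressed in whichever metric the collection is configured with.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(
    query_vec: Sequence[float],
    chunk_vecs: Sequence[Sequence[float]],
    chunks: Sequence[str],
    k: int = 3,             # hypothetical default
    threshold: float = 0.3,  # hypothetical default
) -> list[tuple[float, str]]:
    """Return up to k (score, chunk) pairs scoring at least `threshold`, best first."""
    scored = [(cosine_similarity(query_vec, v), c) for v, c in zip(chunk_vecs, chunks)]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [(score, chunk) for score, chunk in scored[:k] if score >= threshold]
```

Applying the threshold after the top-k cut means a vague query can return fewer than k chunks, or none at all, instead of padding the prompt with weakly related text.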

Evaluation & Results

Test Corpus: 11 documents spanning AI, quantum computing, climate science, and biotechnology.

Sample Query

- Query: “What is artificial intelligence?”

- Response: An accurate, context-aware definition synthesized from passages in the AI documents

- Retrieval Time: Sub-second

- Relevance: High semantic similarity score

System Demonstrations

- Multi-document synthesis for comprehensive answers

- Domain-specific knowledge retrieval

- Clear handling of out-of-scope queries

- Optimized for single-turn interactions
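One common way to handle out-of-scope queries is to skip generation when retrieval returns nothing and to instruct the model to answer only from the supplied context. A minimal sketch, assuming that approach: the prompt wording, the fallback message, and the names build_prompt and answer are hypothetical, and llm stands in for any provider client that maps a prompt string to a reply.

```python
from typing import Callable, Sequence

# Hypothetical fallback message for queries the corpus cannot answer.
FALLBACK_ANSWER = "I don't have information about that in the provided documents."


def build_prompt(question: str, context_chunks: Sequence[str]) -> str:
    """Number the retrieved chunks and ask the model to stay within them."""
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(context_chunks, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say you don't know.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def answer(question: str, context_chunks: Sequence[str], llm: Callable[[str], str]) -> str:
    """Single-turn answer: no chat history is carried between calls."""
    if not context_chunks:
        return FALLBACK_ANSWER  # nothing passed retrieval, so the LLM is never called
    return llm(build_prompt(question, context_chunks))
```

Numbering the chunks also makes it straightforward to ask the model to cite which passage supports each part of its answer.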

Implementation Highlights

Development Practices

- Organized modular codebase (app.py for the pipeline, vectordb.py for database logic)

- Secure API key management with .env and .env.example files

- Error handling for common issues (e.g., missing keys, unsupported files)

- Documentation with system diagrams for clarity
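Error handling for unsupported files can be sketched as a loader that dispatches on file extension and skips anything it cannot read. This is an illustrative sketch, not the code in app.py: load_document and load_directory are hypothetical names. The PyPDF2 (PdfReader, pages, extract_text) and python-docx (docx.Document, paragraphs) calls are those libraries' public APIs; they are imported lazily so that a .txt-only setup does not need them installed.

```python
from __future__ import annotations

from pathlib import Path

SUPPORTED_EXTENSIONS = {".txt", ".pdf", ".docx"}


def load_document(path: str | Path) -> str:
    """Return the plain text of one supported file, or raise ValueError."""
    path = Path(path)
    suffix = path.suffix.lower()
    if suffix not in SUPPORTED_EXTENSIONS:
        raise ValueError(f"Unsupported file type {suffix!r} for {path.name}")
    if suffix == ".txt":
        return path.read_text(encoding="utf-8")
    if suffix == ".pdf":
        from PyPDF2 import PdfReader  # lazy import: only needed for PDFs
        reader = PdfReader(str(path))
        # extract_text() may return an empty string for image-only pages
        return "\n".join(page.extract_text() or "" for page in reader.pages)
    import docx  # provided by the python-docx package
    document = docx.Document(str(path))
    return "\n".join(paragraph.text for paragraph in document.paragraphs)


def load_directory(directory: str | Path = "data") -> dict[str, str]:
    """Load every supported file in a directory, reporting and skipping the rest."""
    texts: dict[str, str] = {}
    for path in sorted(Path(directory).iterdir()):
        if not path.is_file():
            continue
        try:
            texts[path.name] = load_document(path)
        except ValueError as err:
            print(f"Skipping: {err}")  # user feedback instead of aborting ingestion
    return texts
```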

Practical Considerations

- Handles formats commonly used in organizations

- Straightforward setup and reproducibility

- User feedback during document processing

- Multi-provider LLM support to handle cost/availability constraints

Scalability Features

- Efficient indexing for large corpora

- Configurable chunking parameters

- Persistent vector database suitable for deployment
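Configurable chunking and storage parameters can be gathered into a single validated configuration object. A minimal sketch, assuming a dataclass-based setup: only chunk_size=500 and chunk_overlap=50 come from this write-up; the class name RAGConfig and the top_k, similarity_threshold, and persist_directory defaults are hypothetical.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RAGConfig:
    chunk_size: int = 500                 # characters per chunk (stated in this write-up)
    chunk_overlap: int = 50               # characters shared by neighbouring chunks (stated)
    top_k: int = 3                        # hypothetical default
    similarity_threshold: float = 0.3     # hypothetical default, cosine similarity
    persist_directory: str = "chroma_db"  # hypothetical storage path

    def __post_init__(self) -> None:
        # Reject settings that would break chunking or retrieval before any indexing starts.
        if self.chunk_size <= 0:
            raise ValueError("chunk_size must be positive")
        if not 0 <= self.chunk_overlap < self.chunk_size:
            raise ValueError("chunk_overlap must be in [0, chunk_size)")
        if self.top_k < 1:
            raise ValueError("top_k must be at least 1")
        if not -1.0 <= self.similarity_threshold <= 1.0:
            raise ValueError("similarity_threshold must lie in [-1, 1]")


# Example: larger chunks for long-form documents; replace() re-runs validation.
long_form = replace(RAGConfig(), chunk_size=1000, chunk_overlap=100)
```

Note that changing chunk_size or chunk_overlap after documents have been indexed means re-embedding the corpus, since the persisted vectors correspond to the old chunk boundaries.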

Limitations & Future Work

Current Limitations

- Optimized for single-turn queries; multi-turn conversational memory is planned.

- Performance is strongest with specific, context-rich queries; future work includes query expansion.

- Responses are limited to the uploaded corpus; planned enhancements include hybrid web + local retrieval.

- Document ingestion is manual; automation for continuous ingestion is under development.

Future Enhancements

- Conversation history and context management

- Automated document ingestion pipelines

- Advanced query reformulation techniques

- Enterprise system integration (e.g., SharePoint, Google Drive)

- Multi-modal retrieval (e.g., images, charts, tables)

Conclusion

This project demonstrates a complete RAG pipeline implementation aligned with Module 1 of the Agentic AI Developer Certification Program. By combining robust document processing, semantic search, and multi-provider LLM integration, the assistant lays a solid foundation for domain-specific AI solutions. It balances technical rigor with practical usability, paving the way for future extensions into conversational, automated, and multi-modal AI assistants.