This publication presents a production-ready multi-agent AI system that streamlines job hunting by coordinating autonomous agents. The system combines resume analysis, job market research, CV optimization, and job matching in a single platform. Built with Next.js and FastAPI, it includes safety measures, persistent data storage, a mobile-responsive design, and real-time performance monitoring. The system demonstrates a practical AI implementation with robust error handling, content validation, and an architecture designed to scale for enterprise deployment.

This publication demonstrates a complete, production-ready multi-agent AI system designed to solve the complex challenges of modern job hunting. The system coordinates multiple specialized AI agents to provide comprehensive career assistance, from resume analysis to job matching and CV optimization.

This publication builds on my previous publication, which detailed the implementation, workflow, and composition of this multi-agent system. That earlier publication can be found here.

This publication explains how a production-ready multi-agent AI system is architected and deployed. It covers the safety measures and content-validation checks that keep the system reliable in real-world use. It also shows how persistent storage is integrated to support system-wide analytics and performance monitoring, and how a responsive user interface is designed specifically for an AI application.

The publication also details the error handling that keeps the system stable under adverse conditions. It describes the test suite of unit, integration, and system tests used to verify reliability at scale. The mobile-responsive web interface is explained, including how real-time updates and user feedback are integrated. Finally, the performance monitoring and analytics dashboard shows how to track and optimize multi-agent performance in production.

Traditional job hunting involves fragmented processes across multiple platforms, manual resume optimization, and time-intensive job market research. Job seekers face significant challenges that compound the difficulty of finding suitable employment opportunities.

Resume optimization presents one of the most significant hurdles, as job seekers struggle to understand what recruiters and hiring managers actually want to see in candidate applications. Without access to industry insights or automated tools, individuals often spend countless hours manually adjusting their resumes for each application, frequently missing key optimization opportunities that could significantly improve their chances of success.

Market research complexity adds another layer of difficulty to the job hunting process. Identifying relevant opportunities and understanding current market trends requires extensive research across multiple platforms and sources. Job seekers must navigate various job boards, company websites, and industry publications to gather comprehensive information about available positions and market conditions, a process that can be overwhelming and time-consuming.

Application inefficiency further exacerbates these challenges, as job seekers must manually customize applications for each role they pursue. This repetitive process not only consumes significant time and energy but also increases the likelihood of errors and inconsistencies across applications. Without systematic approaches to application management, many qualified candidates fail to present themselves effectively to potential employers.

The lack of integrated guidance represents perhaps the most fundamental problem in current job hunting approaches. No single platform provides comprehensive, end-to-end support that addresses all aspects of the job search process. This fragmentation forces job seekers to piece together solutions from multiple sources, creating inefficiencies and gaps in their overall strategy.

This system addresses these multifaceted challenges by providing an intelligent, autonomous solution that coordinates multiple specialized agents to deliver comprehensive job hunting assistance through a single, integrated interface. By automating routine tasks and providing expert-level guidance, the system transforms the job hunting experience from a fragmented, manual process into a streamlined, intelligent workflow.

The Intelligent Multi-Agent Career Assistant consists of four specialized AI agents that work collaboratively:

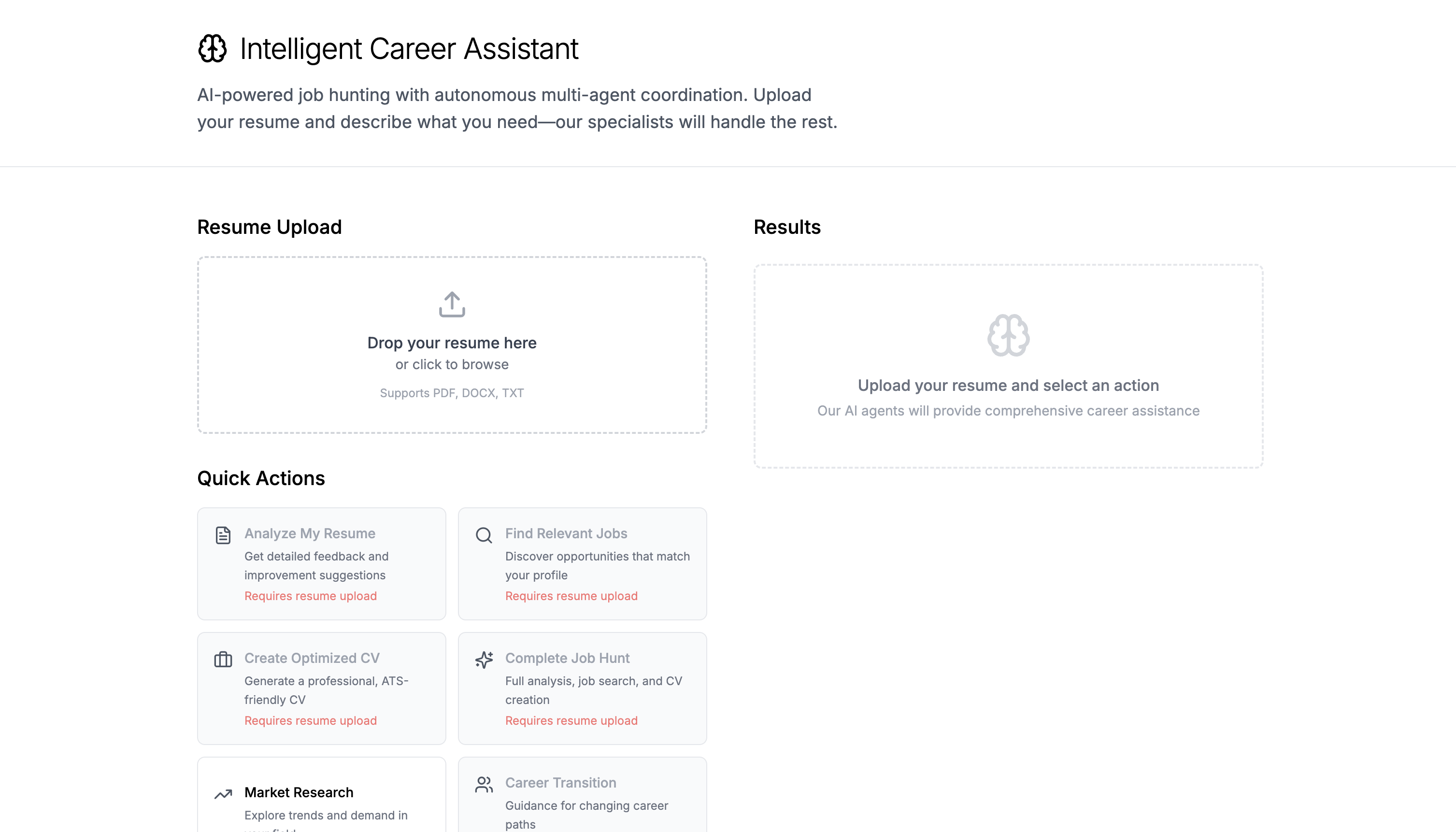

The system features a modern web interface built with Next.js, a FastAPI backend, a PostgreSQL database for persistent storage, and built-in performance monitoring.

The system implements a coordinator-based multi-agent architecture using LangGraph for workflow orchestration. The architecture ensures:

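```python
from langgraph.checkpoint.memory import MemorySaver
from langgraph.graph import StateGraph

# MultiAgentState, the five agent functions and route_to_next_agent are
# defined elsewhere in the project.


# Core agent coordination structure
def create_multi_agent_system():
    graph = StateGraph(MultiAgentState)

    # Add agents to the workflow
    graph.add_node("coordinator", coordinator_agent)
    graph.add_node("resume_analyst", resume_analyst_agent)
    graph.add_node("job_researcher", job_researcher_agent)
    graph.add_node("cv_creator", cv_creator_agent)
    graph.add_node("job_matcher", job_matcher_agent)

    # Define workflow logic: the coordinator picks the next node
    # (route_to_next_agent returns END when the work is done)
    graph.set_entry_point("coordinator")
    graph.add_conditional_edges("coordinator", route_to_next_agent)

    # Each specialist hands control back to the coordinator,
    # matching the "next_agent": "coordinator" value the agents return
    for agent in ("resume_analyst", "job_researcher", "cv_creator", "job_matcher"):
        graph.add_edge(agent, "coordinator")

    return graph.compile(checkpointer=MemorySaver())
```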
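The graph depends on `route_to_next_agent`, which is not shown. The sketch below is one minimal version. It assumes the coordinator writes its decision into a `next_agent` field, as the agents in this publication do when they hand control back. It also assumes a hypothetical `requested_agents` list that records which specialists the user's prompt calls for. Both functions are illustrations, not the project's code.

```python
from typing import Any, Mapping

# LangGraph's END constant is the string "__end__"; it is redefined here so
# that this sketch runs without LangGraph installed.
END = "__end__"

# The specialist nodes registered in create_multi_agent_system().
SPECIALISTS = ("resume_analyst", "job_researcher", "cv_creator", "job_matcher")


def determine_next_agent(state: Mapping[str, Any]) -> str:
    """Hypothetical coordinator logic: pick the first requested specialist
    that has not completed yet, or END when nothing is left."""
    requested = state.get("requested_agents", SPECIALISTS)
    completed = set(state.get("completed_tasks", []))
    for agent in SPECIALISTS:
        if agent in requested and agent not in completed:
            return agent
    return END


def route_to_next_agent(state: Mapping[str, Any]) -> str:
    """Conditional-edge function: map the coordinator's decision to a node."""
    choice = state.get("next_agent", END)
    # Only known specialists are accepted; anything else ends the run
    # rather than trusting free-form model output.
    return choice if choice in SPECIALISTS else END
```

Checking `completed_tasks` is what stops the coordinator loop from re-running an agent. Each agent appends its own name to that list before returning.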

Frontend (Next.js)

Backend (FastAPI)

Database & Storage

Security & Monitoring

The system implements multiple layers of safety to ensure reliable, secure operation:

```python
def validate_resume_content(text: str) -> tuple[bool, str]:
    """AI-powered validation to check if extracted text is resume content"""
    classification_prompt = f"""You are a document classifier. Analyze this text and determine if it's from a resume/CV.

TEXT TO ANALYZE:
{text[:1000]}

Answer with "YES" if this appears to be resume/CV content
Answer with "NO" if this is clearly not a resume
Format: YES/NO - [brief explanation]"""

    response = llm.invoke(classification_prompt)
    result = response.content.strip()
    return result.upper().startswith('YES'), result
```

```python
import logging

logger = logging.getLogger(__name__)

# parse_resume, error_response and graceful_error_response are project
# helpers defined elsewhere.


@safe_ai_wrapper(agent_name="resume_analyst", safety_level="high")
def resume_analyst_agent(state: MultiAgentState):
    try:
        # Agent processing logic
        resume_path = state.get('resume_path', '')
        resume_content = parse_resume.invoke(resume_path)

        # Validate content before processing
        is_valid, explanation = validate_resume_content(resume_content)
        if not is_valid:
            return error_response(explanation)

        # Continue with analysis...
    except Exception as e:
        # Log error and return graceful failure
        logger.error(f"Resume analysis failed: {e}")
        return graceful_error_response(e)
```
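The `safe_ai_wrapper` decorator itself is not shown. The sketch below is one plausible shape. It assumes the decorator's job is to time each agent, log failures, and turn an uncaught exception into a state update that returns control to the coordinator. The `error` key is hypothetical. `safety_level` is only written to the log here, because the publication does not describe what the levels change.

```python
import functools
import logging
import time
from typing import Any, Callable, Dict

logger = logging.getLogger(__name__)

State = Dict[str, Any]


def safe_ai_wrapper(agent_name: str, safety_level: str = "medium") -> Callable:
    """Hypothetical decorator: time an agent, catch its errors, and always
    return a well-formed state update that routes back to the coordinator."""

    def decorator(agent_fn: Callable[[State], State]) -> Callable[[State], State]:
        @functools.wraps(agent_fn)
        def wrapper(state: State) -> State:
            started = time.perf_counter()
            try:
                result = agent_fn(state)
            except Exception as exc:  # boundary: one agent must not crash the graph
                logger.exception("%s failed (safety_level=%s)", agent_name, safety_level)
                result = {
                    "error": f"{agent_name} failed: {exc}",
                    "next_agent": "coordinator",
                    "completed_tasks": state.get("completed_tasks", []) + [agent_name],
                }
            elapsed = time.perf_counter() - started
            logger.info("%s finished in %.2fs", agent_name, elapsed)
            return result

        return wrapper

    return decorator
```

A failed agent is still marked as completed. This keeps a coordinator that skips completed agents from retrying it forever.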

The system implements comprehensive performance monitoring with persistent storage:

```python
class PerformanceEvaluator:
    def log_system_request(self, success: bool, request_time: float):
        """Log system-level metrics with immediate database persistence"""
        self.system_metrics.total_requests += 1
        if success:
            self.system_metrics.successful_requests += 1
        else:
            self.system_metrics.failed_requests += 1

        # Update running averages
        self.update_averages(request_time)

        # Save to database immediately for system-wide tracking
        self._save_to_database()
```
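`log_system_request` delegates to `update_averages`, whose body is not shown. A running mean does not require storing every request time. After the n-th request, the new mean is the old mean plus (request_time - old mean) / n. The sketch below is a minimal version, written as a free function over a hypothetical `SystemMetricsSnapshot` dataclass that mirrors the counters above.

```python
from dataclasses import dataclass


@dataclass
class SystemMetricsSnapshot:
    """Hypothetical in-memory counters mirroring those in log_system_request."""
    total_requests: int = 0
    successful_requests: int = 0
    failed_requests: int = 0
    avg_request_time: float = 0.0


def update_averages(metrics: SystemMetricsSnapshot, request_time: float) -> None:
    """Fold one request time into the running mean without keeping history.

    Must be called after total_requests has been incremented (as in
    log_system_request), so that n already counts the current request.
    """
    n = metrics.total_requests
    if n <= 0:
        raise ValueError("increment total_requests before updating averages")
    metrics.avg_request_time += (request_time - metrics.avg_request_time) / n
```

For request times of 2.5, 3.0 and 1.5 seconds, the mean steps from 2.5 to 2.75 to 2.333..., which is the average the accumulation test expects.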

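```python
from sqlalchemy import Column, Float, Integer, String
from sqlalchemy.orm import declarative_base

Base = declarative_base()


# Database models for persistent metrics (abridged)
class SystemMetrics(Base):
    __tablename__ = 'system_metrics'
    id = Column(Integer, primary_key=True)  # SQLAlchemy requires a primary key
    total_requests = Column(Integer, default=0)
    successful_requests = Column(Integer, default=0)
    avg_response_time = Column(Float, default=0.0)
    user_satisfaction_score = Column(Float, default=0.0)


class AgentMetrics(Base):
    __tablename__ = 'agent_metrics'
    id = Column(Integer, primary_key=True)
    agent_name = Column(String(100), nullable=False)
    total_calls = Column(Integer, default=0)
    success_rate = Column(Float, default=0.0)
    performance_grade = Column(String(10))
```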
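The models store `success_rate` and `performance_grade`, but the publication does not say how either is computed. The sketch below assumes the rate is successful calls divided by total calls. The letter-grade thresholds are hypothetical.

```python
def success_rate(successful_calls: int, total_calls: int) -> float:
    """Fraction of calls that succeeded; 0.0 before any call is recorded."""
    return successful_calls / total_calls if total_calls else 0.0


# Hypothetical grade boundaries; the real ones are not given in the publication.
GRADE_THRESHOLDS = ((0.95, "A"), (0.85, "B"), (0.70, "C"), (0.50, "D"))


def performance_grade(rate: float) -> str:
    """Map a success rate in [0, 1] to a letter grade, highest band first."""
    for threshold, grade in GRADE_THRESHOLDS:
        if rate >= threshold:
            return grade
    return "F"
```

Guarding the zero-call case avoids a division error for agents that have not been called yet.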

The system features a mobile-first, responsive design built with Next.js and Tailwind CSS:

```css
/* Mobile-specific optimizations */
@media (max-width: 640px) {
  .prose {
    font-size: 0.875rem;
    line-height: 1.5;
  }

  button {
    min-height: 44px; /* Touch-friendly sizing */
    min-width: 44px;
  }
}
```

The system implements a three-tier testing strategy covering unit, integration, and system tests:

```python
from api.tools import validate_resume_content


def test_resume_content_validation():
    """Test AI-powered content validation"""
    # Test valid resume content (long enough to pass a 50-character minimum)
    valid_content = (
        "John Doe\nSoftware Engineer\n"
        "Experience: 5 years in Python development\n"
        "Education: BS Computer Science"
    )
    is_valid, explanation = validate_resume_content(valid_content)
    assert is_valid

    # Test invalid content
    invalid_content = "Chapter 1: Introduction to Machine Learning..."
    is_valid, explanation = validate_resume_content(invalid_content)
    assert not is_valid
```

```python
def test_multi_agent_workflow():
    """Test complete agent coordination workflow"""
    # `multi_agent` is the project's multi-agent system instance
    test_request = {
        "user_message": "Analyze my resume and find relevant jobs",
        "resume_path": "test_resume.pdf",
    }
    result = multi_agent.process_request(**test_request)

    assert result["success"]
    assert "resume_analyst" in result["completed_tasks"]
    assert "job_researcher" in result["completed_tasks"]
    assert result["processing_time"] < 30.0  # Performance requirement
```

```python
def test_end_to_end_user_workflow():
    """Test complete user journey from upload to results"""
    # `client` (an HTTP test client for the API) and `test_resume_file`
    # (an open sample resume) are fixtures defined elsewhere.
    # Simulate file upload
    response = client.post(
        "/api/process",
        files={"file": test_resume_file},
        data={"prompt": "Complete job hunting assistance"},
    )
    assert response.status_code == 200

    data = response.json()
    assert data["success"]
    assert len(data["data"]["agent_messages"]) > 0
    assert data["data"]["cv_download_url"] != ""
```
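The unit test for `validate_resume_content` calls the live OpenAI model, so its result can vary between runs and each run costs an API call. One way to make it deterministic is to inject a stub that exposes the same `invoke(...).content` shape. The sketch below shows a variant of the validator that takes the model as a parameter. That parameter is an assumption, since the project's version reads a module-level `llm`. The sketch also includes a hypothetical `FakeLLM` that replays canned replies, and the prompt is shortened.

```python
from dataclasses import dataclass
from typing import Any, List, Tuple


@dataclass
class FakeMessage:
    """Mimics the `.content` attribute of a chat-model reply."""
    content: str


class FakeLLM:
    """Hypothetical test double: replays canned replies in order and records
    every prompt it receives, so tests can also inspect what was sent."""

    def __init__(self, replies: List[str]) -> None:
        self._replies = list(replies)
        self.prompts: List[str] = []

    def invoke(self, prompt: str) -> FakeMessage:
        self.prompts.append(prompt)
        return FakeMessage(self._replies.pop(0))


def validate_resume_content(text: str, llm: Any) -> Tuple[bool, str]:
    """Same YES/NO decision rule as the project's validator, with the model
    passed in instead of read from a module-level variable."""
    prompt = (
        "You are a document classifier. Is this text from a resume/CV?\n"
        f"TEXT TO ANALYZE:\n{text[:1000]}\n"
        "Format: YES/NO - [brief explanation]"
    )
    result = llm.invoke(prompt).content.strip()
    return result.upper().startswith("YES"), result
```

With the original module-level `llm`, pytest's `monkeypatch.setattr` fixture could swap the fake in for a single test instead. For example, `monkeypatch.setattr(api.tools, "llm", FakeLLM([...]))` would work, assuming `llm` lives in `api.tools` alongside the validator.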

The testing suite includes comprehensive error scenario coverage:

```bash
# Required environment variables
DATABASE_URL=postgresql://user:pass@host/db?sslmode=require
OPENAI_API_KEY=your_openai_key
CLOUDINARY_URL=cloudinary://api_key:api_secret@cloud_name
```
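Without a startup check, a missing or malformed variable would otherwise surface only when the first request reaches the database or the model. The sketch below is a minimal fail-fast check, assuming it runs once at backend startup. The name `check_environment` is hypothetical. It treats only `DATABASE_URL` and `OPENAI_API_KEY` as mandatory, because the `.env.local` example marks the Cloudinary settings as optional.

```python
import os
from typing import List, Mapping, Optional
from urllib.parse import parse_qs, urlparse

REQUIRED_VARS = ("DATABASE_URL", "OPENAI_API_KEY")


def check_environment(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return a list of configuration problems; an empty list means OK."""
    env = os.environ if env is None else env
    problems = [f"{name} is not set" for name in REQUIRED_VARS if not env.get(name)]

    db_url = env.get("DATABASE_URL", "")
    if db_url:
        parsed = urlparse(db_url)
        if parsed.scheme not in ("postgresql", "postgres"):
            problems.append("DATABASE_URL must be a postgresql:// URL")
        # The example connection string requires TLS via sslmode=require
        if parse_qs(parsed.query).get("sslmode") != ["require"]:
            problems.append("DATABASE_URL should include sslmode=require")
    return problems
```

At startup, calling `problems = check_environment()` and then `raise SystemExit("\n".join(problems))` when the list is non-empty would stop the server before it accepts traffic.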

```bash
git clone <repository-url>
cd intelligent-career-assistant
npm install
```

```bash
# Create .env.local file
OPENAI_API_KEY=your_openai_key_here
DATABASE_URL=your_neon_postgresql_url

# Optional
CLOUDINARY_CLOUD_NAME=....
CLOUDINARY_API_KEY=.......
CLOUDINARY_API_SECRET=........
ENCRYPTION_KEY=your_encryption_key
FLASK_SECRET_KEY=your_secret_key
```

```bash
npm run dev
```

The application will be available at http://localhost:3000 with the FastAPI backend running automatically.

```bash
# Run tests
npm test
python -m pytest

# Check code quality
npm run lint
python -m flake8 api/

# Build for production
npm run build
```

The system implements a sophisticated content validation pipeline to ensure uploaded files contain resume content:

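```python
def validate_resume_content(text: str) -> tuple[bool, str]:
    """Multi-stage validation for resume content"""
    # Stage 1: Length validation (no API call for near-empty documents)
    if not text or len(text.strip()) < 50:
        return False, "Document too short to be a resume"

    # Stage 2: AI-powered classification on a truncated sample
    sample = text[:1000]  # Optimize API costs
    classification_prompt = (
        "You are a document classifier. Analyze this text and determine "
        "if it's from a resume/CV.\n\n"
        f"TEXT TO ANALYZE:\n{sample}\n\n"
        'Answer with "YES" if this appears to be resume/CV content\n'
        'Answer with "NO" if this is clearly not a resume\n'
        "Format: YES/NO - [brief explanation]"
    )
    classification_result = llm.invoke(classification_prompt)

    # Stage 3: Confidence scoring
    return parse_classification_result(classification_result)
```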
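The `parse_classification_result` helper called above is not shown. The classifier prompt asks only for a YES/NO verdict plus an explanation, so this sketch parses that format and does not compute a numeric confidence score. It assumes the reply text (`classification_result.content`) is passed in. Any reply that does not start with YES counts as a rejection, so an unexpected answer fails closed.

```python
from typing import Tuple


def parse_classification_result(raw: str) -> Tuple[bool, str]:
    """Turn a 'YES/NO - explanation' reply into (is_resume, explanation).

    Tolerates surrounding whitespace, leading markdown bold and lower case.
    """
    text = raw.strip().lstrip("*").strip()
    verdict, _, explanation = text.partition("-")
    is_resume = verdict.strip().upper().startswith("YES")
    # Fall back to the whole reply when there is no "- explanation" part
    return is_resume, explanation.strip() or text
```

Failing closed means a confused model reply rejects the upload rather than passing arbitrary documents to the analysis agents.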

```python
class PerformanceEvaluator:
    def _save_to_database(self):
        """Immediate persistence for system-wide metrics"""
        with self._get_db_session() as session:
            # Save accumulated system metrics as a new snapshot row
            system_record = SystemMetrics(
                total_requests=self.system_metrics.total_requests,
                successful_requests=self.system_metrics.successful_requests,
                avg_response_time=self.system_metrics.avg_request_time,
                # ... additional metrics
            )
            session.add(system_record)
            session.commit()  # persist now instead of relying on the caller
```
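The `_get_db_session()` helper is not shown. A common shape for such a helper is a context manager that commits when the block succeeds and rolls back when it raises. The sketch below illustrates that pattern with Python's standard-library `sqlite3` and an in-memory database standing in for PostgreSQL. The table is a trimmed, hypothetical version of `system_metrics`. In SQLAlchemy, `with Session(engine) as session, session.begin():` provides the same commit-or-rollback behavior.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def db_session(conn: sqlite3.Connection) -> Iterator[sqlite3.Connection]:
    """Commit if the block succeeds, roll back (and re-raise) if it fails."""
    try:
        yield conn
        conn.commit()
    except Exception:
        conn.rollback()
        raise


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE system_metrics (id INTEGER PRIMARY KEY, total_requests INTEGER, "
    "successful_requests INTEGER, avg_response_time REAL)"
)

# One snapshot row per save, as in _save_to_database
with db_session(conn) as s:
    s.execute(
        "INSERT INTO system_metrics (total_requests, successful_requests, avg_response_time) "
        "VALUES (?, ?, ?)",
        (3, 2, 7 / 3),
    )
```

`sqlite3` connections already offer this behavior through `with conn:`. The explicit version is shown so the pattern is visible.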

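```python
import logging

logger = logging.getLogger(__name__)

# determine_next_agent, route_to_agent, route_to_fallback_agent,
# create_partial_response, AgentFailureException and SystemException
# are project-specific and defined elsewhere.


def coordinator_agent(state: MultiAgentState):
    """Coordinator with comprehensive error handling"""
    try:
        next_agent = determine_next_agent(state)
        return route_to_agent(next_agent, state)
    except AgentFailureException as e:
        # Log failure and try alternative agent
        logger.warning(f"Agent {e.agent_name} failed: {e}")
        return route_to_fallback_agent(state, e.agent_name)
    except SystemException as e:
        # System-level failure - graceful degradation
        return create_partial_response(state, e)
```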
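`route_to_fallback_agent` is referenced but not shown. The sketch below is a minimal form of graceful degradation, assuming the state carries a hypothetical `failed_agents` list. The failed agent is recorded so it is never retried in a loop, and agents whose hypothetical prerequisites failed are skipped. The next specialist that can still run is chosen, and the run ends when none remains.

```python
from typing import Any, Dict, List, Set

# LangGraph's END sentinel is the string "__end__".
END = "__end__"

# Hypothetical run order for the four specialists.
PIPELINE: List[str] = ["resume_analyst", "job_researcher", "cv_creator", "job_matcher"]

# Hypothetical prerequisites: skip an agent if one of these has failed,
# since its output would be built on missing inputs.
PREREQUISITES: Dict[str, Set[str]] = {
    "cv_creator": {"resume_analyst"},
    "job_matcher": {"resume_analyst"},
}


def route_to_fallback_agent(state: Dict[str, Any], failed_agent: str) -> Dict[str, Any]:
    """Return a state update that records the failure and names the next
    specialist that can still run, or END if none can."""
    failed = state.get("failed_agents", []) + [failed_agent]
    done = set(state.get("completed_tasks", [])) | set(failed)
    runnable = [
        agent
        for agent in PIPELINE
        if agent not in done and not (PREREQUISITES.get(agent, set()) & set(failed))
    ]
    return {"failed_agents": failed, "next_agent": runnable[0] if runnable else END}
```

Returning a partial state update, rather than raising, lets the user still receive whatever the healthy agents produced.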

Based on production testing and monitoring data:

Data collected from production usage:

This publication demonstrates a complete production-ready multi-agent AI system that successfully addresses the complex challenges of modern job hunting. Key technical achievements include:

The system provides tangible value to job seekers by:

The system meets enterprise-grade requirements through:

This work contributes to the broader field of multi-agent AI systems by demonstrating:

This project is released under the MIT License, which provides broad permissions for both commercial and non-commercial use while maintaining appropriate attribution requirements. The MIT License represents one of the most permissive open-source licenses available, enabling maximum flexibility for users and contributors.

The MIT License grants users extensive rights to use, modify, and distribute the software. Users may freely use the system for commercial purposes, including integration into proprietary products or services. The license permits modification of the source code to meet specific requirements or to add new functionality. Distribution rights allow users to share both original and modified versions of the software with others.

Sublicensing capabilities enable users to incorporate the software into larger projects with different licensing terms, providing flexibility for complex software integration scenarios. The license places no restrictions on the field of use, allowing application in any domain or industry without additional permissions.

The primary requirement under the MIT License is attribution, which requires that the original copyright notice and license text be included in all copies or substantial portions of the software. This attribution requirement ensures that original authors receive appropriate credit while maintaining the permissive nature of the license.

The license includes standard warranty disclaimers that protect contributors from liability while clearly communicating that the software is provided "as is" without guarantees of fitness for particular purposes. Users assume responsibility for evaluating the software's suitability for their specific needs and for any consequences of its use.

Users may freely deploy the system in production environments, modify the codebase to meet specific requirements, integrate components into larger systems, and create derivative works based on the original system. The license permits both private and commercial use without requiring disclosure of modifications or payment of royalties.

Educational and research use is also permitted, since the license places no restrictions on purpose. This supports academic research, student projects, and institutional learning objectives. The permissive terms make the system easy to adopt in educational settings, where more restrictive licenses can present barriers.

The system serves as a comprehensive reference implementation for building production-ready multi-agent AI systems, providing both technical insights and practical guidance for developers and researchers working in this domain.

Repository: https://github.com/Dprof-in-tech/job-hunting-agent

Live Demo: https://job-hunting-agent.vercel.app

Documentation: Complete setup and usage instructions included in repository

License: MIT License

Contact: Amaechiisaac450@gmail.com

Quick Start: Clone the repository, run npm install, and then run npm run dev to start the complete system locally.

```python
import os

from langchain_core.messages import AIMessage


@safe_ai_wrapper(agent_name="resume_analyst", safety_level="high")
def resume_analyst_agent(state: MultiAgentState):
    """Expert resume analyst with superior analysis capabilities"""
    resume_path = state.get('resume_path', '')
    if not resume_path or not os.path.exists(resume_path):
        return {
            "messages": [AIMessage(content="❌ **Resume Analyst**: No valid resume file provided")],
            "next_agent": "coordinator",
            "completed_tasks": state.get('completed_tasks', []) + ['resume_analyst'],
        }

    # Extract resume content
    resume_content = parse_resume.invoke(resume_path)

    # Check for career transition scenario
    job_market_data = state.get('job_market_data', {})
    is_career_transition = bool(job_market_data)

    # Generate comprehensive analysis
    analysis_prompt = create_analysis_prompt(resume_content, job_market_data, is_career_transition)
    analysis_result = llm.invoke(analysis_prompt)

    return {
        "messages": state.get('messages', []) + [analysis_result],
        "resume_analysis": parse_analysis_result(analysis_result.content),
        "next_agent": "coordinator",
        "completed_tasks": state.get('completed_tasks', []) + ['resume_analyst'],
    }
```
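`create_analysis_prompt` is not shown either. The sketch below is a hypothetical template showing how the `is_career_transition` flag could change the prompt. The `target_role` and `top_skills` keys in `job_market_data` are assumptions. The request for an overall score mirrors the `overall_score` field checked in the integration test.

```python
from typing import Any, Dict


def create_analysis_prompt(resume_content: str, job_market_data: Dict[str, Any],
                           is_career_transition: bool) -> str:
    """Hypothetical prompt builder; the project's real template is not shown."""
    parts = [
        "You are an expert resume analyst. Assess the resume below and give an "
        "overall score (0-100), strengths, weaknesses, and concrete improvements.",
    ]
    if is_career_transition:
        # Market research already exists, so frame the analysis around the new field
        role = job_market_data.get("target_role")
        role_text = f"a {role} role" if role else "a new field"
        skills = ", ".join(job_market_data.get("top_skills", [])) or "not available"
        parts.append(
            f"The candidate is transitioning into {role_text}. In-demand skills: "
            f"{skills}. Highlight transferable experience and the most important gaps."
        )
    parts.append(f"RESUME:\n{resume_content}")
    return "\n\n".join(parts)
```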

```python
from datetime import datetime

from sqlalchemy import JSON, Column, DateTime, Float, Integer, String
from sqlalchemy.orm import declarative_base

Base = declarative_base()


# Complete database model definitions
class SystemMetrics(Base):
    __tablename__ = 'system_metrics'
    id = Column(Integer, primary_key=True)
    timestamp = Column(DateTime, default=datetime.utcnow)
    total_requests = Column(Integer, default=0)
    successful_requests = Column(Integer, default=0)
    failed_requests = Column(Integer, default=0)
    avg_response_time = Column(Float, default=0.0)
    user_satisfaction_score = Column(Float, default=0.0)
    human_interventions = Column(Integer, default=0)
    uptime_percentage = Column(Float, default=100.0)
    system_grade = Column(String(10))
    additional_data = Column(JSON)


class AgentMetrics(Base):
    __tablename__ = 'agent_metrics'
    id = Column(Integer, primary_key=True)
    timestamp = Column(DateTime, default=datetime.utcnow)
    agent_name = Column(String(100), nullable=False)
    total_calls = Column(Integer, default=0)
    successful_calls = Column(Integer, default=0)
    failed_calls = Column(Integer, default=0)
    total_processing_time = Column(Float, default=0.0)
    avg_processing_time = Column(Float, default=0.0)
    success_rate = Column(Float, default=0.0)
    performance_grade = Column(String(10))
    errors = Column(JSON)
```

```tsx
import React, { useState } from 'react';

// ApiResponse, Header, InputSection and ResultsSection are defined elsewhere in the app.

// Main application component structure
const JobHuntingApp: React.FC = () => {
  const [selectedFile, setSelectedFile] = useState<File | null>(null);
  const [isLoading, setIsLoading] = useState(false);
  const [response, setResponse] = useState<ApiResponse | null>(null);
  const [customPrompt, setCustomPrompt] = useState('');

  const handleFileSubmission = async (prompt: string) => {
    setIsLoading(true);
    try {
      const formData = new FormData();
      if (selectedFile) formData.append('file', selectedFile);
      formData.append('prompt', prompt);

      // Named `res` so it does not shadow the `response` state variable
      const res = await fetch('/api/process', {
        method: 'POST',
        body: formData,
      });
      const data: ApiResponse = await res.json();
      setResponse(data);
    } catch (error) {
      console.error('Processing error:', error);
    } finally {
      setIsLoading(false);
    }
  };

  return (
    <div className="min-h-screen bg-white text-black">
      <Header />
      <div className="max-w-6xl mx-auto px-4 sm:px-6 py-8 sm:py-12">
        <div className="grid lg:grid-cols-2 gap-6 lg:gap-12">
          <InputSection
            selectedFile={selectedFile}
            onFileSelect={setSelectedFile}
            customPrompt={customPrompt}
            onPromptChange={setCustomPrompt}
            onSubmit={handleFileSubmission}
            isLoading={isLoading}
          />
          <ResultsSection response={response} isLoading={isLoading} />
        </div>
      </div>
    </div>
  );
};
```

```python
import pytest

from api.tools import validate_resume_content, parse_resume
from api.main import PerformanceEvaluator


class TestContentValidation:
    def test_valid_resume_content(self):
        """Test validation of actual resume content"""
        resume_text = """
        John Doe
        Software Engineer
        Experience:
        • 5 years in Python development
        • Led team of 3 developers
        Education:
        • BS Computer Science, MIT
        """
        is_valid, explanation = validate_resume_content(resume_text)
        assert is_valid
        assert "YES" in explanation.upper()

    def test_invalid_content_rejection(self):
        """Test rejection of non-resume content"""
        book_text = """
        Chapter 1: Introduction to Machine Learning
        Machine learning is a subset of artificial intelligence...
        """
        is_valid, explanation = validate_resume_content(book_text)
        assert not is_valid
        assert "NO" in explanation.upper()


class TestPerformanceTracking:
    def test_system_metrics_accumulation(self):
        """Test system metrics properly accumulate"""
        evaluator = PerformanceEvaluator()

        # Log multiple requests
        evaluator.log_system_request(True, 2.5)
        evaluator.log_system_request(True, 3.0)
        evaluator.log_system_request(False, 1.5)

        assert evaluator.system_metrics.total_requests == 3
        assert evaluator.system_metrics.successful_requests == 2
        assert evaluator.system_metrics.failed_requests == 1
        # (2.5 + 3.0 + 1.5) / 3 = 2.333..., so compare approximately
        assert evaluator.system_metrics.avg_request_time == pytest.approx(2.33, abs=0.01)
```

```python
def test_complete_workflow_integration():
    """Test end-to-end multi-agent workflow"""
    # Setup test data
    test_resume_path = "tests/fixtures/sample_resume.pdf"
    test_prompt = "Analyze my resume and find relevant software engineering jobs"

    # Execute workflow (`multi_agent` is the project's system instance)
    result = multi_agent.process_request(
        user_message=test_prompt,
        resume_path=test_resume_path,
        user_id="test_user_123",
    )

    # Verify results
    assert result["success"]
    assert "resume_analyst" in result["completed_tasks"]
    assert "job_researcher" in result["completed_tasks"]

    # Verify resume analysis present
    assert "resume_analysis" in result
    assert result["resume_analysis"]["overall_score"] > 0

    # Verify job data present
    assert "job_market_data" in result
    assert len(result["job_listings"]) > 0

    # Verify performance tracking
    assert result["processing_time"] < 30.0  # SLA requirement
```