Swathi Radhakrishnan

AI Developer

GitHub Repository

This project presents a Retrieval-Augmented Generation (RAG)-based intelligent assistant for answering Frequently Asked Questions (FAQs) on an e-commerce platform. Built with LangChain, a LLaMA model served through Groq’s API, the Chroma vector database, and HuggingFace embeddings, the assistant delivers fast, accurate, and context-aware responses to customer queries. Grounded in real-world FAQ data from The Good Guys e-commerce platform, the system improves the customer support experience by letting customers ask about business-specific information in natural language.
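The retrieval half of this architecture (embed the FAQ entries, store the vectors, return the entries nearest to a query) can be illustrated without any external services. This is a minimal, dependency-free sketch, not the project's code. A bag-of-words count vector stands in for the HuggingFace embedding model, and the hypothetical `InMemoryVectorStore` class stands in for Chroma; its method names only echo LangChain's `add_texts`/`similarity_search`. The FAQ strings are invented placeholders, not The Good Guys data.

```python
import math
import re
from collections import Counter

# Hypothetical FAQ entries for illustration only; the real system indexes
# The Good Guys FAQ data, which is not reproduced here.
FAQS = [
    "How do I track my order? Use the tracking link in your confirmation email.",
    "What are the delivery options? Standard and express delivery are available.",
    "What is the returns policy? Items can be returned with proof of purchase.",
]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical stand-in for Chroma: keeps (text, vector) pairs in a list."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add_texts(self, texts: list[str]) -> None:
        # Embed each entry once at indexing time.
        for text in texts:
            self.entries.append((text, embed(text)))

    def similarity_search(self, query: str, k: int = 2) -> list[str]:
        # Rank every stored entry by cosine similarity to the query vector.
        query_vec = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(query_vec, e[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


store = InMemoryVectorStore()
store.add_texts(FAQS)
print(store.similarity_search("how can I track my order", k=1)[0])
```

Replacing `embed` with a sentence-embedding model is what lets a real system match paraphrases such as "where is my parcel" to an order-tracking entry, which plain word overlap cannot do.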

Traditional FAQ pages often require users to search manually through lists of static content. This project improves on that experience with a conversational interface that returns semantic, context-aware responses using Retrieval-Augmented Generation (RAG). By integrating LangChain and fast LLM inference on Groq with a vector search backend, it lets users resolve queries about orders, deliveries, and store policies through natural, conversational interaction.
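Once relevant FAQ passages have been retrieved, the "augmented generation" step amounts to placing them in the prompt alongside the user's question so the LLM answers from that context. The sketch below assumes a simple "stuff everything into one prompt" strategy; `build_rag_prompt`, the instruction wording, and the `(question, answer)` history format are hypothetical, and the actual call to the Groq-hosted model is omitted.

```python
from typing import Optional

# Hypothetical grounding instruction; the project's real prompt is not shown in the source.
SYSTEM_INSTRUCTION = (
    "You are a customer support assistant for an e-commerce store. "
    "Answer using only the FAQ context below. If the context does not "
    "contain the answer, say you don't know."
)


def build_rag_prompt(
    question: str,
    passages: list[str],
    history: Optional[list[tuple[str, str]]] = None,
) -> str:
    """Assemble instruction, numbered retrieved passages, prior turns, then the new question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    turns = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in (history or []))
    parts = [SYSTEM_INSTRUCTION, "Context:\n" + context]
    if turns:
        # Prior turns let the model resolve follow-ups such as "what about express?"
        parts.append("Conversation so far:\n" + turns)
    parts.append(f"User: {question}\nAssistant:")
    return "\n\n".join(parts)


prompt = build_rag_prompt(
    "Do you deliver on weekends?",
    ["Standard and express delivery are available."],  # hypothetical retrieved passage
    history=[("Hi", "Hello! How can I help?")],
)
print(prompt)
```

Keeping the instruction to answer only from the supplied context is a common way to limit answers that are not grounded in the FAQ data; the trade-off is that questions outside the indexed FAQs get an "I don't know" rather than a guess.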

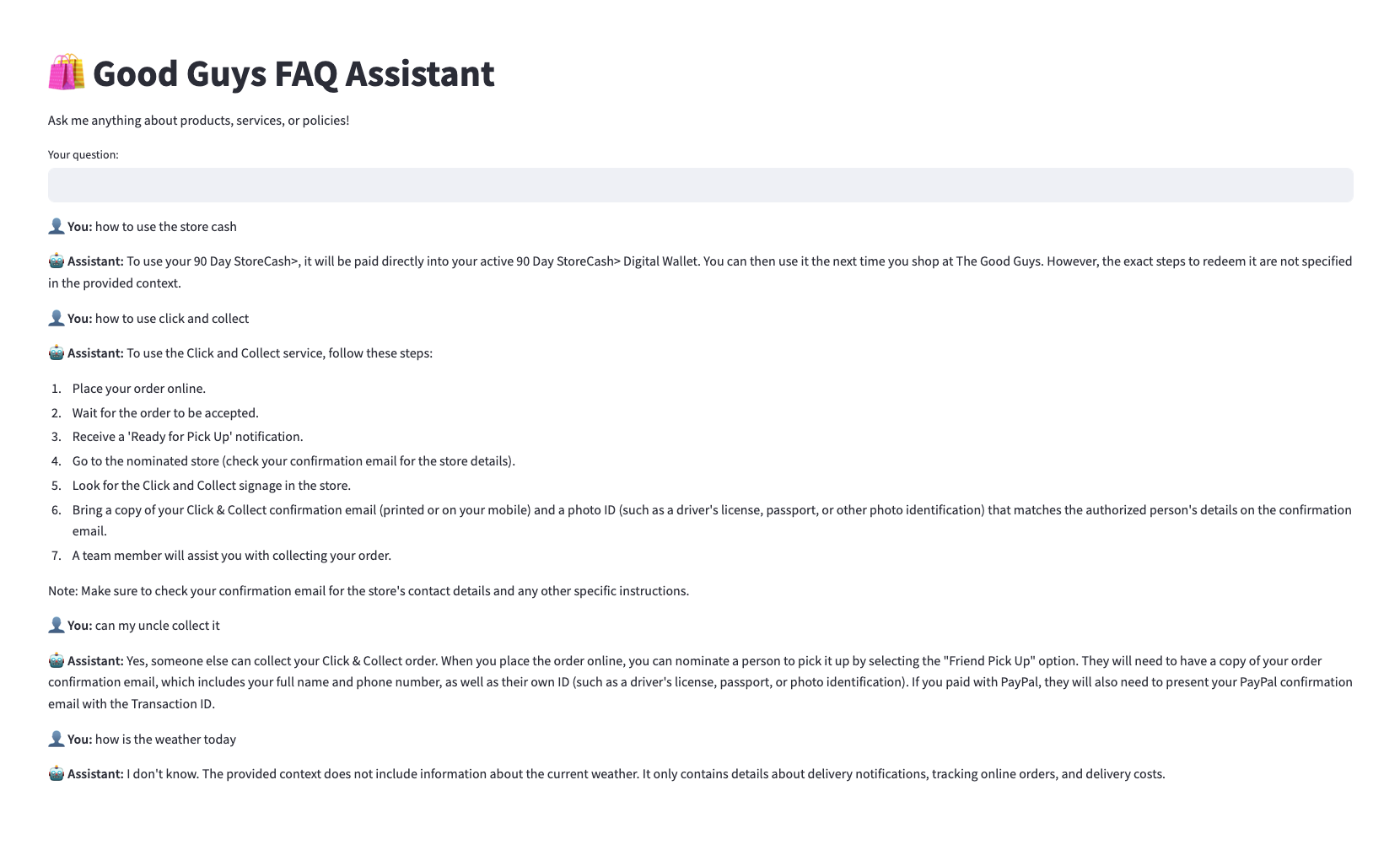

The Streamlit interface can be started with `streamlit run src/app.py`.

Accuracy: The assistant reliably retrieved the correct context and generated answers with high relevance to the user query.

Latency: Response generation with the LLaMA model served on Groq was fast, significantly outperforming other cloud-hosted LLM services in speed.

User Experience: The natural conversational interface made the assistant easy to use even for non-technical users.

The E-commerce FAQ Assistant demonstrates a practical use case for combining vector search with LLMs to improve customer experience in online retail. The system performs well across a range of common questions, showcasing the power of Retrieval-Augmented Generation in domain-specific applications.

Future Enhancements

This work serves as a strong foundation for deploying conversational AI agents tailored to specific business knowledge bases.