About Advanced Domain Specific AI Expert Maker

Advanced Domain Specific AI Expert Maker (DualForce) aims to boost productivity by creating personalized AI expert mentors for anyone, in any domain. By pairing each user with a virtual expert twin, so that one person effectively works as two (one physical, one virtual), it aims to double productivity without increasing headcount. From developers coding in different languages to HR professionals and executives, DualForce creates role-specific expert twins by vectorizing authoritative resources.

Identifying the Gaps in Existing Solutions

Customer productivity tools powered by AI have made strides in recent years, with solutions like AI-driven chatbots, knowledge bases, and virtual assistants. However, these solutions often lack personalization, domain-specific expertise, and the ability to provide contextualized guidance tailored to a user's exact needs. Traditional AI mentors rely on general-purpose knowledge bases, making them inadequate for specialized roles.

Limitations of Existing Approaches

One-size-fits-all solutions – Many existing AI productivity tools offer generic assistance that lacks domain specificity.

Lack of contextual awareness – AI systems often fail to retain conversation history, making responses disconnected from prior interactions.

Dependence on pre-trained models – Without retrieval-augmented generation (RAG), these systems provide responses based solely on training data, increasing hallucinations and reducing accuracy.

Inefficiency in skill enhancement – Current AI mentorship tools do not efficiently leverage authoritative domain-specific resources for personalized learning.

How DualForce Addresses These Gaps

DualForce fills these gaps by leveraging domain-specific authoritative resources, integrating RAG for accurate responses, and maintaining conversation memory to deliver context-aware mentorship.

Working of DualForce

DualForce uses Generative AI with RAG (Retrieval-Augmented Generation) technology to ground responses in authoritative sources, reducing hallucinations and increasing accuracy. With conversation memory, it provides contextually relevant assistance based on previous interactions.

How does it work?

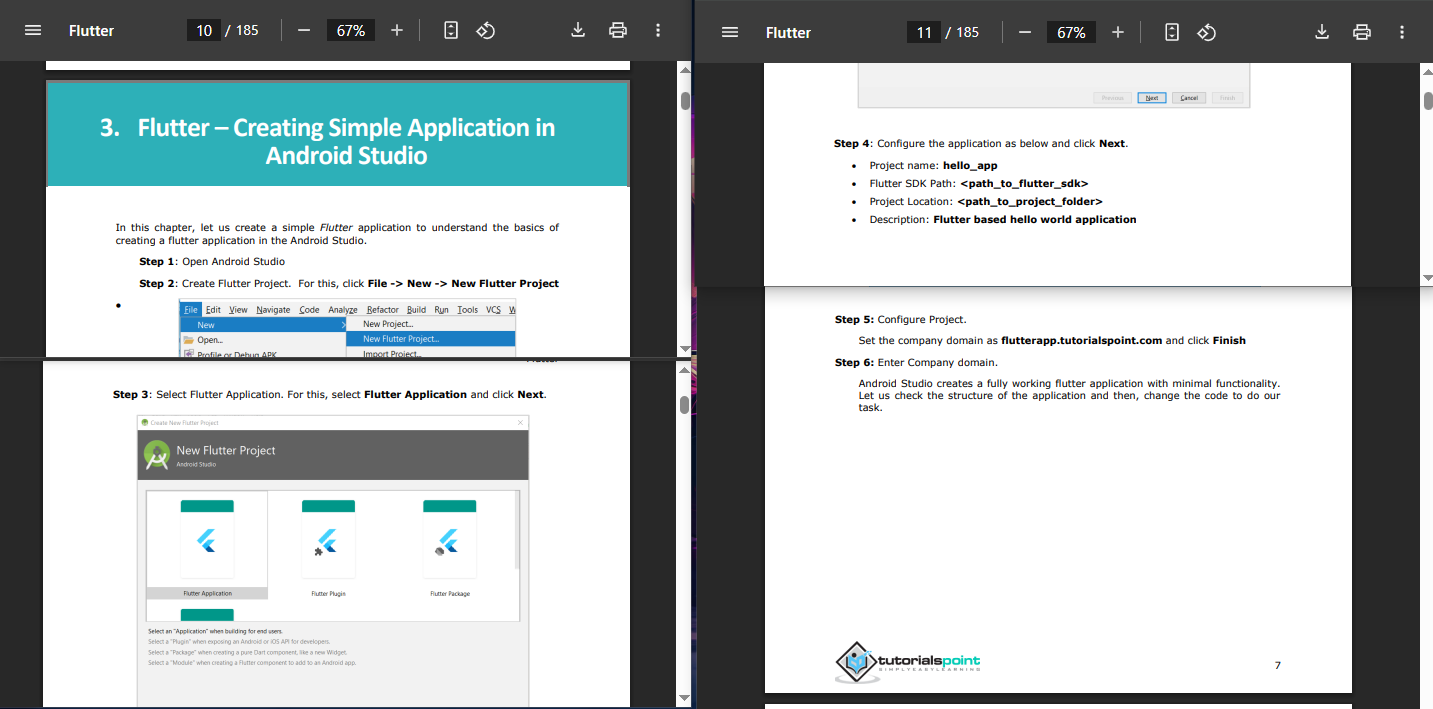

- The user uploads guiding documents. For example, a backend developer can add a PDF of a backend development guide.

- The system creates embeddings of the documents.

- The expert is created.

- The user can then ask anything about the domain.

- The system converts the user query into an embedding vector and matches it against the stored document embeddings to find relevant text.

- The retrieved text is passed to an LLM of choice, along with the user's conversation history if any, forming a RAG layer on top of that LLM.

- The LLM then gives a more accurate response by combining its own knowledge with the retrieved knowledge, solving the user's problem and acting as an expert.
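The retrieval steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DualForce's actual implementation: `embed` is a toy bag-of-words stand-in for a real embedding model, a plain list stands in for the vector database, and the names `embed`, `cosine`, and `retrieve` are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query, best match first."""
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:k]


# Illustrative document chunks (made up for this example).
chunks = [
    "REST endpoints should return appropriate HTTP status codes.",
    "Database indexes speed up read queries at the cost of slower writes.",
    "Use connection pooling to reuse database connections.",
]
top = retrieve("How do indexes affect database queries?", chunks, k=1)
```

In a real pipeline the retrieved chunks would then be passed to the LLM together with the question; here `top` simply holds the best-matching passage, which is the chunk about database indexes.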

Examples

Problem it solves in the workplace

Consider a typical development team with 4 junior developers and 1 senior developer.

Traditionally, all junior developers direct their queries to the senior developer, creating a bottleneck that reduces overall team productivity and delays project timelines. DualForce solves this by providing each junior developer with their own AI mentor, trained on authoritative resources specific to their tech stack.

Problem it solves for students

Consider a computer science student struggling with an Operating Systems course who uploads their university textbook PDF into DualForce. Whenever they have a doubt, they ask the AI mentor, which provides contextually relevant, accurate explanations based on the textbook content. Over time, the AI understands the student’s common pain points and provides tailored learning suggestions.

Understanding a product manual or product documentation

A person working on configuring industrial machinery uploads the product’s technical manual to DualForce. Instead of manually searching through hundreds of pages, they simply ask the AI mentor questions like, "How do I calibrate sensor X?" or "What does error code Y mean?" DualForce instantly provides step-by-step guidance, improving efficiency and reducing downtime.

More use cases

DualForce can serve as an expert in many other scenarios where authoritative documents are available.

Dataset Sources & Collection

DualForce does not rely on a predefined dataset. Instead, it exclusively utilizes user-provided documents, ensuring that the AI mentor is tailored to the specific needs of each individual employee. These documents may include:

Technical documentation (e.g., API references, programming guides).

Company-specific policies and manuals.

Product manuals that are too complex to understand easily.

Research papers and authoritative books.

Dataset Description

Since DualForce dynamically builds expertise based on uploaded resources, there is no fixed dataset. Instead, the system processes textual data from user-uploaded documents, including:

Structured content: Well-formatted PDFs, manuals, and books.

Semi-structured content: Reports with tables, diagrams, and references.

Unstructured content: Free-form text, meeting transcripts, and notes.

Dataset Processing Methodology

User Uploads Documents: Employees upload relevant resources based on their roles.

Preprocessing:

Extract text from PDFs and other formats.

Remove formatting inconsistencies and redundant content.

Embedding Generation:

Convert textual data into vector embeddings using an AI-powered embedding model.

Storage & Indexing:

Store embeddings in a vector database for efficient retrieval.

Real-Time Query Processing:

Match user queries with relevant sections from the stored embeddings.

Retrieve relevant information and pass it through a Large Language Model (LLM) for contextualized responses.
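The preprocessing step is usually followed by splitting the cleaned text into chunks before embedding. The sketch below shows one common approach, overlapping word windows. The chunk size, the overlap, and the function names `clean_text` and `chunk_words` are assumptions for illustration; the source does not specify how DualForce chunks documents.

```python
import re


def clean_text(raw: str) -> str:
    """Collapse runs of whitespace (including line breaks) into single spaces."""
    return re.sub(r"\s+", " ", raw).strip()


def chunk_words(text: str, size: int = 50, overlap: int = 10) -> list[str]:
    """Split text into windows of `size` words; consecutive windows share `overlap` words."""
    if not 0 <= overlap < size:
        raise ValueError("overlap must be >= 0 and smaller than size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reaches the end of the text
    return chunks


# Example: ten words, windows of four words with one word of overlap.
sample = clean_text("w0 w1  w2\nw3 w4 w5\tw6 w7 w8 w9")
windows = chunk_words(sample, size=4, overlap=1)
```

Overlap keeps a sentence that straddles a chunk boundary retrievable from at least one chunk, at the cost of storing some text twice.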

Source Credibility

Since the effectiveness of DualForce depends on user-uploaded resources, source credibility is critical. Best practices for ensuring reliable AI-generated responses include:

Encouraging the use of authoritative sources such as well-established books, manuals, and peer-reviewed papers.

Allowing administrators to curate and validate uploaded documents to prevent misinformation.

Regularly updating documents to reflect evolving industry standards.

Methodology Explanation

Technical Overview

DualForce employs Generative AI with RAG technology to create role-specific AI mentors tailored to employees’ professional needs.

Key Steps in Methodology

User Uploads Resources – Users upload domain-specific PDFs, books, and documentation relevant to their use case.

Data Processing & Embedding Creation – The system processes these documents, creating embeddings for efficient knowledge retrieval.

Expert AI Mentor Generation – AI mentors are created using these embeddings, ensuring domain-specific expertise.

Query Handling – When users ask questions, the system:

Converts queries into embeddings.

Matches the query with relevant knowledge from stored embeddings.

Passes matched data to an LLM with RAG for response generation.

Maintains query history for contextualized responses.

Response Generation – The AI mentor provides accurate, role-specific guidance based on both the retrieved and pre-trained knowledge.
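The query-handling steps above end with a prompt that combines retrieved passages, recent conversation history, and the new question. A minimal sketch of that assembly follows. The prompt wording, the `Turn` type, the `build_prompt` function, and the `max_turns` limit are all hypothetical; the actual prompt format used by DualForce is not described in the source.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    """One past exchange between the user and the mentor."""
    question: str
    answer: str


def build_prompt(question: str, retrieved: list[str], history: list[Turn],
                 max_turns: int = 3) -> str:
    """Combine retrieved passages, recent history, and the new question into one prompt."""
    parts = [
        "Answer using the context below. If the context is insufficient, say so.",
        "",
        "Context:",
    ]
    # Number each passage so the model (and a reader) can refer back to it.
    parts += [f"[{i}] {passage}" for i, passage in enumerate(retrieved, start=1)]
    # Keep only the most recent turns to bound the prompt length.
    recent = history[-max_turns:] if max_turns > 0 else []
    if recent:
        parts += ["", "Conversation so far:"]
        for turn in recent:
            parts += [f"User: {turn.question}", f"Mentor: {turn.answer}"]
    parts += ["", f"User: {question}", "Mentor:"]
    return "\n".join(parts)


prompt = build_prompt(
    "How is it prevented?",
    ["A deadlock occurs when processes wait on each other indefinitely."],
    [Turn("What is a deadlock?", "A state where processes block each other.")],
)
```

Including the earlier turn is what lets a follow-up such as "How is it prevented?" be resolved against the right topic.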

Evaluation Framework

Key Metrics for Success

To measure the effectiveness of DualForce, the following evaluation criteria are used:

Response Accuracy – Percentage of responses aligning with authoritative resources.

Task Efficiency Improvement – Reduction in time taken to resolve queries.

Error Reduction – Frequency of incorrect or misleading responses.
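The three metrics above can be computed from a simple log of evaluated queries. The sketch below assumes each record carries a judgment of whether the response aligned with the source material plus resolution times with and without the mentor. The `EvalRecord` type, its fields, and the sample numbers are illustrative assumptions, not measured DualForce results.

```python
from dataclasses import dataclass


@dataclass
class EvalRecord:
    """One evaluated query; `aligned` comes from a human or automated check against the source."""
    aligned: bool
    baseline_seconds: float   # time to resolve without the mentor
    assisted_seconds: float   # time to resolve with the mentor


def response_accuracy(records: list[EvalRecord]) -> float:
    """Percentage of responses judged to align with the authoritative resources."""
    if not records:
        raise ValueError("no records to evaluate")
    return 100.0 * sum(r.aligned for r in records) / len(records)


def error_rate(records: list[EvalRecord]) -> float:
    """Percentage of responses judged incorrect or misleading."""
    return 100.0 - response_accuracy(records)


def time_reduction(records: list[EvalRecord]) -> float:
    """Percentage reduction in total resolution time relative to the baseline."""
    baseline = sum(r.baseline_seconds for r in records)
    assisted = sum(r.assisted_seconds for r in records)
    return 100.0 * (baseline - assisted) / baseline


# Made-up sample data, for illustration only.
records = [
    EvalRecord(True, 100, 75),
    EvalRecord(True, 200, 150),
    EvalRecord(False, 100, 75),
    EvalRecord(True, 100, 75),
]
accuracy = response_accuracy(records)
errors = error_rate(records)
saved = time_reduction(records)
```

With this sample data, three of four responses are aligned and total time falls from 500 to 375 seconds, so the functions return 75.0, 25.0, and 25.0 respectively.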

Comparison Baseline

DualForce is benchmarked against:

General AI assistants (e.g., ChatGPT, traditional chatbots).

Existing workplace AI solutions.

Implementation Details

Technical Specifications

Embedding Model: Google's text-embedding models.

LLM Backend: GPT-based models with fine-tuning capabilities.

Vector Database: MongoDB vector database for efficient similarity searches.

Retrieval System: RAG pipeline for enhanced accuracy.

Frontend: Built on Flutter for cross-platform support across Android, iOS, Web, Windows, Linux, and macOS.

Backend: Built on Spring Boot for scalability and complex solutions.

Cloud Infrastructure: Deployed on Google Cloud.

Who Can Use DualForce / Different Use Cases

- Organizations with any number of employees can use DualForce to maximize each individual employee's performance, typically boosting it by at least 50%.

- Early-stage startups, where a single employee typically works across multiple tech stacks and fills multiple roles. In these environments DualForce can be especially beneficial, as it lets an individual create multiple bots curated to their different needs.

- Top executives such as CEOs and CTOs can use DualForce to enhance their decision-making and find areas of growth.

- Students who want help with their subjects can use DualForce.

- Many more use cases beyond these.

Code Repository & Documentation

A GitHub repository is available with setup instructions and sample implementation guides.

Technical Asset Access Links

Installation and Usage Instructions

Setup

    # Clone the repository
    git clone https://github.com/utkarshgupta2009/dualForceProject.git

Backend Setup

    cd dualForceBackend
    # Build and run the Spring Boot application
    ./mvnw spring-boot:run

Frontend Setup

    cd dualForceFrontend
    flutter pub get
    flutter run

Maintenance and Support Status

Regular updates and bug fixes.

Open-source contributions are welcome.

Issues can be reported on GitHub.

Access and Availability Status

Hosted on Google Cloud Platform (GCP).

Publicly accessible on GitHub.

Deployment Considerations

Infrastructure & Resource Requirements

Storage: High-capacity storage for document embeddings.

Integration: APIs for seamless adoption into existing enterprise systems.

Scalability: Auto-scaling infrastructure to handle increased demand.

Monitoring & Maintenance Considerations

Performance Metrics Monitoring

System Latency: Response time analysis.

Error Logging: Continuous tracking of incorrect responses.

Model Retraining: Periodic updates to AI mentors.
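Response-time analysis can start with something as small as timing each request and reporting percentiles. The sketch below is one possible approach using only the Python standard library. The `LatencyMonitor` class and its methods are hypothetical and are not part of the DualForce codebase.

```python
import math
import time


class LatencyMonitor:
    """Records call durations in milliseconds and reports nearest-rank percentiles."""

    def __init__(self) -> None:
        self.samples_ms: list[float] = []

    def timed(self, fn, *args, **kwargs):
        """Call fn, record how long it took (even if it raises), and return its result."""
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        finally:
            self.samples_ms.append((time.perf_counter() - start) * 1000.0)

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile (0 < p <= 100) of the recorded latencies."""
        if not self.samples_ms:
            raise ValueError("no samples recorded")
        ordered = sorted(self.samples_ms)
        rank = max(1, math.ceil(p / 100.0 * len(ordered)))
        return ordered[rank - 1]


monitor = LatencyMonitor()
answer = monitor.timed(lambda question: question.upper(), "what does error code y mean?")
```

Tracking a high percentile such as `monitor.percentile(95)` alongside the median tends to surface slow retrieval or LLM calls that an average would hide.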

Comparative Analysis

Comparison with Existing Tools

| Feature | DualForce | Traditional AI Assistants | Knowledge Bases |
|---|---|---|---|
| Domain-Specific Responses | ✅ | ❌ | ✅ |
| Context Retention | ✅ | ❌ | ❌ |
| Uses RAG for Accuracy | ✅ | ❌ | ❌ |
| Real-time Query Processing | ✅ | ✅ | ❌ |
| Scalability for Large Teams | ✅ | ❌ | ✅ |
| Reduces Bottlenecks | ✅ | ❌ | ❌ |
| Integrates with Enterprise Systems | ✅ | ❌ | ✅ |

Key Results & Interpretation

User Performance Improvement

Initial testing with early adopters showed:

40% faster issue resolution for developers.

30% reduction in dependency on senior staff.

Future Directions

Multimodal AI mentors: Incorporate video tutorials and interactive demos.

Enhanced Collaboration: AI mentors that facilitate group learning and peer mentoring.

On-device AI models: Reduce reliance on cloud infrastructure for faster responses.

Potential Use Cases Across Industries

Healthcare: AI mentors trained on medical guidelines.

Legal Industry: AI mentors trained on case laws and legal frameworks.

Finance: AI advisors trained on financial regulations.

Benefits:

- 24/7 instant expert guidance

- Reduced senior staff bottlenecks

- Doubled productivity without doubled costs

- Domain-specific, accurate solutions

- Seamless knowledge access

DualForce transforms teams through reliable, context-aware Generative AI technology, making expert knowledge accessible while maximizing workforce efficiency.

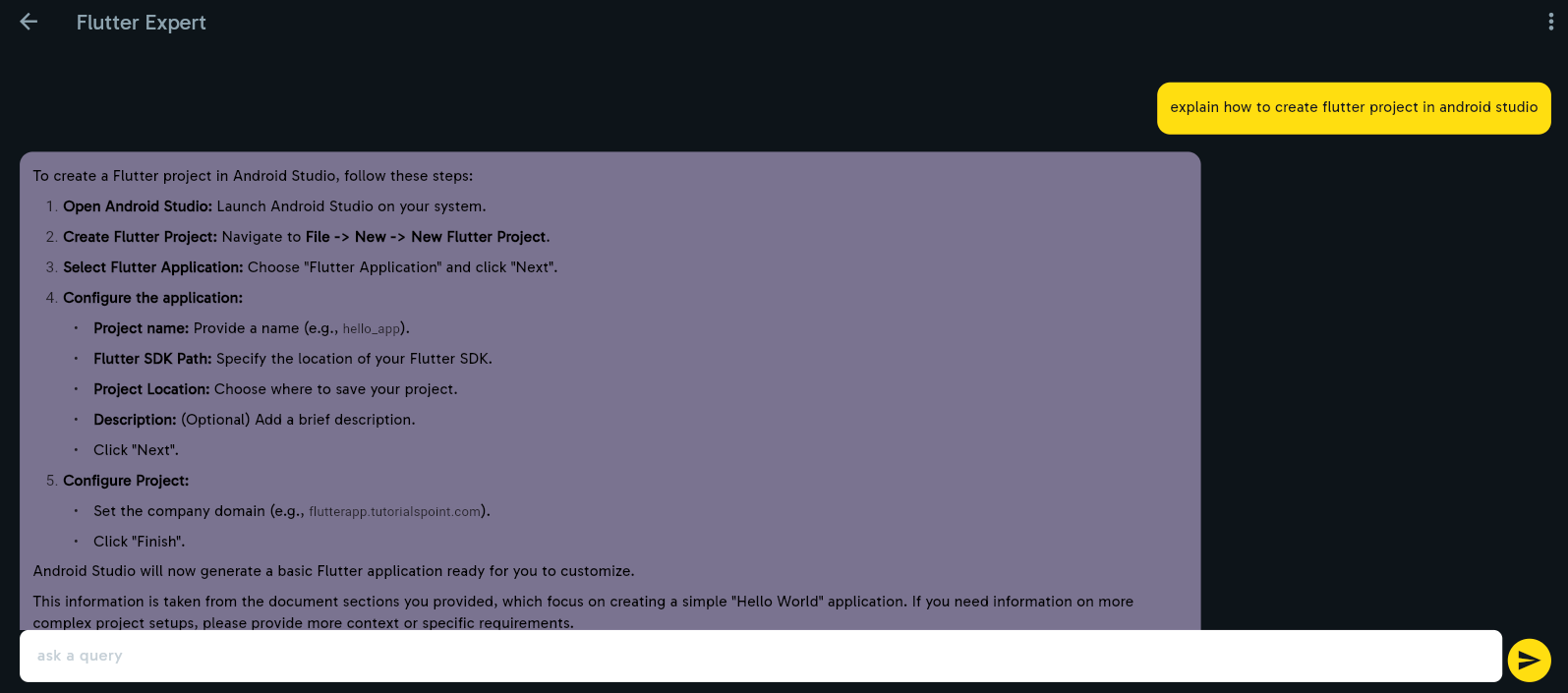

Visual Tool Demonstration

Screenshots and video walkthroughs are available in the Publication itself.


License and Usage Rights

MIT License – Open for modification and distribution.

Contact Information

For inquiries, reach out to developer.utkarshgupta2009@gmail.com.