Recent advances in large language models have enabled the development of agentic AI systems capable of planning, executing, and adapting actions to achieve complex goals. Unlike traditional AI workflows that operate in a single input–output step, agentic systems function over multiple iterations, making autonomous decisions throughout the execution process.

However, increased autonomy introduces challenges related to safety, reliability, and termination control. Unbounded agents may loop indefinitely or produce inconsistent results without proper evaluation and constraints.

This project presents a safe autonomous task planner built using a bounded agentic control loop. The system decomposes a high-level user goal into executable tasks, evaluates intermediate outputs, and dynamically adjusts its plan while enforcing strict safety limits. The result is a controlled, interpretable, and production-ready agentic AI system suitable for real-world applications.

This project presents a safe and autonomous task-planning system built using an agentic control loop architecture. The system accepts a high-level user objective, decomposes it into executable steps, evaluates intermediate outputs, and dynamically adapts its plan when necessary.

Unlike traditional automation pipelines that follow rigid workflows, this system emphasizes controlled autonomy, ensuring that decision-making remains bounded, interpretable, and safe for real-world use.

Most AI systems today operate in a simple input → output paradigm. While effective for single-step tasks, this approach struggles with complex goals that require planning, feedback, and adaptation.

Agentic systems address this by iterating toward a goal, but unrestricted autonomy introduces risks such as infinite loops, poor decision quality, and unpredictable execution. This project addresses that challenge by combining autonomy with explicit safety constraints.

Single-step systems and unconstrained agents are therefore both unsuitable for multi-step, goal-driven workflows where quality control and termination guarantees are essential.

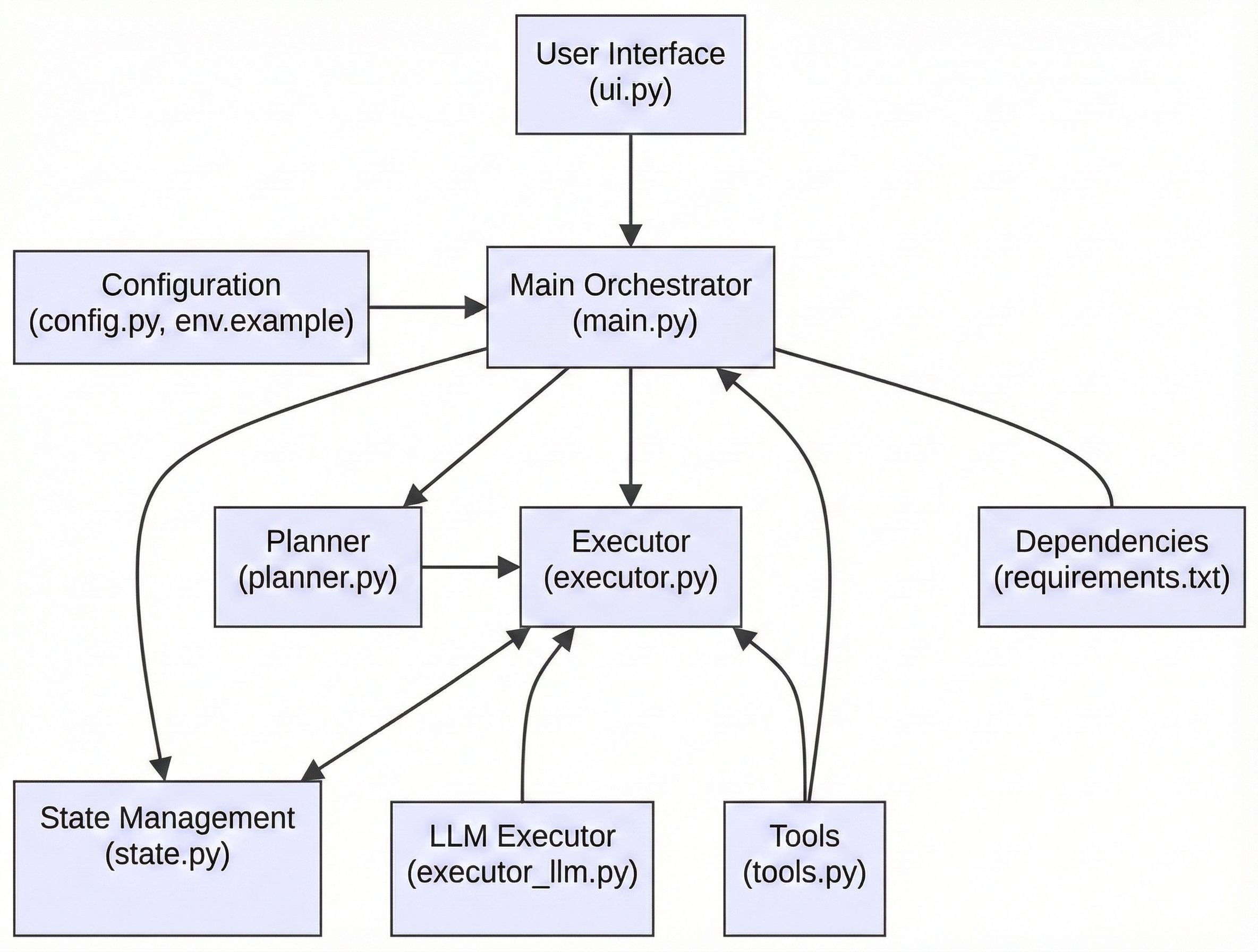

The proposed solution implements a modular agentic architecture with clearly defined responsibilities across agents.

This separation of concerns improves transparency, maintainability, and reliability.

The system is designed as a modular agentic architecture that enables safe, autonomous task execution through a bounded control loop. Each component has a clearly defined responsibility, allowing the system to remain interpretable, extensible, and production-ready.

Figure 1: High-level system architecture of the production-ready agentic task planner. The system follows a bounded control loop to ensure safe and deterministic execution.

At a high level, the architecture follows a Planner → Executor → Evaluator → Control pattern, orchestrated by a central loop that enforces safety and termination constraints.

The Planner is responsible for transforming a high-level user goal into a structured and ordered list of executable tasks. This decomposition enables the system to reason about complex objectives in a step-by-step manner rather than attempting to solve them in a single response.
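To make the decomposition step concrete, here is a minimal sketch of how free-form planner output could be parsed into an ordered task list. The `Task` structure, `parse_plan`, `plan_goal`, and the `fake_generate` stand-in for an LLM call are all hypothetical names introduced for illustration; they are not taken from `planner.py`.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Task:
    """One executable step in a plan (hypothetical structure)."""
    index: int         # 1-based position in the plan
    description: str   # what the executor should do


def parse_plan(raw: str) -> List[Task]:
    """Turn numbered or bulleted lines into an ordered list of tasks."""
    tasks: List[Task] = []
    for line in raw.splitlines():
        # Strip leading markers such as "1.", "2)", "-" or "*".
        text = re.sub(r"^\s*(?:\d+[.)]|[-*])\s*", "", line).strip()
        if text:
            tasks.append(Task(index=len(tasks) + 1, description=text))
    return tasks


def plan_goal(goal: str, generate: Callable[[str], str], max_tasks: int = 8) -> List[Task]:
    """Ask a text generator for a plan and cap its length."""
    prompt = f"Break this goal into short, ordered steps:\n{goal}"
    return parse_plan(generate(prompt))[:max_tasks]


def fake_generate(prompt: str) -> str:
    """Stand-in for an LLM call so the example runs offline."""
    return "1. Collect requirements\n2. Draft outline\n\n3) Write summary"


plan = plan_goal("Write a project summary", fake_generate)
for task in plan:
    print(task.index, task.description)
```

Capping the plan length with `max_tasks` is one simple way to keep the planner itself bounded, in line with the safety goals described elsewhere in this document.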

The Executor carries out individual tasks generated by the planner. The system supports multiple execution strategies, including LLM-based execution, allowing tasks to be performed dynamically based on context and requirements.
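One way to support several execution strategies is a small registry that dispatches each task to a named strategy. The sketch below assumes strategies are plain functions from a task description to an output string; the `Executor` class and both strategies are illustrative, and the LLM strategy is a placeholder rather than a real model call.

```python
from typing import Callable, Dict, Optional

# An execution strategy maps a task description to an output string.
Strategy = Callable[[str], str]


def llm_strategy(task: str) -> str:
    """Placeholder for an LLM-backed executor; a real one would call a model API."""
    return f"[llm] response for: {task}"


def template_strategy(task: str) -> str:
    """Predefined, non-LLM strategy for simple tasks."""
    return f"[template] completed: {task}"


class Executor:
    """Dispatches each task to a registered strategy (hypothetical design)."""

    def __init__(self, default: str = "llm") -> None:
        self._strategies: Dict[str, Strategy] = {}
        self._default = default

    def register(self, name: str, strategy: Strategy) -> None:
        self._strategies[name] = strategy

    def execute(self, task: str, strategy: Optional[str] = None) -> str:
        name = strategy or self._default
        if name not in self._strategies:
            raise KeyError(f"unknown execution strategy: {name}")
        return self._strategies[name](task)


executor = Executor()
executor.register("llm", llm_strategy)
executor.register("template", template_strategy)
print(executor.execute("Draft outline"))
print(executor.execute("Format report", strategy="template"))
```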

The Evaluator assesses the output of each executed task using predefined validation criteria. Based on the evaluation result, it determines whether the task output is acceptable, requires a retry, or triggers a replanning step.
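The three possible outcomes can be modeled as an enumeration, with each validation criterion declaring which outcome a failure should trigger. This is a sketch under assumptions: the criteria shown (non-empty output, a minimum word count, and a phrase check for infeasibility) are invented for illustration and are not the project's actual validation rules.

```python
from enum import Enum
from typing import Callable, List, NamedTuple


class Verdict(Enum):
    ACCEPT = "accept"
    RETRY = "retry"
    REPLAN = "replan"


class Criterion(NamedTuple):
    name: str
    check: Callable[[str], bool]   # True when the output passes
    on_fail: Verdict               # what a failure should trigger


# Illustrative criteria; real ones would be task-specific.
CRITERIA: List[Criterion] = [
    Criterion("non_empty", lambda out: bool(out.strip()), Verdict.RETRY),
    Criterion("min_words", lambda out: len(out.split()) >= 5, Verdict.RETRY),
    Criterion("feasible", lambda out: "not possible" not in out.lower(), Verdict.REPLAN),
]


def evaluate(output: str, criteria: List[Criterion] = CRITERIA) -> Verdict:
    """Return ACCEPT if every criterion passes, else the most severe failure."""
    failures = {c.on_fail for c in criteria if not c.check(output)}
    if Verdict.REPLAN in failures:
        return Verdict.REPLAN
    if Verdict.RETRY in failures:
        return Verdict.RETRY
    return Verdict.ACCEPT


print(evaluate("The outline covers scope, methods, and results."))  # Verdict.ACCEPT
print(evaluate("Too short"))                                         # Verdict.RETRY
print(evaluate("This step is not possible with the given inputs."))  # Verdict.REPLAN
```

Treating a replan-level failure as more severe than a retry-level one means a structurally wrong task is not retried needlessly.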

The State Manager maintains execution context across the entire lifecycle of the task. It tracks task progress, retry counts, and completion status, ensuring that decisions are made based on current system state.
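A state object of this kind can be sketched as a small dataclass. The field and method names below are assumptions chosen for illustration and may differ from what `state.py` actually contains.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ExecutionState:
    """Tracks progress for one run (field names are illustrative)."""
    tasks: List[str]
    current: int = 0                                        # index of the task in progress
    retries: Dict[int, int] = field(default_factory=dict)   # task index -> retry count
    outputs: Dict[int, str] = field(default_factory=dict)   # task index -> accepted output

    def record_retry(self) -> int:
        """Increment and return the retry count for the current task."""
        self.retries[self.current] = self.retries.get(self.current, 0) + 1
        return self.retries[self.current]

    def record_success(self, output: str) -> None:
        """Store the accepted output and advance to the next task."""
        self.outputs[self.current] = output
        self.current += 1

    @property
    def done(self) -> bool:
        """True once every task has an accepted output."""
        return self.current >= len(self.tasks)


state = ExecutionState(tasks=["research", "draft"])
state.record_retry()              # first attempt at "research" was rejected
state.record_success("notes")     # second attempt accepted
print(state.current, state.retries, state.done)
```

Keeping retry counts keyed by task index lets the control logic check limits per task rather than per run.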

The control loop, implemented in the main orchestration layer, coordinates all agents. It enforces safety constraints such as retry limits and termination conditions, ensuring bounded and deterministic execution.
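The following is a minimal sketch of such a bounded loop, not the code in `main.py`. It assumes the planner, executor, and evaluator are passed in as plain callables, and that two hypothetical limits apply: `MAX_RETRIES` per task and `MAX_STEPS` executor calls per run. Replanning is left out to keep the example short.

```python
from typing import Callable, List, Tuple

MAX_RETRIES = 2   # assumed per-task retry limit
MAX_STEPS = 20    # assumed global cap on executor calls


def run(
    goal: str,
    plan: Callable[[str], List[str]],
    execute: Callable[[str], str],
    accept: Callable[[str], bool],
) -> Tuple[str, List[str]]:
    """Run tasks in order; stop on completion or when a limit is hit."""
    tasks = plan(goal)
    outputs: List[str] = []
    steps = 0
    for task in tasks:
        attempts = 0
        while True:
            if steps >= MAX_STEPS:
                return "stopped: step budget exhausted", outputs
            steps += 1
            result = execute(task)
            if accept(result):
                outputs.append(result)
                break
            attempts += 1
            if attempts > MAX_RETRIES:
                return f"stopped: retry limit reached on '{task}'", outputs
    return "completed", outputs


status, outputs = run(
    "Summarize a report",
    plan=lambda goal: ["read", "summarize"],
    execute=lambda task: f"done: {task}",
    accept=lambda result: result.startswith("done"),
)
print(status, outputs)
```

Every path through the loop either advances to the next task or consumes part of a finite budget, which is what makes termination certain regardless of what the executor returns.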

A Streamlit-based UI provides an interactive interface for users to submit goals and observe system behavior, making the agentic workflow easy to test and analyze.

1. A user submits a high-level goal through the CLI or UI.
2. The Planner generates a structured task plan.
3. The Executor performs the current task.
4. The Evaluator validates the output.
5. The State Manager updates execution state.
6. The Control Loop decides to proceed, retry, replan, or terminate.

This cycle continues until the objective is completed or safety limits are reached.

Modularity: Each agent operates independently with a single responsibility

Safety: Explicit guardrails prevent infinite loops and uncontrolled execution

Transparency: Clear task boundaries improve traceability and debugging

Extensibility: Components can be enhanced or replaced without redesigning the system

This architecture provides a strong foundation for building real-world agentic AI systems that require autonomy without sacrificing control or reliability.

AAIDC-Module-3-Production-Agentic-System/

│

├── main.py # Entry point – orchestrates the agentic loop

├── planner.py # Task planning logic

├── executor.py # Task execution strategies

├── executor_llm.py # LLM-powered execution using Groq

├── evaluator.py # Output evaluation and decision logic

├── state.py # Execution state management

├── tools.py # Utility tools for generation and validation

├── ui.py # Streamlit-based UI

├── config.py # Central configuration

├── env.example # Example environment variables

├── requirements.txt # Python dependencies

├── README.md # Project documentation

└── LICENSE # License information

The system follows a bounded agentic workflow designed to safely execute complex objectives through iterative planning, execution, evaluation, and control. Rather than producing a single response, the agent operates over multiple steps, continuously assessing progress and adapting its behavior when necessary.

The methodology is centered around a Plan → Execute → Evaluate → Control loop, ensuring both autonomy and reliability.

The workflow begins when a user provides a high-level goal through either the command-line interface or the Streamlit UI. This goal represents an abstract objective that may require multiple steps to complete.

The Planner Agent analyzes the user goal and decomposes it into a structured sequence of smaller, executable tasks. This step transforms an unstructured request into a clear execution plan, enabling step-by-step reasoning and improved control.

The Executor Agent processes tasks one at a time based on the generated plan. Depending on the task requirements, execution may involve LLM-based reasoning or predefined execution strategies. Each task produces an intermediate output that is passed to the evaluation stage.

The Evaluator Agent examines the task output against predefined validation criteria such as completeness, relevance, or correctness. Based on this evaluation, the system determines whether the task is successfully completed or requires further action.

Using feedback from the evaluator and the current execution state, the control logic chooses one of four actions: proceed to the next task, retry the current task, replan, or terminate.

These decisions are governed by explicit safety constraints.
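The control decision can be viewed as a pure function of the evaluator's verdict and the remaining budget. The sketch below assumes string verdict labels (`"accept"`, `"retry"`, `"replan"`) and hypothetical limits `max_retries` and `max_replans`; the escalation rule (a retry with no retries left becomes a replan) is one plausible policy, not necessarily the project's.

```python
from enum import Enum


class Action(Enum):
    PROCEED = "proceed"
    RETRY = "retry"
    REPLAN = "replan"
    TERMINATE = "terminate"


def decide(verdict: str, retries: int, replans: int,
           max_retries: int = 2, max_replans: int = 1) -> Action:
    """Choose the next action from the verdict and how much budget is left."""
    if verdict not in ("accept", "retry", "replan"):
        raise ValueError(f"unknown verdict: {verdict}")
    if verdict == "accept":
        return Action.PROCEED
    if verdict == "retry" and retries < max_retries:
        return Action.RETRY
    # A replan request, or a retry with no retries left, escalates to replanning.
    if replans < max_replans:
        return Action.REPLAN
    # Both budgets are exhausted, so the only safe option is to stop.
    return Action.TERMINATE


print(decide("retry", retries=0, replans=0))   # Action.RETRY
print(decide("retry", retries=2, replans=0))   # Action.REPLAN
print(decide("retry", retries=2, replans=1))   # Action.TERMINATE
```

Because the function has no side effects, the full decision table can be unit-tested without running any agent.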

The State Manager maintains contextual information throughout the workflow, including task progress, retry counts, and completion status. This ensures consistent decision-making across iterations and prevents uncontrolled behavior.

The workflow concludes when either the objective is completed or a safety limit is reached.

This guarantees deterministic termination and prevents infinite execution loops.

1. Receive user goal
2. Decompose goal into tasks
3. Execute tasks sequentially
4. Evaluate outputs
5. Apply control decisions
6. Update state
7. Terminate safely

This methodology demonstrates how agentic AI systems can operate autonomously while remaining bounded, interpretable, and production-ready.

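The system operates using a bounded control loop: Plan → Execute → Evaluate → Control, repeated until the objective is completed or a safety limit is reached.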

This loop ensures that every action is evaluated and that the system exits deterministically.

Autonomous agentic systems require explicit safeguards to ensure predictable and reliable behavior. To address the risks associated with unbounded autonomy, this project incorporates multiple safety and control mechanisms that govern execution, decision-making, and termination.

The goal is to balance autonomy with control, enabling the agent to adapt intelligently while preventing runaway execution or inconsistent outcomes.

The system enforces bounded autonomy by limiting how long and how often an agent can retry or replan tasks. Each task is associated with predefined retry limits, ensuring that failures do not result in infinite execution loops.

Once these limits are reached, the system terminates safely instead of continuing indefinitely.
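Limits like these are often kept in one immutable configuration object so that no component can loosen them at runtime. The sketch below is an assumption about how that could look: the `SafetyLimits` fields, their defaults, and the environment variable names are all hypothetical and are not taken from `config.py` or `env.example`.

```python
import os
from dataclasses import dataclass
from typing import Mapping


@dataclass(frozen=True)
class SafetyLimits:
    """Hard bounds for one run (names and defaults are assumptions)."""
    max_retries_per_task: int = 2
    max_replans: int = 1
    max_total_steps: int = 25

    def __post_init__(self) -> None:
        # Reject negative limits early so a bad config cannot disable the bounds.
        for name, value in vars(self).items():
            if value < 0:
                raise ValueError(f"{name} must be non-negative, got {value}")


def limits_from_env(env: Mapping[str, str] = os.environ) -> SafetyLimits:
    """Read limits from environment variables, falling back to the defaults."""
    base = SafetyLimits()
    return SafetyLimits(
        max_retries_per_task=int(env.get("MAX_RETRIES_PER_TASK", base.max_retries_per_task)),
        max_replans=int(env.get("MAX_REPLANS", base.max_replans)),
        max_total_steps=int(env.get("MAX_TOTAL_STEPS", base.max_total_steps)),
    )


limits = limits_from_env({"MAX_RETRIES_PER_TASK": "3"})
print(limits)
```

Marking the dataclass as frozen means an attempt to change a limit after construction raises an error instead of silently widening the bounds.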

Execution is guaranteed to end under one of the following conditions: the objective is completed, or a safety limit such as the retry limit is reached.

These explicit termination rules ensure deterministic and predictable system behavior.
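The termination guarantee can be made concrete with a simple counting argument. As a sketch, assume (these symbols are not defined by the project itself) that each plan contains at most T tasks, each task may be retried at most R times, and the plan may be regenerated at most P times, with retry counters reset after each replan. Each plan then triggers at most T(R + 1) executor calls, and there are at most P + 1 plans, so the total number of executor calls is bounded by:

```latex
N_{\text{exec}} \;\le\; (P + 1) \cdot T \cdot (R + 1)
```

For example, with T = 5, R = 2, and P = 1, the executor is called at most 2 · 5 · 3 = 30 times before the run either completes or stops. The bound depends only on the configured limits, not on what the executor or evaluator return.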

Rather than blindly retrying failed tasks, the system differentiates between retrying the current task and replanning.

This distinction prevents unnecessary repetition and allows the system to adapt intelligently when initial assumptions are incorrect.

All execution decisions are driven by the Evaluator Agent, which validates task outputs against defined criteria. Only evaluated results influence control flow, ensuring that decisions are based on observable outcomes rather than assumptions.

This evaluation-first approach makes every control decision traceable to a concrete evaluation result, which improves reliability and transparency.

The State Manager maintains critical execution metadata, including task progress, retry counts, and completion status.

By making safety decisions state-aware, the system ensures consistent behavior across iterations and prevents uncontrolled transitions.

In scenarios where progress cannot be achieved within safety limits, the system fails gracefully by:

This fail-safe design is essential for real-world deployment.
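One plausible shape for graceful failure is to return a structured report that preserves partial progress instead of letting an exception escape. The sketch below is purely illustrative: `RunReport`, `run_safely`, and the simulated limit error are hypothetical and do not describe the project's actual failure handling.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class RunReport:
    """Outcome of a run, returned even when the goal is not reached."""
    status: str                                          # "completed" or "stopped"
    completed: List[str] = field(default_factory=list)   # tasks that finished
    failed_task: Optional[str] = None                    # task at which the run stopped
    reason: Optional[str] = None                         # human-readable stop reason


def run_safely(tasks: List[str], execute: Callable[[str], str]) -> RunReport:
    """Execute tasks in order and convert any failure into a report."""
    report = RunReport(status="completed")
    for task in tasks:
        try:
            execute(task)
        except Exception as exc:  # broad on purpose: nothing should escape the loop
            report.status = "stopped"
            report.failed_task = task
            report.reason = f"{type(exc).__name__}: {exc}"
            break
        report.completed.append(task)
    return report


def flaky_execute(task: str) -> str:
    """Simulated executor that hits a limit on one task."""
    if task == "summarize":
        raise RuntimeError("retry limit reached")
    return f"done: {task}"


report = run_safely(["read", "summarize", "publish"], flaky_execute)
print(report.status, report.completed, report.failed_task, report.reason)
```

Returning the report rather than raising lets a CLI or UI show which tasks succeeded and why the run stopped.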

The safety mechanisms implemented in this project ensure that:

These controls demonstrate how agentic AI systems can be designed to operate responsibly without sacrificing adaptability or intelligence.

The autonomous task planner was evaluated through a series of goal-driven scenarios designed to test planning accuracy, execution reliability, and safety behavior. Rather than focusing on numerical benchmarks, the evaluation emphasizes behavioral correctness, control, and robustness, which are critical for production-oriented agentic systems.

The system consistently demonstrated the ability to:

These behaviors confirm that the agentic control loop operates as intended.

Safety mechanisms were validated by intentionally introducing ambiguous or failure-prone tasks. In such cases, the system:

This confirms the effectiveness of bounded autonomy and explicit termination logic.

These limitations provide clear directions for future improvement.

The system successfully demonstrates a production-ready agentic workflow that balances autonomy with control. It reliably handles multi-step objectives while maintaining safety guarantees, making it suitable as a foundation for real-world agentic AI applications.

This project demonstrates:

These principles are essential for deploying agentic AI systems in real-world environments.

This project demonstrates how agentic AI systems can be designed to operate autonomously while remaining safe, reliable, and production-ready. By combining structured planning, controlled execution, evaluation-driven decision-making, and explicit safety constraints, the system achieves meaningful autonomy without sacrificing predictability or control.

The modular architecture and bounded agentic control loop provide a clear and extensible foundation for real-world applications that require multi-step reasoning and adaptive behavior. Through careful state management and deterministic termination logic, the system avoids common pitfalls such as infinite loops and uncontrolled execution.

Overall, this work highlights practical engineering principles for building responsible agentic AI systems and serves as a strong reference implementation for production-oriented autonomous agents within the Agentic AI Developer Certification framework.

https://github.com/Sanjaystarc/AAIDC-Module-3-Production-Agentic-System