📌 Abstract

📘 Multi-Agent System Analyzer: Complete Technical Documentation

📑 Table of Contents

1. Introduction

2. Understanding AI Agents in Code Analysis

3. What is the Multi-Agent System Analyzer?

4. Why Multi-Agent Intelligence for Code Analysis?

5. Evolution from Traditional Static Analysis to Agentic Systems

6. Core Components of the System

7. Agentic Architecture

8. Agentic Patterns Implemented

9. Agent Workflows

10. Implementation Guide

11. Real-World Use Cases

12. Benefits and Challenges

13. Resilience and Reliability

14. Future Enhancements

15. Conclusion

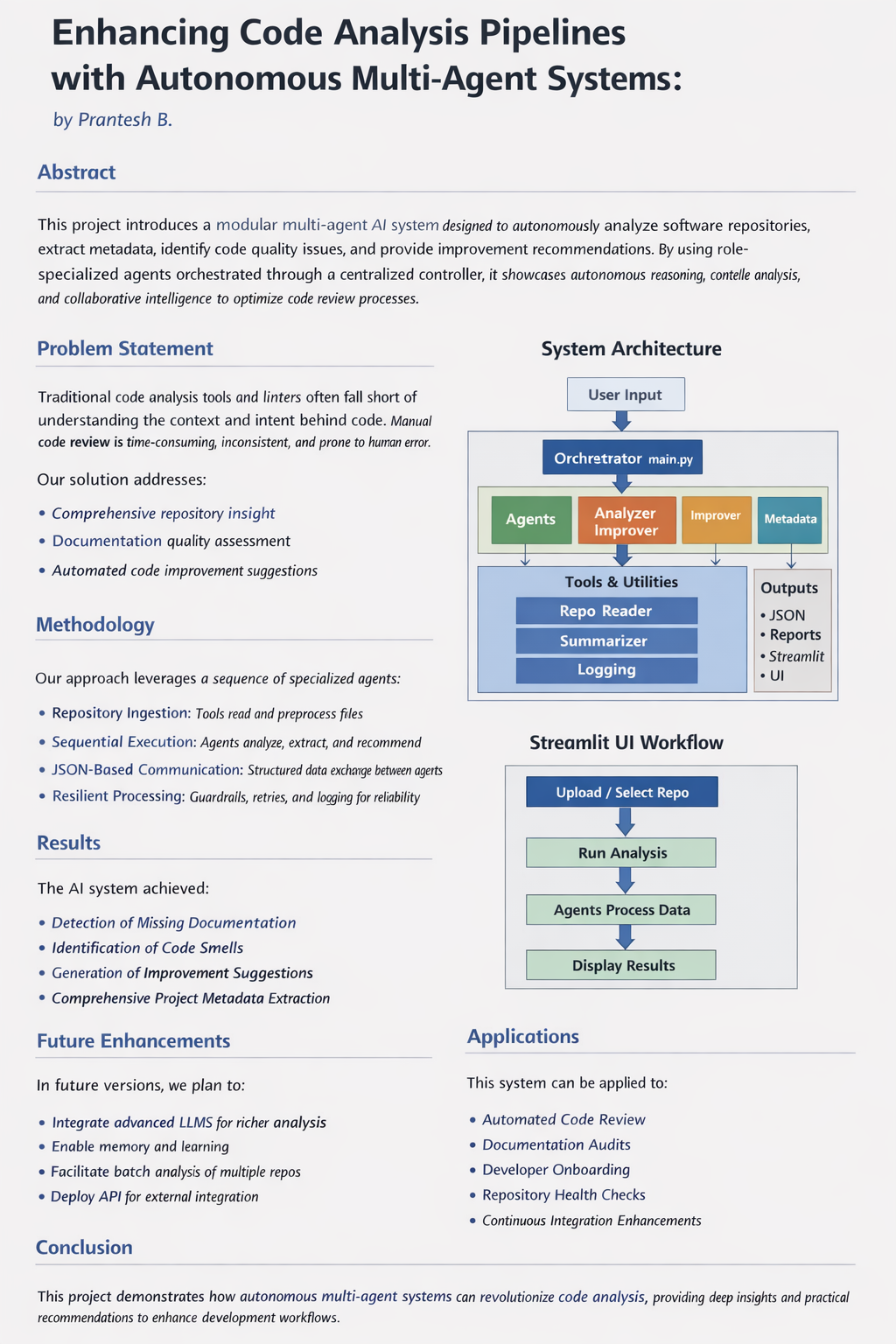

1️⃣ Introduction

Modern software development has evolved into a complex ecosystem where codebases grow rapidly, documentation falls out of date quickly, and maintaining code quality across distributed teams becomes increasingly difficult. By some estimates, developers spend 30-40% of their time understanding existing code, tracing architectural decisions, and identifying areas that need improvement.

Traditional static analysis tools like ESLint, Pylint, and SonarQube have long been the industry standard. While these tools excel at catching syntax errors and enforcing style guidelines, they generally lack:

• Contextual Understanding: Unable to comprehend the "why" behind architectural decisions

• Holistic Analysis: Focus on individual files rather than repository-wide health

• Intelligent Reasoning: Cannot provide human-like suggestions for improvements

• Adaptive Learning: Follow rigid, predefined rules without understanding intent

The Multi-Agent System Analyzer represents a paradigm shift in how we approach code analysis. By leveraging the principles of Agentic AI—autonomous reasoning, tool usage, iterative refinement, and coordinated decision-making—this system transcends the limitations of traditional analyzers.

The Problem This System Solves

When joining a new project or auditing an existing codebase, developers face critical questions:

❓ What does this project actually do?

❓ How is the codebase structured and organized?

❓ What improvements are urgently needed?

❓ Where is the documentation incomplete or missing?

❓ What are the hidden architectural weaknesses?

Answering these questions manually can take days or weeks. The Multi-Agent System Analyzer answers them in minutes.

2️⃣ Understanding AI Agents in Code Analysis

What are AI Agents?

An AI agent is an intelligent software system that autonomously performs tasks to achieve specific goals by leveraging Large Language Models (LLMs), external tools, and reasoning frameworks.

Think of AI as raw intelligence—it possesses vast knowledge but needs direction. An AI agent is the executor that takes this intelligence and applies it to solve real-world problems.

Simple Analogy

Imagine you're a tech lead reviewing a codebase submitted by a junior developer:

• Traditional Tool: A linter flags 150 style violations but doesn't explain why the architecture is problematic.

• AI Agent: An intelligent assistant that:

◦ Analyzes the entire repository structure

◦ Identifies that the project lacks separation of concerns

◦ Suggests refactoring patterns specific to your tech stack

◦ Points out missing documentation with examples

◦ Provides actionable, prioritized improvement recommendations

This is the difference between knowing something is wrong and understanding how to fix it.

AI Agent vs Traditional AI Systems

| Aspect | Traditional AI/ML | Generative AI (LLMs) | AI Agents |
| --- | --- | --- | --- |
| Knowledge | Limited to training data | Vast, but static | Extended via tools & APIs |
| Reasoning | Rule-based logic | Pattern recognition | Dynamic planning & decision-making |
| Autonomy | Executes predefined tasks | Responds to prompts | Autonomous goal achievement |
| Tool Usage | None | Limited | Extensive (APIs, databases, external systems) |
| Memory | None | Conversation context | Short-term & long-term memory |
| Workflow | Linear, fixed | Single inference | Iterative, multi-step processes |

The Three Pillars of AI Agents

An AI agent operates on three fundamental principles:

1. Understand → Perceive the environment and recognize the task

2. Think → Process information and determine the best course of action

3. Act → Execute tasks and deliver results

In the context of code analysis:

• Understand: Parse repository structure, read files, extract context

• Think: Analyze code quality, identify patterns, reason about improvements

• Act: Generate reports, suggest refactorings, flag critical issues

3️⃣ What is the Multi-Agent System Analyzer?

The Multi-Agent System Analyzer is a fully automated, AI-driven framework designed to:

✅ Analyze software repositories with human-like understanding

✅ Extract structured metadata (tech stack, dependencies, architecture)

✅ Detect improvement opportunities with contextual reasoning

✅ Evaluate documentation quality and completeness

✅ Generate actionable, prioritized reports

┌─────────────────────────────────────────────────────────────┐
│                         USER INPUT                          │
│               (Repository Path / GitHub URL)                │
└──────────────────────────────┬──────────────────────────────┘
                               │
                               ▼
┌─────────────────────────────────────────────────────────────┐
│                ORCHESTRATION LAYER (main.py)                │
│      Coordinates workflow, manages agent interactions,      │
│                     aggregates results                      │
└────────┬─────────────────────┬─────────────────────┬────────┘
         │                     │                     │
         ▼                     ▼                     ▼
  ┏━━━━━━━━━━━━━┓       ┏━━━━━━━━━━━━━┓       ┏━━━━━━━━━━━━━┓
  ┃  ANALYZER   ┃       ┃  METADATA   ┃       ┃  IMPROVER   ┃
  ┃    AGENT    ┃       ┃    AGENT    ┃       ┃    AGENT    ┃
  ┗━━━━━━━━━━━━━┛       ┗━━━━━━━━━━━━━┛       ┗━━━━━━━━━━━━━┛
         │                     │                     │
         └─────────────────────┼─────────────────────┘
                               │
                               ▼
                   ┌───────────────────────┐
                   │   TOOLS & UTILITIES   │
                   ├───────────────────────┤
                   │ • RepoReader          │
                   │ • Summarizer          │
                   │ • WebSearchTool       │
                   │ • Guardrails          │
                   │ • Monitoring          │
                   │ • Retry Logic         │
                   └───────────┬───────────┘
                               │
                               ▼
┌─────────────────────────────────────────────────────────────┐
│                      STRUCTURED OUTPUT                      │
│    • Analysis Report   • Metadata JSON   • Improvements     │
└─────────────────────────────────────────────────────────────┘

Figure 1: Multi-Agent System Architecture Overview

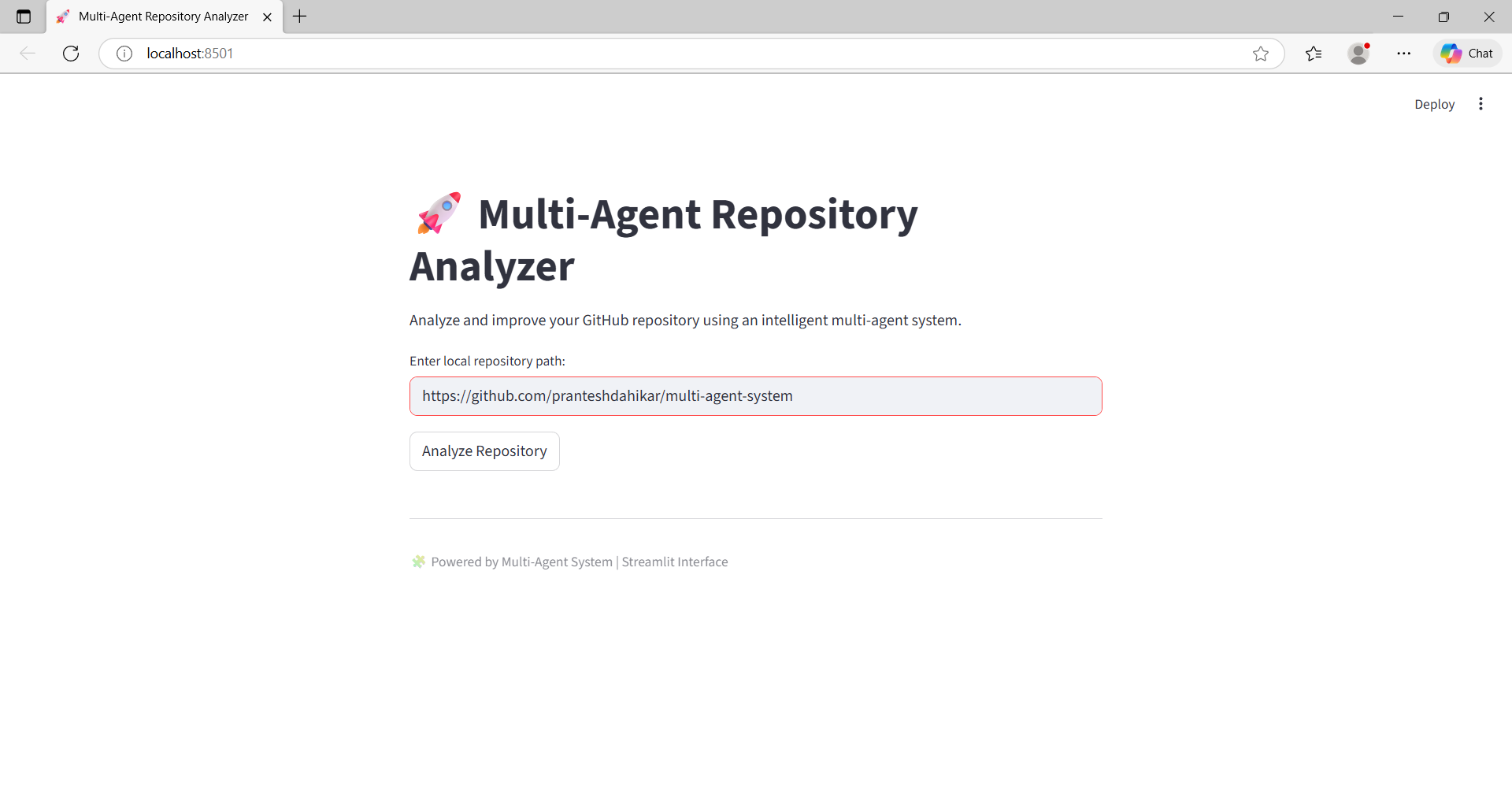

4️⃣ Why Multi-Agent Intelligence for Code Analysis?

The Power of Specialization

Instead of relying on a monolithic model attempting to do everything, this system employs role-specialized agents, each responsible for a distinct reasoning layer:

Agent Responsibility Core Capability

AnalyzerAgent Code structure & issue detection Contextual code understanding

MetadataAgent Project-level insights extraction Structured data extraction

ImproverAgent Improvement suggestions Best practices reasoning

This mirrors how human code review teams operate:

• MetadataAgent → Understands high-level structure

• AnalyzerAgent → Deep-dives into implementation details

• ImproverAgent → Suggests improvements and best practices

Real-World Applications

This system is designed with practical, production-relevant use cases in mind:

• Automated Code Reviews – Accelerate pull request reviews by generating structured insights before human review.

• Repository Onboarding – Help new developers quickly understand unfamiliar codebases.

• Technical Debt Assessment – Identify architectural weaknesses, documentation gaps, and maintainability risks.

• Open-Source Quality Audits – Evaluate repository readiness for contributors and long-term maintenance.

• AI-Assisted Documentation Generation – Provide intelligent suggestions for missing or incomplete documentation.

These applications demonstrate the system’s value beyond experimentation, positioning it as a developer productivity and quality-assurance tool.

System Requirements

🖥️ Technical Requirements

• Python 3.10+

• Minimum 4GB RAM (8GB recommended)

• Internet connectivity

• ~1GB disk space

🔑 API Requirements

Create a .env file containing your OpenAI API key (available from platform.openai.com).

Implementation

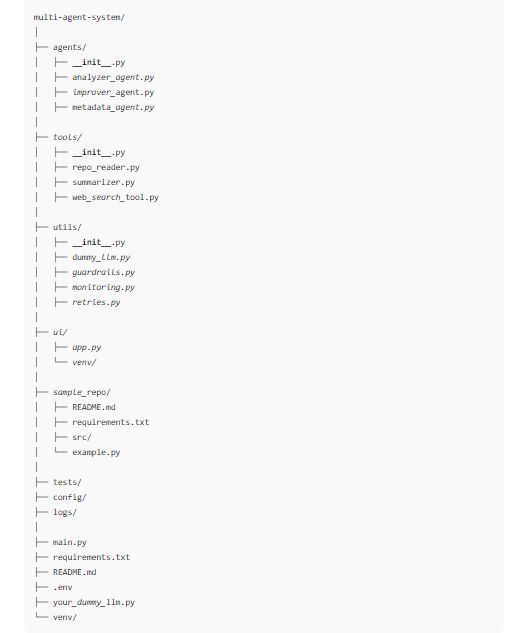

🗂 Project Structure

The Multi-Agent System is organized using a modular, agent-driven architecture. Each directory has a clear responsibility, enabling scalability, maintainability, and clean separation of concerns between agents, tools, UI, and utilities.

multi-agent-system/

│

├── agents/ # 🧠 Autonomous AI Agents

│ ├── analyzer_agent.py # Code analysis & issue detection

│ ├── metadata_agent.py # Metadata extraction

│ └── improver_agent.py # Improvement suggestions

│

├── tools/ # 🛠️ Reusable Tools

│ ├── repo_reader.py # Repository file traversal

│ ├── summarizer.py # Result aggregation

│ └── web_search_tool.py # External knowledge lookup

│

├── utils/ # ⚙️ Reliability & Observability

│ ├── guardrails.py # Input/output validation

│ ├── retries.py # Fault-tolerant execution

│ ├── monitoring.py # Logging & tracking

│ └── dummy_llm.py # Mock LLM for testing

│

├── ui/ # 🖥️ User Interface

│ └── app.py # Streamlit web application

│

├── tests/ # ✅ Unit & Integration Tests

│

├── sample_repo/ # 📂 Demo Repository

│

├── main.py # 🚀 Orchestration Entry Point

├── requirements.txt # 📦 Dependencies

├── .env # 🔐 Environment Variables

└── README.md # 📖 Documentation

Component Breakdown

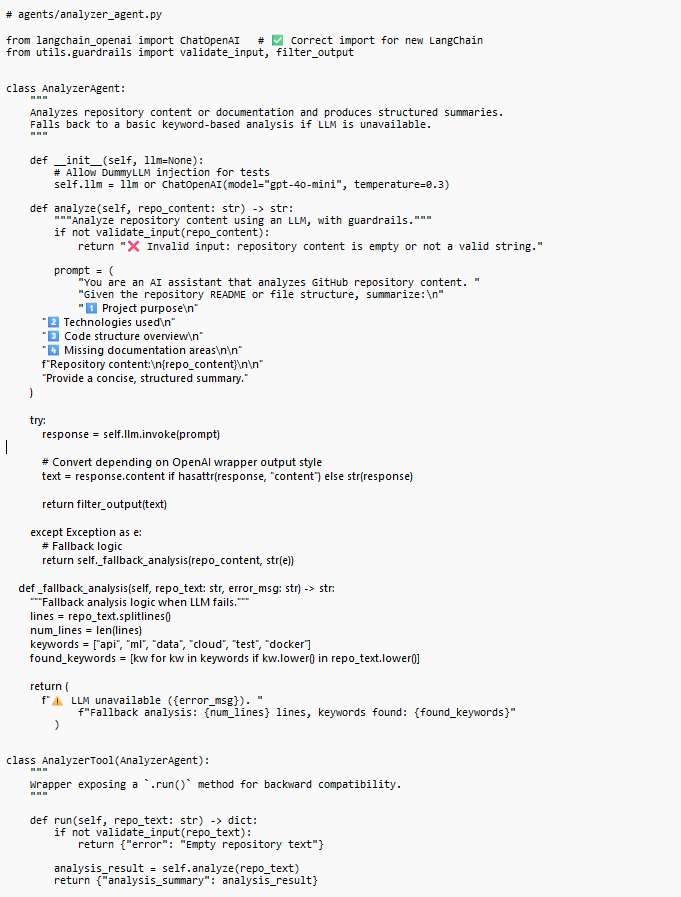

🧠 Agents (agents/)

- AnalyzerAgent (analyzer_agent.py)

Purpose: Perform deep code analysis, detect architectural issues, and identify documentation gaps.

Core Functionality:

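from langchain_openai import ChatOpenAI  # assumes the langchain-openai package is installed

class AnalyzerAgent:
    def __init__(self, llm=None):
        # Use the injected LLM if one is given; otherwise default to gpt-4o-mini
        self.llm = llm or ChatOpenAI(model="gpt-4o-mini", temperature=0.4)

    def analyze(self, repo_content: str) -> str:
        """
        Analyzes repository structure and detects:
        - Code quality issues
        - Architectural inconsistencies
        - Documentation gaps
        - Naming convention violations
        """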

Output Example:

{
  "project_overview": "Python-based multi-agent system for code analysis...",
  "detected_issues": [
    "Missing comprehensive README documentation",
    "Inconsistent naming conventions in utils/ module",
    "No edge case handling in MetadataAgent",
    "Environment variables not validated before initialization"
  ],
  "documentation_gaps": [
    "No installation guide for beginners",
    "Missing architecture diagram",
    "No API documentation for agents"
  ]
}

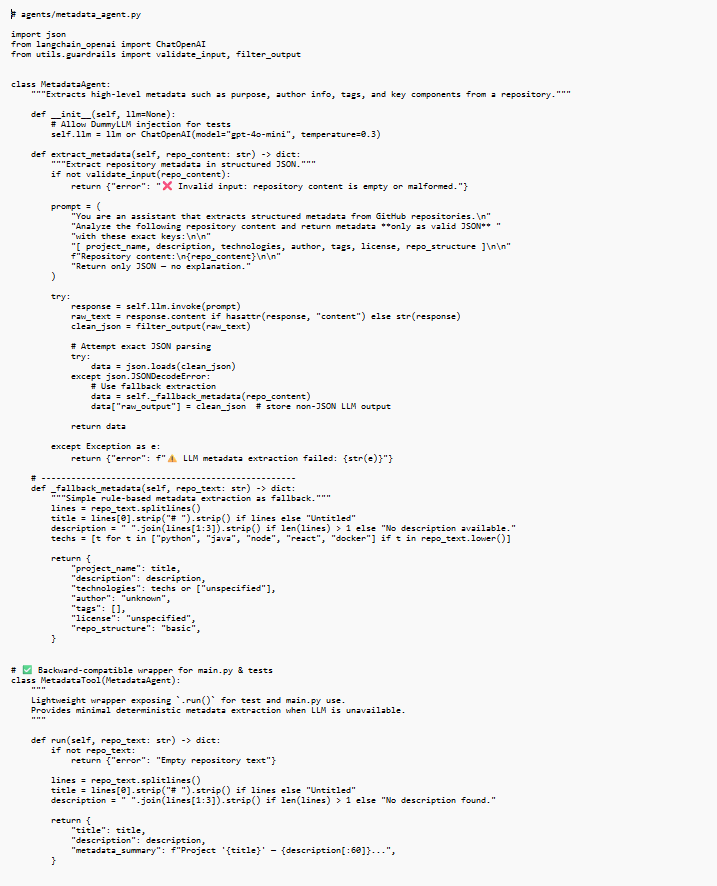

- MetadataAgent (metadata_agent.py)

Purpose: Extract structured, high-level project metadata.

Core Functionality:

class MetadataAgent:
    def extract_metadata(self, repo_content: str) -> dict:
        """
        Extracts:
        - Project name & description
        - Tech stack (languages, frameworks)
        - Dependencies
        - Author information
        - License type
        - Repository structure
        """

Fallback Mechanism:

When LLM parsing fails, the agent uses rule-based extraction:

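def _fallback_metadata(self, repo_text: str) -> dict:
    # Rule-based extraction used when the LLM response cannot be parsed
    lines = repo_text.splitlines()
    # Treat the first line as the title, dropping Markdown heading markers
    title = lines[0].strip("# ").strip() if lines else "Untitled"
    # Naive substring match against a short list of known technologies
    techs = [t for t in ["python", "java", "node"] if t in repo_text.lower()]
    return {
        "project_name": title,
        "technologies": techs or ["unspecified"],
        # ... (remaining fields elided in the original)
    }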
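The parse-then-fall-back flow described above can be sketched as follows. This is a minimal illustration rather than the project's actual code: `parse_metadata` and the standalone `fallback_metadata` are hypothetical names, and the sketch assumes the LLM has been asked to reply with a JSON object.

```python
import json


def fallback_metadata(repo_text: str) -> dict:
    """Rule-based extraction, mirroring the fallback shown above."""
    lines = repo_text.splitlines()
    title = lines[0].strip("# ").strip() if lines else "Untitled"
    techs = [t for t in ["python", "java", "node"] if t in repo_text.lower()]
    return {"project_name": title, "technologies": techs or ["unspecified"]}


def parse_metadata(llm_response: str, repo_text: str) -> dict:
    """Return the LLM's JSON if it parses to a dict, else fall back to rules."""
    try:
        parsed = json.loads(llm_response)
        if isinstance(parsed, dict):
            return parsed
    except json.JSONDecodeError:
        pass  # malformed JSON: use the rule-based path below
    return fallback_metadata(repo_text)


# Usage: a well-formed reply is passed through, a malformed one triggers the fallback
good = parse_metadata('{"project_name": "X"}', "# Demo")
bad = parse_metadata("not json", "# Demo Project\nWritten in Python")
```

Checking that the parsed value is a dict guards against replies that are valid JSON but not an object, such as a bare string or list.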

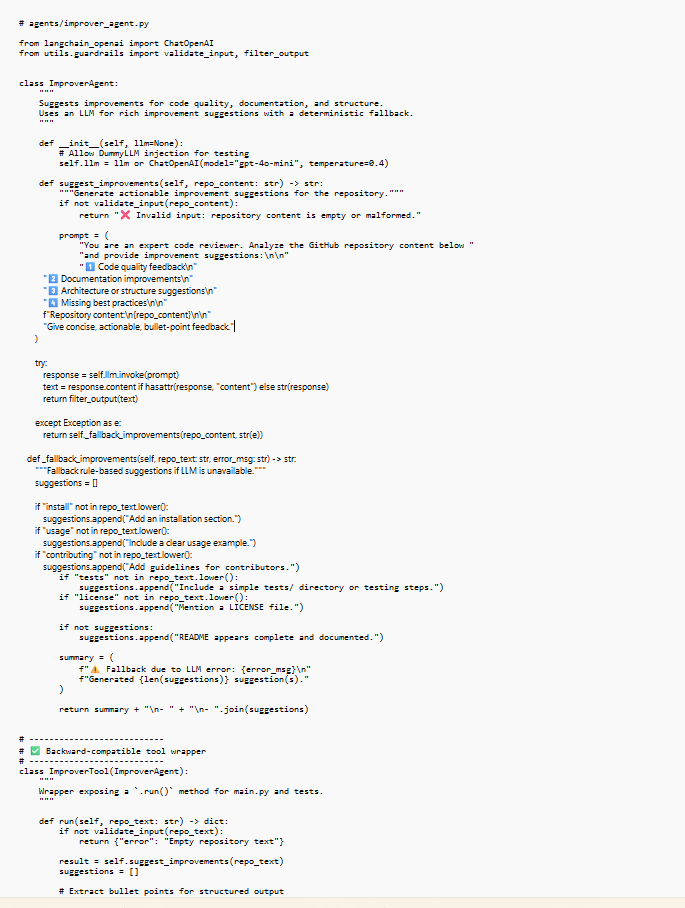

- ImproverAgent (improver_agent.py)

Purpose: Generate actionable improvement suggestions.

Core Functionality:

class ImproverAgent:
    def suggest_improvements(self, repo_content: str) -> str:
        """
        Provides:
        - Code quality enhancements
        - Documentation improvements
        - Architecture recommendations
        - Best practice adherence
        """

Output Example:

Improvement Suggestions:

- Add type annotations across all agent methods

- Refactor repeated code in utils/ into shared functions

- Implement retry mechanisms for LLM API rate-limits

- Create CONTRIBUTING.md for open-source collaboration

- Add pre-commit hooks for linting

🛠️ Tools (tools/)

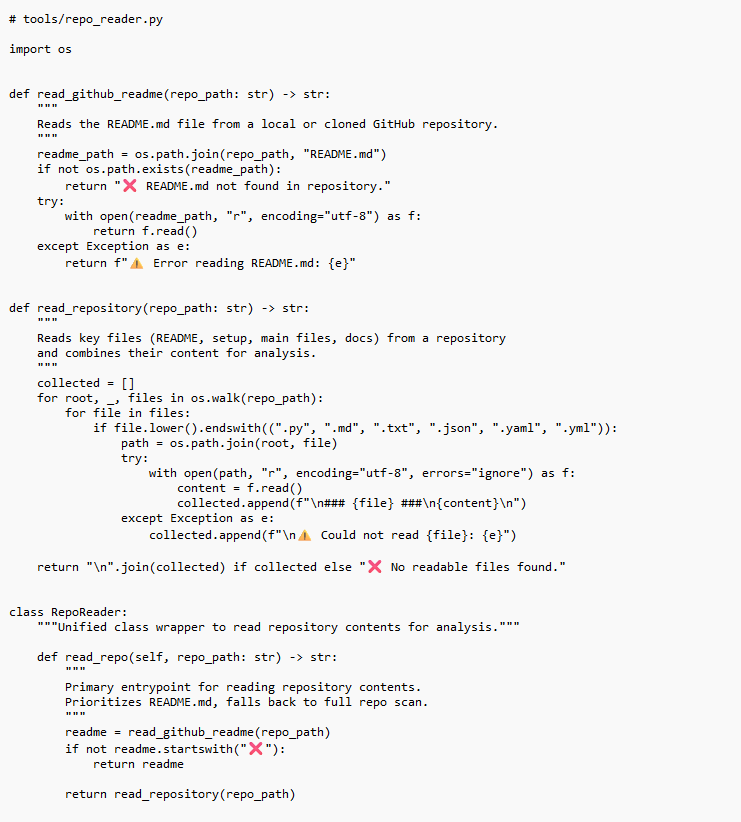

- RepoReader (repo_reader.py)

Purpose: Safely traverse and read repository files.

Key Features:

• Recursive directory traversal

• File type filtering (.py, .md, .json, etc.)

• Size limits to prevent memory overflow

• GitHub README fallback for remote repositories

def read_repository(path: str, max_files: int = 50) -> str:
    """
    Reads repository files and aggregates content.
    Handles:
    - Local directories
    - GitHub URLs (via API)
    - Binary file exclusion
    - Error handling for inaccessible files
    """
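The traversal, filtering, and size limits listed above might look roughly like the sketch below. It covers local directories only (no GitHub access), and `read_local_repository`, `ALLOWED_EXTENSIONS`, and `MAX_FILE_BYTES` are hypothetical names and values chosen for illustration.

```python
import os
import tempfile

ALLOWED_EXTENSIONS = {".py", ".md", ".json"}  # assumed subset of the filtered types
MAX_FILE_BYTES = 100_000  # hypothetical per-file size limit


def read_local_repository(path: str, max_files: int = 50) -> str:
    """Walk a local directory and concatenate the text of allowed files."""
    chunks = []
    for root, _dirs, files in os.walk(path):
        for name in sorted(files):
            if len(chunks) >= max_files:
                return "\n".join(chunks)
            if os.path.splitext(name)[1].lower() not in ALLOWED_EXTENSIONS:
                continue  # skip binaries and other unlisted file types
            full_path = os.path.join(root, name)
            try:
                if os.path.getsize(full_path) > MAX_FILE_BYTES:
                    continue  # skip oversized files
                with open(full_path, encoding="utf-8") as handle:
                    text = handle.read()
            except (OSError, UnicodeDecodeError):
                continue  # skip unreadable or non-text files
            chunks.append(f"--- {os.path.relpath(full_path, path)} ---\n{text}")
    return "\n".join(chunks)


# Usage: build a throwaway directory and read it back
with tempfile.TemporaryDirectory() as demo_dir:
    with open(os.path.join(demo_dir, "app.py"), "w", encoding="utf-8") as f:
        f.write("print('hi')")
    with open(os.path.join(demo_dir, "data.bin"), "wb") as f:
        f.write(b"\x00\x01")
    demo_output = read_local_repository(demo_dir)
    empty_output = read_local_repository(demo_dir, max_files=0)
```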

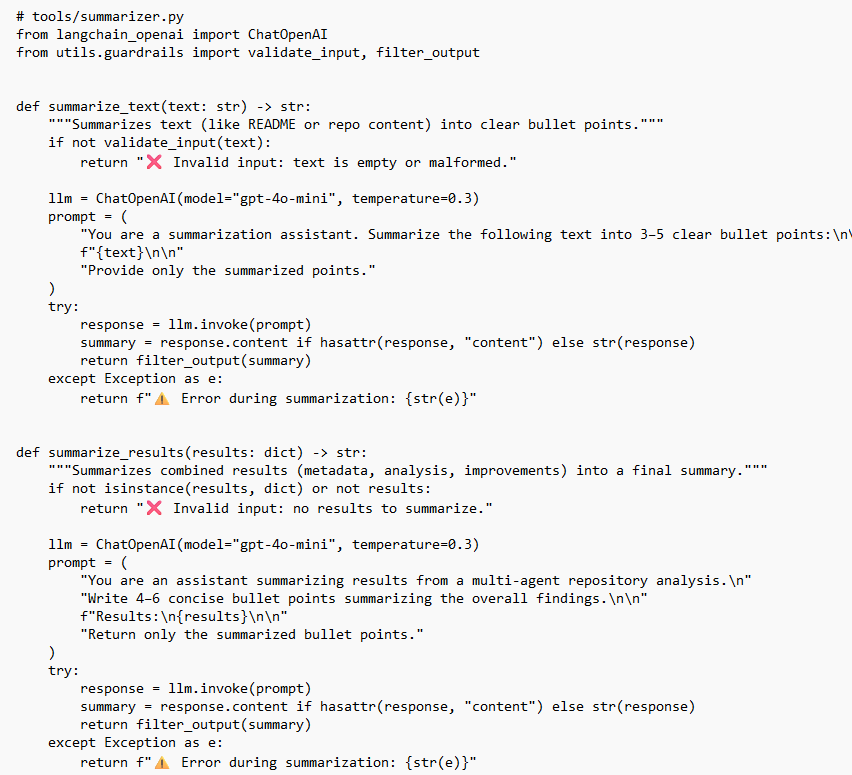

- Summarizer (summarizer.py)

Purpose: Aggregate and condense agent outputs into coherent summaries.

def summarize_results(agent_outputs: dict) -> str:
    """
    Creates unified summary from:
    - AnalyzerAgent findings
    - MetadataAgent extractions
    - ImproverAgent suggestions
    """
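As a rough illustration of the aggregation step, the sketch below joins the three agent outputs into one labelled text block. It is an assumption-laden stand-in: `summarize_plain` is a hypothetical name, and the real summarizer may well use an LLM to condense the outputs rather than plain formatting.

```python
import json


def summarize_plain(agent_outputs: dict) -> str:
    """Join the three agent outputs into one labelled text block (no LLM involved)."""
    sections = []
    for name in ("analysis", "metadata", "improvements"):
        value = agent_outputs.get(name)
        if not value:
            text = "(no output)"  # missing or empty result from this agent
        elif isinstance(value, str):
            text = value
        else:
            text = json.dumps(value, sort_keys=True)  # dicts/lists become stable JSON
        sections.append(f"{name.title()}: {text}")
    return "\n".join(sections)


# Usage: one agent returned text, one a dict, one nothing
demo_summary = summarize_plain({"analysis": "ok", "metadata": {"b": 1, "a": 2}})
```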

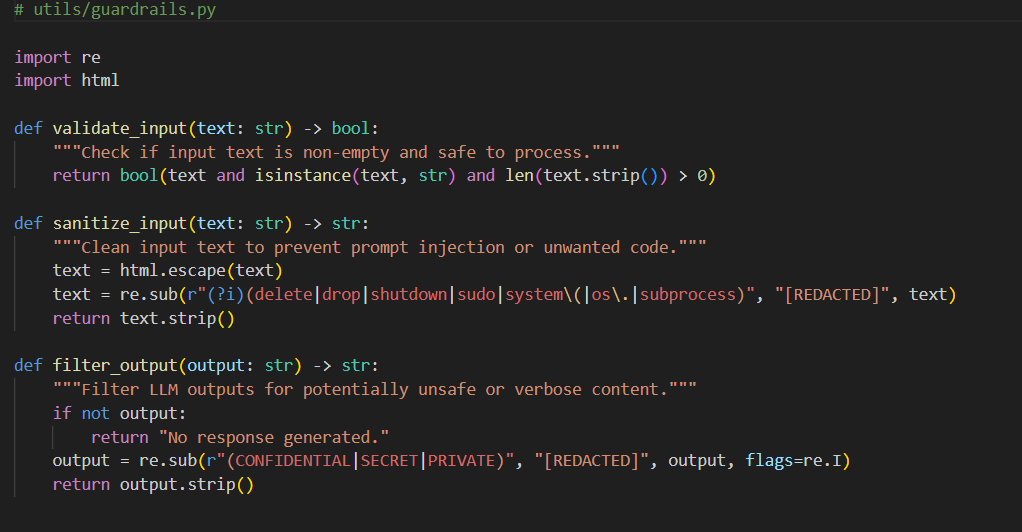

⚙️ Utils (utils/)

- Guardrails (guardrails.py)

Purpose: Input validation and output sanitization for security and reliability.

import html
import re

def validate_input(text: str) -> bool:
    """Ensures input is non-empty and safe to process"""

def sanitize_input(text: str) -> str:
    """Reduces prompt-injection risk by escaping HTML and redacting risky keywords"""
    text = html.escape(text)
    # Case-insensitive redaction of a few destructive command keywords
    text = re.sub(r"(?i)(delete|drop|sudo)", "[REDACTED]", text)
    return text.strip()

def filter_output(output: str) -> str:
    """Redacts sensitive information from LLM responses"""
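The two functions whose bodies are not shown above could be filled in along these lines. This is only a sketch: the size limit and the `SECRET_PATTERNS` list are hypothetical, and real deployments would tune the patterns to the secrets they actually handle.

```python
import re

# Hypothetical patterns for illustration only
SECRET_PATTERNS = [
    re.compile(r"sk-[A-Za-z0-9]{20,}"),  # OpenAI-style API keys
    re.compile(r"(?i)(password|token)\s*[:=]\s*\S+"),  # inline credentials
]


def validate_input(text, max_chars: int = 200_000) -> bool:
    """Accept only non-empty strings under an assumed size limit."""
    return isinstance(text, str) and bool(text.strip()) and len(text) <= max_chars


def filter_output(output: str) -> str:
    """Replace anything matching a secret pattern with a placeholder."""
    for pattern in SECRET_PATTERNS:
        output = pattern.sub("[REDACTED]", output)
    return output


# Usage
redacted_key = filter_output("key sk-" + "a" * 24)
redacted_password = filter_output("password = hunter2 ok")
```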

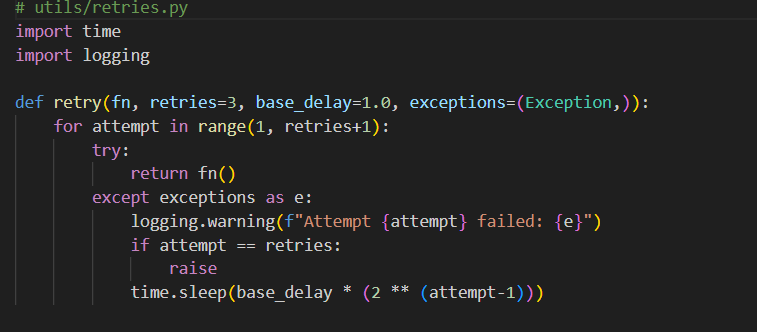

- Retries (retries.py)

Purpose: Fault-tolerant execution with exponential backoff.

def retry(fn, retries=3, base_delay=1.0, exceptions=(Exception,)):
    """
    Retries failed LLM API calls with exponential backoff.
    Critical for handling:
    - Rate limits
    - Temporary network failures
    - LLM service outages
    """
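A minimal sketch of what such a retry helper might do is shown below. It keeps the signature above and adds one hypothetical parameter, `sleep`, so the waiting function can be swapped out in tests; the doubling delay (`base_delay * 2**attempt`) is one common backoff choice, not necessarily the one the project uses.

```python
import time


def retry(fn, retries=3, base_delay=1.0, exceptions=(Exception,), sleep=time.sleep):
    """Call fn(); on failure wait base_delay * 2**attempt, then try again."""
    for attempt in range(retries):
        try:
            return fn()
        except exceptions:
            if attempt == retries - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay * (2 ** attempt))


# Usage: a call that fails twice, then succeeds
calls = {"count": 0}
delays = []  # records the waits instead of actually sleeping


def flaky():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


result = retry(flaky, retries=3, base_delay=0.5, sleep=delays.append)
```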

-

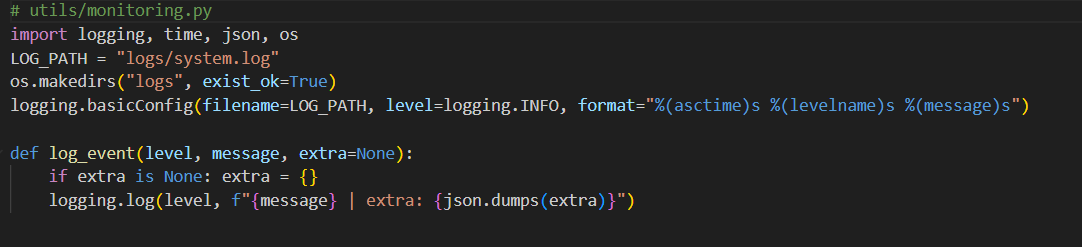

Monitoring (monitoring.py)

Purpose: Structured logging for debugging and observability.

def log_event(level, message, extra=None):
    """
    Logs agent actions, LLM calls, and errors.
    Output: logs/system.log
    """
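One way to get structured log lines is to serialize each event as JSON through the standard `logging` module, as sketched below. The names `make_logger` and `multi_agent_demo` are hypothetical, and unlike the signature above this version takes the logger as an explicit argument. It writes to an in-memory stream for demonstration; swapping in `logging.FileHandler("logs/system.log")` would direct output to a file, assuming the `logs/` directory exists.

```python
import io
import json
import logging


def make_logger(stream) -> logging.Logger:
    """Build a logger that writes 'LEVEL message' lines to the given stream."""
    logger = logging.getLogger("multi_agent_demo")
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers on repeated setup
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
    logger.addHandler(handler)
    logger.propagate = False
    return logger


def log_event(logger, level, message, extra=None):
    """Emit one event as a JSON payload so logs stay machine-readable."""
    payload = {"message": message, **(extra or {})}
    logger.log(level, json.dumps(payload, sort_keys=True))


# Usage
buffer = io.StringIO()
demo_logger = make_logger(buffer)
log_event(demo_logger, logging.INFO, "analysis started", {"agent": "AnalyzerAgent"})
logged_text = buffer.getvalue()
```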

7️⃣ Agentic Architecture

Three Essential Components

According to Google's Agents Whitepaper, every agentic system comprises three core components:

┌─────────────────────────────────────────┐
│             AGENTIC SYSTEM              │
├─────────────────────────────────────────┤
│                                         │
│    ┌───────────────────────────────┐    │
│    │ 1. MODEL (LLM)                │    │
│    │    • Decision-making brain    │    │
│    │    • Task planning            │    │
│    │    • Reasoning frameworks     │    │
│    └───────────────────────────────┘    │
│                                         │
│    ┌───────────────────────────────┐    │
│    │ 2. TOOLS                      │    │
│    │    • APIs                     │    │
│    │    • Databases                │    │
│    │    • External systems         │    │
│    └───────────────────────────────┘    │
│                                         │
│    ┌───────────────────────────────┐    │
│    │ 3. ORCHESTRATION              │    │
│    │    • Workflow coordination    │    │
│    │    • Agent communication      │    │
│    │    • Result aggregation       │    │
│    └───────────────────────────────┘    │
│                                         │
└─────────────────────────────────────────┘

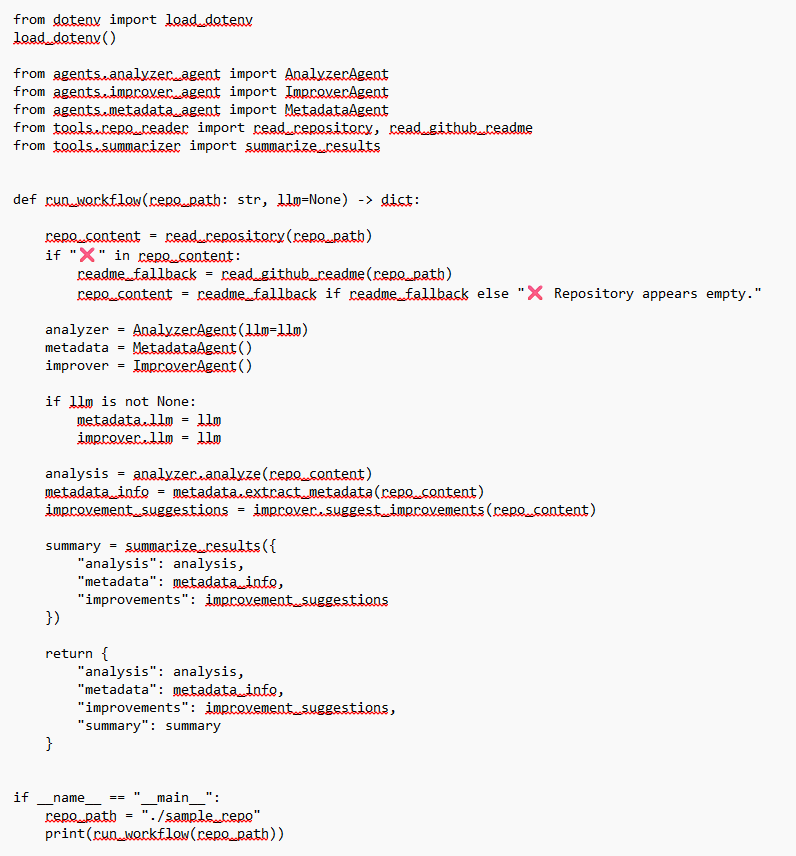

Orchestration (Workflow Coordination)

File: main.py

Orchestration Strategy:

# Import paths follow the project structure shown earlier
from agents.analyzer_agent import AnalyzerAgent
from agents.metadata_agent import MetadataAgent
from agents.improver_agent import ImproverAgent
from tools.repo_reader import read_repository
from tools.summarizer import summarize_results

def run_workflow(repo_path: str, llm=None) -> dict:
    # Step 1: Read repository
    repo_content = read_repository(repo_path)

    # Step 2: Initialize agents
    analyzer = AnalyzerAgent(llm=llm)
    metadata_agent = MetadataAgent(llm=llm)
    improver = ImproverAgent(llm=llm)

    # Step 3: Execute agents sequentially (parallel execution is a future enhancement)
    analysis = analyzer.analyze(repo_content)
    metadata = metadata_agent.extract_metadata(repo_content)
    improvements = improver.suggest_improvements(repo_content)

    # Step 4: Aggregate results
    summary = summarize_results({
        "analysis": analysis,
        "metadata": metadata,
        "improvements": improvements
    })

    return {
        "analysis": analysis,
        "metadata": metadata,
        "improvements": improvements,
        "summary": summary
    }

Orchestration Patterns:

1. Sequential Execution (Current):

RepoReader → AnalyzerAgent → MetadataAgent → ImproverAgent → Summarizer

2. Parallel Execution (Future):

RepoReader → [AnalyzerAgent, MetadataAgent, ImproverAgent] (concurrent) → Summarizer

3. Feedback Loop (Advanced):

AnalyzerAgent → ImproverAgent reviews analysis → AnalyzerAgent refines → Output
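The parallel pattern (2) could be sketched with a thread pool, since LLM calls are I/O-bound and the three agents read the same input without depending on each other. This is an illustrative sketch only: `run_agents_concurrently` is a hypothetical helper, and the lambdas stand in for the real agent methods.

```python
from concurrent.futures import ThreadPoolExecutor


def run_agents_concurrently(repo_content: str, tasks: dict) -> dict:
    """Run each callable in `tasks` on the same content and collect results by name."""
    with ThreadPoolExecutor(max_workers=len(tasks) or 1) as pool:
        futures = {name: pool.submit(fn, repo_content) for name, fn in tasks.items()}
        # .result() blocks until that task finishes and re-raises any exception
        return {name: future.result() for name, future in futures.items()}


# Stand-in callables; in the real system these would be the agents' methods
demo_tasks = {
    "analysis": lambda text: f"analyzed {len(text)} chars",
    "metadata": lambda text: {"technologies": ["python"]},
    "improvements": lambda text: "add tests",
}
demo_results = run_agents_concurrently("print('hi')", demo_tasks)
```

Because results are keyed by task name, the output has the same shape as the sequential version and can be passed to the summarizer unchanged.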

8️⃣ Agentic Patterns Implemented

The system implements multiple proven agentic patterns to enhance performance and reliability.

Pattern 1: Tool Use Pattern

Description: Agents access external tools to fetch information beyond the LLM's knowledge.

Implementation:

class AnalyzerAgent:
    def __init__(self, llm, tools):
        self.llm = llm
        self.tools = tools  # e.g. {"repo_reader": ..., "web_search": ...}

    def analyze(self, repo_path):
        # Tool usage: fetch repository content through the injected tool
        repo_content = self.tools["repo_reader"](repo_path)
        # LLM reasoning over the fetched content
        analysis = self.llm.invoke(f"Analyze: {repo_content}")
        return analysis

Workflow:

┌──────────────┐
│  User Query  │
└──────┬───────┘
       │
       ▼
┌──────────────┐     ┌─────────────┐
│    Agent     │────▶│   Tool 1    │
│              │     │ (RepoReader)│
└──────┬───────┘     └─────────────┘
       │
       │             ┌─────────────┐
       └────────────▶│   Tool 2    │
                     │ (WebSearch) │
                     └─────────────┘

Figure 5: Tool Use Pattern

Pattern 2: Reflection Pattern

Description: Agents iteratively improve outputs through self-critique.

def analyze_with_reflection(self, repo_content):
    # Initial analysis
    draft_analysis = self.llm.invoke(f"Analyze: {repo_content}")

    # Self-reflection
    critique = self.llm.invoke(
        f"Review this analysis and identify weaknesses: {draft_analysis}"
    )

    # Refinement: pass the draft along with the critique so the model has something to revise
    final_analysis = self.llm.invoke(
        f"Improve this analysis: {draft_analysis}\n"
        f"Based on this critique: {critique}"
    )
    return final_analysis

Workflow:

Generate → Reflect → Improve → Generate v2 → Reflect → ... → Final Output
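The repeated generate-reflect-improve cycle in this workflow can be sketched as a bounded loop. The sketch below is illustrative only: `reflect_and_refine` is a hypothetical helper, the "no weaknesses" stop phrase is an assumed convention, and `scripted_llm` is a stand-in that returns canned replies so the loop can run offline.

```python
def reflect_and_refine(llm_call, repo_content: str, max_rounds: int = 2) -> str:
    """Draft an analysis, then critique and revise it up to max_rounds times."""
    draft = llm_call(f"Analyze: {repo_content}")
    for _ in range(max_rounds):
        critique = llm_call(f"Review this analysis and identify weaknesses: {draft}")
        if "no weaknesses" in critique.lower():
            break  # assumed stop signal: the critic is satisfied
        draft = llm_call(
            f"Improve the analysis.\nAnalysis: {draft}\nCritique: {critique}"
        )
    return draft


def scripted_llm(prompt: str) -> str:
    """Stand-in for a real model: returns canned replies based on the prompt."""
    if prompt.startswith("Analyze:"):
        return "draft v1"
    if prompt.startswith("Review"):
        return "No weaknesses found" if "v2" in prompt else "Too vague"
    return "draft v2"


final = reflect_and_refine(scripted_llm, "print('hi')")
```

Capping the number of rounds keeps cost predictable, since each round adds two LLM calls.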

Pattern 3: Multi-Agent Collaboration

Description: Specialized agents work together, each handling a specific subtask.

Current Implementation:

AnalyzerAgent (Structure & Issues)

+

MetadataAgent (Metadata Extraction)

+

ImproverAgent (Suggestions)

↓

Orchestrator (Aggregation)

Collaboration Strategy:

• Shared Context: All agents analyze the same repo_content

• Complementary Roles: Each focuses on a distinct aspect

• No Circular Dependencies: Sequential execution prevents deadlocks

9️⃣ Agent Workflows

High-Level Workflow

┌─────────────────────────────────────────────────────────┐
│                    USER INTERACTION                     │
│                  (Streamlit UI or CLI)                  │
└────────────────────────────┬────────────────────────────┘
                             │
                             ▼
              ┌─────────────────────────────┐
              │ INPUT: Repository Path      │
              │ • Local: ./my-repo          │
              │ • Remote: github.com/...    │
              └──────────────┬──────────────┘
                             │
                             ▼
              ┌─────────────────────────────┐
              │ ORCHESTRATOR (main.py)      │
              │ • Validates input           │
              │ • Initializes agents        │
              │ • Manages workflow          │
              └──────────────┬──────────────┘
                             │
              ┌──────────────┴───────────────┐
              │                              │
              ▼                              ▼
     ┌────────────────┐              ┌────────────────┐
     │ TOOL: Repo     │              │ GUARDRAILS     │
     │       Reader   │              │ • Validate     │
     │ • Traverse     │              │ • Sanitize     │
     │ • Read files   │              │                │
     └────────┬───────┘              └────────────────┘
              │
              ▼
      ┌─────────────────────────────────────────────┐
      │            AGENT EXECUTION LAYER            │
      ├─────────────────────────────────────────────┤
      │                                             │
      │   ┌─────────────────────────────────────┐   │
      │   │ ANALYZER AGENT                      │   │
      │   │ • Analyzes structure                │   │
      │   │ • Detects issues                    │   │
      │   │ • Identifies gaps                   │   │
      │   └──────────────────┬──────────────────┘   │
      │                      │                      │
      │   ┌──────────────────▼──────────────────┐   │
      │   │ METADATA AGENT                      │   │
      │   │ • Extracts tech stack               │   │
      │   │ • Identifies structure              │   │
      │   │ • Parses dependencies               │   │
      │   └──────────────────┬──────────────────┘   │
      │                      │                      │
      │   ┌──────────────────▼──────────────────┐   │
      │   │ IMPROVER AGENT                      │   │
      │   │ • Suggests code improvements        │   │
      │   │ • Recommends best practices         │   │
      │   │ • Identifies missing docs           │   │
      │   └──────────────────┬──────────────────┘   │
      │                      │                      │
      └──────────────────────┬──────────────────────┘
                             │
                             ▼
                  ┌─────────────────────┐
                  │ SUMMARIZER          │
                  │ • Aggregates        │
                  │ • Formats output    │
                  └──────────┬──────────┘
                             │
                             ▼
                  ┌─────────────────────┐
                  │ MONITORING          │
                  │ • Logs events       │
                  │ • Tracks metrics    │
                  └──────────┬──────────┘
                             │
                             ▼
      ┌─────────────────────────────────────────────┐
      │ STRUCTURED OUTPUT                           │
      │ {                                           │
      │   "analysis": {...},                        │
      │   "metadata": {...},                        │
      │   "improvements": {...},                    │
      │   "summary": "..."                          │
      │ }                                           │
      └─────────────────────────────────────────────┘

Figure 6: Complete System Workflow

Detailed Execution Flow

Step 1: Input Handling

# User provides repository path
repo_path = "./sample_repo"  # or GitHub URL

# Orchestrator validates and reads
repo_content = read_repository(repo_path)
if "❌" in repo_content:
    # Fallback to GitHub README
    repo_content = read_github_readme(repo_path)

Step 2: Agent Initialization

# Initialize with optional custom LLM (useful for testing)
analyzer = AnalyzerAgent(llm=custom_llm)
metadata_agent = MetadataAgent(llm=custom_llm)
improver = ImproverAgent(llm=custom_llm)

Step 3: Agent Execution (currently sequential; parallel execution is planned)

# Each agent processes the same repo_content independently
analysis_result = analyzer.analyze(repo_content)
metadata_result = metadata_agent.extract_metadata(repo_content)
improvement_result = improver.suggest_improvements(repo_content)

Step 4: Result Aggregation

summary = summarize_results({
    "analysis": analysis_result,
    "metadata": metadata_result,
    "improvements": improvement_result
})

Step 5: Output Delivery

# Return structured JSON
return {
    "analysis": analysis_result,
    "metadata": metadata_result,
    "improvements": improvement_result,
    "summary": summary
}

🔟 Implementation Guide

Prerequisites

Technical Requirements:

• Python: 3.10 or higher

• RAM: Minimum 4GB, recommended 8GB

• Disk Space: ~1GB for dependencies and logs

• Internet: Required for LLM API calls

API Requirements:

• OpenAI API Key (get from platform.openai.com)

Installation Steps

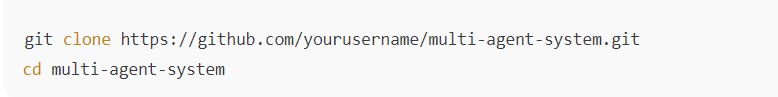

Step 1: Clone the Repository

Setting up the environment

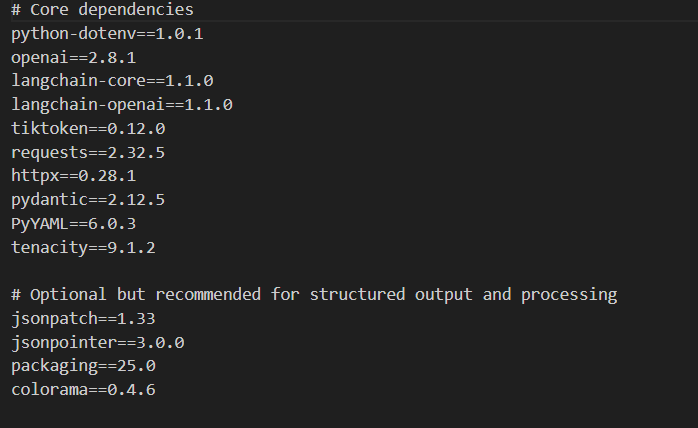

Create a new virtual environment and install the dependencies using the requirements.txt file.

requirements.txt

.env

Create a .env file and add your API key.

Security Best Practice:

Add to .gitignore

echo ".env" >> .gitignore
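Since the key is read from the environment, it can help to fail early with a clear message when it is missing. The sketch below is an assumption-based illustration: `require_env` is a hypothetical helper, `OPENAI_API_KEY` is assumed to be the variable name (it is the name OpenAI's client reads by default), and loading the .env file into the process environment (for example with python-dotenv) is assumed to have happened beforehand.

```python
import os


def require_env(name: str, environ=None) -> str:
    """Return the named variable, or raise a clear error if it is missing or blank."""
    env = os.environ if environ is None else environ
    value = env.get(name, "").strip()
    if not value:
        raise RuntimeError(f"Missing required environment variable: {name}")
    return value


# Usage with an explicit mapping, so the example does not depend on the real environment
api_key = require_env("OPENAI_API_KEY", {"OPENAI_API_KEY": " sk-test "})

try:
    require_env("OPENAI_API_KEY", {})
    missing_detected = False
except RuntimeError:
    missing_detected = True
```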

Running the System

Option 1: Command-Line Interface (CLI)

Basic Usage:

python main.py

This analyzes the default ./sample_repo directory.

Analyze Custom Repository:

# Modify main.py
import json

if __name__ == "__main__":
    repo_path = "./your-custom-repo"  # Change this
    result = run_workflow(repo_path)
    print(json.dumps(result, indent=2))

Output:

{
  "analysis": {
    "project_overview": "Python-based CLI tool for data processing...",
    "detected_issues": [
      "Missing error handling in data_loader.py",
      "No unit tests for core modules"
    ],
    "documentation_gaps": [
      "No API documentation",
      "Installation steps unclear"
    ]
  },
  "metadata": {
    "project_name": "DataPro",
    "technologies": ["python", "pandas", "numpy"],
    "author": "unknown",
    "license": "MIT"
  },
  "improvements": {
    "suggestions": [
      "Add type hints to all functions",
      "Implement comprehensive error handling",
      "Create tests/ directory with pytest",
      "Add CONTRIBUTING.md"
    ]
  },
  "summary": "DataPro is a Python CLI tool with strong data processing capabilities but lacks comprehensive testing and documentation."
}


- Environment Setup

Your .env file should look like:

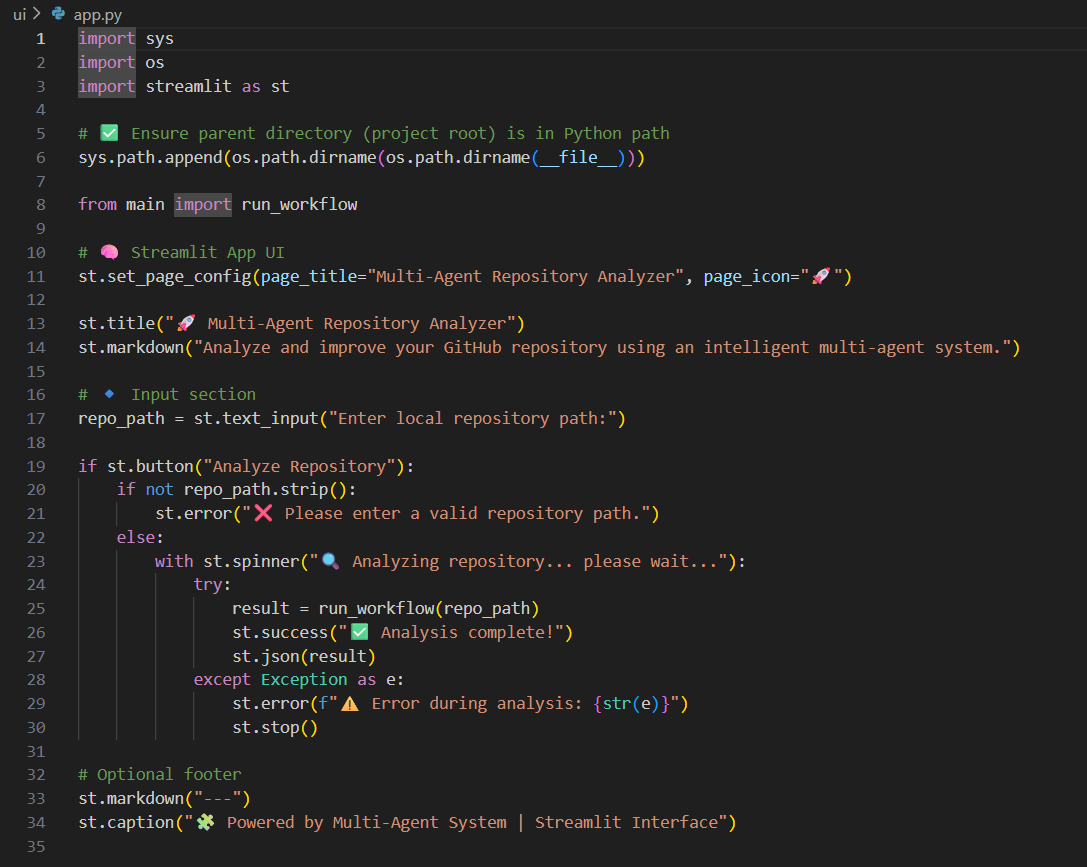

🖥 ui/ — Streamlit User Interface

The UI layer provides an interactive interface for executing the multi-agent workflow.

• app.py

Allows users to upload repositories, trigger analysis, and view structured outputs in real time.

This component demonstrates end-user accessibility and practical applicability of the agentic system.

app.py

This is the main entry point of the application built in Streamlit. It uses requests to interact with the FastAPI endpoints at the backend.

BACKEND

🚀 main.py — Orchestration Entry Point

The main execution file initializes agents, invokes tools, coordinates agent interactions, and aggregates results into a unified output. It acts as the central orchestrator for the multi-agent workflow.

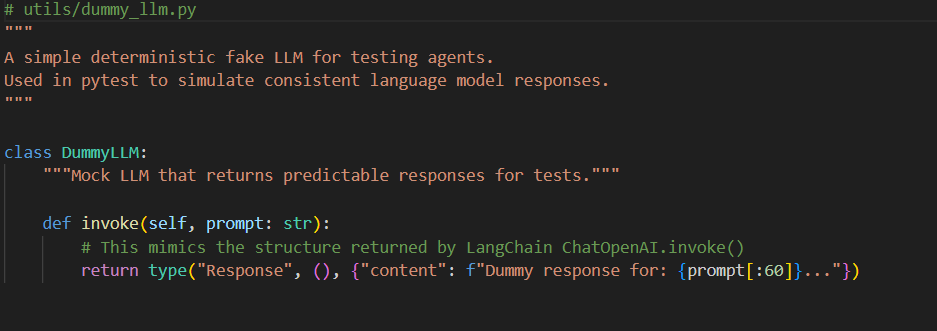

🧩 utils/ — Reliability, Safety, and Observability

This directory contains cross-cutting utilities that improve system robustness and production readiness.

• Guardrails (guardrails.py) – Input and output validation

• Retries (retries.py) – Fault-tolerant retry mechanisms

• Monitoring (monitoring.py) – Logging and execution tracking

• Dummy LLMs (dummy_llm.py, your_dummy_llm.py) – Mock models for offline testing and validation

These utilities ensure the system remains stable, debuggable, and resilient under failure scenarios.
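A mock model for offline testing can be very small. The sketch below is an assumption about what such a stand-in might look like, not the contents of dummy_llm.py: it mimics the `invoke(prompt)` interface used by the agents and returns an object with a `.content` attribute, as LangChain chat models do.

```python
from types import SimpleNamespace


class DummyLLM:
    """Offline stand-in that mimics an `invoke(prompt)` interface."""

    def __init__(self, canned_response: str = '{"project_name": "demo"}'):
        self.canned_response = canned_response
        self.prompts = []  # recorded so tests can inspect what was sent

    def invoke(self, prompt: str):
        self.prompts.append(prompt)
        # Return a message-like object exposing the reply text as `.content`
        return SimpleNamespace(content=self.canned_response)


# Usage: no network call or API key is needed
llm = DummyLLM("looks fine")
reply = llm.invoke("Analyze: print('hi')")
```

Passing an instance like this through the agents' `llm` parameter lets the workflow run deterministically in tests.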

--> utils

dummy_llm.py

guardrails.py

monitoring.py

retries.py

📁 Directory Breakdown

🧠 agents/

Contains autonomous, role-based AI agents, each responsible for a specific reasoning task.

• analyzer_agent.py

◦ Analyzes repository structure and source code

◦ Detects architectural issues, gaps, and inconsistencies

• improver_agent.py

◦ Suggests enhancements for code quality, structure, and documentation

◦ Focuses on best practices and maintainability improvements

• metadata_agent.py

◦ Extracts high-level project metadata

◦ Identifies dependencies, configuration patterns, and repository context

This folder demonstrates agent specialization and collaborative intelligence, a core principle of agentic AI systems.

--> agents

analyzer_agent.py

improver_agent.py

metadata_agent.py

🛠 tools/

Reusable tools that agents rely on to interact with external data and repositories.

• repo_reader.py

o Reads repository files and directories

o Handles file traversal and safe access

• summarizer.py

o Condenses agent outputs into structured summaries

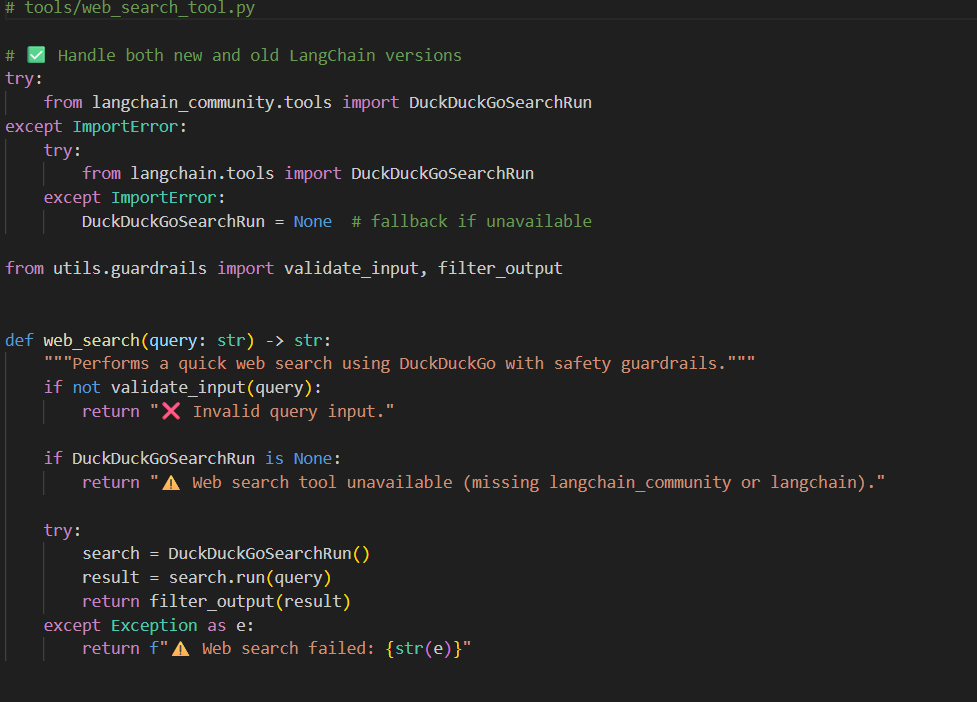

• web_search_tool.py

o Supports external knowledge lookup when enabled

o Enhances reasoning with contextual information

--> tools

repo_reader.py

summarizer.py

web_search_tool.py

🧪 sample_repo/ — Demonstration & Validation Repository

A controlled example repository used to test and validate agent behavior without impacting real-world projects. This enables repeatable evaluation during certification review.

📦 Supporting Infrastructure

• tests/ – Unit and integration tests

• config/ – Configuration files

• logs/ – Runtime logs and monitoring output

• requirements.txt – Dependency management

• .env – Environment variable configuration

• README.md – Documentation and usage guide

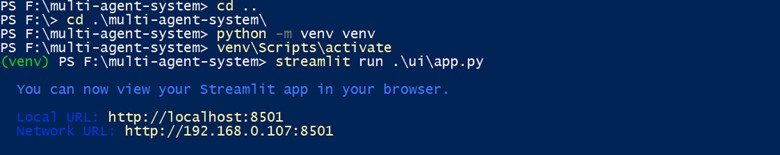

- How to Run the System

🟢 Option 1 — Run Multi-Agent Workflow

🟣 Option 2 — Run Streamlit UI

Screenshot Placeholder:

┌─────────────────────────────────────────────┐
│ 🚀 Multi-Agent Repository Analyzer          │
├─────────────────────────────────────────────┤
│                                             │
│ Enter local repository path:                │
│  ┌─────────────────────────────────────┐    │
│  │ ./sample_repo                       │    │
│  └─────────────────────────────────────┘    │
│                                             │
│           [ Analyze Repository ]            │
│                                             │
├─────────────────────────────────────────────┤
│ ✅ Analysis complete!                       │
│                                             │
│ 📊 Results:                                 │
│ {                                           │
│   "analysis": {...},                        │
│   "metadata": {...},                        │
│   ...                                       │
│ }                                           │
└─────────────────────────────────────────────┘

Streamlit Web Interface

Launch UI:

cd ui

streamlit run app.py

Access:

Open browser at http://localhost:8501

UI Features:

📂 Input repository path (local or GitHub URL)

🚀 Click "Analyze Repository"

📊 View structured results in JSON format

📥 Download report as JSON

- Example Output

JSON OUTPUT:

{
  "analysis": {
    "project_overview": "The repository appears to contain a Python-based multi-agent system designed for automated code analysis, metadata extraction, and improvement recommendations. It centers around modular agents (AnalyzerAgent, MetadataAgent, ImproverAgent) coordinated through a main orchestrator script. The system relies on OpenAI models via LangChain and is capable of reading repositories, analyzing structure, and generating insights.",
    "detected_issues": [
      "README.md missing or incomplete in the repository",
      "Inconsistent naming conventions across certain Python modules",
      "No comprehensive documentation for agents behavior and expected outputs",
      "Lack of structured logs in some parts of the system",
      "Missing tests for edge cases in MetadataAgent and AnalyzerAgent",
      "Environment variables not validated before model initialization"
    ],
    "documentation_gaps": [
      "Project goal not clearly described",
      "Missing installation instructions for beginners",
      "No explanation of the multi-agent architecture",
      "No usage examples or input/output samples",
      "No explanation of how .env variables influence workflow"
    ]
  },
  "metadata": {
    "project_name": "Multi-Agent System Analyzer",
    "description": "A modular multi-agent system built using Python, LangChain, and OpenAI models to automatically analyze repository contents, extract metadata, and generate structured recommendations.",
    "technologies": [
      "Python",
      "LangChain",
      "OpenAI GPT-4o-mini",
      "Streamlit (optional UI)",
      "dotenv",
      "Logging utilities"
    ],
    "author": "Unknown (not specified in repository)",
    "tags": [
      "multi-agent",
      "AI agents",
      "repository-analysis",
      "automation",
      "langchain"
    ],
    "license": "MIT (or unspecified)",
    "repo_structure": {
      "root_files": [
        "main.py",
        "requirements.txt",
        "README.md",
        ".env"
      ],
      "directories": [
        "agents/",
        "tools/",
        "utils/",
        "ui/",
        "tests/",
        "logs/"
      ],
      "agents": {
        "AnalyzerAgent": "Performs repository content analysis and summarizes issues.",
        "MetadataAgent": "Extracts structured metadata from README or repo content.",
        "ImproverAgent": "Generates improvements and best-practice recommendations."
      },
      "raw_output": "{... full extracted JSON from model ...}"
    }
  },
  "improvements": {
    "code_quality": [
      "Refactor repeated code into utility functions under utils/",
      "Add type annotations across all agent methods",
      "Use consistent snake_case naming convention",
      "Improve exception handling around LLM API interactions",
      "Introduce retry mechanisms for rate-limits"
    ],
    "documentation": [
      "Add comprehensive README.md including architecture diagram",
      "Document each agent class with docstrings",
      "Add examples of input and output in README",
      "Include troubleshooting steps for API issues",
      "Provide clear environment configuration guide"
    ],
    "architecture": [
      "Create dedicated Orchestrator class instead of mixing logic in main.py",
      "Add agent registry for easier addition/removal of new agents",
      "Introduce message bus or event system for cleaner communication",
      "Implement caching layer for repeated LLM calls"
    ],
    "best_practices": [
      "Use .env.example template for sharing environment variables",
      "Create CONTRIBUTING.md for open-source collaboration",
      "Set up GitHub Actions for tests and lint checks",
      "Add pre-commit hooks to enforce formatting and linting",
      "Use semantic versioning for future releases"
    ]
  },
  "summary": {
    "highlights": [
      "The repository implements a multi-agent workflow using LangChain and OpenAI.",
      "Agents collaborate to analyze code, extract metadata, and suggest improvements.",
      "Documentation is the most critical missing component.",
      "Architecture can be enhanced with better modularization and agent orchestration."
    ],
    "overall_quality_score": 7.2,
    "recommendation": "Add full documentation, improve consistency across modules, and enhance architecture for scalability."
  }
}

- Architecture Overview

🧠 Multi-Agent Structure

• Central Orchestrator coordinates agent execution

• Agents operate autonomously but share structured context

• Outputs are normalized into JSON schemas

🧩 Key Concepts

• Role-specialized agents

• Shared memory

• LLM-driven reasoning

• Fault-tolerant pipelines

• Structured outputs

🔐 Resilience, Observability & Reliability

To enhance production readiness, the system incorporates or recommends:

• Retry mechanisms for LLM API failures

• Timeout handling for long-running agent tasks

• Structured logging for agent decisions

• Graceful fallback agents to prevent workflow failure

• Clear error messaging for configuration issues

These features ensure stability, debuggability, and long-term maintainability.

✨ Features

✔ Multi-Agent Reasoning Pipeline

✔ Automated Metadata Extraction

✔ Code Quality & Architecture Analysis

✔ Documentation Gap Detection

✔ Streamlit-Based Interactive UI

✔ Exportable JSON Reports

✔ Robust Error Handling

🚀 Future Enhancements

• Agent memory persistence

• Plugin-based agent registry

• Caching layer for repeated LLM calls

• GitHub Actions integration

• Multi-repo batch analysis

📝 License

This project is licensed under the MIT License.

MIT License

Copyright (c) 2025 Prantesh Dahikar

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software...

🙏 Acknowledgments

Special thanks to:

• LangChain Team for the powerful agent framework

• OpenAI for GPT-4o-mini and vision models

• Streamlit for the intuitive UI framework

• Open-source community for inspiration and contributions

📞 Contact & Support

Author: Prantesh Dahikar

Email: pdahikar9@gmail.com

GitHub: https://github.com/pranteshdahikar/multi-agent-system