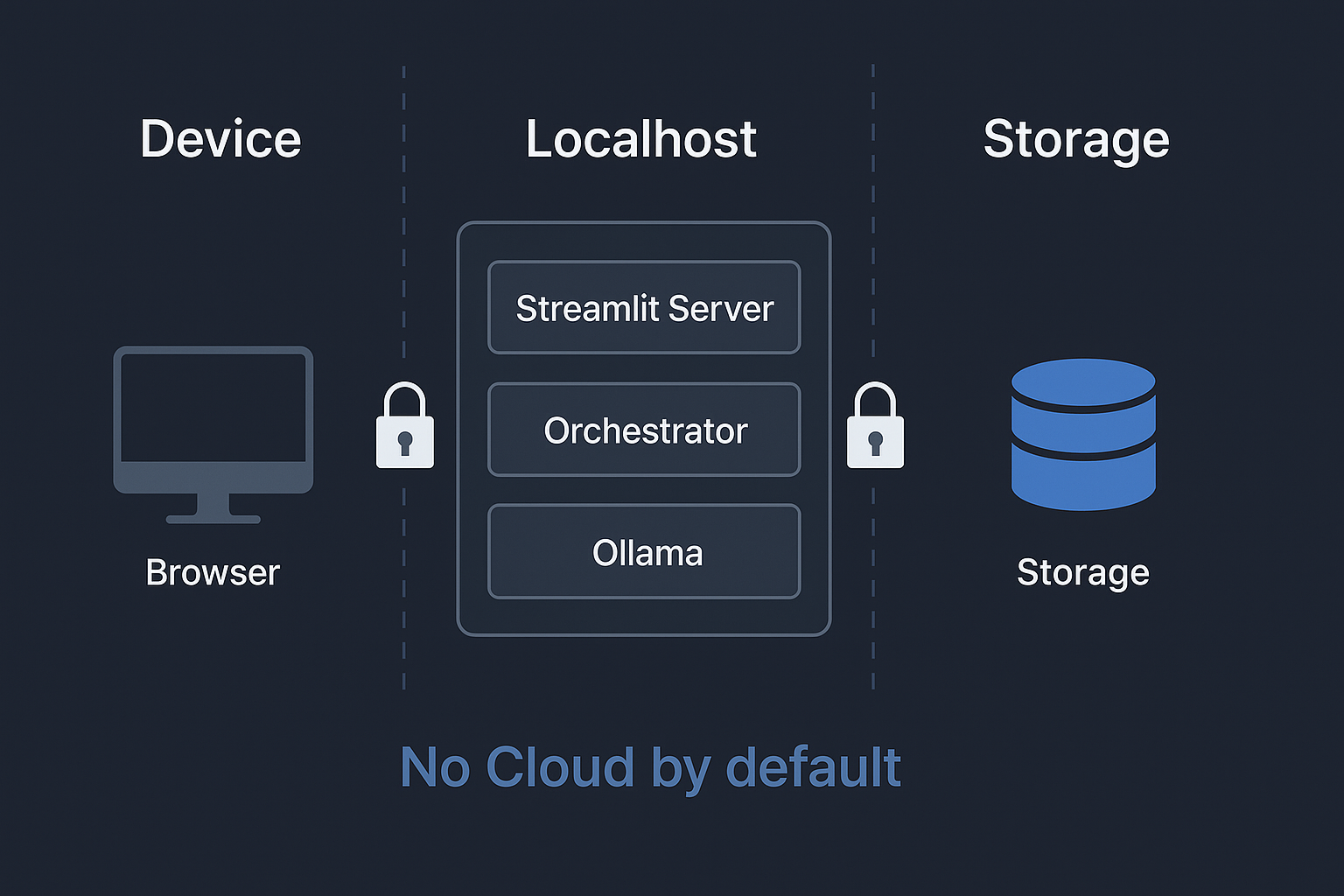

In an age where content creation is both abundant and algorithm-driven, the demand for authentic, high-quality, and privacy-conscious editorial workflows has never been greater. Traditional AI tools often rely on cloud APIs that compromise privacy, incur usage costs, or introduce latency. In contrast, our Content Creation Multi-Agent System introduces a novel, local-first architecture that needs no cloud LLM APIs. It generates articles autonomously from start to finish, with all model inference running on your own machine.

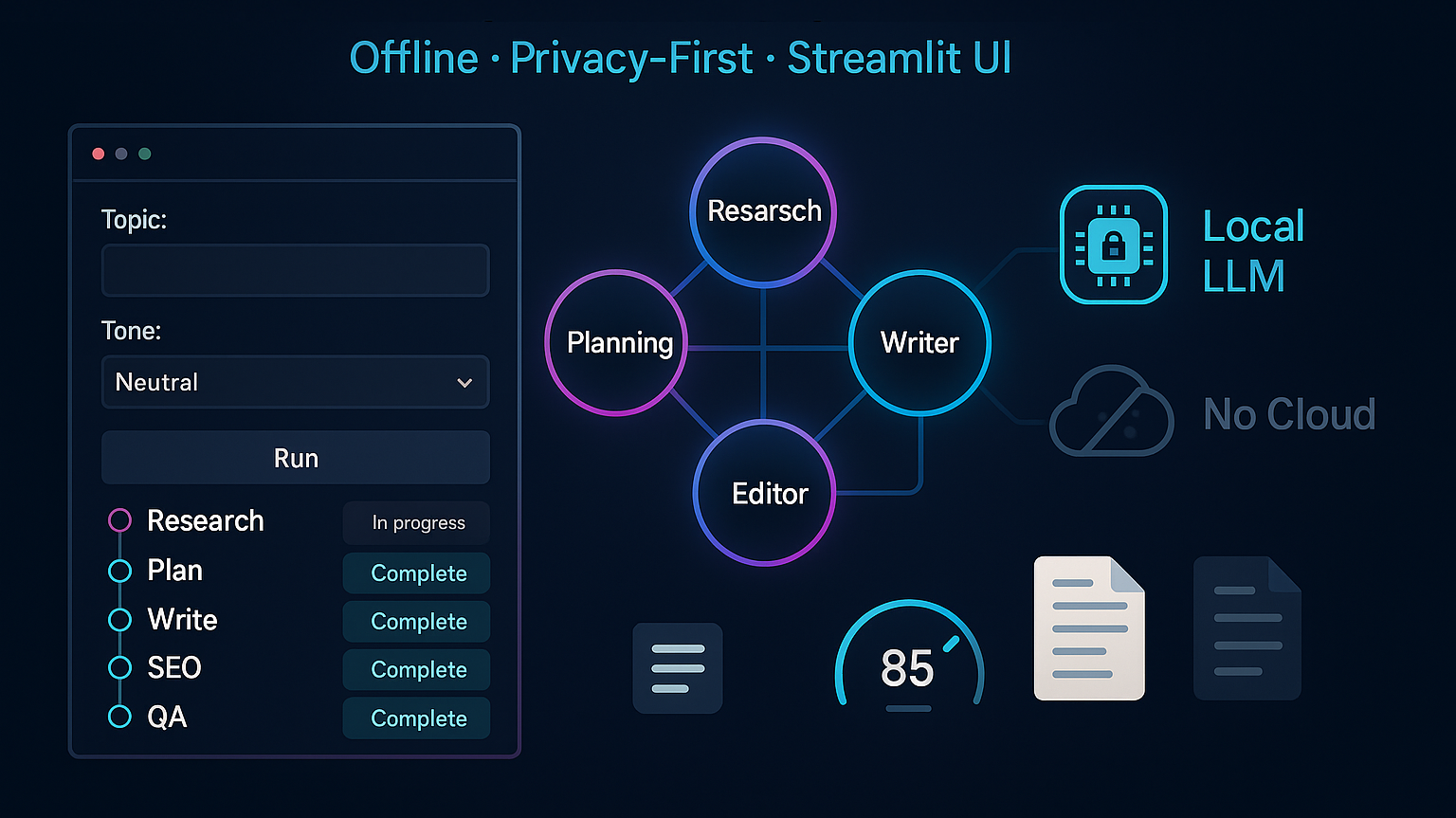

The Content Creation Multi-Agent System is a fully local, privacy-first AI orchestration pipeline designed to automate high-quality content generation. It builds on LangGraph and local Ollama LLMs to coordinate a team of specialized AI agents, from research to quality assurance. Together they produce SEO-optimized articles without sending prompts or drafts to cloud LLM APIs.

This system relies on a collaborative pipeline of specialized agents, each responsible for a distinct phase in the content lifecycle. These phases are web research, drafting a coherent article, SEO optimization, and quality checks. LangGraph's state management coordinates the agents, and they run on your machine using local Ollama models.

Highlights:

This project is an updated version of the following project: https://app.readytensor.ai/publications/content-creation-multi-agent-system-KIOS1uHdGJUw

The new version is a production‑ready release with a Streamlit web UI and several enhancements:

| Capability | Previous Project | New (Streamlit Edition) |
|---|---|---|
| UI | None | Streamlit dashboard with live status & preview |
| Inference | Local Ollama | Local Ollama + optional tools |
| Pipeline | Multi‑agent (basic) | Hardened 6‑agent pipeline w/ retries & guards |
| Reproducibility | Partial | Pinned deps, temperature/seed control, run metadata |
| Quality Checks | Basic | Readability, SEO score, structure & keyword coverage |
| Usage Modes | CLI | CLI + Python API + Streamlit UI |
| Outputs | Markdown only | Markdown + artifacts + logs |
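The table mentions a hardened pipeline "w/ retries & guards". Below is a minimal sketch of what a retry-and-guard wrapper around one agent step could look like. It assumes each agent is a plain callable that takes and returns a state dict. The names `with_retries`, `max_attempts` and `base_delay` are hypothetical and not the project's API.

```python
import time
from typing import Any, Callable, Dict, Optional

State = Dict[str, Any]
AgentStep = Callable[[State], State]


def with_retries(step: AgentStep, max_attempts: int = 3, base_delay: float = 0.5) -> AgentStep:
    """Wrap an agent step with retries, exponential backoff, and an output guard.

    Hypothetical helper for illustration; not the project's actual API.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be >= 1")
    name = getattr(step, "__name__", "step")

    def guarded(state: State) -> State:
        last_error: Optional[Exception] = None
        for attempt in range(1, max_attempts + 1):
            try:
                result = step(state)
                # Guard: the next agent expects a dict, so a malformed result counts as a failure.
                if not isinstance(result, dict):
                    raise TypeError(f"{name} returned {type(result).__name__}, expected dict")
                return result
            except Exception as exc:  # LLM, network, and parsing errors all trigger a retry
                last_error = exc
                if attempt < max_attempts:
                    time.sleep(base_delay * 2 ** (attempt - 1))  # 0.5s, 1s, 2s, ... with defaults
        raise RuntimeError(f"{name} failed after {max_attempts} attempts") from last_error

    guarded.__name__ = f"guarded_{name}"
    return guarded
```

Retrying on a malformed result is a deliberate choice in this sketch. Local LLM output is non-deterministic, so a second attempt often succeeds where the first did not.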

The Content Creation Multi-Agent System is built on a foundational belief: AI content generation should be trustworthy, reproducible, and under the user’s full control. This philosophy drives every component of its architecture—from model execution to task orchestration.

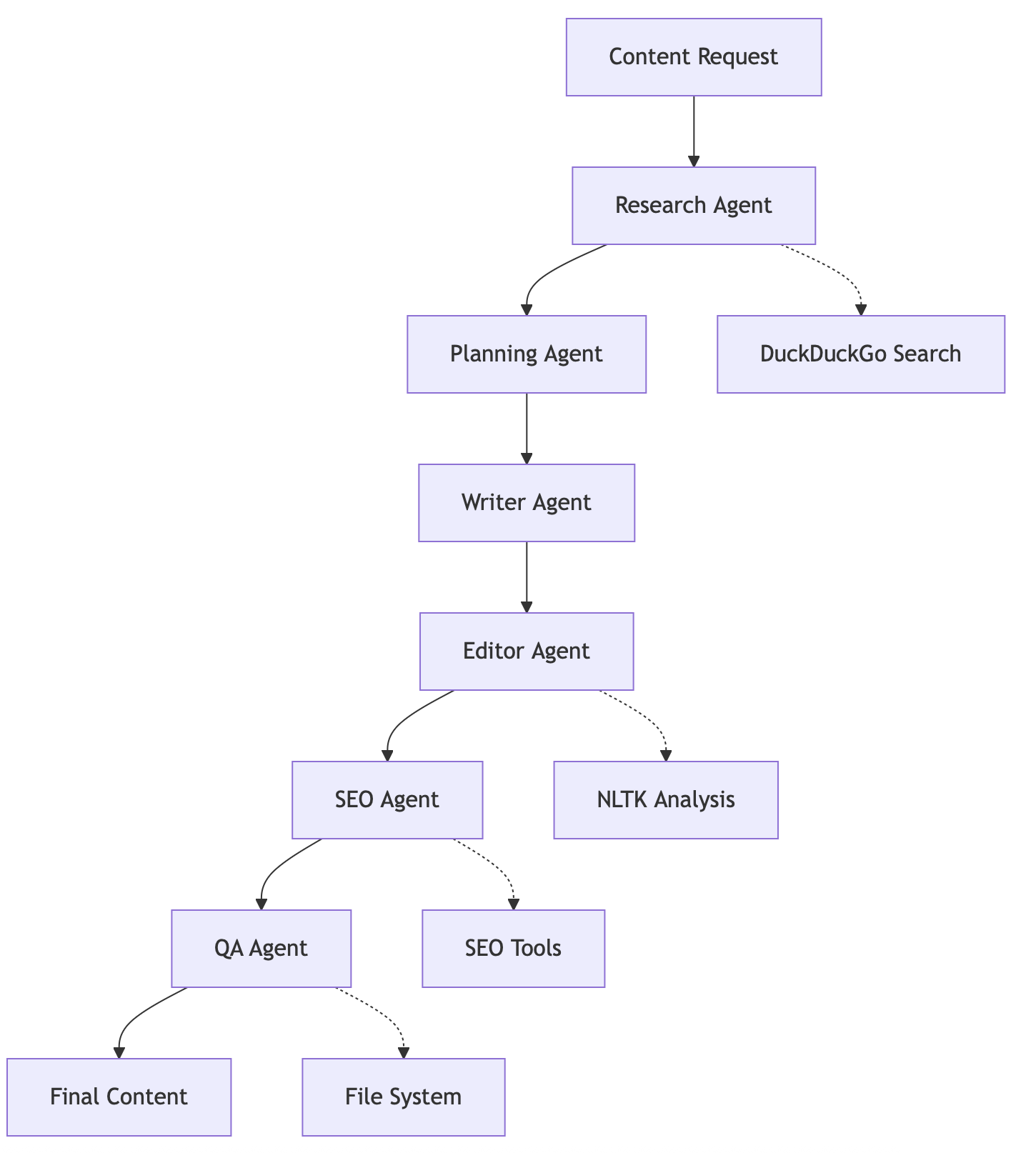

The core of the system is built on a LangGraph StateGraph, which manages the transitions between agents in a modular, traceable fashion. The high-level flow begins when a user submits a topic or request. This request then activates a set of six AI-powered agents, each executing a role akin to human editorial staff in a newsroom.

The agent workflow is as follows:

| Agent | Description |
|---|---|
| Research Agent | Performs external research using DuckDuckGo APIs |
| Planning Agent | Creates structured outlines for articles |
| Writer Agent | Generates full drafts via local Ollama models |
| Editor Agent | Refines style and grammar using NLTK and internal rules |
| SEO Agent | Optimizes for keywords, structure, and meta descriptions |
| Quality Assurance Agent | Final gatekeeper to evaluate readability and coherence |
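The SEO Agent row covers keywords, structure and meta descriptions. The following stdlib-only sketch shows how those three concerns can be folded into one 0–100 score with recommendations. The four equal weights, the 0.5–2.5% density band, the two-subheading minimum and the 120–160 character meta-description range are all illustrative assumptions, not the project's actual thresholds.

```python
import re
from dataclasses import dataclass, field
from typing import List

# Illustrative thresholds; these are assumptions, not the project's values.
DENSITY_RANGE = (0.5, 2.5)      # keyword occurrences per 100 words
META_LENGTH_RANGE = (120, 160)  # characters
MIN_SUBHEADINGS = 2


@dataclass
class SeoReport:
    score: int
    density: float
    recommendations: List[str] = field(default_factory=list)


def seo_score(title: str, body: str, meta_description: str, keyword: str) -> SeoReport:
    """Return a 0-100 score built from four equally weighted checks."""
    kw = keyword.lower().strip()
    words = re.findall(r"\w+", body.lower())
    hits = len(re.findall(rf"\b{re.escape(kw)}\b", body.lower()))
    density = 100 * hits / len(words) if words else 0.0
    score, tips = 0, []

    if kw in title.lower():
        score += 25
    else:
        tips.append("Add the focus keyword to the title.")

    low, high = DENSITY_RANGE
    if low <= density <= high:
        score += 25
    else:
        tips.append(f"Keyword density is {density:.2f}%; aim for {low}-{high}%.")

    # Count Markdown H2 headings only ("## Heading", not "### Heading").
    subheadings = re.findall(r"^##\s+\S", body, flags=re.MULTILINE)
    if len(subheadings) >= MIN_SUBHEADINGS:
        score += 25
    else:
        tips.append(f"Use at least {MIN_SUBHEADINGS} H2 subheadings.")

    lo_len, hi_len = META_LENGTH_RANGE
    if lo_len <= len(meta_description) <= hi_len:
        score += 25
    else:
        tips.append(f"Meta description is {len(meta_description)} chars; aim for {lo_len}-{hi_len}.")

    return SeoReport(score=score, density=round(density, 2), recommendations=tips)
```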


All components are tied into a centralized State Management module, ensuring traceable transitions and robust recovery. Each step produces a tangible, inspectable output.
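Here is a sketch of the sequential, state-passing flow the centralized state module manages, written in plain Python so it runs without LangGraph installed. It is an illustration, not the project's `main.py`. The `State` keys and the six stub agents are hypothetical. In LangGraph, each agent would instead be registered as a node on a `StateGraph` with `add_node` and chained with `add_edge`. Here a simple loop plays that role and records a `history` so every transition stays traceable.

```python
from typing import Any, Callable, Dict, List, Tuple

State = Dict[str, Any]


# Hypothetical stub agents: each reads the shared state and returns only the keys it updates.
def research(state: State) -> State:
    return {"research_notes": [f"Key facts about {state['topic']}"]}


def planning(state: State) -> State:
    return {"outline": ["Introduction", "Main points", "Conclusion"]}


def writer(state: State) -> State:
    sections = "\n\n".join(f"## {heading}\n..." for heading in state["outline"])
    return {"draft": f"# {state['topic']}\n\n{sections}"}


def editor(state: State) -> State:
    return {"draft": state["draft"].strip()}


def seo(state: State) -> State:
    return {"keywords": state["topic"].lower().split()}


def quality_assurance(state: State) -> State:
    return {"approved": bool(state["draft"]) and bool(state["outline"])}


PIPELINE: List[Tuple[str, Callable[[State], State]]] = [
    ("research", research),
    ("planning", planning),
    ("writer", writer),
    ("editor", editor),
    ("seo", seo),
    ("quality_assurance", quality_assurance),
]


def run_pipeline(topic: str) -> State:
    state: State = {"topic": topic, "history": []}
    for name, agent in PIPELINE:
        # Merge the partial update into the shared state, as a graph node's return value would be.
        state = {**state, **agent(state)}
        # Record the transition so each step can be inspected afterwards.
        state["history"] = [*state["history"], name]
    return state
```

Having each agent return only the keys it changes keeps agents independent. Any stub can be swapped for a real implementation without touching the others.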

To help visualize how the system works internally, consider the following architecture diagram:

```mermaid
graph TD
    D[Agent Pipeline]
    D --> E[ResearchAgent]
    D --> F[PlanningAgent]
    D --> G[WriterAgent]
    D --> H[EditorAgent]
    D --> I[SEOAgent]
    D --> J[QualityAssuranceAgent]
    E --> K[DuckDuckGo API]
    G --> L[Ollama LLM]
    H --> M[NLTK Analysis]
    I --> N[SEO Tools]
    J --> O[File System]
```

This agent pipeline model makes the system easier to follow. It also makes debugging possible one agent at a time, and any component can be replaced without changing the others.

| Local Tool / Service | Purpose | Key Features |
|---|---|---|
| Ollama LLM Integration (`chat_ollama`) | Local language‑model inference | Supports multiple models (llama3.1, mistral, codellama); configurable parameters and optimization |
| Web Search Tool (`web_search_tool`) | Information gathering | DuckDuckGo integration; configurable result limits; robust error handling |
| Content Analysis Tool (`content_analysis_tool`) | Readability & keyword analytics | Flesch‑Kincaid scoring; word count & reading‑time calculation; keyword density analysis via NLTK |
| SEO Optimization Tool (`seo_optimization_tool`) | Search‑engine optimization | Keyword presence & density checks; SEO score calculation and recommendations; content‑structure suggestions |
| File Management Tool (`save_content_tool`) | Output handling | Automated file saving with timestamps; organized directory structure; metadata preservation |
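The Content Analysis Tool row lists Flesch‑Kincaid scoring, word count, reading time and keyword density. Below is a stdlib-only sketch of those metrics using the standard Flesch Reading Ease and Flesch‑Kincaid Grade Level formulas. Two things differ from the project and are assumptions. First, syllables are counted with a rough vowel-group heuristic instead of NLTK resources. Second, reading time assumes 200 words per minute. Keyword density here handles single-word keywords only.

```python
import re
from collections import Counter
from typing import Dict, List

WORDS_PER_MINUTE = 200  # assumed average reading speed


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, drop a silent trailing 'e', minimum 1."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def analyze(text: str, keywords: List[str]) -> Dict[str, float]:
    """Compute word count, reading time, Flesch scores, and single-word keyword density."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        raise ValueError("text contains no words")
    n_words, n_sentences = len(words), max(len(sentences), 1)
    words_per_sentence = n_words / n_sentences
    syllables_per_word = sum(count_syllables(w) for w in words) / n_words
    counts = Counter(w.lower() for w in words)

    result: Dict[str, float] = {
        "word_count": n_words,
        "reading_time_min": round(n_words / WORDS_PER_MINUTE, 1),
        # Flesch Reading Ease: higher is easier to read.
        "flesch_reading_ease": round(206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word, 1),
        # Flesch-Kincaid Grade Level: approximate US school grade.
        "fk_grade_level": round(0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59, 1),
    }
    for kw in keywords:
        result[f"density_{kw}"] = round(100 * counts[kw.lower()] / n_words, 2)
    return result
```

For example, `analyze("The cat sat. The dog ran.", ["the"])` reports six words in two sentences. Because such short sentences score off the top of the scale, the Reading Ease comes out above 100.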

```
content-creation-multi-agent-system/
├── main.py                 # Core LangGraph-driven workflow
├── demo.py                 # Interactive use-case demo
├── test_agents.py          # Unit tests for each agent
├── resolve_conflicts.py    # Fix common local dependency issues
├── outputs/                # Generated articles and logs
├── OLLAMA_SETUP_GUIDE.md   # Step-by-step Ollama LLM install
├── .env                    # Local config for model parameters
```
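The `.env` file holds local model parameters, and the comparison table mentions temperature and seed control. The following sketch shows how such settings could be turned into a typed config object. The variable names `OLLAMA_MODEL`, `OLLAMA_TEMPERATURE`, `OLLAMA_SEED` and `OLLAMA_BASE_URL` are hypothetical, as are the defaults other than Ollama's standard port 11434. The sketch assumes the `.env` values have already been loaded into the environment, for instance with python-dotenv's `load_dotenv()`.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class ModelConfig:
    model: str
    temperature: float
    seed: Optional[int]
    base_url: str


def load_model_config(env: Mapping[str, str] = os.environ) -> ModelConfig:
    """Build model settings from environment variables (names are hypothetical)."""
    temperature = float(env.get("OLLAMA_TEMPERATURE", "0.7"))
    if temperature < 0:
        raise ValueError(f"OLLAMA_TEMPERATURE must be non-negative, got {temperature}")
    seed_raw = env.get("OLLAMA_SEED")
    return ModelConfig(
        model=env.get("OLLAMA_MODEL", "llama3"),
        temperature=temperature,
        seed=int(seed_raw) if seed_raw else None,  # unset seed -> sampling is not pinned
        base_url=env.get("OLLAMA_BASE_URL", "http://localhost:11434"),  # Ollama's default port
    )
```

The function accepts any mapping, so tests can pass a plain dict instead of mutating `os.environ`.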

The project is designed for simplicity. A quick setup, modular customization and extensive documentation make it suitable for both R&D and real-world use.

Follow these steps to get the Content Creation Multi‑Agent System running on your local machine.

Clone the repository

```bash
git clone https://github.com/hakangulcu/content-creation-multi-agent-system.git
cd content-creation-multi-agent-system
```

Create a virtual environment (optional but recommended)

```bash
python -m venv .venv
source .venv/bin/activate    # on macOS/Linux
.venv\Scripts\activate       # on Windows
```

Install Python dependencies

```bash
pip install -r requirements.txt
```

Install Ollama and download a local LLM

The project is tested against `llama3` models.

See OLLAMA_SETUP_GUIDE.md for platform‑specific instructions.

```bash
# Example
brew install ollama   # macOS (Homebrew)
ollama run llama3
```
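Once the model is available, Ollama serves a REST API on `localhost:11434`. The project's own `chat_ollama` integration is not shown here. As a dependency-free alternative, this sketch calls Ollama's `/api/generate` endpoint with the standard library. It sends a non-streaming request with `temperature` and an optional `seed` in `options`, and returns the `response` field. The helper names `build_payload` and `generate` are hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local endpoint


def build_payload(model: str, prompt: str, temperature: float = 0.7,
                  seed: Optional[int] = None) -> Dict[str, Any]:
    """Assemble a non-streaming /api/generate request body."""
    options: Dict[str, Any] = {"temperature": temperature}
    if seed is not None:
        options["seed"] = seed  # a fixed seed helps make repeated runs reproducible
    return {"model": model, "prompt": prompt, "stream": False, "options": options}


def generate(model: str, prompt: str, temperature: float = 0.7,
             seed: Optional[int] = None, timeout: float = 120.0) -> str:
    """Send one generation request to a locally running Ollama server and return the text."""
    body = json.dumps(build_payload(model, prompt, temperature, seed)).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_URL, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

With the Ollama server running and the model pulled, `generate("llama3", "Summarize local LLMs in one sentence.", temperature=0.2, seed=42)` returns the model's text. No request leaves the machine.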

Run the pipeline

```bash
python main.py
```

All generated articles and logs will appear in the outputs/ directory.
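The File Management Tool is described as saving files with timestamps in an organized directory while preserving metadata. This sketch shows one way to do that: the article goes to `outputs/<timestamp>_<slug>.md`, with a JSON sidecar for metadata. The naming scheme and the `save_article`/`slugify` helpers are hypothetical, not the project's `save_content_tool`.

```python
import json
import re
from datetime import datetime, timezone
from pathlib import Path
from typing import Any, Dict, Optional, Tuple


def slugify(text: str) -> str:
    """Lowercase the text and collapse anything non-alphanumeric into single hyphens."""
    slug = re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")
    return slug or "article"


def save_article(markdown: str, topic: str, metadata: Dict[str, Any],
                 out_dir: str = "outputs", now: Optional[datetime] = None) -> Tuple[Path, Path]:
    """Write the article and a JSON metadata sidecar; returns both paths."""
    now = now or datetime.now(timezone.utc)
    stamp = now.strftime("%Y%m%d_%H%M%S")
    folder = Path(out_dir)
    folder.mkdir(parents=True, exist_ok=True)
    base = folder / f"{stamp}_{slugify(topic)}"
    article_path = base.with_suffix(".md")
    meta_path = base.with_suffix(".json")
    article_path.write_text(markdown, encoding="utf-8")
    record = {"topic": topic, "created_utc": now.isoformat(), **metadata}
    meta_path.write_text(json.dumps(record, indent=2), encoding="utf-8")
    return article_path, meta_path
```

A timestamp prefix keeps runs in chronological order and avoids overwriting earlier articles on the same topic. The sidecar keeps run metadata (model, settings, scores) next to the text it describes.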

Try `python demo.py` for an interactive walkthrough.

```bash
# Launch the UI
streamlit run streamlit_app.py
```

UI highlights

| Asset | Link |
|---|---|
| Source Code | https://github.com/hakangulcu/content-creation-multi-agent-system?tab=readme-ov-file#multi-agent-system |
| Setup Guides | README.md, OLLAMA_SETUP_GUIDE.md |
| Example Outputs | outputs/ folder after a sample run |

The Content Creation Multi-Agent System uses a comprehensive pytest suite to ensure reliability, performance, and correctness across all components. The testing framework includes unit tests, integration tests, end-to-end tests, and tool validation tests.

Unit tests

Purpose: Test individual agent functionality in isolation

Coverage: 15 tests across 6 agents + error handling

Focus: Agent initialization, core methods, exception handling

Integration tests

Purpose: Test agent-to-agent interactions and state flow

Coverage: 14 tests covering pipeline stages and state management

Focus: Data flow, state consistency, feedback accumulation

End-to-end tests

Purpose: Test complete content creation workflows

Coverage: 9 tests covering real-world scenarios

Focus: Full pipeline execution, different content types

Tool validation tests

Purpose: Test external tool integrations

Coverage: 13 tests covering web search, analysis, SEO, file operations

Focus: API integration, error handling, data validation
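Here is the unit-test style described above (initialization, core methods, exception handling) in miniature. `StubPlanningAgent` is a hypothetical stand-in, not the project's planning agent. pytest collects the `test_*` functions automatically, and plain `assert` statements give readable failure messages. Inside a pytest suite, `pytest.raises` would be the idiomatic replacement for the try/except in the last test.

```python
from typing import Any, Dict, List


class StubPlanningAgent:
    """Hypothetical stand-in for a planning agent: turns a topic into an outline."""

    def __init__(self, sections: int = 3) -> None:
        if sections < 1:
            raise ValueError("sections must be >= 1")
        self.sections = sections

    def create_outline(self, state: Dict[str, Any]) -> Dict[str, Any]:
        topic = str(state.get("topic", "")).strip()
        if not topic:
            raise ValueError("state must contain a non-empty 'topic'")
        outline: List[str] = [f"{topic}: part {i}" for i in range(1, self.sections + 1)]
        return {**state, "outline": outline}


def test_initialization_sets_section_count():
    assert StubPlanningAgent(sections=4).sections == 4


def test_create_outline_adds_outline_and_keeps_state():
    result = StubPlanningAgent(sections=2).create_outline({"topic": "Edge AI", "keywords": ["ai"]})
    assert result["outline"] == ["Edge AI: part 1", "Edge AI: part 2"]
    assert result["keywords"] == ["ai"]  # earlier pipeline state is preserved


def test_blank_topic_raises():
    try:
        StubPlanningAgent().create_outline({"topic": "   "})
    except ValueError as exc:
        assert "topic" in str(exc)
    else:
        raise AssertionError("expected ValueError for a blank topic")
```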

Detailed test configuration and test runs are provided in **TEST_DOCUMENTATION.md** in the code repository.

Here is a video of a sample Streamlit session:

By orchestrating specialized agents on local hardware, this system shows that high‑quality, SEO‑ready content can be generated without surrendering data to cloud LLM providers or incurring API fees.

Threat model & trust boundaries

./outputs/ with access limited to the host user.

Controls

.env file is optional; never logged. Secrets are read at process start and not written to disk.

pip-audit and safety checks in CI; SBOM generation with pipdeptree.

Compliance alignment

./outputs/ purge).

Security test cases (part of CI)
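The controls above state that secrets are never logged. The following sketch shows a logging filter that masks known secret values before any handler writes them, plus the kind of check a CI security test could run. The `SecretRedactingFilter` class is hypothetical, not taken from the project. Attaching the filter to a handler rather than a logger means records propagated from child loggers are masked too.

```python
import logging
from typing import Iterable


class SecretRedactingFilter(logging.Filter):
    """Mask known secret values in log messages before a handler emits them."""

    def __init__(self, secrets: Iterable[str], mask: str = "***") -> None:
        super().__init__()
        # Ignore empty strings; replacing "" would corrupt every message.
        self._secrets = [s for s in secrets if s]
        self._mask = mask

    def filter(self, record: logging.LogRecord) -> bool:
        message = record.getMessage()  # merge %-style args into the message first
        for secret in self._secrets:
            message = message.replace(secret, self._mask)
        record.msg, record.args = message, ()
        return True  # keep the record, now redacted
```

This only rewrites the formatted message. Secrets that appear in exception tracebacks (`exc_info`) are not masked by this sketch.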

This project is released under the MIT License. The full license text is available in the accompanying LICENSE file.

When redistributing, please retain copyright notices.

Model License: Local LLMs pulled via Ollama are subject to the model creator’s license.

Ensure compliance when integrating third‑party models.