Comprehensive Guide to Evaluation Metrics for Computer Vision Tasks

When evaluating a computer vision model, there are various metrics that you can use depending on the specific needs and goals of your project. Below is a breakdown of some common metrics across varying tasks.

1. Evaluation Metrics for Image Classification

1.1 Confusion Matrix

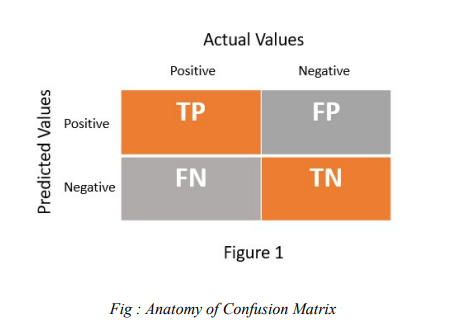

In machine learning, accuracy is often the primary focus. However, in classification problems, it is equally important to consider the rates of correct and incorrect classifications for each class. We therefore need a method that not only reports accuracy but also shows which predictions were correct and which were not. The confusion matrix fulfills this need. It is an N×N matrix, where N is the number of classes, whose entries count how often samples of each actual class were assigned to each predicted class. Figure 1 illustrates the confusion matrix for a binary classification problem.
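As a minimal sketch of the idea, the snippet below builds a confusion matrix in plain Python. The function name `confusion_matrix`, the toy labels, and the layout (rows are actual classes, columns are predicted classes) are assumptions made for illustration; the layout in Figure 1 may be transposed.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Count predictions per (actual, predicted) pair.

    Assumed layout: rows are actual classes, columns are predicted classes,
    both ordered as in `labels`.
    """
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for actual, predicted in zip(y_true, y_pred):
        matrix[index[actual]][index[predicted]] += 1
    return matrix


# Hypothetical toy data: 1 = positive class, 0 = negative class.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

cm = confusion_matrix(y_true, y_pred, labels=[0, 1])
(tn, fp), (fn, tp) = cm  # unpack the binary case

print(cm)              # [[4, 2], [1, 3]]
print(tn, fp, fn, tp)  # 4 2 1 3
```

With this layout, the diagonal holds the correct predictions and every off-diagonal cell is one specific kind of error.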

1.2 Accuracy

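Accuracy measures how well a model performs as the proportion of correct predictions out of the total number of predictions.

For binary classification, with TP, TN, FP, and FN denoting the true positives, true negatives, false positives, and false negatives from the confusion matrix, accuracy is computed as:

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}$$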
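Accuracy is the number of correct predictions divided by the total number of predictions, which can be sketched in a few lines of plain Python. The helper name `accuracy` and the toy labels are hypothetical and serve only as an illustration.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if not y_true:
        raise ValueError("cannot compute accuracy on empty input")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


# Hypothetical toy data: 7 of the 10 predictions are correct.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 1, 1]

acc = accuracy(y_true, y_pred)
print(acc)  # 0.7
```

Note that on a heavily imbalanced dataset a model that always predicts the majority class can still score a high accuracy, which is why accuracy is usually read alongside other metrics.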

1.3 F1 Score

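The F1 score is a performance metric used to evaluate the quality of a classification model. It is particularly useful for imbalanced datasets, where the classes are not evenly distributed. The F1 score is the harmonic mean of precision and recall, combining them into a single metric that accounts for both false positives and false negatives. With Precision = TP / (TP + FP) and Recall = TP / (TP + FN):

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$

or

$$F_1 = \frac{2\,TP}{2\,TP + FP + FN}$$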
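The sketch below computes precision, recall, and the F1 score from raw counts and checks that the harmonic-mean form agrees with the count-based form 2TP / (2TP + FP + FN). The function name `precision_recall_f1`, the example counts, and the choice to return 0.0 when a denominator is zero are assumptions made for illustration.

```python
def precision_recall_f1(tp, fp, fn):
    """Return (precision, recall, f1) for one class from raw counts.

    Assumption: undefined ratios (zero denominator) are reported as 0.0.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 3 true positives, 2 false positives, 1 false negative.
tp, fp, fn = 3, 2, 1
precision, recall, f1 = precision_recall_f1(tp, fp, fn)

# Equivalent form written directly in terms of the counts.
f1_from_counts = 2 * tp / (2 * tp + fp + fn)

print(f"{precision:.2f} {recall:.2f} {f1:.3f}")  # 0.60 0.75 0.667
print(abs(f1 - f1_from_counts) < 1e-12)          # True
```

Because it is a harmonic mean, the F1 score stays low unless both precision and recall are reasonably high.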

1.4 Classification Report

A classification report is a detailed summary of a classification model's performance on a specific dataset. For each class it lists precision, recall, F1 score, and support (the number of true samples of that class), followed by the overall accuracy and the macro and weighted averages of the per-class metrics. Because it shows both per-class results and overall averages, it makes clear how well the model performs and where it needs improvement, which is particularly valuable in multi-class and imbalanced classification problems.

| | precision | recall | f1-score | support |
|---|---|---|---|---|
| Class A | 0.83 | 1.00 | 0.91 | 5 |
| Class B | 1.00 | 0.80 | 0.89 | 5 |
| accuracy | | | 0.90 | 10 |
| macro avg | 0.92 | 0.90 | 0.90 | 10 |
| weighted avg | 0.92 | 0.90 | 0.90 | 10 |
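To make the numbers in such a report concrete, the sketch below recomputes a two-class report in plain Python. The labels are hypothetical: they were constructed so that every Class A sample is predicted correctly and one of the five Class B samples is predicted as Class A, which is one set of predictions consistent with the table above (the original data is not given). The helper names `per_class_report` and `averages` are also assumptions.

```python
from collections import Counter


def per_class_report(y_true, y_pred):
    """Per-class precision, recall, F1, and support (count of true samples)."""
    support = Counter(y_true)
    report = {}
    for label in sorted(set(y_true) | set(y_pred)):
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        report[label] = {"precision": precision, "recall": recall,
                         "f1": f1, "support": support[label]}
    return report


def averages(report):
    """Macro (unweighted) and support-weighted averages of the class metrics."""
    keys = ("precision", "recall", "f1")
    total = sum(row["support"] for row in report.values())
    macro = {k: sum(row[k] for row in report.values()) / len(report)
             for k in keys}
    weighted = {k: sum(row[k] * row["support"] for row in report.values()) / total
                for k in keys}
    return macro, weighted


# Hypothetical labels chosen to be consistent with the table above.
y_true = ["A"] * 5 + ["B"] * 5
y_pred = ["A"] * 5 + ["B"] * 4 + ["A"]

report = per_class_report(y_true, y_pred)
macro, weighted = averages(report)
overall_accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

for label, row in report.items():
    print(f"Class {label}: {row['precision']:.2f} {row['recall']:.2f} "
          f"{row['f1']:.2f} {row['support']}")
print(f"accuracy: {overall_accuracy:.2f}")
print(f"macro avg: {macro['precision']:.2f} {macro['recall']:.2f} {macro['f1']:.2f}")
```

The macro average treats every class equally, while the weighted average scales each class by its support; the two coincide here only because both classes have five samples.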