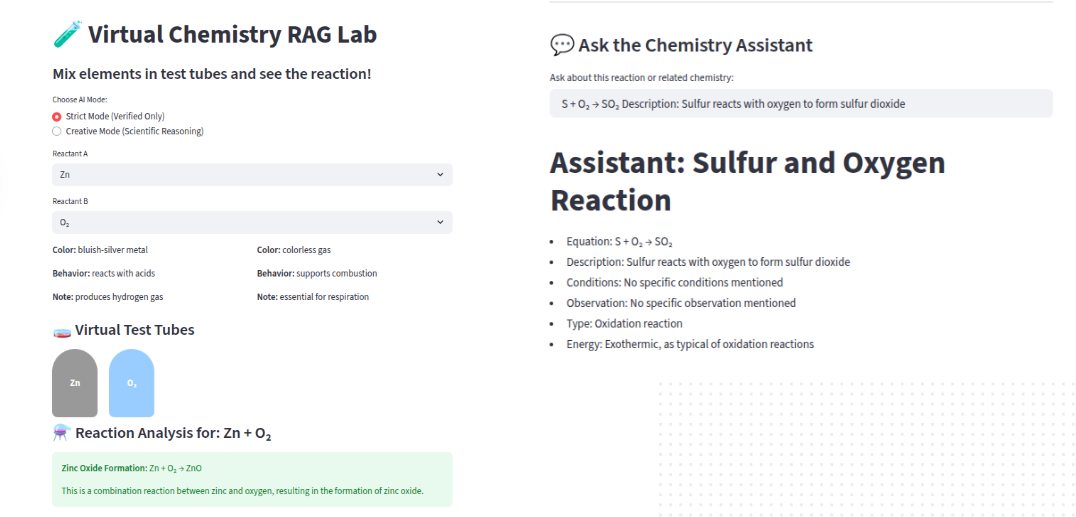

The Chemixtry Chatbot Lab for Chemical Reactions is a RAG lab that presents an innovative approach to interactive science learning and reasoning using Retrieval-Augmented Generation (RAG).

The system integrates a Streamlit-based multimodal interface with a vectorized knowledge base of curated chemistry documents to simulate laboratory experimentation through conversational interaction.

By combining semantic retrieval, contextual prompt engineering, and generative reasoning, the model provides explainable, factual, and educationally grounded answers to queries such as:

“What happens when zinc reacts with hydrochloric acid?”

“Explain oxidation in simple terms.”

Recent advances in large language models (LLMs) have enabled systems capable of generating natural, human-like responses to complex queries.

However, pure generative models often lack factual grounding, especially in scientific domains where accuracy is paramount.

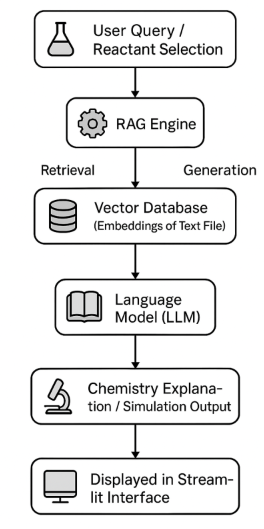

To address this, Retrieval-Augmented Generation (RAG) has emerged as a hybrid architecture — combining retrieval from a knowledge base with contextual reasoning via generation.

The Virtual Chemistry RAG Lab applies this paradigm to the field of chemistry education by creating a virtual experiment environment where users can mix chemical reactants, observe AI-generated outcomes, and understand underlying scientific principles.

The project focuses on:

The primary objectives of this project are:

RAG offers a hybrid architecture that combines retrieval from a validated domain knowledge base with LLM-driven reasoning. The Virtual Chemistry RAG Lab applies this paradigm to create an experimental sandbox where users can mix chemical reactants, observe AI-generated outcomes, and understand the underlying scientific principles with high factual robustness.

SentenceTransformers was chosen due to:

This ensures that queries about reactions, compounds, mechanisms, or safety rules map accurately to the correct scientific sources.

The LLM layer uses Groq-accelerated models integrated through LangChain. These were selected because:

The system consists of three key layers:

- Frontend (Streamlit Interface) – Provides an interactive, visual lab environment.
- RAG Core Engine – Combines retrieval and generation using semantic embeddings.
- Vector Database Layer – Stores and retrieves chemistry knowledge in vectorized form.

3.1 Data Preparation

The data/ directory contains curated .txt documents representing verified chemistry knowledge such as:

- reactions_knowledge.txt: common inorganic reactions
- water_formation.txt: oxidation and hydrogen combustion
- biotechnology.txt: cross-disciplinary test file

Documents are cleaned, tokenized, and chunked into semantically coherent units.
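The chunking step can be illustrated with a minimal sketch. It uses fixed-size, overlapping word windows, which is a simpler stand-in for the semantically coherent chunking described above; the function name `chunk_text` and the default sizes are assumptions for illustration, not the project's actual code.

```python
def chunk_text(text: str, chunk_size: int = 200, overlap: int = 40) -> list[str]:
    """Split text into overlapping word-based chunks.

    chunk_size and overlap are counted in words. Both defaults are
    illustrative assumptions, not values taken from the project.
    """
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")

    words = text.split()  # whitespace split also normalizes stray spacing
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks
```

The overlap keeps a reaction description that straddles a chunk boundary visible in both neighboring chunks, at the cost of some duplicated text in the store.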

3.2 Vectorization and Retrieval

Each text chunk is embedded with a SentenceTransformers model to produce a fixed-size semantic vector.

Vectors are stored locally using a lightweight vector store (FAISS/Chroma) for offline access.

Queries are embedded and compared using cosine similarity, returning the most relevant context.
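To make the similarity step concrete, here is a small sketch of cosine-similarity ranking in plain Python. The helper names `cosine_similarity` and `top_k` are hypothetical, and the vectors are passed in directly; in the real system they would come from the embedding model, and FAISS or Chroma would perform this search internally.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # treat a zero vector as matching nothing
    return dot / (norm_a * norm_b)


def top_k(query_vec, chunk_vecs, chunks, k=2):
    """Return the k (score, chunk) pairs most similar to the query."""
    scored = [
        (cosine_similarity(query_vec, vec), chunk)
        for vec, chunk in zip(chunk_vecs, chunks)
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]
```

The text of the returned chunks is what gets passed on as context for generation.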

3.3 Generation and Prompt Engineering

The Virtual Chemistry RAG Lab employs a structured prompt-based reasoning framework embedded within the RAGAssistant class.

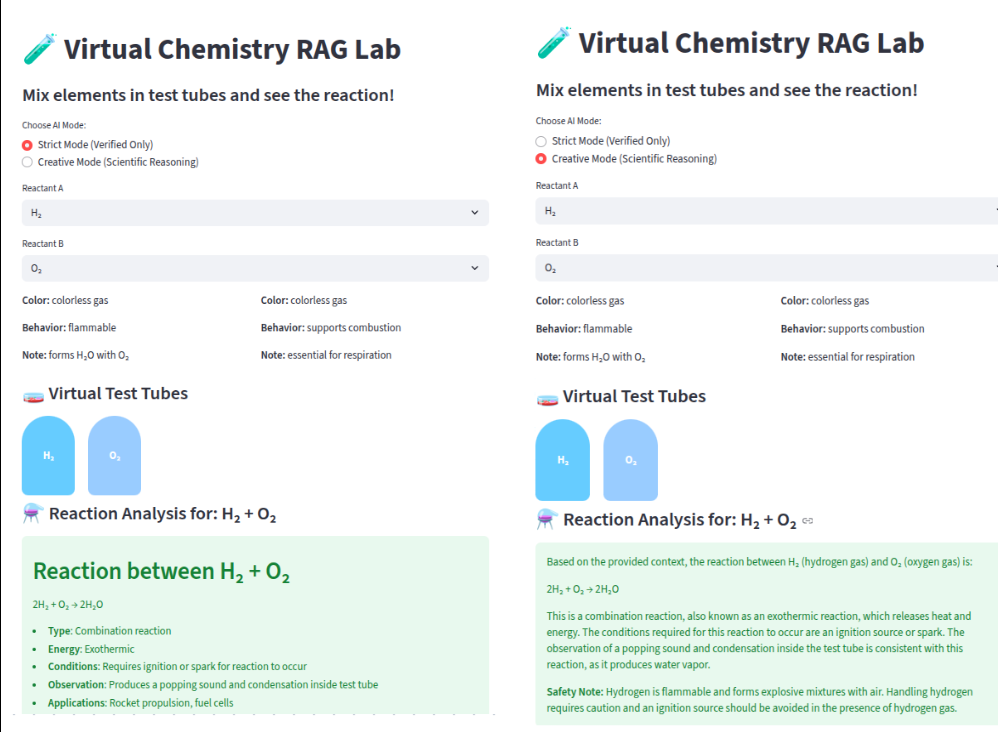

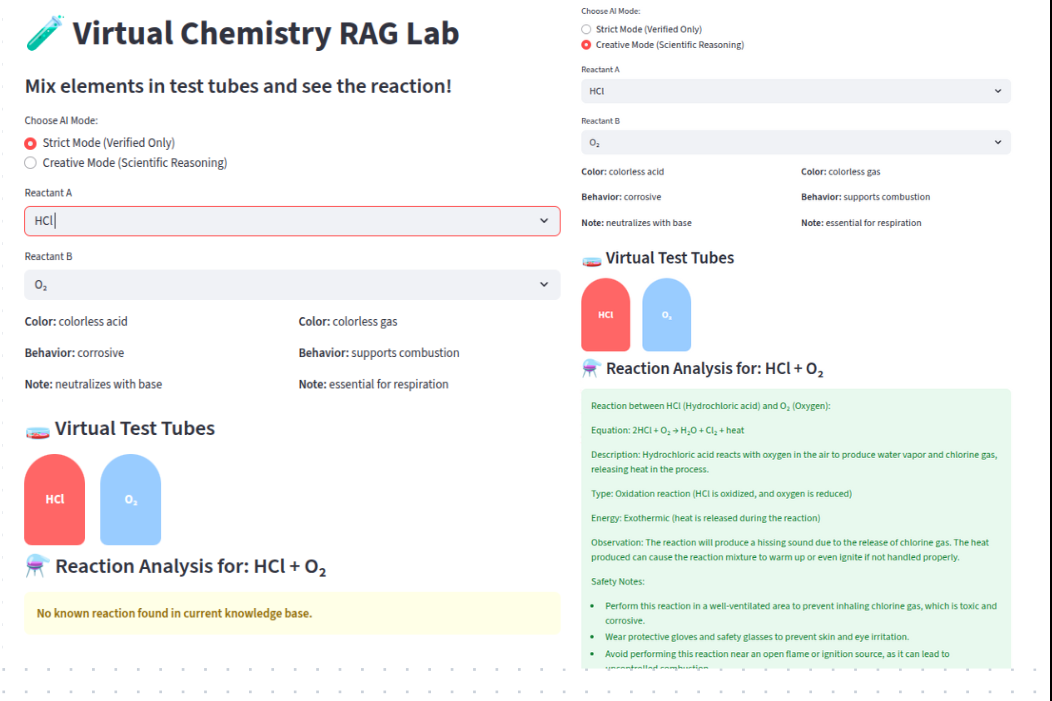

This design ensures that the language model operates under controlled scientific constraints, adapting its reasoning behavior according to the selected operational mode: **Strict** or **Creative**.

The prompt structure consists of two key components:

System Prompt — Defines the assistant’s personality, safety rules, and factual boundaries.

User Template — Dynamically injects the retrieved contextual text and user query during runtime.

Strict mode is used when the model must produce strictly factual responses derived only from the retrieved knowledge base.

You are ChemGPT, a Virtual Chemistry Lab Assistant that answers ONLY

based on the retrieved knowledge base context. This is STRICT mode.

Rules:

Remember: You are not generating — you are retrieving factual info only.

This configuration enforces retrieval integrity: the model is constrained to operate within the context of verified chemistry data.

This reduces the risk of hallucination and helps maintain scientific trustworthiness.

Creative mode is used when the model is allowed to perform guided reasoning or educational expansion beyond the retrieved text.

You are ChemGPT Creative Mode — a Chemistry Assistant that combines

the retrieved context with your general scientific knowledge.

Rules:

This configuration encourages exploratory reasoning while maintaining transparency — any inference is explicitly labeled, ensuring the distinction between factual and generated content.

Both modes utilize a shared template that injects retrieved text and user query into the LLM input during inference:

Context:

{context}

User Question:

{question}

Answer:

This template ensures a consistent reasoning pipeline — the assistant always grounds its answers in retrieved context before generating a response.
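As a rough illustration of how the system prompt and the shared template might be combined, the sketch below assembles a chat-style message list. The `build_messages` function, the `SYSTEM_PROMPTS` dictionary, and the role/content message layout are assumptions made for this example; the prompt strings are abbreviated stand-ins for the full prompts (their rule lists are omitted), and the actual RAGAssistant implementation may differ.

```python
# Abbreviated stand-ins for the two system prompts quoted above.
SYSTEM_PROMPTS = {
    "strict": (
        "You are ChemGPT, a Virtual Chemistry Lab Assistant that answers ONLY "
        "based on the retrieved knowledge base context. This is STRICT mode."
    ),
    "creative": (
        "You are ChemGPT Creative Mode, a Chemistry Assistant that combines "
        "the retrieved context with your general scientific knowledge."
    ),
}

# Shared user template: retrieved context first, then the question.
USER_TEMPLATE = "Context:\n{context}\n\nUser Question:\n{question}\n\nAnswer:"


def build_messages(mode: str, context_chunks: list[str], question: str) -> list[dict]:
    """Assemble system and user messages for the selected mode."""
    if mode not in SYSTEM_PROMPTS:
        raise ValueError(f"unknown mode {mode!r}; expected 'strict' or 'creative'")
    context = "\n\n".join(context_chunks)
    user_text = USER_TEMPLATE.format(context=context, question=question)
    return [
        {"role": "system", "content": SYSTEM_PROMPTS[mode]},
        {"role": "user", "content": user_text},
    ]
```

Keeping the template identical across modes means only the system prompt changes the model's behavior, which makes the two modes easy to compare on the same retrieved context.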

User Prompt:

Reaction between Zn and HCl.

This ensures factuality, educational tone, and consistent structure across responses.

4.1 Retrieval Mode (Offline)

4.2 Chat Mode (Interactive Q&A)

Enables contextual dialogue (“Why does heating sodium release light?”).

This guide walks you through every step to set up, configure, and run the Virtual Chemistry RAG Lab — from environment creation to API key setup and app execution.

Before you begin, ensure you have the following installed:

Clone the project

git clone https://github.com/Blessy456b/rag_lab.git

Move into the project folder

cd rag_lab/src

It is best practice to use an isolated virtual environment to manage project dependencies.

pip install -r requirements.txt

This installs:

The assistant supports the Groq model backend.

Provider Model Example Environment Variable

Add one or more of the following lines to your .env file, depending on which API you plan to use:

GROQ_API_KEY=your_groq_key_here

⚠️ Security Note:

Do not share .env files or commit them to GitHub.

Instead, include a .env_example file showing the required variable names without real values.
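A startup check along the following lines can catch a missing or placeholder key before the first request. This is a hedged sketch: the `get_api_key` helper is hypothetical, and it assumes the .env file has already been loaded into the process environment (for example with python-dotenv's `load_dotenv()`).

```python
import os


def get_api_key(name: str = "GROQ_API_KEY", env=None) -> str:
    """Return the API key from the environment or raise a clear error.

    `env` defaults to os.environ; passing a plain dict makes the
    function easy to test without touching the real environment.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    # Reject both a missing key and the placeholder from the example file.
    if not key or key == "your_groq_key_here":
        raise RuntimeError(
            f"{name} is not set. Add it to your .env file before starting the app."
        )
    return key
```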

Make sure you are in the src directory; if not, run: cd rag_lab/src

Launch the interactive chemistry lab UI:

streamlit run app_lab_chat.py

Once launched, open the local URL displayed in your terminal (e.g., http://localhost:8501) to interact with the assistant.

You’ll see:

- Dropdowns for selecting reactants
- Visual test tubes and reactions
- A chat assistant powered by RAG retrieval

The RAG assistant supports two reasoning modes:

| Mode | Behavior | Use Case |
| --- | --- | --- |
| ⚖️ Strict Mode | Answers only from retrieved knowledge base | Verified scientific facts |
| 🎨 Creative Mode | Combines retrieved context + LLM reasoning | Educational explanations, inferences |

To change the mode, set it in code:

assistant = RAGAssistant(mode="creative")

or use the toggle in the GUI.

Once running, try:

“What happens when zinc reacts with hydrochloric acid?”

“Explain oxidation.”

The assistant retrieves factual data from your data/ folder and generates an explainable, contextual response.

| Issue | Possible Cause | Solution |
| --- | --- | --- |
| No API key found | .env missing or wrong variable name | Verify key name matches table above |
| ImportError: sentence_transformers | Dependencies not installed | Run pip install -r requirements.txt |
| Streamlit not found | Virtual env not activated | Activate environment again |
| Slow responses | Free-tier model rate limits | Try smaller queries or switch model provider |

🧠 Add small new .txt files to the data/ folder for more reactions.
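Picking up newly added files could look roughly like this. The `load_documents` helper and the default `data` path are assumptions for illustration; the project's own loader may handle paths and file types differently.

```python
from pathlib import Path


def load_documents(data_dir: str = "data") -> dict[str, str]:
    """Read every non-empty .txt file in data_dir, keyed by file name."""
    docs = {}
    for path in sorted(Path(data_dir).glob("*.txt")):
        text = path.read_text(encoding="utf-8").strip()
        if text:  # skip empty files so they never reach the vector store
            docs[path.name] = text
    return docs
```

Because the loader globs the directory, a new reaction file becomes available once the documents are loaded and embedded again.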

| Component | Technology Stack |
| --- | --- |
| UI Framework | Streamlit |
| Embedding Model | SentenceTransformers (MiniLM / all-MiniLM-L6-v2) |
| Vector Database | FAISS / Chroma |
| Programming Language | Python 3.12 |
| Libraries | LangChain, numpy, pandas, dotenv |
| Deployment | Localhost / Ready Tensor compatible |

The system successfully demonstrated:

Preliminary evaluations with domain-specific test queries show that retrieved responses align closely with verified chemical reactions, minimizing hallucinations compared to pure LLM-based generation.

- 🧬 Multilingual RAG Expansion – Support Indian languages for wider access.
- ⚙️ Dynamic Knowledge Ingestion – Allow uploading of new documents.
- 🎨 Reaction Visualization – Add chemical equation animations and molecular imagery.
- 🧠 Cross-domain Integration – Extend to physics and biology experiments.
- 🔒 Secure Collaboration – Incorporate user experiment history and authentication.

The Virtual Chemistry RAG Lab showcases the potential of combining retrieval-augmented architectures with interactive learning interfaces for scientific domains.

By grounding AI reasoning in transparent, verifiable text sources, the system enhances both trust and educational value in AI-driven science tools.

This framework can be extended across multiple domains to promote explainable, domain-specific AI reasoning for academic and research applications.

This project is released under the MIT License, which allows:

Users must simply retain the original copyright and license notice.

Developed by Blessy Thomas

Built with curiosity using Streamlit, LangChain, and Vector Search technologies for Ready Tensor certification.

For queries:

GitHub: https://github.com/Blessy456b

Email: blessy456bthomas@gmail.com