📖 BART Chatbot Training & Deployment

📌 Overview

This project fine-tunes the `facebook/bart-large-cnn` model (a pretrained summarization checkpoint) into a chatbot. The model is trained on a custom question-answer dataset so that, given an input question, it generates a response.

🚀 Features

- Uses `facebook/bart-large-cnn` as the base model.

- Fine-tunes the model on a question-answer (Q&A) dataset.

- Supports training with Hugging Face's `Trainer`.

- Generates responses with sampling-based decoding (temperature, nucleus sampling, repetition penalty, and n-gram blocking).

- Allows real-time chatbot interaction via the terminal.

📂 Dataset

The dataset is a CSV file containing:

- `question`: the input query.

- `answer`: the corresponding response.
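Before training, it can help to confirm that the CSV really has these two columns and that rows are not empty. The stdlib sketch below does that check; `check_columns` is a hypothetical helper (not part of this project), and the toy input only stands in for `dataset.csv`.

```python
import csv
import io

REQUIRED_COLUMNS = {"question", "answer"}


def check_columns(csv_file):
    """Return (missing_columns, usable_rows) for a file-like object with CSV text.

    A row counts as usable only if both its question and answer are non-empty.
    """
    reader = csv.DictReader(csv_file)
    missing = sorted(REQUIRED_COLUMNS - set(reader.fieldnames or []))
    if missing:
        return missing, 0
    usable = sum(
        1
        for row in reader
        if (row["question"] or "").strip() and (row["answer"] or "").strip()
    )
    return missing, usable


# Toy input; for the real file use: open("dataset.csv", encoding="utf-8", newline="")
sample = io.StringIO("question,answer\nWhat is BART?,A sequence-to-sequence model.\nEmpty?,\n")
print(check_columns(sample))  # ([], 1)
```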

📥 Load Dataset

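```python
from datasets import load_dataset

# Load the CSV; the csv builder exposes it as a single "train" split.
dataset = load_dataset("csv", data_files="dataset.csv", encoding="utf-8")

# Hold out 20% of the rows for evaluation. Both splits come from one
# train_test_split call, so no evaluation row is also used for training.
split = dataset["train"].train_test_split(test_size=0.2, shuffle=True, seed=42)
train_dataset = split["train"]
eval_dataset = split["test"]
```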
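The property that matters for a hold-out split is that training and evaluation rows are disjoint; evaluating on rows the model also trained on inflates the evaluation metrics. The stdlib sketch below illustrates a seeded 80/20 split on a plain Python list. `holdout_split` is a hypothetical helper used only to show the idea; it is not how `datasets` implements `train_test_split`.

```python
import math
import random


def holdout_split(rows, test_size=0.2, seed=42):
    """Shuffle a copy of `rows` with a fixed seed and split it into disjoint (train, test) lists."""
    if not 0.0 < test_size < 1.0:
        raise ValueError("test_size must be between 0 and 1")
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # seeded, so the split is reproducible
    n_test = math.ceil(len(shuffled) * test_size)
    return shuffled[n_test:], shuffled[:n_test]


train_rows, test_rows = holdout_split(range(10))
print(len(train_rows), len(test_rows))  # 8 2
```

Because both lists are slices of the same shuffled copy, no element can appear in both.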

🛠️ Preprocessing

Questions (the model inputs) and answers (the labels) are tokenized with Hugging Face's `AutoTokenizer`, and each sequence is truncated or padded to 256 tokens.

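```python
from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("facebook/bart-large-cnn")
# BART already has a dedicated <pad> token, so pad_token is not overridden.


def preprocess_function(examples):
    model_inputs = tokenizer(examples["question"], truncation=True, padding="max_length", max_length=256)
    labels = tokenizer(examples["answer"], truncation=True, padding="max_length", max_length=256)
    # Replace padding ids in the labels with -100 so the loss ignores them.
    model_inputs["labels"] = [
        [tok if tok != tokenizer.pad_token_id else -100 for tok in seq]
        for seq in labels["input_ids"]
    ]
    return model_inputs


# Tokenize both splits in batches and drop the raw text columns.
tokenized_train_dataset = train_dataset.map(preprocess_function, batched=True, remove_columns=train_dataset.column_names)
tokenized_eval_dataset = eval_dataset.map(preprocess_function, batched=True, remove_columns=eval_dataset.column_names)
```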
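Padding positions in the labels should not count toward the loss. BART's loss is PyTorch's cross-entropy with its default `ignore_index=-100`, so the usual approach is to replace padded label ids with `-100`. This pure-Python sketch shows that step on one already-tokenized sequence; `pad_and_mask` is a hypothetical helper, the default pad id of 1 assumes BART's `<pad>` token, and the token ids in the example are illustrative.

```python
def pad_and_mask(label_ids, max_length, pad_token_id=1):
    """Truncate or pad one label sequence to `max_length`, masking padding as -100.

    PyTorch's CrossEntropyLoss ignores targets equal to -100 by default, so the
    masked positions add nothing to the training loss.
    NOTE: plain slicing is a simplification; the real tokenizer keeps the
    closing </s> token when it truncates.
    """
    truncated = list(label_ids[:max_length])
    padded = truncated + [pad_token_id] * (max_length - len(truncated))
    # After padding, every pad id becomes -100.
    return [tok if tok != pad_token_id else -100 for tok in padded]


# Illustrative ids: in BART's vocabulary 0 = <s>, 1 = <pad>, 2 = </s>.
print(pad_and_mask([0, 713, 16, 2], max_length=6))  # [0, 713, 16, 2, -100, -100]
```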

🎯 Training the Model

```python
from transformers import BartForConditionalGeneration, Trainer, TrainingArguments

model = BartForConditionalGeneration.from_pretrained("facebook/bart-large-cnn")

training_args = TrainingArguments(
    output_dir="./results",
    num_train_epochs=10,
    per_device_train_batch_size=12,
    save_strategy="epoch",
    eval_strategy="epoch",  # named evaluation_strategy in older transformers releases
    logging_dir="./logs",
    load_best_model_at_end=True,  # requires matching save and eval strategies
)

trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=tokenized_train_dataset,
    eval_dataset=tokenized_eval_dataset,
)
trainer.train()
```
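With `per_device_train_batch_size=12` and `num_train_epochs=10`, the number of optimizer updates depends on the size of the training split. A small sketch of the arithmetic, assuming a single device, no gradient accumulation, and that the final partial batch of each epoch is kept (the `Trainer` default); `total_training_steps` is a hypothetical helper, not a `Trainer` method, and the 800-example split is an illustrative size.

```python
import math


def total_training_steps(num_train_examples, per_device_batch_size=12, num_epochs=10):
    """Optimizer steps for a single-device run without gradient accumulation.

    Each epoch needs ceil(N / batch_size) batches because the last, partial
    batch is still used; one optimizer step is taken per batch.
    """
    steps_per_epoch = math.ceil(num_train_examples / per_device_batch_size)
    return steps_per_epoch * num_epochs


# Hypothetical example: an 800-example training split.
print(total_training_steps(800))  # 670 (67 steps per epoch x 10 epochs)
```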

📝 Text Generation

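The fine-tuned model generates responses with sampling-based decoding: temperature-scaled nucleus (top-p) sampling, combined with a repetition penalty and 3-gram blocking to discourage repetitive output.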

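```python
def generate_text(prompt, min_length=100, max_length=300, num_return_sequences=1):
    """Generate `num_return_sequences` sampled responses to `prompt`."""
    # Tokenize with an attention mask and move the tensors to the model's device.
    inputs = tokenizer(prompt, return_tensors="pt", truncation=True, max_length=256).to(model.device)
    model.eval()  # disable dropout for inference
    output = model.generate(
        **inputs,  # input_ids and attention_mask
        min_length=min_length,
        max_length=max_length,
        num_return_sequences=num_return_sequences,
        no_repeat_ngram_size=3,   # never repeat any 3-gram
        do_sample=True,           # sample instead of always taking the most likely token
        temperature=0.9,          # values below 1 sharpen the distribution
        top_p=0.92,               # nucleus sampling
        repetition_penalty=1.2,   # penalize tokens that were already generated
        length_penalty=1.5,       # only has an effect when beam search is used
    )
    return tokenizer.batch_decode(output, skip_special_tokens=True)
```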
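Each decoding flag is a rule applied at every generation step. The stdlib sketch below re-implements simplified versions of three of them on a toy `{token: logit}` dictionary to show what they do: `repetition_penalty`, `no_repeat_ngram_size`, and temperature plus nucleus (`top_p`) sampling. The helper names are hypothetical, and this illustrates the standard rules rather than the `transformers` implementation, which works on batched tensors and applies further constraints such as `min_length`.

```python
import math
import random


def apply_repetition_penalty(logits, generated, penalty=1.2):
    """Lower the score of every token that already appears in `generated`.

    Positive logits are divided by `penalty` and negative ones multiplied by it,
    so the adjustment always makes a repeated token less likely.
    """
    adjusted = dict(logits)
    for tok in set(generated):
        if tok in adjusted:
            score = adjusted[tok]
            adjusted[tok] = score / penalty if score > 0 else score * penalty
    return adjusted


def banned_ngram_tokens(generated, n=3):
    """Return tokens that would complete an n-gram already present in `generated`."""
    if len(generated) < n:
        return set()
    prefix = tuple(generated[len(generated) - (n - 1):])
    banned = set()
    for i in range(len(generated) - n + 1):
        if tuple(generated[i:i + n - 1]) == prefix:
            banned.add(generated[i + n - 1])
    return banned


def top_p_sample(logits, temperature=0.9, top_p=0.92, rng=random):
    """Sample from the smallest set of top tokens whose probability mass reaches top_p."""
    scaled = {tok: score / temperature for tok, score in logits.items()}
    max_score = max(scaled.values())
    exp = {tok: math.exp(s - max_score) for tok, s in scaled.items()}  # stable softmax
    total = sum(exp.values())
    ranked = sorted(((p / total, tok) for tok, p in exp.items()), reverse=True)
    nucleus, cumulative = [], 0.0
    for prob, tok in ranked:
        nucleus.append((tok, prob))
        cumulative += prob
        if cumulative >= top_p:
            break
    tokens = [tok for tok, _ in nucleus]
    weights = [prob for _, prob in nucleus]
    return rng.choices(tokens, weights=weights, k=1)[0]


def sample_next_token(logits, generated, rng=random):
    """One decoding step with this README's settings (penalty 1.2, 3-grams, T=0.9, top_p=0.92)."""
    scores = apply_repetition_penalty(logits, generated, penalty=1.2)
    for tok in banned_ngram_tokens(generated, n=3):
        scores[tok] = float("-inf")  # a banned token gets zero probability
    return top_p_sample(scores, temperature=0.9, top_p=0.92, rng=rng)


rng = random.Random(0)
toy_logits = {"the": 2.0, "model": 1.5, "answer": 1.0, "banana": -3.0}
print(sample_next_token(toy_logits, ["the", "model"], rng=rng))
```

Lowering `top_p` shrinks the nucleus toward greedy decoding, while raising `temperature` flattens the distribution before the nucleus is chosen.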

💾 Save Model

After training, the model and tokenizer are saved for future use.

```python
model_save_path = "./saved_model"
trainer.save_model(model_save_path)         # model weights and config
tokenizer.save_pretrained(model_save_path)  # tokenizer files next to the model
```
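Before reloading the checkpoint (for example with `BartForConditionalGeneration.from_pretrained(model_save_path)` and `AutoTokenizer.from_pretrained(model_save_path)`) or uploading it, a quick check that the expected files exist can catch an incomplete save early. This stdlib sketch assumes the file names that `save_pretrained` writes in recent `transformers` releases (`config.json`, `tokenizer_config.json`, and `model.safetensors`, or `pytorch_model.bin` in older releases, possibly sharded with an `.index.json` file); `missing_artifacts` is a hypothetical helper.

```python
import os
import tempfile

# Assumed weight file names; older releases use pytorch_model.bin and large
# models may be sharded with an index file instead of a single weights file.
WEIGHT_FILES = (
    "model.safetensors",
    "model.safetensors.index.json",
    "pytorch_model.bin",
    "pytorch_model.bin.index.json",
)
REQUIRED_FILES = ("config.json", "tokenizer_config.json")


def missing_artifacts(save_dir):
    """Return descriptions of expected files that are absent from `save_dir`."""
    present = set(os.listdir(save_dir)) if os.path.isdir(save_dir) else set()
    missing = [name for name in REQUIRED_FILES if name not in present]
    if not present.intersection(WEIGHT_FILES):
        missing.append("model weights: one of " + ", ".join(WEIGHT_FILES))
    return missing


# Demo on an empty temporary directory: every expected artifact is reported.
with tempfile.TemporaryDirectory() as demo_dir:
    print(missing_artifacts(demo_dir))

# Hypothetical check after the save step:
# problems = missing_artifacts("./saved_model")
# if problems:
#     raise SystemExit(f"Incomplete checkpoint, missing: {problems}")
```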

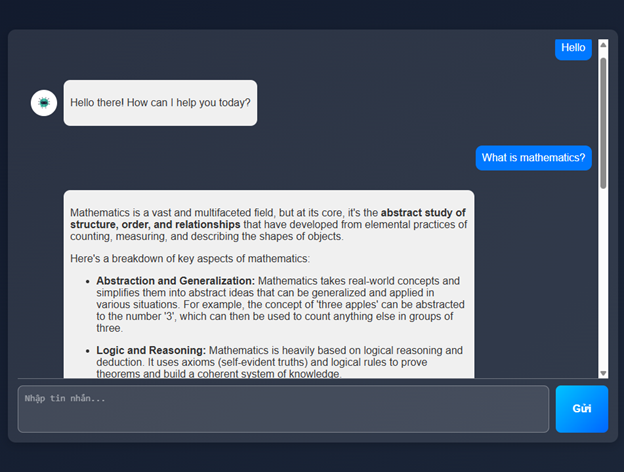

📢 Running the Chatbot

Interactive chatbot session:

```python
if __name__ == "__main__":
    print("Chatbot is ready! Type your question or 'exit' to stop.")
    while True:
        prompt = input("You: ")
        if prompt.lower() == "exit":
            print("Chat ended.")
            break
        response = generate_text(prompt, max_length=200, num_return_sequences=1)
        print(f"Chatbot: {response[0]}")
```
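The loop above mixes terminal I/O with generation, which makes it hard to test. One possible refactor, sketched here as an assumption rather than the project's own code, passes the reply function and the read/write functions in as arguments; it also ends the session cleanly on EOF (Ctrl-D) and skips blank input. `chat_loop` is a hypothetical name.

```python
def chat_loop(respond, read=input, write=print):
    """Interactive chat with injectable I/O.

    `respond` maps a prompt string to a reply string. The session ends when the
    user types "exit" (any capitalization) or input runs out (EOF / Ctrl-D).
    """
    write("Chatbot is ready! Type your question or 'exit' to stop.")
    while True:
        try:
            prompt = read("You: ").strip()
        except EOFError:
            break
        if prompt.lower() == "exit":
            break
        if not prompt:
            continue  # skip blank lines instead of generating from an empty prompt
        write(f"Chatbot: {respond(prompt)}")
    write("Chat ended.")


# Wiring it to the model (assumes generate_text from the text-generation step):
# if __name__ == "__main__":
#     chat_loop(lambda prompt: generate_text(prompt, max_length=200)[0])
```

In a test, `read` can pull from a list of scripted inputs and `write` can append to a list, so no model or terminal is needed.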

📤 Upload Model to Hugging Face

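You can upload the trained model to the Hugging Face Hub for sharing. Authenticate first (for example with `huggingface-cli login`). `upload_folder` commits to an existing repository, so create the repository if it does not exist yet.

```python
from huggingface_hub import create_repo, upload_folder

repo_id = "your_username/your_model_name"  # placeholder: replace with your own repo
create_repo(repo_id, repo_type="model", exist_ok=True)  # no-op if the repo already exists
upload_folder(folder_path="./saved_model", repo_id=repo_id, repo_type="model")
```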

🎯 Next Steps

- Improve training with a larger dataset.

- Experiment with parameter-efficient fine-tuning (PEFT) methods such as LoRA.

- Deploy the chatbot as a FastAPI service.

📌 Author: ConThoBanSung

📅 Date: March 2025