Because sometimes, even AI needs a cheat sheet

Abstract

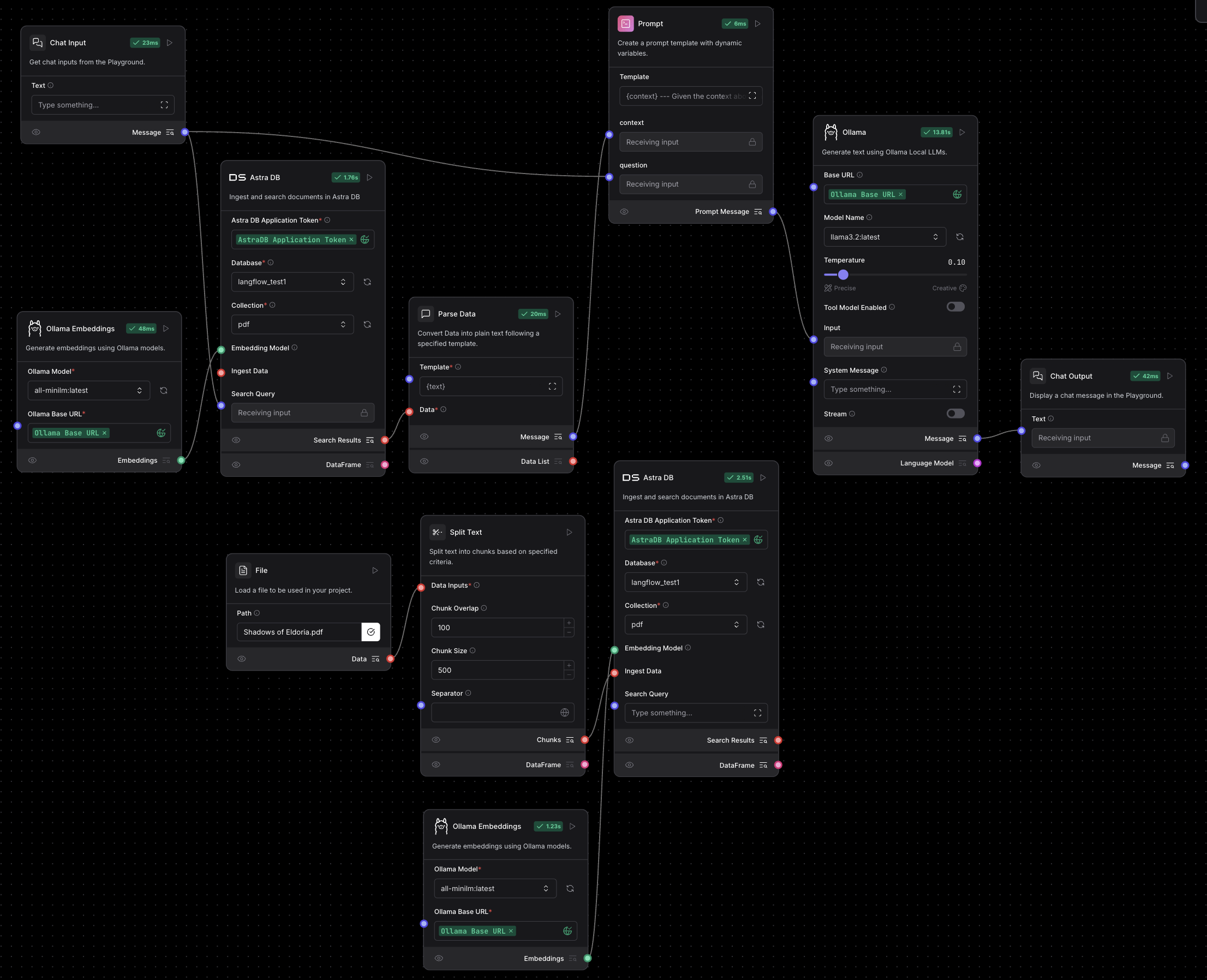

This project builds a Retrieval-Augmented Generation (RAG) pipeline that answers questions about Shadows of Eldoria, a fantasy story I wrote with ChatGPT. Think of it like teaching a robot to read a book and then quizzing it. The pipeline uses Langflow (a visual tool for building AI workflows), Astra DB (for storing and searching embeddings), and Ollama (to run AI models locally).

The twist? The AI models run on your laptop. No cloud LLM required. (Astra DB is the one hosted piece.)

Getting Started

Let's get this show on the road

Setup Instructions

- Download Ollama

Grab it from ollama.com. Then open your terminal and paste:

ollama pull llama3.2:latest

ollama pull all-minilm:latest

ollama serve # Keep this running!

Pro tip: If your laptop starts humming like a microwave, you're doing it right.

- Install Langflow

pip install langflow

langflow run # This starts the UI at http://localhost:7860

- Load the Pipeline

- Go to http://127.0.0.1:7860

- Import the Vector-Store-RAG.json file

- Boom. Your AI book club is ready.
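Before opening Langflow, it can help to confirm that both models actually got pulled. Here is a minimal sketch of that check. It assumes Ollama is listening on its default port (11434) and uses its `/api/tags` endpoint to list local models; the helper names `missing_models`, `fetch_tags`, and `report` are made up for this example.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"
REQUIRED_MODELS = ["llama3.2:latest", "all-minilm:latest"]


def missing_models(tags_response: dict, required: list[str]) -> list[str]:
    """Return the required model names that are absent from an /api/tags response."""
    installed = {model.get("name") for model in tags_response.get("models", [])}
    return [name for name in required if name not in installed]


def fetch_tags(url: str = OLLAMA_TAGS_URL) -> dict:
    """Ask the local Ollama server which models it has pulled."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return json.load(response)


def report() -> None:
    """Print a one-line status; call this by hand once `ollama serve` is up."""
    try:
        missing = missing_models(fetch_tags(), REQUIRED_MODELS)
    except OSError as exc:  # covers connection refused and timeouts
        print(f"Could not reach Ollama: {exc}")
        return
    print("All set!" if not missing else f"Still need to pull: {missing}")
```

Call `report()` from a Python shell. If it lists a missing model, rerun the matching `ollama pull` command.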

How It Works

Pipeline Breakdown

- Upload Your Document

I used a 3,000-word fantasy story, but you could use anything:

- Recipes

- Legal docs

- Your grandma's shopping list

- Chop & Embed

- Cuts text into 500-character chunks (with 100-character overlaps)

- Turns each chunk into a list of numbers (an embedding) using the all-minilm model running in Ollama

Imagine Google Translate, but for human → robot.
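The chop-and-embed step can be sketched in a few lines. This is a simplified stand-in, not what the Langflow nodes do internally: `chunk_text` is a plain sliding window (Langflow's splitter may also respect separators such as newlines), and `embed` assumes Ollama's `/api/embeddings` endpoint on the default port. Both function names are invented for illustration.

```python
import json
import urllib.request


def chunk_text(text: str, chunk_size: int = 500, overlap: int = 100) -> list[str]:
    """Split text into fixed-size character windows that share `overlap` characters."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # each window starts this far after the previous one
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str,
          model: str = "all-minilm:latest",
          url: str = "http://localhost:11434/api/embeddings") -> list[float]:
    """Ask a local Ollama server for the embedding of one piece of text.

    Assumption: Ollama is running on its default port with the model pulled.
    """
    payload = json.dumps({"model": model, "prompt": text}).encode("utf-8")
    request = urllib.request.Request(
        url, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)["embedding"]
```

With the defaults, each chunk starts 400 characters after the previous one, so the last 100 characters of one chunk reappear at the start of the next. That overlap keeps a sentence that straddles a boundary from being lost to both sides.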

- Store & Search

Chunks get saved in Astra DB. When you ask a question:

- It finds the 5 stored chunks closest in meaning to your question

- Feeds them to Llama3.2 (the brain)

- Generates answers like a student cribbing from notes
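In the real pipeline, Astra DB does the similarity search for you. To show the idea without a database, here is a rough in-memory sketch. It assumes cosine similarity as the distance measure, and the prompt wording in `build_prompt` is made up for this example rather than taken from the Langflow flow.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vector: list[float],
          stored: list[tuple[str, list[float]]],
          k: int = 5) -> list[str]:
    """Return the text of the k stored chunks most similar to the query vector."""
    ranked = sorted(
        stored,
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:k]]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Glue the retrieved chunks and the question into one prompt for the LLM."""
    context = "\n\n".join(context_chunks)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )
```

The prompt is the "cribbing from notes" part: the model only sees the handful of chunks that matched, not the whole story.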

Make It Yours

Customization options even your cat could use

Swap These Parts

- Vector Databases

Don't like Astra DB? Try:

- Chroma DB (free & local)

- Pinecone (for heavy lifting)

How? Just update the "Vector Store" node in Langflow.

- AI Models

Llama3.2 not the right fit? Use:

- Mistral (another local option; its 7B default is larger than the 3B Llama3.2, so it will likely run slower)

- Google Gemini (if you want cloud)

Replace the "OllamaModel" component with any other model component.

- Embeddings

Ollama not your jam? Switch to OpenAI embeddings. Just change the "Embeddings" node.

What's Missing?

The "uh-oh" list!

- Evaluation Framework

Right now, I judge answers by reading them. Not exactly science.
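A first step away from eyeballing could be a tiny keyword check. This sketch is not part of the project: `keyword_score` and `evaluate` are hypothetical helpers, and `ask` stands for whatever function sends a question to your pipeline and returns the answer text.

```python
from typing import Callable


def keyword_score(answer: str, expected_keywords: list[str]) -> float:
    """Fraction of expected keywords that appear in the answer (case-insensitive)."""
    if not expected_keywords:
        return 0.0
    answer_lower = answer.lower()
    hits = sum(1 for keyword in expected_keywords if keyword.lower() in answer_lower)
    return hits / len(expected_keywords)


def evaluate(ask: Callable[[str], str],
             cases: list[tuple[str, list[str]]]) -> dict[str, float]:
    """Run every (question, expected_keywords) case and return a score per question."""
    return {question: keyword_score(ask(question), keywords)
            for question, keywords in cases}
```

You would fill `cases` with questions whose answers you already know from the story. It is crude (a wrong answer can still contain the right words), but it turns "looks fine to me" into a number you can compare between runs.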

- Deployment

It lives on my laptop. To share it, I'd need to:

- Dockerize the setup

- Add user authentication

- Monitoring

What if the database crashes mid-query? No alerts exist.

Baby step: Add logging.

- Audience Clarity

Built for developers.

- Performance Stats

How fast is it? No idea.
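The logging baby step and the missing speed numbers could both start from one small wrapper. This is a sketch under assumptions: `timed_query` is a made-up helper, and `ask` is any function that takes a question and returns the pipeline's answer.

```python
import logging
import time
from typing import Callable

logger = logging.getLogger("rag_pipeline")


def timed_query(ask: Callable[[str], str], question: str) -> tuple[str, float]:
    """Run ask(question), log how long it took, and log any failure before re-raising."""
    start = time.perf_counter()
    try:
        answer = ask(question)
    except Exception:
        # logger.exception records the traceback along with the message
        logger.exception(
            "Query failed after %.2fs: %r", time.perf_counter() - start, question
        )
        raise
    elapsed = time.perf_counter() - start
    logger.info("Answered %r in %.2fs", question, elapsed)
    return answer, elapsed
```

Call `logging.basicConfig(level=logging.INFO)` once at startup to see the timing lines. If the database dies mid-query, the failure at least lands in the log with a traceback instead of vanishing.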

Why This Matters

Beyond my laptop's overheating issues

Real-World Uses

- Customer Support

Train it on your FAQ docs.

- Education

Let students query textbooks naturally.

- Personal Use

I once built a version that explains my own notes back to me.

The big picture? This is LEGO for AI pipelines.

Your Turn

What should you do next?

- Steal The Setup

Follow the instructions above.

- Break It

Try uploading a Wikipedia article instead of a story.

- Tweak

Swap Llama3.2 for Mistral. Notice speed differences?

Remember: AI isn't magic. It's just a very eager intern.

Final Thoughts

Building this felt like teaching a parrot to read. Slow. Frustrating. But when it finally answered "Who is the villain in Eldoria?" correctly? Chef's kiss.

Good AI isn't about smart models. It's about smart pipelines.

Now go build yours.