ChatGPT, Claude, Perplexity, and Gemini integrations for chat, real-time information retrieval, and text processing tasks, such as paraphrasing, simplifying, or summarizing.

With support for third-party proxies and local LLMs.

1 / Setup

- Configure Hotkeys to quickly view the current chat, archive, and inference actions.

- For instance, ⌥⇧A, ⌘⇧A, and ⌥⇧I (recommended).

- Install the SF Pro font from Apple to display icons.

- Enter your API keys for the services you want to use.

- Configure your proxy or local host settings in the Environment Variables (optional).

- For example configurations, see the wiki.

- Install pandoc to pin conversations (recommended).

2 / Usage

2.1 Ayai Chat

Converse with your configured Primary service via the ask keyword, the Universal Action, or the Fallback Search.

- ↩ Continue the ongoing chat.

- ⌘↩ Start a new conversation.

- ⌥↩ View the chat history.

- Hidden Option

- ⌘⇧↩ Open the workflow configuration.

2.1.1 Chat Window

- ↩ Ask a question.

- ⌘↩ Start a new conversation.

- ⌥↩ Copy the last answer.

- ⌃↩ Copy the full conversation.

- ⇧↩ Stop generating an answer.

- ⌘⌃↩ View the chat history.

- Hidden Options

- ⇧⌥↩ Show configuration info in the HUD.

- ⇧⌃↩ Speak the last answer out loud.

- ⇧⌘↩ Edit a multi-line prompt in a separate window, which offers these options:

- ⇧↩ Switch between the editor and the Markdown preview.

- ⌘↩ Ask the question.

- ⇧⌘↩ Start a new conversation.

Sticky Preview example:

2.1.2 Chat History

- Search: Type to filter archived chats based on your query.

- ↩ Continue archived conversation.

- ⇥ Open conversation details.

- ⌃ View the message count, creation date, and modification date.

- ⇧ View message participation details.

- ⇧⌥ View available tags or keywords.

- ⌘↩ Reveal the chat file in Finder.

- ⌘L Inspect the unabridged preview as Large Type.

- ⌘⇧↩ Send conversation to the trash.

!Bang Filters

Type ! to see all currently available filters and press ↩ to apply one.

2.1.3 Conversation Details

Conversations can be marked as favorites, pinned, or both. Pinned conversations always stay on top, while favorites can be filtered and searched further via the !fav bang filter.

- ⌘1 · Same as in the chat history, except:

- ⇥ Go back to the chat history.

- ⌥↩ Open static preview of the conversation.

- ⌘Y (or tap ⇧) Open a Quick Look preview of the conversation.

- ⌘3

- ⌘L Inspect message and token details as Large Type.

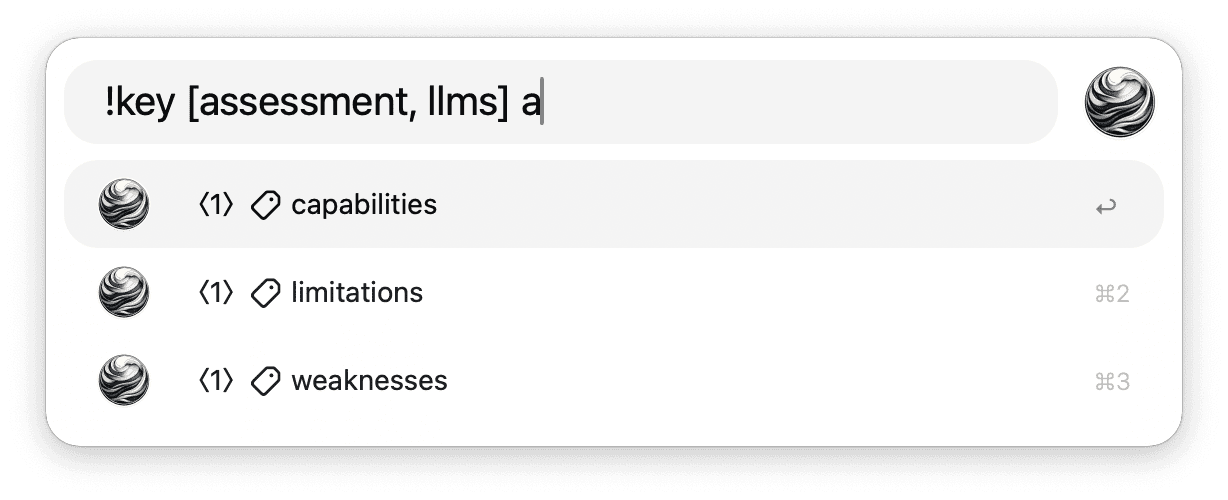

2.2 File Attachments

You can use Ayai to chat with your documents or attach images to your conversations.

2.2.1 Universal Action: Attach Document

By default, a summary is created when you start a new conversation with a document or attach one to an ongoing chat. You can also enter an optional prompt, which is taken into account.

Currently supported formats are PDF, DOCX, and all plain-text and source code files.

To extract text from DOCX files, Ayai uses pandoc if it is installed; otherwise, a crude workaround is used.
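The pandoc-or-fallback extraction described above can be sketched as follows. This is a minimal illustration, not Ayai's actual code: it assumes the fallback simply reads `word/document.xml` from the DOCX archive (a DOCX file is a ZIP archive) and joins the text runs of each paragraph. The function names `extract_docx_text` and `crude_docx_text` are hypothetical.

```python
import shutil
import subprocess
import zipfile
from xml.etree import ElementTree as ET

# WordprocessingML namespace used by the elements inside word/document.xml
W_NS = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def crude_docx_text(path: str) -> str:
    """Fallback: join the text runs (w:t) of each paragraph (w:p) in the DOCX archive."""
    with zipfile.ZipFile(path) as archive:
        root = ET.fromstring(archive.read("word/document.xml"))
    paragraphs = []
    for para in root.iter(W_NS + "p"):
        paragraphs.append("".join(node.text or "" for node in para.iter(W_NS + "t")))
    return "\n".join(paragraphs)


def extract_docx_text(path: str) -> str:
    """Prefer pandoc when it is on PATH, otherwise use the crude fallback."""
    if shutil.which("pandoc"):
        # pandoc converts the DOCX to plain text and writes it to stdout
        result = subprocess.run(
            ["pandoc", "--from=docx", "--to=plain", path],
            capture_output=True, text=True, check=True,
        )
        return result.stdout
    return crude_docx_text(path)
```

This fallback only reads the main document part, so content stored elsewhere in the archive, such as footnotes, is skipped.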

- ↩ (or ⌘↩) Summarize and ask, with an optional prompt, in a new chat.

- ⌥↩ Summarize and ask, with an optional prompt, in the ongoing chat.

- ⌘⇧↩ Ask with a prompt in a new chat, without summarizing.

- ⌥⇧↩ Ask with a prompt in the ongoing chat, without summarizing.

- ⌃↩ Edit a multi-line prompt in a separate Text View.

- There, the same options are available.

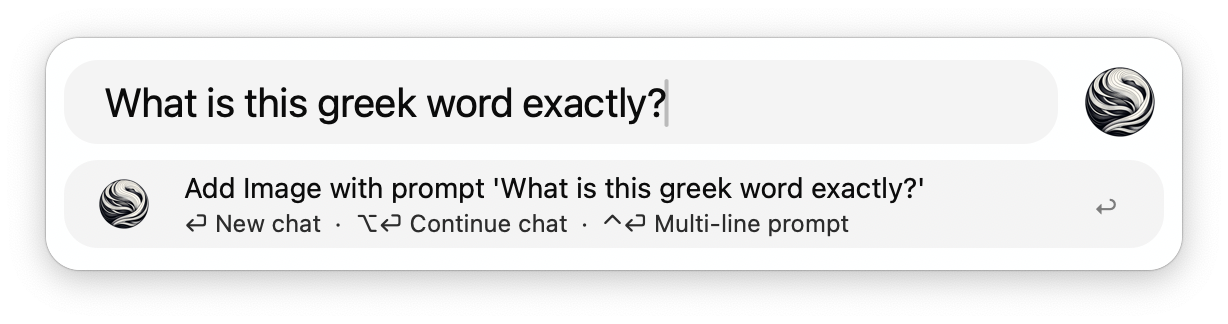

2.2.2 Universal Action: Attach Image

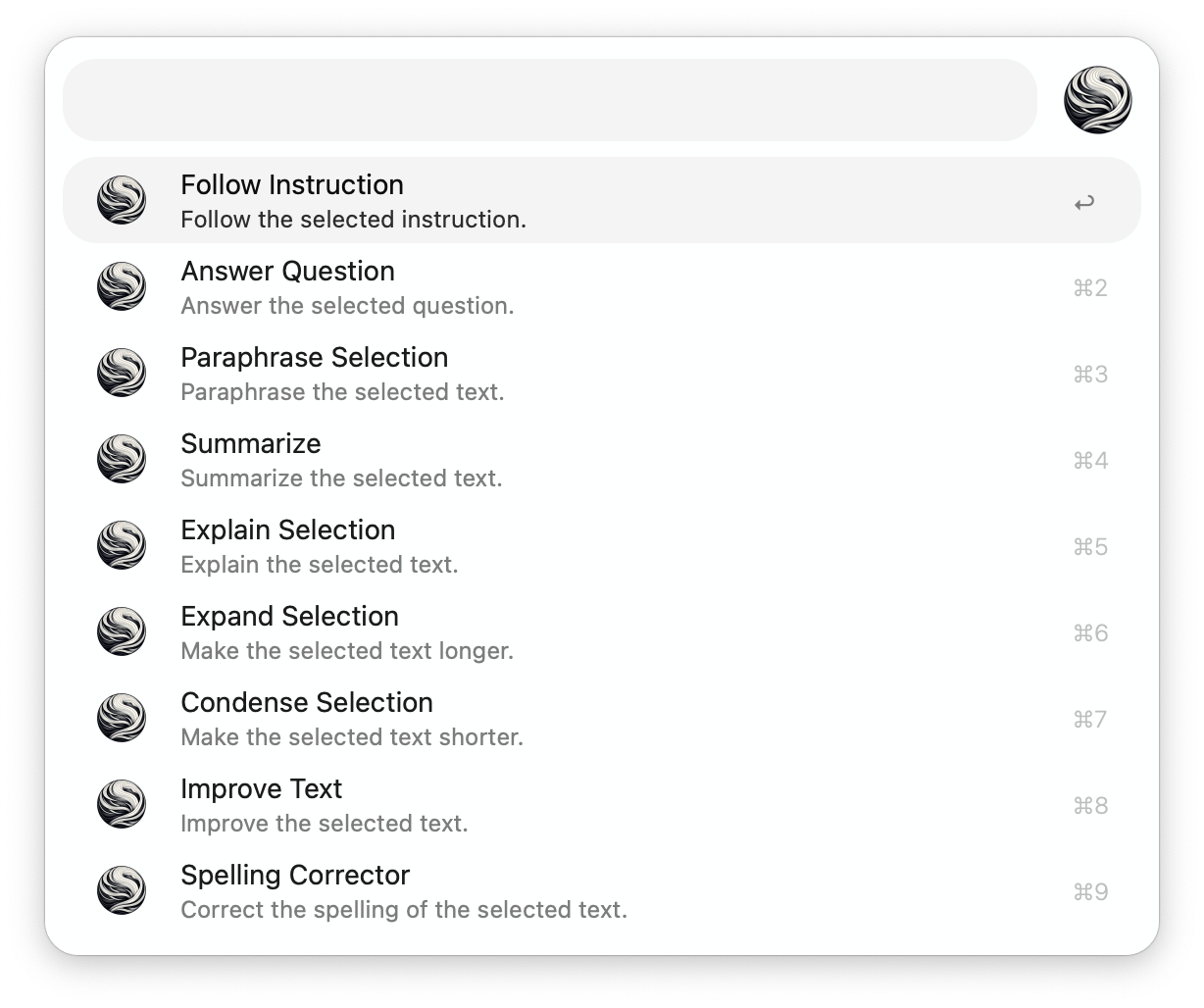

2.3 Inference Actions¹

Inference Actions provide a suite of language tools for text generation and transformation. These tools enable summarization, clarification, concise writing, and tone adjustment for selected text. They can also correct spelling, expand and paraphrase text, follow instructions, answer questions, and improve text in other ways.

Access a list of all available actions via the Universal Action or by setting the Hotkey trigger.

- ↩ Generate the result using the configured default strategy.

- ⌘↩ Paste the result and replace selection.

- ⌥↩ Stream the result and preserve selection.

- ⌃↩ Copy the result to the clipboard.

Tip: Write a detailed prompt directly into the Inference Action filter to customize your instruction or question.

Inference Action Customization

The inference actions are generated from a JSON file called actions.json, located in the workflow folder. You can customize existing actions or add new ones by editing this file directly, or by editing actions.config.pkl and then evaluating it with pkl to regenerate actions.json.
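A minimal sketch of the regeneration step, assuming `actions.config.pkl` evaluates directly to the structure Ayai expects in `actions.json` and that the `pkl` CLI is on your `PATH`. The helper names and the workflow folder argument are hypothetical; `--format` and `--output-path` are standard options of `pkl eval`.

```python
import json
import shutil
import subprocess
from pathlib import Path


def pkl_eval_command(config: Path, output: Path) -> list[str]:
    """Build a `pkl eval` call that renders a Pkl module as JSON into `output`."""
    return ["pkl", "eval", "--format", "json", "--output-path", str(output), str(config)]


def load_actions(path: Path):
    """Parse the generated JSON so a malformed file is caught right away."""
    return json.loads(path.read_text(encoding="utf-8"))


def regenerate_actions(workflow_dir: Path):
    """Evaluate actions.config.pkl into actions.json inside a (hypothetical) workflow folder."""
    if shutil.which("pkl") is None:
        raise RuntimeError("pkl is not installed or not on PATH")
    config = workflow_dir / "actions.config.pkl"
    output = workflow_dir / "actions.json"
    subprocess.run(pkl_eval_command(config, output), check=True)
    return load_actions(output)
```

Parsing the output immediately means a syntax error surfaces in your terminal rather than later, when the workflow tries to read the file.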

Important

Always back up your customized Inference Actions before updating the workflow or your changes will be lost.
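One way to follow this advice is a small backup script you run before each update. The sketch below assumes your customizations live in `actions.json` and, optionally, `actions.config.pkl` in the workflow folder; the function name and both folder arguments are hypothetical, so point them at your own locations.

```python
import shutil
from datetime import datetime
from pathlib import Path

# Files assumed to hold customized Inference Actions
CUSTOM_FILES = ("actions.json", "actions.config.pkl")


def back_up_actions(workflow_dir: Path, backup_root: Path) -> list[Path]:
    """Copy the customized action files into a timestamped folder and return the copies."""
    stamp = datetime.now().strftime("%Y%m%d-%H%M%S")
    target = backup_root / f"ayai-actions-{stamp}"
    target.mkdir(parents=True, exist_ok=False)  # refuse to overwrite an existing backup
    copies = []
    for name in CUSTOM_FILES:
        source = workflow_dir / name
        if source.exists():  # actions.config.pkl may be absent if you only edit the JSON
            copies.append(Path(shutil.copy2(source, target / name)))
    return copies
```

After updating, copy the files from the newest backup folder back into the workflow folder.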

3 / Prompting

A prompt is the text that you give the model to elicit, or "prompt," a relevant output. A prompt is usually in the form of a question or instructions.

References

- General prompt engineering guide

- OpenAI prompt engineering guide | Prompt Gallery

- Anthropic prompt engineering guide | Prompt Gallery

- Google AI prompt engineering guide | Prompt Gallery

4 / Configuration

Primary

The primary configuration setting determines the service that is used for conversations.

OpenAI Proxies²

If you want to use a third-party proxy, define the corresponding host, path, API key, model, and, if required, the URL scheme or port in the environment variables.

The variables are prefixed as alternatives to OpenAI, because Ayai expects the returned stream events and errors to mirror the shape of those returned by the OpenAI API.
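To illustrate what mirroring the OpenAI API means for streaming, here is a minimal parser for OpenAI-style chat completion events: each event is a `data:` line carrying a JSON chunk whose `choices[].delta.content` holds the next piece of text, and the stream ends with `data: [DONE]`. This sketches the format a proxy has to produce, not Ayai's own parser; the name `iter_content` is hypothetical, and treating an `error` object inside the stream as fatal is an assumption.

```python
import json
from typing import Iterable, Iterator


def iter_content(lines: Iterable[str]) -> Iterator[str]:
    """Yield text deltas from OpenAI-style server-sent event lines."""
    for raw in lines:
        line = raw.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and other SSE fields
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":
            return  # sentinel that terminates an OpenAI stream
        event = json.loads(payload)
        if "error" in event:
            err = event["error"]
            # OpenAI errors are objects with a "message"; some proxies may send a bare string
            message = err.get("message", "unknown error") if isinstance(err, dict) else str(err)
            raise RuntimeError(message)
        for choice in event.get("choices", []):
            text = (choice.get("delta") or {}).get("content")
            if text:
                yield text
```

A proxy whose events deviate from this shape (for example, putting text somewhere other than `delta.content`) would not be understood by a client that expects the OpenAI format.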

Local LMs³

If you want to use a local language model, define the corresponding URL scheme, host, port, path, and, if required, the model in the environment variables to connect to the local HTTP server, which you start and maintain with a tool of your choice.

The variables are prefixed as alternatives to OpenAI, because Ayai expects the returned stream events and errors to mirror the shape of those returned by the OpenAI API.
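The connection settings combine into a single endpoint URL. A minimal sketch, assuming an OpenAI-compatible chat completions path; the variable names read by `endpoint_from_env` (`<prefix>_scheme`, `<prefix>_host`, `<prefix>_port`, `<prefix>_path`) and its defaults are hypothetical placeholders, so check the workflow configuration for the real names.

```python
from typing import Mapping, Optional
from urllib.parse import urlunsplit


def build_endpoint(scheme: str, host: str, port: Optional[int], path: str) -> str:
    """Join scheme, host, optional port, and path into a request URL."""
    netloc = host if port is None else f"{host}:{port}"
    if not path.startswith("/"):
        path = "/" + path  # tolerate paths entered without a leading slash
    return urlunsplit((scheme, netloc, path, "", ""))


def endpoint_from_env(env: Mapping[str, str], prefix: str) -> str:
    """Read hypothetical <prefix>_scheme/_host/_port/_path variables, with assumed local defaults."""
    port = env.get(f"{prefix}_port")
    return build_endpoint(
        env.get(f"{prefix}_scheme", "http"),
        env.get(f"{prefix}_host", "localhost"),
        int(port) if port else None,
        env.get(f"{prefix}_path", "/v1/chat/completions"),
    )
```

With default settings, Ollama serves its OpenAI-compatible API on port 11434 and LM Studio on port 1234, so `build_endpoint("http", "localhost", 11434, "/v1/chat/completions")` yields `http://localhost:11434/v1/chat/completions`.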

Note: Additional stop sequences can be provided via the shared finish_reasons environment variable.
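A minimal sketch of how extra finish reasons could be merged with the defaults and checked against each streamed chunk. It assumes `finish_reasons` holds a comma-separated list of `finish_reason` values (for example, a value your local server emits instead of `stop`) and that `stop` and `length` are always recognized; the separator, the default set, and the helper names are all assumptions.

```python
from typing import Any, FrozenSet, Mapping

# Assumed defaults: the OpenAI values for a natural stop and a token-limit cutoff
DEFAULT_FINISH_REASONS: FrozenSet[str] = frozenset({"stop", "length"})


def finish_reasons(env: Mapping[str, str]) -> FrozenSet[str]:
    """Merge the comma-separated finish_reasons variable (assumed format) with the defaults."""
    extra = env.get("finish_reasons", "")
    custom = {item.strip() for item in extra.split(",") if item.strip()}
    return DEFAULT_FINISH_REASONS | custom


def is_finished(chunk: Mapping[str, Any], reasons: FrozenSet[str]) -> bool:
    """True if any choice in an OpenAI-style chunk reports a recognized finish_reason."""
    return any(choice.get("finish_reason") in reasons for choice in chunk.get("choices", []))
```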

Footnotes

¹ Ayai will make sure that the frontmost application accepts text input before streaming or pasting, and will simply copy the result to the clipboard if it does not. This requires accessibility access, which you may need to grant in order to use inference actions. Note: You can override this behaviour with the Safety Exception configuration option.

² Third-party proxies such as OpenRouter, Groq, Fireworks, or Together.ai (see the wiki).

³ Local HTTP servers can be set up with interfaces such as LM Studio or Ollama.