Project Documentation: Seeker - Autonomous Deep Research Agent

Abstract

Seeker is a local research assistant designed to automate iterative, deep-dive investigations. It uses search engines, web scraping (Firecrawl), and large language models (LLMs) to research topics based on user prompts. Seeker runs locally and can be used through either a command-line interface (CLI) or a REST API. The system features configurable research breadth and depth, supports multiple LLM providers (OpenAI, Fireworks.ai, custom endpoints), and generates outputs as either detailed markdown reports or concise answers.

1. Introduction

The Seeker project aims to streamline and automate the complex process of online research. Traditional research often involves manually generating search queries, sifting through numerous web pages, extracting relevant information, and synthesizing findings. Seeker automates these steps by leveraging AI. It takes an initial user query, optionally clarifies the research intent through follow-up questions (in CLI mode), generates relevant search engine queries, scrapes web content, extracts structured learnings using an LLM, and recursively deepens the research based on findings. The final output consolidates the gathered knowledge into a structured report or a direct answer. The system is implemented in TypeScript and designed for local execution.

2. Methodology

The research process in Seeker follows a structured workflow orchestrated primarily by the src/deep-research.ts module:

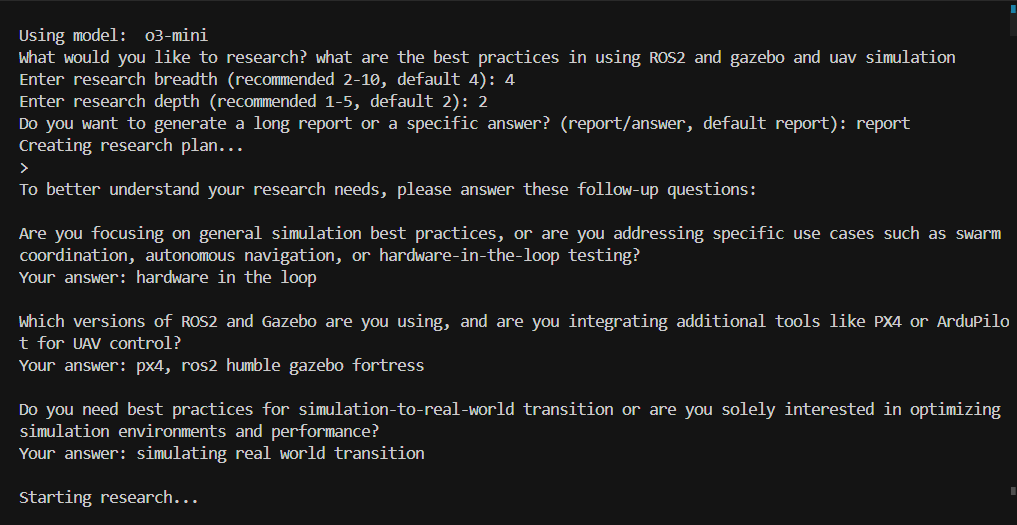

- User Input & Clarification: The process begins with a user-provided research query. In CLI mode (

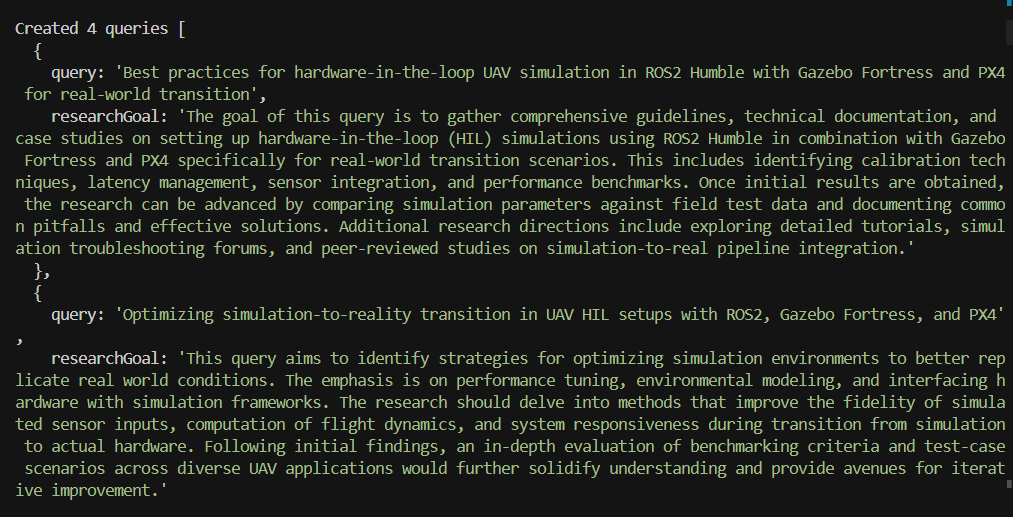

src/run.ts), the system can optionally generate follow-up questions using an LLM (src/feedback.ts) to refine the research scope and intent based on the initial query. The user's answers are incorporated to form a more detailed research prompt. For API usage (src/api.ts), the user provides the query, breadth, and depth directly. - Query Generation: Based on the refined prompt and any learnings from previous iterations (if applicable), an LLM generates a set of search engine (SERP) queries. The number of queries generated per iteration is controlled by the

breadthparameter specified by the user. This step aims to break down the main research topic into smaller, targeted search tasks. - Web Scraping: Each generated SERP query is executed using the Firecrawl web scraping service (

@mendable/firecrawl-js). Seeker fetches the content from the search results, typically requesting markdown format for easier processing. Web scraping requests are parallelized using thep-limitlibrary to improve efficiency, with the concurrency level configurable via theRESEARCH_CONCURRENCYenvironment variable. - Learning Extraction: The markdown content scraped from the web is processed by an LLM. The LLM's task is to summarize the content, extract key learnings, and potentially identify further questions or research avenues based on the findings. Learnings are designed to be concise, information-dense, and include specific entities, metrics, or dates. Long texts are chunked using a

RecursiveCharacterTextSplitter(src/ai/text-splitter.ts) to fit within the LLM's context window (MAX_TOKENS). - Recursion (Depth-first Exploration): If the user-specified

depthparameter is greater than 1, the process iterates. New SERP queries are generated based on the accumulated learnings from the previous cycle. This allows Seeker to delve deeper into specific sub-topics identified during the initial breadth-first exploration. The cycle of query generation, scraping, and learning extraction repeats until the maximum depth is reached. - Output Generation: Upon completion of the research cycles (reaching the specified depth), Seeker synthesizes the results. Based on user preference (specified in CLI or implicitly via API endpoint structure), it generates either:

- Detailed Report: A comprehensive markdown document (

report.mdin CLI mode) including all extracted learnings, structured findings, and a list of visited URLs as sources. The LLM is prompted to write a detailed report based on the initial query and all accumulated learnings. - Concise Answer: A direct, short answer (

answer.mdin CLI mode, or theanswerfield in the API response) to the original query, synthesized from the learnings. The LLM is specifically prompted for brevity and adherence to the original question's format if applicable.


- LLM Abstraction: The system uses an abstraction layer (src/ai/providers.ts) to interact with different LLM providers (OpenAI, Fireworks.ai), selected via environment variables (OPENAI_KEY, FIREWORKS_KEY, OPENAI_BASE_URL, OPENAI_MODEL). This lets users switch the backend model, including a custom endpoint, through configuration alone.
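As an illustration of the environment-based selection described in the LLM Abstraction step, the following TypeScript sketch maps environment variables to a provider configuration. It is not taken from src/ai/providers.ts: the ProviderConfig type, the selectProvider function, and the rule that FIREWORKS_KEY takes precedence over OPENAI_KEY are assumptions made for this example.

```typescript
// Hypothetical sketch of environment-based provider selection.
// ASSUMPTION: FIREWORKS_KEY takes precedence over OPENAI_KEY; Seeker's
// actual precedence rules in src/ai/providers.ts may differ.
type Env = Record<string, string | undefined>;

interface ProviderConfig {
  provider: "fireworks" | "openai";
  apiKey: string;
  baseURL?: string; // custom OpenAI-compatible endpoint, if configured
  model?: string; // model override, if configured
}

function selectProvider(env: Env): ProviderConfig {
  // Assumed rule: use Fireworks.ai whenever its key is present.
  if (env.FIREWORKS_KEY) {
    return { provider: "fireworks", apiKey: env.FIREWORKS_KEY };
  }
  if (env.OPENAI_KEY) {
    return {
      provider: "openai",
      apiKey: env.OPENAI_KEY,
      // Empty strings are treated as "not set".
      baseURL: env.OPENAI_BASE_URL || undefined,
      model: env.OPENAI_MODEL || undefined,
    };
  }
  throw new Error("No LLM provider configured: set FIREWORKS_KEY or OPENAI_KEY");
}
```

In a running process this could be called as selectProvider(process.env); taking the environment as a parameter keeps the selection logic easy to test with plain objects.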

Workflow diagram stages: Clarification of Search Intent; Research Process; End of Task.
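The research loop described in this section can be approximated by the following simplified TypeScript sketch. It runs level by level: each level asks for up to breadth queries using all learnings gathered so far, scrapes and extracts learnings under a concurrency cap, and repeats depth times. The Deps interface, the research and limitConcurrency functions, and the default concurrency of 2 are hypothetical. generateQueries, scrape, and extractLearnings stand in for Seeker's LLM and Firecrawl calls, and limitConcurrency is a minimal stand-in for the p-limit library, not its API. Seeker's actual control flow in src/deep-research.ts may differ, for example in how breadth changes between levels.

```typescript
// Hypothetical, simplified sketch of a breadth/depth research loop.
// ASSUMPTION: the three Deps functions stand in for Seeker's LLM and Firecrawl calls.
interface Deps {
  generateQueries(prompt: string, learnings: string[], n: number): Promise<string[]>;
  scrape(query: string): Promise<{ url: string; markdown: string }[]>;
  extractLearnings(query: string, pages: string[]): Promise<string[]>;
}

// Minimal stand-in for p-limit (not its API): runs tasks with at most `limit`
// in flight and returns results in input order.
async function limitConcurrency<T>(tasks: Array<() => Promise<T>>, limit: number): Promise<T[]> {
  const results: T[] = new Array(tasks.length);
  let next = 0;
  const worker = async (): Promise<void> => {
    // Each worker claims the next unstarted task until none remain.
    while (next < tasks.length) {
      const i = next++;
      results[i] = await tasks[i]();
    }
  };
  const workerCount = Math.max(1, Math.min(limit, tasks.length));
  await Promise.all(Array.from({ length: workerCount }, () => worker()));
  return results;
}

async function research(
  prompt: string,
  breadth: number,
  depth: number,
  deps: Deps,
  concurrency = 2, // hypothetical default; Seeker configures this via RESEARCH_CONCURRENCY
): Promise<{ learnings: string[]; visitedUrls: string[] }> {
  const learnings: string[] = [];
  const visitedUrls = new Set<string>();
  for (let level = 0; level < depth; level++) {
    // Breadth: up to `breadth` SERP queries, informed by all learnings so far.
    const queries = (await deps.generateQueries(prompt, learnings.slice(), breadth)).slice(0, breadth);
    const perQuery = await limitConcurrency(
      queries.map((query) => async () => {
        const pages = await deps.scrape(query);
        pages.forEach((page) => visitedUrls.add(page.url));
        return deps.extractLearnings(query, pages.map((page) => page.markdown));
      }),
      concurrency,
    );
    // Depth: this level's learnings feed query generation at the next level.
    perQuery.forEach((items) => learnings.push(...items));
  }
  return { learnings: Array.from(new Set(learnings)), visitedUrls: Array.from(visitedUrls) };
}
```

Injecting the three steps as functions keeps the control flow testable with stubs; in a real setup they would wrap the configured LLM provider and the Firecrawl client.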

3. Results

The primary outputs of the Seeker system are:

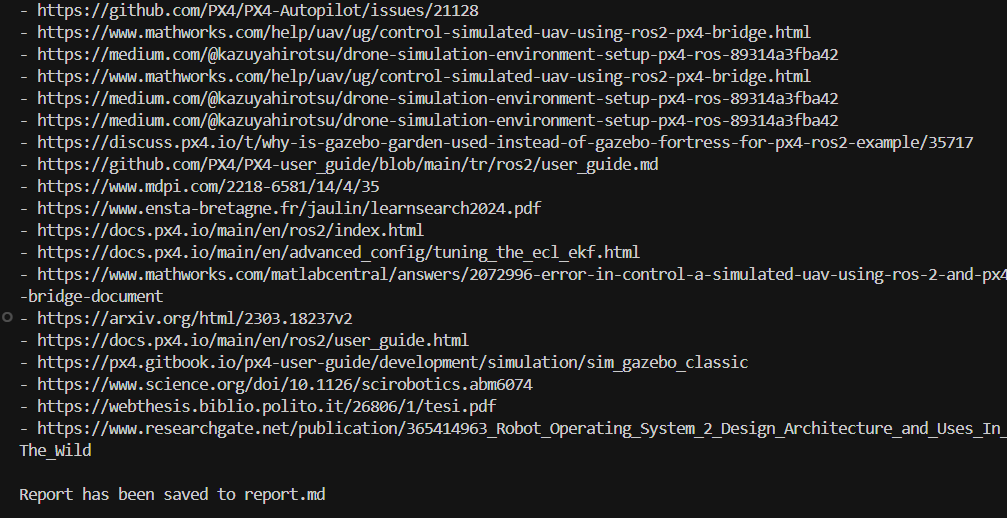

- Accumulated Learnings: A list of concise, structured pieces of information extracted from the web content during each research iteration.

- Visited URLs: A list of source URLs from which the learnings were derived.

- Final Report/Answer: Depending on the user's choice, either a detailed markdown report synthesizing all learnings or a concise answer to the initial query.
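A hypothetical TypeScript shape for these outputs, plus a helper that appends the visited URLs to a markdown report as a deduplicated source list, is sketched below. The field names learnings and visitedUrls match the fields of the API response; the ResearchResult interface, the appendSources function, and the "## Sources" heading are assumptions for illustration, not Seeker's documented report format.

```typescript
// Hypothetical shape of the research outputs (field names follow the API response).
interface ResearchResult {
  learnings: string[];
  visitedUrls: string[];
}

// Illustrative helper: appends a deduplicated source list to a markdown report.
// ASSUMPTION: the "## Sources" heading is not Seeker's documented format.
function appendSources(reportBody: string, result: ResearchResult): string {
  // A Set keeps the first occurrence of each URL and preserves insertion order.
  const uniqueUrls = Array.from(new Set(result.visitedUrls));
  const sourceList = uniqueUrls.map((url) => `- ${url}`).join("\n");
  return `${reportBody.trimEnd()}\n\n## Sources\n\n${sourceList}\n`;
}
```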

Example CLI Session Flow:

- User starts the application (npm start).

- User provides an initial research query (e.g., "Effects of climate change on coffee production").

- User sets breadth (e.g., 4) and depth (e.g., 2).

- User chooses output format (e.g., "report").

- System generates follow-up questions (e.g., "What region?", "Economic impacts?").

- User provides answers (e.g., "Brazil", "Economic").

- System performs 2 levels (depth) of research, generating 4 queries (breadth) in the first level, potentially fewer in the second level based on learnings.

- System scrapes content via Firecrawl for each query.

- System extracts learnings using an LLM.

- System generates a final markdown report (report.md) containing synthesized findings and source URLs.
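In this session the user's answers to the follow-up questions are folded into a more detailed research prompt. A minimal TypeScript sketch of that combination follows; the FollowUp interface, the buildResearchPrompt function, and the prompt layout are hypothetical, and src/run.ts may format the combined prompt differently.

```typescript
// Hypothetical sketch: combine the initial query with follow-up answers.
// ASSUMPTION: the "Initial query / Q: / A:" layout is illustrative only.
interface FollowUp {
  question: string;
  answer: string;
}

function buildResearchPrompt(initialQuery: string, followUps: FollowUp[]): string {
  const qa = followUps
    .filter((f) => f.answer.trim() !== "") // skip questions the user left blank
    .map((f) => `Q: ${f.question}\nA: ${f.answer.trim()}`)
    .join("\n");
  return qa
    ? `Initial query: ${initialQuery}\nFollow-up questions and answers:\n${qa}`
    : `Initial query: ${initialQuery}`;
}
```

For the session above, passing the query "Effects of climate change on coffee production" with the answers "Brazil" and "Economic" yields a prompt that lists both question-and-answer pairs after the initial query.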

API Interaction:

- User sends a POST request to /api/research.

- Request body includes query, breadth, and depth.

- The API triggers the deepResearch function.

- Upon completion, the API generates a concise answer using writeFinalAnswer.

- The API responds with a JSON object containing { success: true, answer, learnings, visitedUrls }.
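A client for this endpoint might look like the following TypeScript sketch. The request fields and the success response shape come from the steps above. The ResearchRequest, ResearchResponse, and FetchLike types, the runResearch and isResearchResponse functions, and the use of a JSON request body with a Content-Type header are assumptions for illustration.

```typescript
// Hypothetical client sketch for POST /api/research.
// ASSUMPTION: the request body is sent as JSON; FetchLike is a minimal
// fetch-compatible type so the example has no runtime dependencies.
interface ResearchRequest {
  query: string;
  breadth: number;
  depth: number;
}

interface ResearchResponse {
  success: true;
  answer: string;
  learnings: string[];
  visitedUrls: string[];
}

type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string },
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Narrows an unknown JSON value to the documented success shape.
function isResearchResponse(value: unknown): value is ResearchResponse {
  const v = value as Partial<ResearchResponse> | null;
  return (
    typeof v === "object" &&
    v !== null &&
    v.success === true &&
    typeof v.answer === "string" &&
    Array.isArray(v.learnings) &&
    Array.isArray(v.visitedUrls)
  );
}

async function runResearch(baseUrl: string, req: ResearchRequest, fetchFn: FetchLike): Promise<ResearchResponse> {
  const res = await fetchFn(`${baseUrl}/api/research`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(req),
  });
  if (!res.ok) throw new Error(`Research request failed with HTTP ${res.status}`);
  const data = await res.json();
  if (!isResearchResponse(data)) throw new Error("Unexpected response shape");
  return data;
}
```

In Node.js 18 or later, or in a browser, the global fetch can be passed as fetchFn. The base URL depends on where the Seeker API server is listening and is not specified here.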

4. Conclusion

Seeker provides a functional framework for automating deep-dive online research. By combining iterative query generation, web scraping, and LLM-based learning extraction, it can explore topics with configurable breadth and depth. The system offers both CLI and API interfaces, supports multiple LLM backends, and produces structured outputs in the form of reports or concise answers. While reliant on the quality of LLM outputs and scraped web content, Seeker demonstrates a practical approach to automating complex research tasks locally. The codebase reflects the described features and workflow.

5. Limitations

- Dependency on LLM Accuracy: The quality of generated queries, extracted learnings, and final reports is highly dependent on the performance of the chosen LLM.

- Web Content Availability: The system relies on the availability and accessibility of relevant web content via Firecrawl. Paywalls or dynamic content might pose challenges.

- Lack of Semantic Filtering: The current implementation does not incorporate advanced semantic filtering or knowledge graph construction beyond basic LLM summarization.

- No Verification: Information extracted is based on web content and LLM interpretation; there's no built-in fact-checking mechanism.