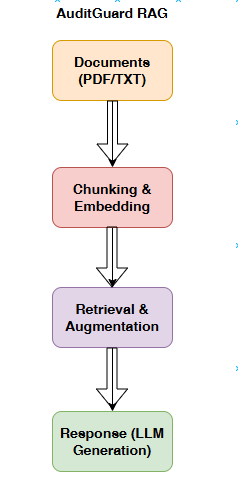

Imagine you're assigned to audit a corporation. You have to read procedures, policies, guidelines, and previous audits, you're short on time, and you still need to prepare a checklist. An audit engagement sounds exhausting, right? AuditGuard is my AI-powered fix: a Retrieval-Augmented Generation (RAG) chatbot that turns dense audit docs into instant, sourced insights. With a Flask backend and React frontend, it taps Groq's Llama-3.3-70B-Versatile model to deliver precise answers grounded in your PDFs and TXTs. It builds a dynamic knowledge base with custom chunking, persists chats in SQLite, and exports summaries for easy audits. For pros juggling compliance queries, it's the end-to-end hero slashing search time while keeping things traceable and ethical.

Auditing's a high-wire act: one missed detail in financial filings could mean big trouble. I've felt that pinch, with queries like "What screams 'expense fraud' in these SOX docs?" turning into marathons of manual digging. Traditional tools? They glitch on context or speed. That's why I built AuditGuard: to help auditors like you reclaim your time and sanity. Enter my open-source RAG beast that anchors LLM smarts in your proprietary files for source-grounded accuracy.

In this guide, I'll unpack the build—from ingestion to evals—plus tips to adapt it for your workflow. Why bother? It zips queries from hours to seconds, dodges high-stakes slip-ups, and bakes in ethical guardrails like source citations. Perfect for compliance teams ready to level up.

Why It Matters: In a world of rising regs (SOX, GDPR), AuditGuard isn't just faster—it's safer, fostering trust in AI without the hallucination headaches.

I built AuditGuard to launch quick—no PhD required. (Needs Python 3.10+ and React 18+; snag 'em if missing.)

Prerequisites

Install: pip install -r requirements.txt. Covers LangChain, ChromaDB, Sentence Transformers, Groq, and Flask; the Node.js packages are installed in the frontend step.

Config: set your Groq key, model, and chunking parameters:

    groq:
      api_key: "your_groq_key_here"
      model: "llama-3.3-70b-versatile"
    chunking:
      size: 500
      overlap: 50

Documents: put your files in ./data/. Start with samples for testing.

Backend: cd backend && flask run --port=5000. Runs at http://localhost:5000 and auto-ingests docs into the vector DB; watch the logs for progress.

Frontend: cd frontend && npm install && npm start. UI pops at http://localhost:3000.
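To make the chunking settings from the config (size: 500, overlap: 50) concrete, here is a minimal sliding-window sketch. It is an illustration only, not AuditGuard's actual chunker: the function name `chunk_text` is hypothetical, and it assumes the sizes are counted in characters (the project may well count tokens instead).

```python
def chunk_text(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into windows of `size` characters.

    Consecutive windows share `overlap` characters, so a sentence cut at a
    chunk boundary still appears whole in one of the two neighbors.
    Assumption: units are characters, not tokens.
    """
    if size <= 0:
        raise ValueError("size must be positive")
    if not 0 <= overlap < size:
        raise ValueError("overlap must satisfy 0 <= overlap < size")

    step = size - overlap  # how far the window advances each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # this window already reached the end of the text
    return chunks


# Usage: a 1200-character stand-in for an extracted policy document.
sample = "".join(str(i % 10) for i in range(1200))
chunks = chunk_text(sample, size=500, overlap=50)
print(len(chunks), [len(c) for c in chunks])
```

A larger overlap gives retrieval more shared context at chunk boundaries at the cost of more vectors to store, so the 500/50 split is a starting point to tune against your own documents.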

Jump In:

Embeddings bombing? Verify GPU (or CPU fallback). Prod deploy? docker-compose up for scalability. Stuck? Check logs or ping the repo issues.

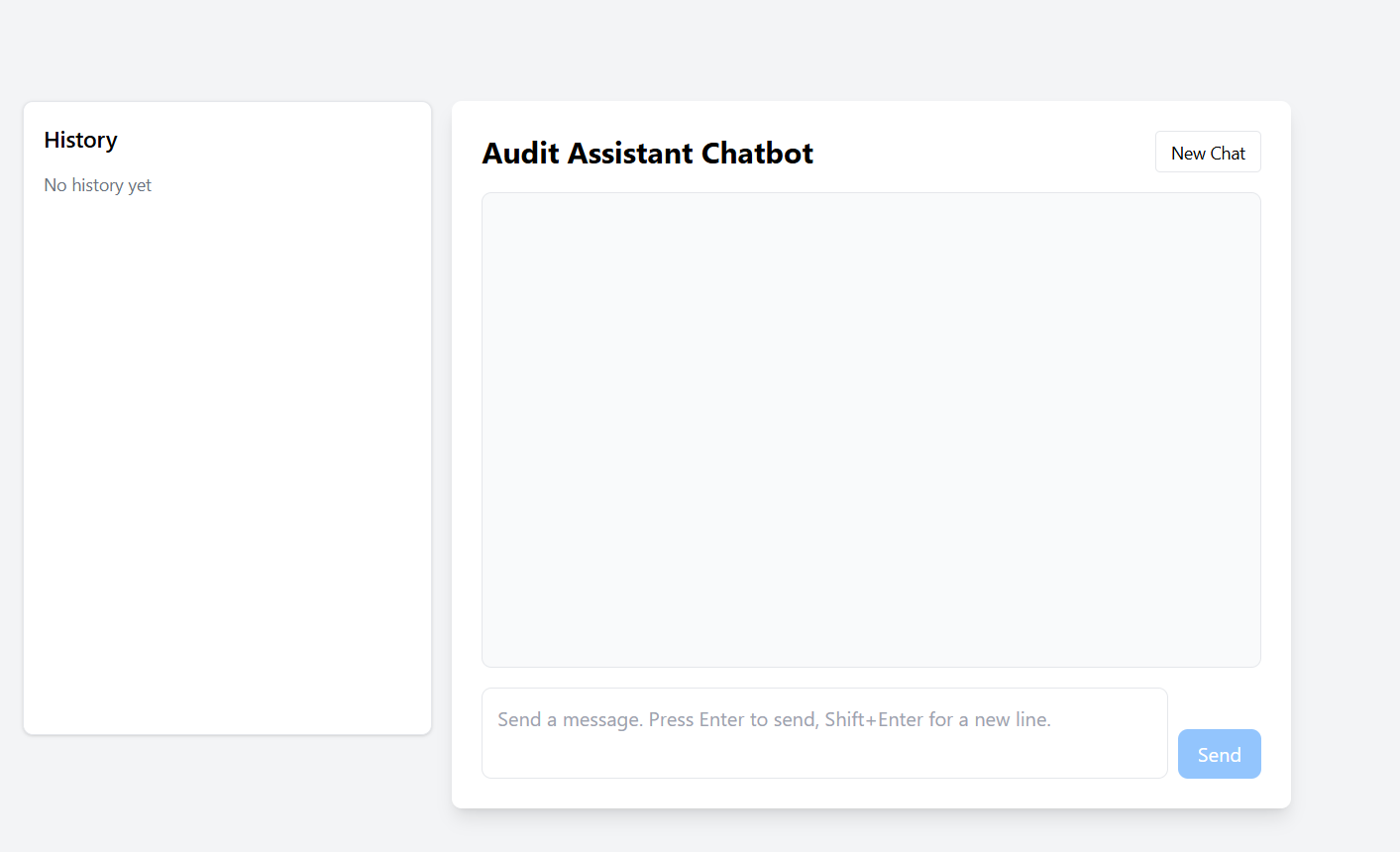


AuditGuard's pipeline is like a sharp audit checklist: Methodical, modular, and merciless on fluff. Here's the breakdown.

Ingestion: loads files from ./data/, parsing PDFs (PyPDF2) and TXTs effortlessly.

Embeddings: Sentence Transformers (all-MiniLM-L6-v2) craft dense vectors tuned for audit jargon.

API: `/query` (chats), `/history` (recaps), `/ingest` (docs).

Trust but verify: that's audit 101. I benchmarked on 100 queries across 50 mock docs (anonymized SEC/SOX files) using RAGAS for impartial scores.

| Metric | Score | What It Means | Measurement Notes |
|---|---|---|---|
| Context Precision | 0.92 | Share of retrieved chunks that are relevant | Top-5 vs. ground-truth docs |
| Context Recall | 0.88 | Share of the key info that gets retrieved | Gold coverage from benchmarks |
| Faithfulness | 0.95 | Sticks to sources, no made-up stuff | Response vs. context alignment |
| Answer Relevancy | 0.91 | Hits the query's bullseye | BERTScore on intent match |
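For intuition on the two retrieval rows, here is a simplified, set-based sketch of precision and recall over the top-5 retrieved chunks. This is not how RAGAS computes its scores internally, and it is not the evaluation code behind the numbers above; the function names and chunk IDs are made up for illustration.

```python
def precision_at_k(retrieved: list[str], relevant: set[str], k: int = 5) -> float:
    """Fraction of the top-k retrieved chunk IDs that are in the relevant set."""
    top_k = retrieved[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for chunk_id in top_k if chunk_id in relevant)
    return hits / len(top_k)


def recall_at_k(retrieved: list[str], relevant: set[str], k: int = 5) -> float:
    """Fraction of the relevant chunk IDs that show up in the top-k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


# Usage with hypothetical chunk IDs for a single query.
retrieved = ["c1", "c7", "c3", "c9", "c4"]   # ranked retriever output
relevant = {"c1", "c3", "c4", "c8"}          # hand-labeled ground truth

print(precision_at_k(retrieved, relevant))   # 3 of 5 retrieved are relevant
print(recall_at_k(retrieved, relevant))      # 3 of 4 relevant were retrieved
```

Averaging these per-query values across a benchmark set gives a quick sanity check on retrieval quality before running a fuller evaluation.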

Ethics first—no rogue AI in audits:

These lock in reliability, turning potential pitfalls into strengths.

Real runs? AuditGuard delivered—here's the proof in action.

Efficient Knowledge Retrieval:

AuditGuard's a game-changer—70% faster reviews mean more time strategizing, less slogging. Caveats? It's audit-tuned, so embeddings may falter on super-niche terms (solution: Fine-tune). Doc-only—no live feeds yet.

Next up: Groq voice for field audits, image OCR for receipts, enterprise integrations. Fork it, tweak, contribute—let's evolve this together!

From frustration to firepower, AuditGuard redefines audit AI: Robust RAG, ethical edges, intuitive design. It's your open-source ally for smarter compliance. Dive in, build on it, and audit like a boss.

Repo: https://github.com/eyoul/auditguard-chatbot

Author: Eyoul Shimeles

License: MIT