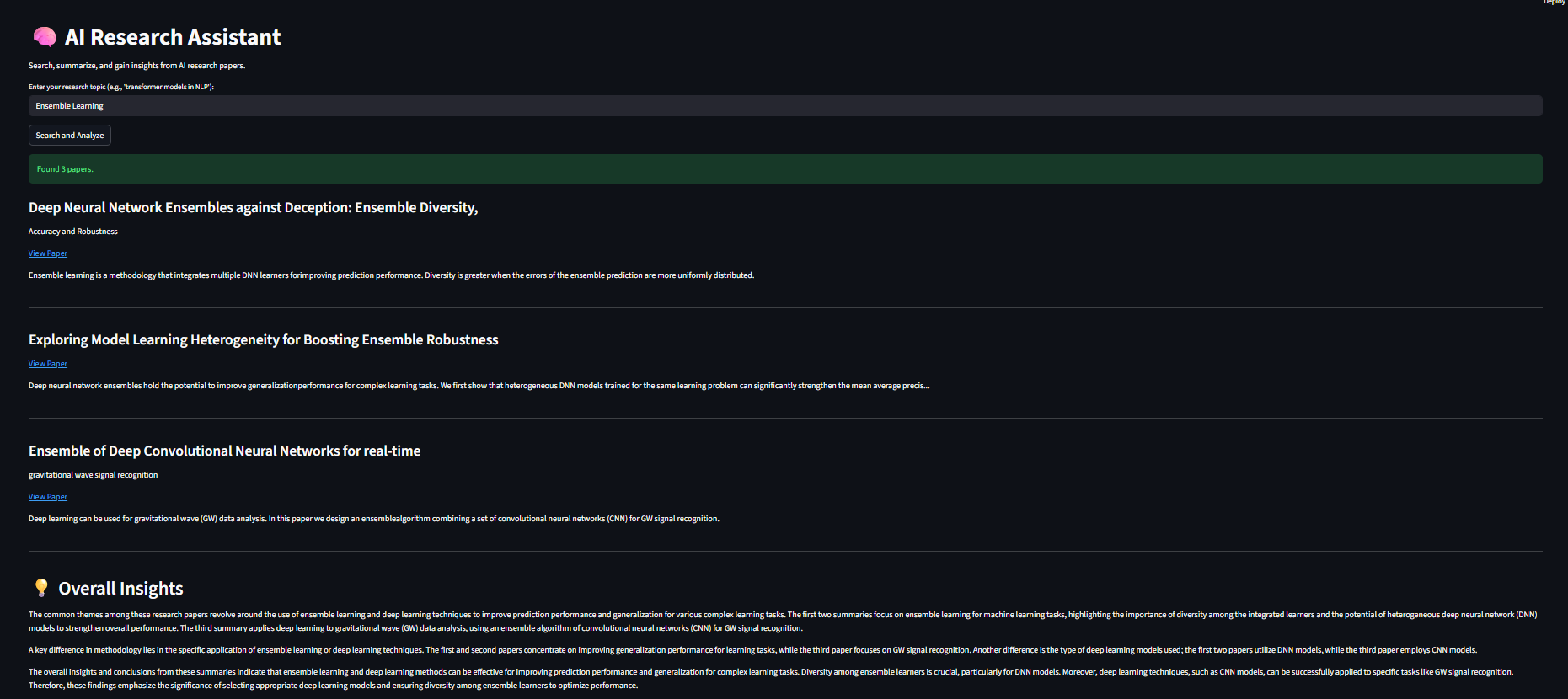

The rapid expansion of AI research literature presents significant challenges for researchers seeking to stay updated with current trends and breakthroughs. This project — AI Research Assistant — aims to streamline that process by providing an automated multi-agent system capable of retrieving, summarizing, and synthesizing insights from academic papers in real time.

Built using Python, Hugging Face APIs, and Streamlit, this assistant integrates three core agents — a Search Agent, Summarizer Agent, and Insight Agent — orchestrated under a unified workflow.

The system queries open-access sources like arXiv, generates concise abstractive summaries using transformer-based models (BART), and synthesizes thematic insights via LLMs (Mixtral or Falcon-7B).

By transforming unstructured research text into structured insights, this system assists students, educators, and AI professionals in performing faster literature reviews and trend analysis.

With the exponential growth of publications in AI and machine learning, traditional manual literature reviews have become inefficient.

Modern researchers require intelligent tools capable of:

This project extends the Module 2 multi-agent system into a production-grade application as part of AAIDC Module 3.

Enhancements include improved UI via Streamlit, robust error handling, safety guardrails, model fallback support, and test coverage for agentic workflows.
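The model fallback idea can be illustrated with a minimal sketch. It assumes each model backend is wrapped in a plain Python callable that takes a prompt and returns text; the function name `generate_with_fallback` and the two stub backends are hypothetical and are not taken from the project's code.

```python
from typing import Callable, Sequence, Tuple

Backend = Tuple[str, Callable[[str], str]]


def generate_with_fallback(prompt: str, backends: Sequence[Backend]) -> Tuple[str, str]:
    """Try each (name, callable) backend in order.

    Returns (backend_name, output) for the first backend that returns
    non-empty text. Raises RuntimeError if every backend fails.
    """
    errors = []
    for name, call in backends:
        try:
            output = call(prompt)
        except Exception as exc:  # broad catch is deliberate in this sketch
            errors.append(f"{name}: {exc}")
            continue
        if output and output.strip():
            return name, output
        errors.append(f"{name}: empty response")
    raise RuntimeError("All backends failed: " + "; ".join(errors))


# Hypothetical stand-ins for real model calls.
def flaky_primary(prompt: str) -> str:
    raise TimeoutError("simulated rate limit")


def stub_secondary(prompt: str) -> str:
    return f"insights for: {prompt}"


used, text = generate_with_fallback(
    "graph neural networks",
    [("primary", flaky_primary), ("secondary", stub_secondary)],
)
print(used, "->", text)
```

Collecting the per-backend error messages keeps the final exception informative, which helps when surfacing a failure in the UI.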

The assistant demonstrates how agentic AI design can be applied to real-world research workflows — combining retrieval, reasoning, and summarization into a coherent pipeline.

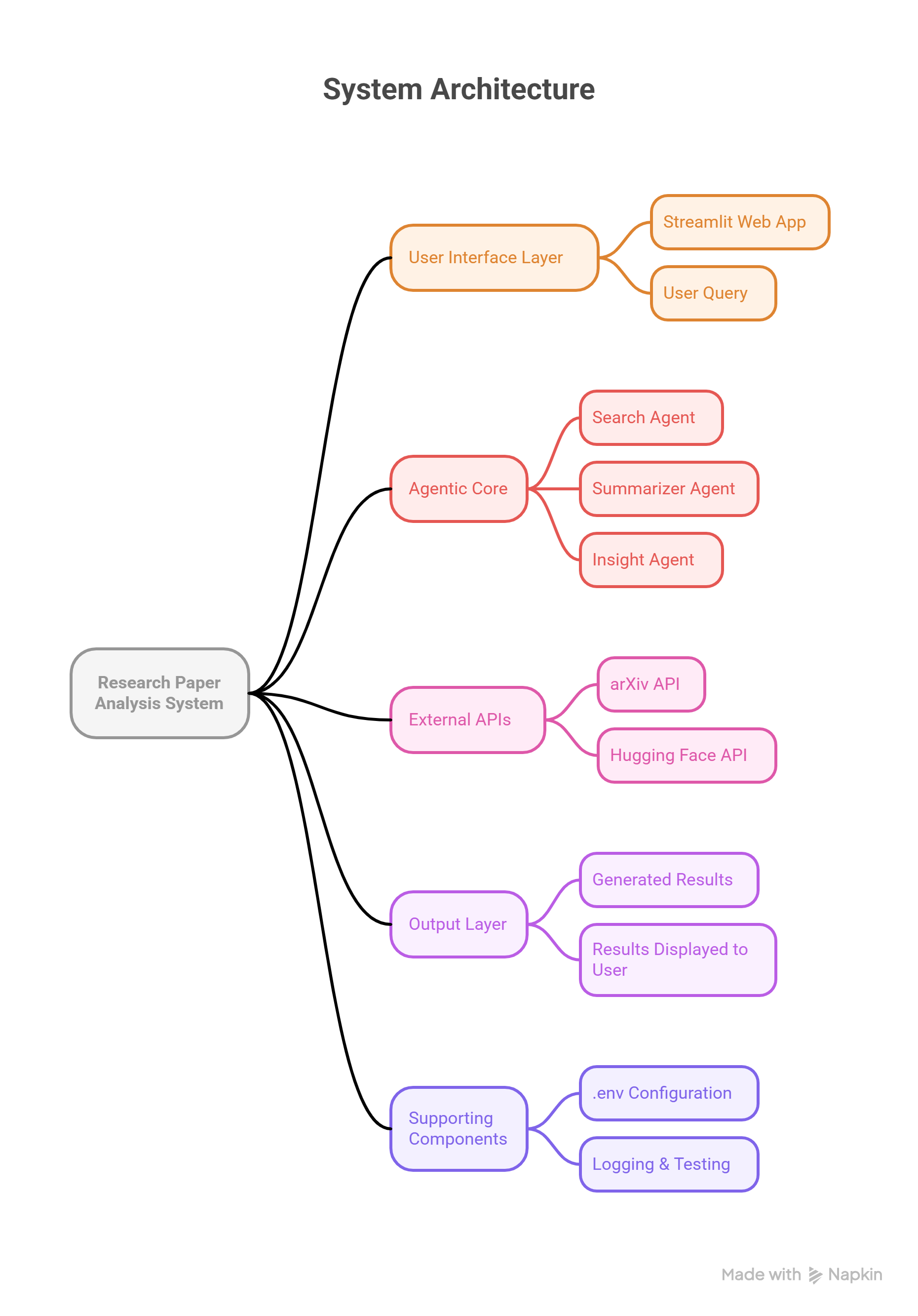

The system follows a multi-agent architecture, where each agent is responsible for a distinct stage of the workflow:

Each agent operates independently but communicates via a centralized orchestrator (main.py), ensuring a modular, extensible structure.
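A framework-free orchestrator of this kind could look roughly like the sketch below. It is an illustration only: the `run_pipeline` function, the `PipelineResult` container, and the stub agents are assumptions made for this example and do not reproduce the project's `main.py`.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class PipelineResult:
    query: str
    papers: List[Dict[str, str]] = field(default_factory=list)
    summaries: List[str] = field(default_factory=list)
    insights: str = ""


def run_pipeline(
    query: str,
    search: Callable[[str], List[Dict[str, str]]],
    summarize: Callable[[str], str],
    synthesize: Callable[[List[str]], str],
    max_papers: int = 5,
) -> PipelineResult:
    """Run search -> summarize -> synthesize, passing plain data between stages."""
    result = PipelineResult(query=query)
    result.papers = search(query)[:max_papers]
    if not result.papers:
        return result  # nothing to summarize; later stages are skipped
    result.summaries = [summarize(p["abstract"]) for p in result.papers]
    result.insights = synthesize(result.summaries)
    return result


# Stub agents (hypothetical) so the sketch runs without network access.
def stub_search(query: str) -> List[Dict[str, str]]:
    return [{"title": f"Paper {i}", "abstract": f"Abstract {i} about {query}."} for i in range(3)]


def stub_summarize(abstract: str) -> str:
    return abstract.split(".")[0]


def stub_synthesize(summaries: List[str]) -> str:
    return f"{len(summaries)} summaries synthesized"


demo = run_pipeline("transformers", stub_search, stub_summarize, stub_synthesize, max_papers=2)
print(demo.insights)
```

Because each agent is passed in as a callable, any stage can be swapped or mocked in tests without touching the orchestration logic.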

Data Flow:


Figure 1: Multi-agent architecture of the AI Research Assistant showing the modular interaction between agents, APIs, and supporting components.

The AI Research Assistant uses a lightweight, Python-based orchestration layer (main.py) to coordinate multiple agents without relying on heavy external agent frameworks such as LangChain or LangGraph. This design keeps the system modular, easier to debug, and production-friendly for academic projects and small-scale deployments.

The orchestrator is responsible for the following steps:

- SearchAgent, which queries the arXiv API and returns filtered metadata about relevant research papers.

- SummarizerAgent, which generates concise, human-readable summaries using BART via the Hugging Face Transformers pipeline.

- InsightAgent, which synthesizes cross-paper themes such as trends, comparisons, and conclusions using an LLM (e.g., Mixtral or Falcon through the Hugging Face Inference API).

- Configuration loaded from .env files using python-dotenv.
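As a rough illustration of the search step, the sketch below builds a query URL for the public arXiv API and parses the Atom feed it returns, using only the standard library. The helper names (`build_query_url`, `fetch_feed`, `parse_feed`) are hypothetical, the project's actual SearchAgent may work differently, and the sample feed contains placeholder data rather than a real paper.

```python
import urllib.parse
import urllib.request
import xml.etree.ElementTree as ET
from typing import Dict, List

ARXIV_API = "http://export.arxiv.org/api/query"
ATOM = {"atom": "http://www.w3.org/2005/Atom"}


def build_query_url(query: str, max_results: int = 5) -> str:
    """Build an arXiv API URL that searches all fields for the query."""
    params = {"search_query": f"all:{query}", "start": 0, "max_results": max_results}
    return f"{ARXIV_API}?{urllib.parse.urlencode(params)}"


def fetch_feed(url: str, timeout: float = 10.0) -> str:
    """Download the Atom feed as text (requires network access; not called below)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def parse_feed(xml_text: str) -> List[Dict[str, str]]:
    """Extract id, title, and abstract from each Atom entry."""
    root = ET.fromstring(xml_text.strip())
    papers = []
    for entry in root.findall("atom:entry", ATOM):
        def text(tag: str) -> str:
            raw = entry.findtext(f"atom:{tag}", default="", namespaces=ATOM)
            return " ".join(raw.split())  # collapse line breaks and extra spaces
        papers.append({"id": text("id"), "title": text("title"), "abstract": text("summary")})
    return papers


# Placeholder feed for demonstration only; not a real arXiv record.
SAMPLE_FEED = """
<feed xmlns="http://www.w3.org/2005/Atom">
  <entry>
    <id>http://arxiv.org/abs/0000.00000v1</id>
    <title>A Placeholder
      Title</title>
    <summary>  Placeholder abstract text. </summary>
  </entry>
</feed>
"""

papers = parse_feed(SAMPLE_FEED)
print(build_query_url("graph neural networks", max_results=3))
print(papers[0]["title"])
```

Keeping URL construction, download, and parsing in separate functions lets the parser be tested against a saved feed without contacting arXiv.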

To set up locally:

    git clone https://github.com/MokshithRao/ai-research-assistant.git
    cd ai-research-assistant
    pip install -r requirements.txt

Then, add your Hugging Face API key to a .env file:

    HUGGINGFACE_API_KEY=your_api_key_here

Run via:

    streamlit run app.py
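A small startup check can make a missing or placeholder key fail early with a readable message. The sketch below is one possible approach, not the project's code: `get_hf_api_key` is a hypothetical helper, and `load_dotenv` is only called if the python-dotenv package is installed.

```python
import os
from typing import Mapping

try:
    # load_dotenv comes from the python-dotenv package; it reads a .env file
    # into the process environment if one is found.
    from dotenv import load_dotenv
    load_dotenv()
except ImportError:
    pass  # fall back to variables already set in the environment


def get_hf_api_key(env: Mapping[str, str] = os.environ) -> str:
    """Return the Hugging Face API key or raise a clear error if it is unusable."""
    key = env.get("HUGGINGFACE_API_KEY", "").strip()
    if not key or key == "your_api_key_here":
        raise RuntimeError(
            "HUGGINGFACE_API_KEY is missing or still set to the placeholder; "
            "add a real key to your .env file."
        )
    return key


# Demonstration with an explicit mapping instead of the real environment.
print(get_hf_api_key({"HUGGINGFACE_API_KEY": "  example-key  "}))
```

Accepting the environment as a parameter keeps the check easy to unit test without modifying real environment variables.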

Future Enhancements:

While numerous tools exist for academic paper retrieval or summarization, most focus on only one stage of the research workflow — such as text extraction or summarization — without integrating multi-stage reasoning.

Current systems like standard arXiv search or isolated summarization models (e.g., BART) lack a unified agentic pipeline that combines:

- Intelligent query retrieval,

- Abstractive summarization, and

- Thematic insight generation.

Moreover, traditional tools require manual data collection and lack contextual analysis across multiple papers.

The AI Research Assistant addresses these gaps by providing an end-to-end automated pipeline that synthesizes knowledge across papers, reducing human cognitive load and time-to-insight.

Despite its effectiveness, the current version of the AI Research Assistant has certain limitations:

- Model Dependency: Summarization and insight generation depend on third-party models hosted via Hugging Face APIs; API rate limits or model downtime can affect availability.

- Abstractive Summarization Constraints: The summarizer (BART) may occasionally truncate or oversimplify technical content for very long abstracts.

- Lack of Deep Citation Tracking: Current implementation does not yet extract or analyze references and citation networks.

- No Database Persistence: User search history or previous queries are not yet stored persistently.

- Limited Domain Adaptation: Insights are generalized and may vary in accuracy across highly specialized or non-English domains.

Future releases will mitigate these issues by integrating semantic retrieval (FAISS), local model hosting, and persistent user sessions.
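One common way to reduce truncation of long inputs, separate from the mitigations listed above, is to split the text into overlapping chunks, summarize each chunk, and join the partial summaries. The sketch below illustrates that technique under stated assumptions: it is not part of the current project, `chunk_words` and `summarize_long` are hypothetical names, and word counts are only a rough proxy for a model's token limit.

```python
from typing import Callable, List


def chunk_words(text: str, max_words: int = 300, overlap: int = 30) -> List[str]:
    """Split text into chunks of at most max_words words, sharing `overlap` words."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    if not words:
        return []
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def summarize_long(text: str, summarize: Callable[[str], str], **chunk_kwargs) -> str:
    """Summarize each chunk with the given callable and join the results."""
    return " ".join(summarize(chunk) for chunk in chunk_words(text, **chunk_kwargs))


# Stub summarizer (hypothetical): keeps only the first word of each chunk.
sample = " ".join(f"w{i}" for i in range(10))
print(chunk_words(sample, max_words=4, overlap=1))
print(summarize_long(sample, lambda chunk: chunk.split()[0], max_words=4, overlap=1))
```

The overlap gives each chunk some context from its neighbor, at the cost of a few repeated words per model call.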

The AI Research Assistant is optimized for lightweight academic research tasks and can run efficiently on modest hardware.

System Requirements

| Resource | Minimum | Recommended |
|---|---|---|
| CPU | Dual-core 2.0 GHz | Quad-core 3.0 GHz or higher |
| RAM | 4 GB | 8 GB+ for better inference |
| Disk Space | 500 MB | 1 GB (deps & cache) |
| Internet | Required | Required (for API) |

Performance Benchmarks

Scalability

The architecture supports easy scaling:

- Agents operate independently, allowing parallel summarization for multiple papers.

- Can be containerized with Docker and deployed on cloud platforms (AWS, GCP, or Hugging Face Spaces).
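Parallel summarization of independent papers can be sketched with a thread pool, which suits calls that mostly wait on a remote API. This is an illustrative example rather than the project's implementation; `summarize_all` and the stub summarizer are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def summarize_all(
    abstracts: List[str],
    summarize: Callable[[str], str],
    max_workers: int = 4,
) -> List[str]:
    """Summarize abstracts concurrently; results keep the input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map yields results in the order of the inputs,
        # regardless of which call finishes first.
        return list(pool.map(summarize, abstracts))


# Stub summarizer (hypothetical) that simulates a short network delay.
def slow_stub(abstract: str) -> str:
    time.sleep(0.05)
    return abstract.upper()


results = summarize_all(["first abstract", "second abstract", "third abstract"], slow_stub)
print(results)
```

When the remote API enforces rate limits, a smaller `max_workers` value is the simplest way to throttle concurrent requests.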

Code Repository: https://github.com/MokshithRao/ai-research-assistant

License: Open-source under the MIT License. The project may be modified, extended, or redistributed freely with proper attribution.

Availability: Publicly accessible on GitHub. Requires a valid Hugging Face API key for full operation and inference capabilities.

Contact: KV Mokshith Rao – Developer & Maintainer

🌐 https://github.com/MokshithRao

The AI Research Assistant project is actively maintained as part of the Agentic AI Developer Certification (AAIDC) and will continue to receive updates focused on reliability, performance, and feature expansion.

Ongoing support includes:

Users and collaborators are encouraged to extend and customize the tool for their own workflows.

Suggestions, bug reports, or feature requests can be submitted via the GitHub Issues section of the project repository:

👉 https://github.com/MokshithRao/ai-research-assistant/issues

Pull requests are welcome, and community contributions will be reviewed and integrated when appropriate.