Abstract

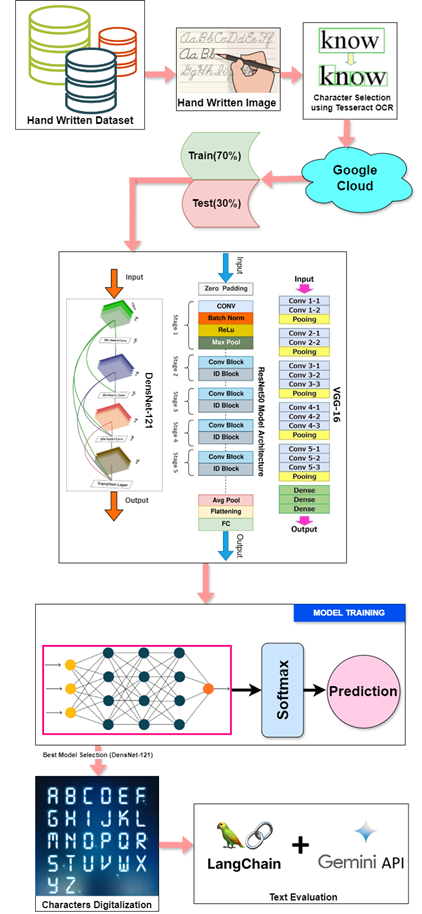

Grading handwritten subjective responses presents challenges in ensuring consistency, accuracy, and impartiality, especially when manual evaluation is applied to large datasets prone to human error and bias. This study introduces an AI-driven framework that integrates Tesseract OCR for text digitization, deep learning models for handwriting recognition, and LangChain’s NLP capabilities for automated assessment.

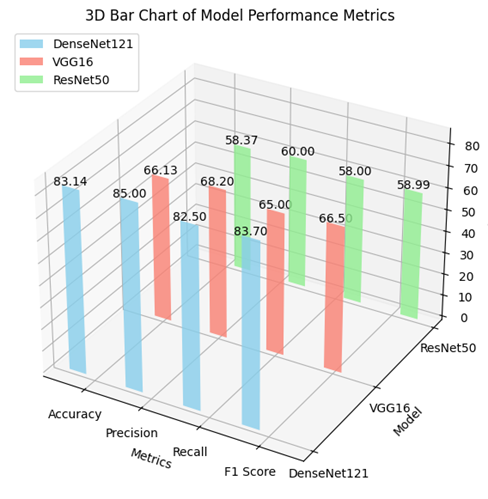

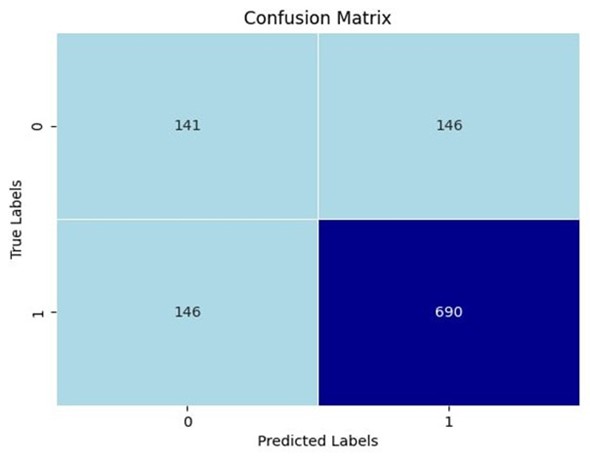

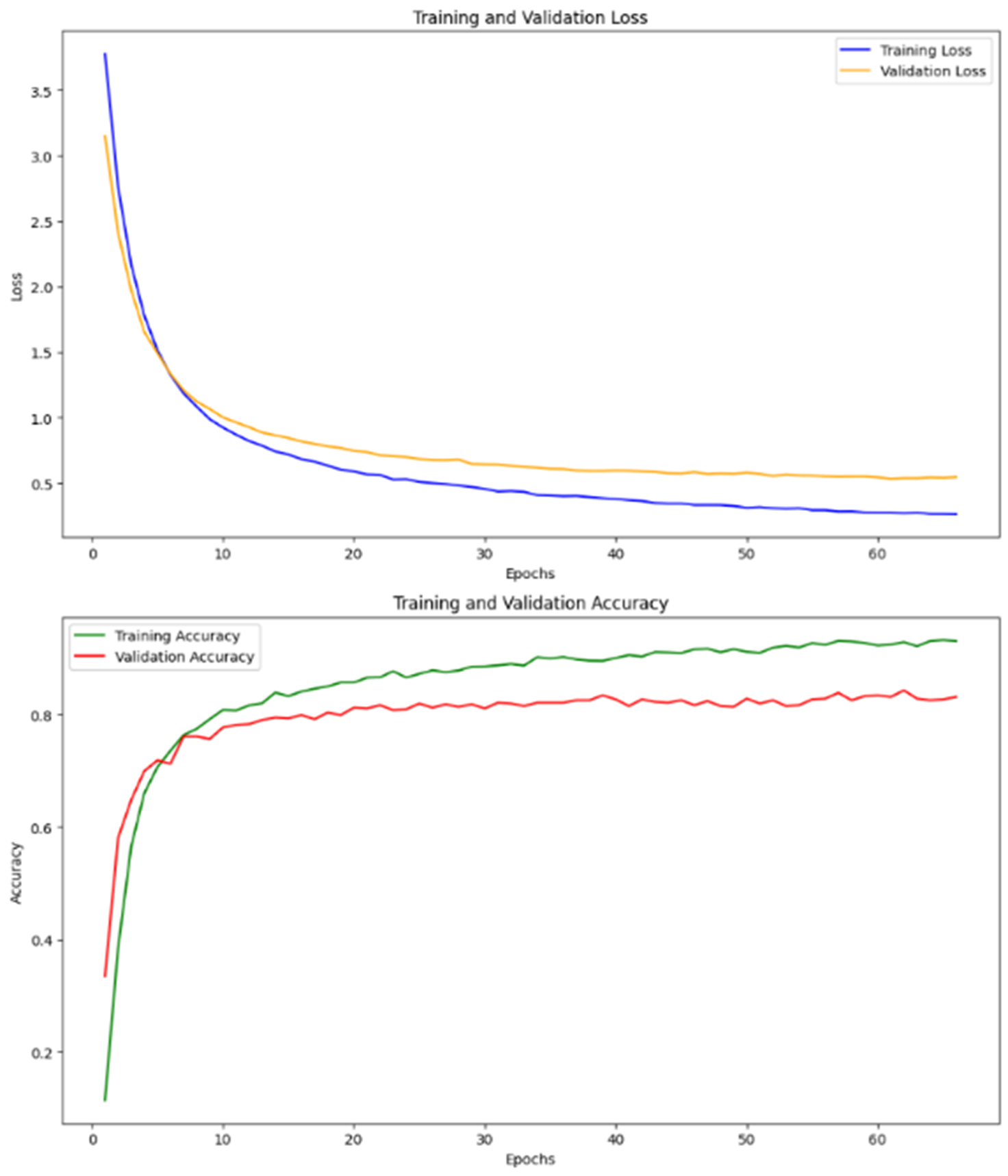

The pipeline begins with Tesseract OCR, converting handwritten text into a digital format while addressing handwriting variability. To enhance recognition accuracy, we benchmarked DenseNet121, VGG16, and ResNet50 on a diverse handwriting dataset. DenseNet121 outperformed the others, achieving 83.14% accuracy, 85.00% precision, 82.50% recall, and an 83.70% F1-score, demonstrating its robustness in character recognition compared to VGG16 (66.13%) and ResNet50 (58.36%).

Post-digitization, LangChain’s NLP framework performs syntactic and semantic analysis to evaluate responses based on grammar, structure, and contextual relevance. Comparative testing against BERT showed that LangChain provides more accurate, context-sensitive scoring, aligning closely with subjective answer evaluation criteria.

The novelty of this framework lies in its end-to-end integration of high-accuracy OCR with advanced NLP, ensuring scalable, objective, and efficient handwritten response evaluation. This system reduces human error, enhances fairness in scoring, and supports future multilingual and multi-style handwriting adaptations, positioning it as a transformative solution for automated grading in educational and professional settings.

Introduction

Handwriting recognition remains a significant challenge, and practical solutions must be both accurate and consistent. This project aims to develop an AI-driven system that translates handwritten text into digital format and evaluates its content objectively. The current implementation targets English letters and digits; the design is intended to extend to other scripts, including Japanese, Telugu, Chinese, and Arabic, so that it can serve as a scalable and adaptable solution for multilingual handwriting assessment.

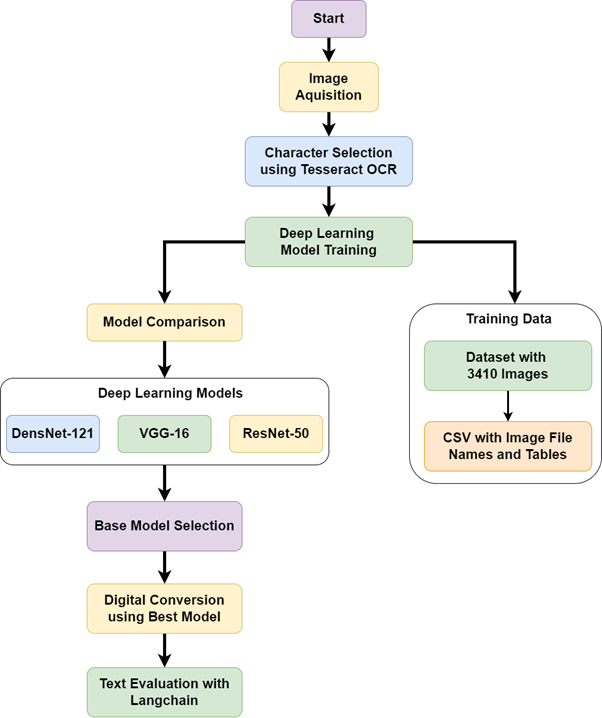

The process begins with image capture, where handwritten responses are digitized using Tesseract OCR, an open-source optical character recognition engine. OCR extracts individual characters from images, forming the foundation for deep learning-based handwriting recognition. To enhance accuracy, three deep learning models (DenseNet121, VGG16, and ResNet50) were evaluated on a diverse handwriting dataset. In this comparison, DenseNet121 was the most effective model, achieving 83.14% accuracy, 85.00% precision, 82.50% recall, and an 83.70% F1-score, outperforming VGG16 and ResNet50.

Following digitization, the content evaluation stage leverages LangChain’s NLP framework, which provides access to multiple large language models (LLMs) and external data sources for context-aware assessment. This framework is compared with BERT, a widely used model for bidirectional text encoding and entity recognition. The comparison evaluates each model’s ability to assess grammatical structure, coherence, depth of concept, and sentiment in handwritten responses. Results demonstrate that LangChain, with its retrieval-augmented generation capabilities, provides superior contextual evaluation for subjective responses compared to BERT.

This project goes beyond digitization by addressing the limitations of manual grading, which is time-consuming, subjective, and prone to bias. By integrating OCR, deep learning, and NLP technologies, this system automates assessment, ensuring fairness, consistency, and scalability. The framework not only reduces grading time but also enhances accuracy, making it a valuable tool for educational assessments, certification testing, and professional evaluations.

Future iterations of this system will focus on expanding the dataset for diverse handwriting styles and languages, improving deep learning models, and integrating real-time feedback mechanisms. Additionally, advanced sentiment analysis and contextual understanding will be explored to further refine the evaluation process. The ultimate goal is to establish a global, AI-powered handwriting assessment framework that revolutionizes subjective response grading across multiple industries.

This paper presents the design and implementation of this AI-driven architecture, highlighting its impact on objective and automated evaluation. By leveraging Tesseract OCR, DenseNet121, and LangChain, the proposed system ensures accurate recognition and unbiased assessment of handwritten responses, paving the way for fair and consistent grading in both academic and professional domains.

Methodology

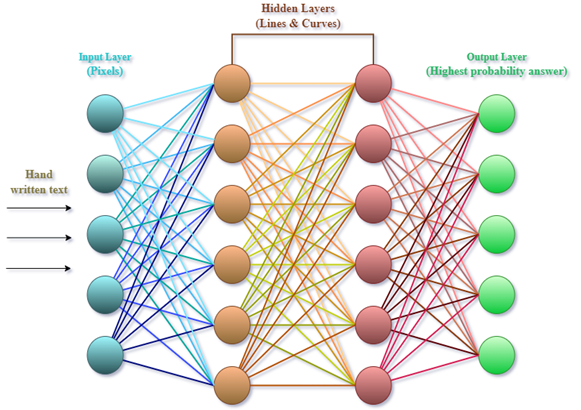

In this section, we present the methodology employed for the character recognition task using a custom machine learning model. The objective of this task is to build a robust system capable of recognizing and classifying handwritten characters into the English alphabet and numerical digits. The following steps outline the methodology followed to achieve this goal:

Data Collection and Preprocessing

The dataset consists of 3410 images: each of the 62 characters ('a-z', 'A-Z', and '0-9') is represented by 55 images (62 × 55 = 3410). The images were captured in black and white and resized to 400x300 pixels. They are stored in a folder named 'Img' within the 'SRI' directory, with each image labeled with its corresponding character. The labels and file paths are maintained in a CSV file for easy loading during training.
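As a quick consistency check on the dataset description, the sketch below enumerates the 62 class labels (26 lowercase letters, 26 uppercase letters, 10 digits) and parses a small label file. The column names `image` and `label`, the file names, and the class ordering are assumptions made for illustration; the text states only that labels and file paths are kept in a CSV file.

```python
import csv
import io
import string

# 10 digits + 26 uppercase + 26 lowercase = 62 classes, 55 images each.
CLASSES = list(string.digits + string.ascii_uppercase + string.ascii_lowercase)
IMAGES_PER_CLASS = 55
EXPECTED_TOTAL = len(CLASSES) * IMAGES_PER_CLASS  # 62 * 55

def load_labels(csv_text):
    """Parse (image path, label) rows and map each label to a class index."""
    class_index = {ch: i for i, ch in enumerate(CLASSES)}
    samples = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        samples.append((row["image"], class_index[row["label"]]))
    return samples

# Hypothetical excerpt of the label file (column and file names are assumed).
sample_csv = (
    "image,label\n"
    "Img/img001-001.png,0\n"
    "Img/img011-001.png,A\n"
    "Img/img037-001.png,a\n"
)
samples = load_labels(sample_csv)
```

With this ordering, digits occupy indices 0 to 9, uppercase letters 10 to 35, and lowercase letters 36 to 61. Any fixed ordering works as long as training and inference share it.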

Data Augmentation

To improve the generalization of the model, data augmentation techniques such as random rotations, translations, and zooms were applied to the images. These transformations help to introduce variability and prevent overfitting.
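The transformations named above can be illustrated with a dependency-free sketch that rotates, translates, and zooms a small image stored as a nested list. This is not the augmentation code used in the study; the function names and the parameter ranges (±10 degrees, ±2 pixels, 0.9 to 1.1 zoom) are assumptions chosen only to make the idea concrete.

```python
import math
import random

def affine_augment(img, angle_deg=0.0, shift=(0, 0), zoom=1.0, fill=0):
    """Rotate, translate, and zoom a 2-D list image (nearest-neighbour sampling)."""
    h, w = len(img), len(img[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    cos_a = math.cos(math.radians(angle_deg))
    sin_a = math.sin(math.radians(angle_deg))
    dy, dx = shift
    out = [[fill] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Inverse mapping: locate the source pixel for each output pixel.
            yc, xc = (y - dy - cy) / zoom, (x - dx - cx) / zoom
            sx = int(round(cos_a * xc + sin_a * yc + cx))
            sy = int(round(-sin_a * xc + cos_a * yc + cy))
            if 0 <= sx < w and 0 <= sy < h:
                out[y][x] = img[sy][sx]
    return out

def random_augment(img, rng, max_angle=10.0, max_shift=2, zoom_range=(0.9, 1.1)):
    """Draw one random transform; all ranges here are assumed values."""
    angle = rng.uniform(-max_angle, max_angle)
    shift = (rng.randint(-max_shift, max_shift), rng.randint(-max_shift, max_shift))
    zoom = rng.uniform(*zoom_range)
    return affine_augment(img, angle, shift, zoom)

image = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
shifted = affine_augment(image, shift=(0, 1))      # move one pixel to the right
augmented = random_augment(image, random.Random(0))
```

In practice a framework's built-in augmentation layers would replace this loop; the sketch only shows that each augmented sample is the original image under a small random affine map.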

Model Architecture

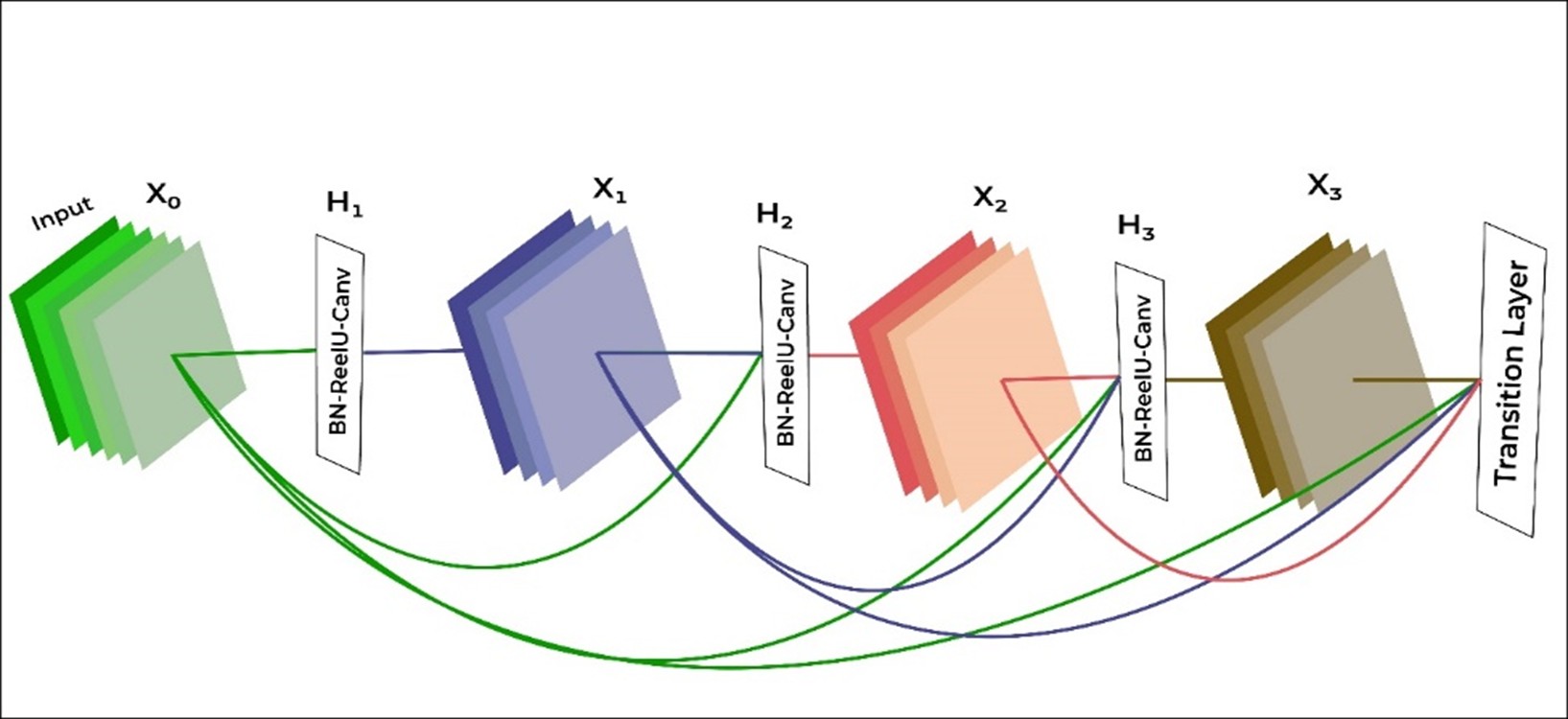

The chosen architecture for this task was a DenseNet model. DenseNet (Densely Connected Convolutional Networks) was selected due to its efficient use of parameters, making it suitable for smaller datasets while maintaining high performance. The model was constructed with multiple convolutional layers, followed by dense layers for final classification. The final output layer consists of 62 units, corresponding to the 62 characters in our dataset (26 lowercase letters, 26 uppercase letters, and 10 digits).
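The parameter efficiency mentioned above comes from dense connectivity: each layer adds only a small, fixed number of feature maps (the growth rate) to the concatenation of all earlier outputs. The sketch below traces the channel count through the standard DenseNet-121 configuration (growth rate 32, dense blocks of 6, 12, 24, and 16 layers, transition compression 0.5). The single 62-unit classification layer on pooled features is an assumption for illustration; the head used in the study may contain additional dense layers.

```python
def densenet_channels(block_layers=(6, 12, 24, 16), growth_rate=32,
                      init_channels=64, compression=0.5):
    """Trace feature-map counts through the dense blocks and transitions."""
    channels = init_channels
    trace = []
    for i, n_layers in enumerate(block_layers):
        # Every layer in a dense block concatenates growth_rate new maps.
        channels += n_layers * growth_rate
        trace.append(("dense_block_%d" % (i + 1), channels))
        if i < len(block_layers) - 1:
            # Transition layers halve the channel count between blocks.
            channels = int(channels * compression)
            trace.append(("transition_%d" % (i + 1), channels))
    return trace

trace = densenet_channels()
final_features = trace[-1][1]

# Assumed head: one dense layer from pooled features to 62 classes.
NUM_CLASSES = 62
head_params = final_features * NUM_CLASSES + NUM_CLASSES
```

Under these assumptions the backbone ends with 1024 feature maps, so a single 62-way output layer adds 63,550 trainable parameters.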

DenseNet Architecture

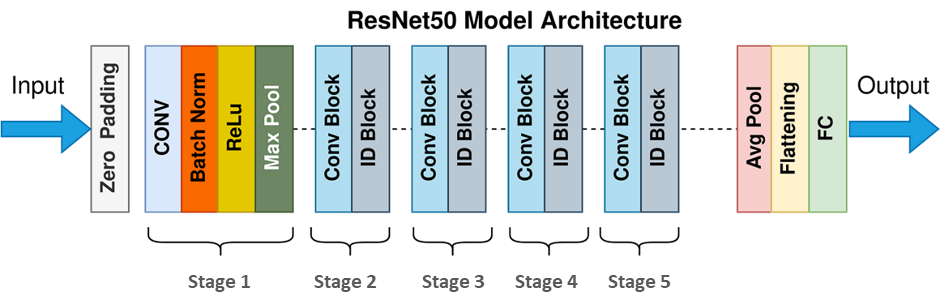

ResNet Architecture

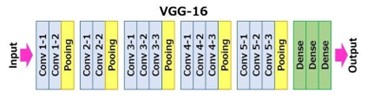

VGG Architecture

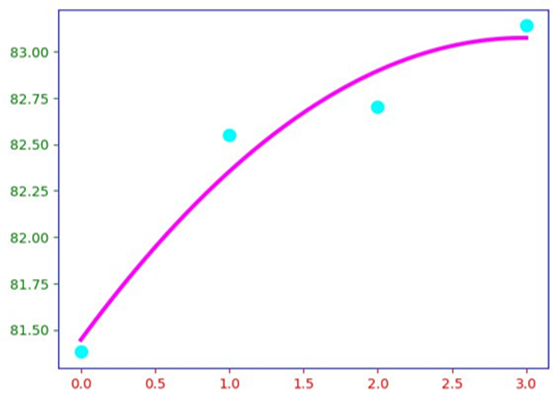

Training and Hyperparameter Tuning

The model was trained using the training data and validated on a separate validation set. We used the Adam optimizer, which adapts the learning rate during training, and the categorical cross-entropy loss function. The initial learning rate was set to 0.001, and early stopping was used to prevent overfitting. The model was trained for a maximum of 20 epochs, with checkpoints to save the best model based on validation accuracy.
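The interaction between the 20-epoch cap, early stopping, and best-model checkpointing can be sketched without a deep learning framework. The function below takes a sequence of per-epoch validation accuracies and reports where training would stop and which epoch would be checkpointed. The patience of 3 epochs and the use of validation accuracy as the early-stopping signal are assumptions; the text does not specify them.

```python
def train_with_early_stopping(val_accuracies, max_epochs=20, patience=3):
    """Simulate early stopping and best-checkpoint selection on validation accuracy."""
    best_acc = float("-inf")
    best_epoch = None
    stale_epochs = 0
    epochs_run = 0
    for epoch, acc in enumerate(val_accuracies[:max_epochs], start=1):
        epochs_run = epoch
        if acc > best_acc:
            # A real training loop would save the model weights here.
            best_acc, best_epoch = acc, epoch
            stale_epochs = 0
        else:
            stale_epochs += 1
            if stale_epochs >= patience:
                break  # no improvement for `patience` consecutive epochs
    return {"best_epoch": best_epoch, "best_val_acc": best_acc, "epochs_run": epochs_run}

# Made-up validation curve, for illustration only.
history = [0.50, 0.62, 0.70, 0.69, 0.71, 0.70, 0.70, 0.69, 0.72]
result = train_with_early_stopping(history)
```

With this made-up curve, training stops after epoch 8 and the checkpoint from epoch 5 is kept; the later improvement at epoch 9 is never reached, which is the usual trade-off of a short patience.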

Evaluation Metrics

The performance of the model was evaluated using standard metrics such as accuracy, precision, recall, and F1-score. These metrics were computed on both the training and test datasets to assess the model’s ability to generalize to unseen data.
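For a multi-class problem these metrics must be aggregated across classes. The sketch below computes accuracy together with macro-averaged precision, recall, and F1-score from true and predicted labels. Macro averaging is an assumption here; the text does not state which averaging scheme was used.

```python
from collections import Counter

def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 over all classes."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class gains a false positive
            fn[t] += 1  # true class gains a false negative
    precisions, recalls, f1s = [], [], []
    for c in labels:
        prec = tp[c] / (tp[c] + fp[c]) if tp[c] + fp[c] else 0.0
        rec = tp[c] / (tp[c] + fn[c]) if tp[c] + fn[c] else 0.0
        f1 = 2 * prec * rec / (prec + rec) if prec + rec else 0.0
        precisions.append(prec)
        recalls.append(rec)
        f1s.append(f1)
    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }

# Toy labels, for illustration only.
metrics = macro_metrics(["a", "a", "b", "b"], ["a", "b", "b", "b"])
```

Note that a macro-averaged F1-score is the mean of per-class F1 values, so it need not equal the harmonic mean of the macro-averaged precision and recall.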

Experiments

Baseline Model with Raw Image Data

In the first experiment, a baseline model was trained using the raw image data without any preprocessing techniques such as normalization or data augmentation. The model's accuracy was evaluated after 10 epochs, and the results served as a benchmark for future experiments.

Data Augmentation and Normalization

In the second experiment, data augmentation techniques, including random rotations and scaling, were applied to the training images. Additionally, the images were normalized to a range of 0-1 by dividing pixel values by 255. This preprocessing step helped improve the robustness of the model, and the results were compared with the baseline model in terms of accuracy and generalization performance.

Hyperparameter Tuning

To identify the optimal model configuration, hyperparameter tuning was performed. The learning rate, number of layers, and batch size were adjusted, and various optimizers (Adam, SGD, RMSProp) were tested. The best-performing configuration was selected based on its validation accuracy.
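One simple way to organize such a search is an exhaustive grid over the candidate settings, keeping the configuration with the highest validation accuracy. The sketch below is illustrative only: the candidate values and the `toy_evaluate` scoring function are made up, and in a real run `evaluate` would train a model with the given configuration and return its validation accuracy. The number of layers could be added to the grid in the same way.

```python
from itertools import product

def grid_search(evaluate, grid):
    """Try every combination in `grid`; return the best config and its score."""
    best_config, best_score = None, float("-inf")
    keys = sorted(grid)
    for values in product(*(grid[k] for k in keys)):
        config = dict(zip(keys, values))
        score = evaluate(config)
        if score > best_score:
            best_config, best_score = config, score
    return best_config, best_score

# Assumed candidate values; only the optimizer names come from the text.
SEARCH_SPACE = {
    "optimizer": ["adam", "sgd", "rmsprop"],
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "batch_size": [16, 32, 64],
}

def toy_evaluate(config):
    """Stand-in for a train-and-validate run; returns a made-up score."""
    score = 0.5
    score += 0.2 if config["optimizer"] == "adam" else 0.0
    score += 0.1 if config["learning_rate"] == 1e-3 else 0.0
    score += 0.05 if config["batch_size"] == 32 else 0.0
    return score

best_config, best_score = grid_search(toy_evaluate, SEARCH_SPACE)
```

A grid of this size already requires 27 training runs, so random search or a coarser grid may be preferable when each run is expensive.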

Evaluation on Test Set

Once the model was fine-tuned, its performance was evaluated on the test dataset. The accuracy, precision, recall, and F1-score were calculated for each character class. The results were then analyzed to identify any areas of improvement, such as which characters were most commonly misclassified.
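Identifying the most commonly misclassified characters amounts to counting (true, predicted) pairs that disagree. The sketch below shows one way to do this; the labels are made up for illustration and are not results from the study.

```python
from collections import Counter

def top_confusions(y_true, y_pred, k=3):
    """Return the k most frequent (true, predicted) pairs among the errors."""
    confusions = Counter((t, p) for t, p in zip(y_true, y_pred) if t != p)
    return confusions.most_common(k)

def error_rate_per_class(y_true, y_pred):
    """Fraction of samples of each true class that were misclassified."""
    totals, errors = Counter(y_true), Counter()
    for t, p in zip(y_true, y_pred):
        if t != p:
            errors[t] += 1
    return {c: errors[c] / totals[c] for c in totals}

# Made-up labels: visually similar characters are typical candidates.
y_true = ["O", "O", "0", "l", "I", "l"]
y_pred = ["0", "0", "0", "I", "I", "l"]
worst_pairs = top_confusions(y_true, y_pred, k=2)
rates = error_rate_per_class(y_true, y_pred)
```

Ranking confusions in this way shows whether errors concentrate in a few look-alike pairs, which in turn suggests whether more data or class-specific augmentation is the better remedy.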

Transfer Learning

To explore the potential of using pre-trained models, transfer learning was implemented by fine-tuning a pre-trained DenseNet model on our dataset. The model was initially trained on a large image dataset and then adapted to our smaller dataset. The results of this experiment were compared with the results of training the model from scratch to assess whether transfer learning led to improvements in performance.
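A transfer-learning setup of this kind might look as follows in Keras. This is a minimal sketch under several assumptions not stated in the text: that TensorFlow/Keras is the framework, that ImageNet weights are the pre-trained starting point, that images are converted to three channels and resized to 224x224, and that the backbone is frozen while a new 62-way softmax layer is trained. The import is placed inside the function so the snippet loads even where TensorFlow is not installed.

```python
NUM_CLASSES = 62
INPUT_SHAPE = (224, 224, 3)  # assumed; grayscale scans would be replicated to 3 channels

def build_transfer_model(num_classes=NUM_CLASSES, input_shape=INPUT_SHAPE):
    """Frozen ImageNet DenseNet121 backbone with a new softmax classifier."""
    import tensorflow as tf  # requires TensorFlow to be installed

    base = tf.keras.applications.DenseNet121(
        include_top=False,      # drop the 1000-class ImageNet head
        weights="imagenet",
        input_shape=input_shape,
        pooling="avg",          # global average pooling over the last feature maps
    )
    base.trainable = False      # train only the new head at first

    inputs = tf.keras.Input(shape=input_shape)
    # Inputs are expected to be preprocessed with
    # tf.keras.applications.densenet.preprocess_input beforehand.
    features = base(inputs, training=False)
    outputs = tf.keras.layers.Dense(num_classes, activation="softmax")(features)

    model = tf.keras.Model(inputs, outputs)
    model.compile(
        optimizer=tf.keras.optimizers.Adam(learning_rate=1e-3),
        loss="categorical_crossentropy",
        metrics=["accuracy"],
    )
    return model
```

A common follow-up is to unfreeze some or all backbone layers and continue training at a lower learning rate once the new head has converged.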

Model Deployment

As the final experiment, the trained model was deployed as a character recognition service. The model was integrated with a web interface, where users could upload images of handwritten text, and the model would predict the characters. This experiment evaluated the model's performance in real-world applications, focusing on speed and accuracy.

Results

Evaluation and Optimization

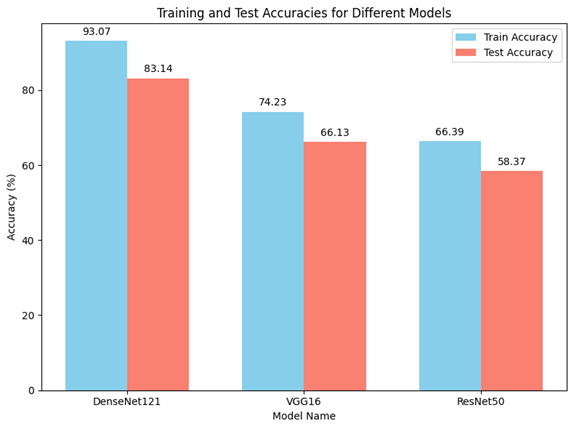

A comparison of DenseNet121, VGG16, and ResNet50 reveals the benefits and limitations of these widely used deep learning architectures. The VGG16-based model has a simple, uniform structure but a high parameter count, which makes it computationally expensive. ResNet50 uses residual connections to mitigate the vanishing gradient problem seen in earlier architectures, allowing deeper networks without a significant increase in complexity.

DenseNet121, on the other hand, demonstrates superior efficiency and precision, especially in low-data scenarios. Its dense connections allow more efficient parameter usage and faster convergence. ResNet50 offers scalability and serves as a middle ground between depth and performance. VGG16, despite being resource-intensive, remains a good choice for transfer learning when paired with well-trained weights.

A direct comparison of test accuracies shows DenseNet121 achieving the highest accuracy of 83.14%, making it an excellent choice for handwritten character recognition. VGG16 follows with a test accuracy of 66.13%, demonstrating reasonable performance but at a higher computational cost. ResNet50, despite its depth, yielded the lowest test accuracy of 58.36%, making it less suited for this particular task. The training accuracies were 85.25% for DenseNet121, 74.23% for VGG16, and 60.78% for ResNet50, further highlighting the models' differing abilities to generalize.
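The generalization behaviour can be made explicit by computing the gap between training and test accuracy for each model from the figures reported above. The snippet uses only those reported numbers.

```python
# (training accuracy %, test accuracy %) as reported in the text.
REPORTED_ACCURACY = {
    "DenseNet121": (85.25, 83.14),
    "VGG16": (74.23, 66.13),
    "ResNet50": (60.78, 58.36),
}

# Train-test gap in percentage points; a larger gap suggests more overfitting.
gaps = {
    name: round(train - test, 2)
    for name, (train, test) in REPORTED_ACCURACY.items()
}
largest_gap_model = max(gaps, key=gaps.get)
```

The gaps are about 2.11 points for DenseNet121, 8.10 for VGG16, and 2.42 for ResNet50. VGG16 therefore shows the largest train-test gap, while ResNet50's small gap combined with low accuracy on both sets is more consistent with underfitting than overfitting.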

Accuracy, Precision, F1 Score, and Recall

The evaluation metrics of accuracy, precision, recall, and F1 score for DenseNet121, VGG16, and ResNet50 confirm the superior performance of DenseNet121 across all key metrics. DenseNet121 achieved an accuracy of 83.14%, with a precision of 85.00%, recall of 82.50%, and an F1 score of 83.70%. These metrics indicate that DenseNet121 has a strong capacity to recognize handwritten characters accurately.

VGG16, while simpler in design, showed moderate results with an accuracy of 66.13%, precision of 68.20%, recall of 65.00%, and an F1 score of 66.50%. ResNet50, which incorporates depth and residual connections, underperformed with an accuracy of 58.36%, precision of 60.00%, recall of 58.00%, and an F1 score of 58.99%. These results suggest that ResNet50 may be ill-suited to this specific task and dataset size, and that a more parameter-efficient model such as DenseNet121 can perform better on handwritten character recognition.
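As a sanity check on the reported figures, the F1 score can be compared with the harmonic mean of the reported precision and recall for each model. The snippet uses only the numbers given above; exact agreement is not expected if the reported F1 was averaged per class.

```python
def harmonic_f1(precision, recall):
    """F1 as the harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# (precision %, recall %, reported F1 %) from the text.
REPORTED_PRF = {
    "DenseNet121": (85.00, 82.50, 83.70),
    "VGG16": (68.20, 65.00, 66.50),
    "ResNet50": (60.00, 58.00, 58.99),
}

f1_check = {
    name: (round(harmonic_f1(p, r), 2), reported)
    for name, (p, r, reported) in REPORTED_PRF.items()
}
max_deviation = max(abs(calc - rep) for calc, rep in f1_check.values())
```

The harmonic means come to roughly 83.73, 66.56, and 58.98, each within 0.1 percentage points of the reported F1 score, so the three metrics are mutually consistent for all models.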

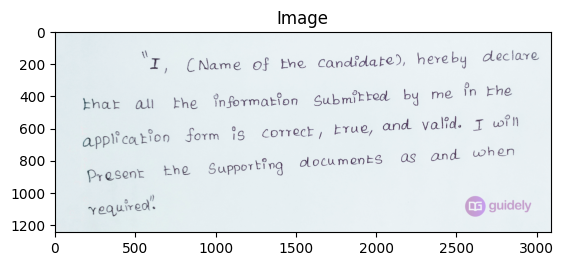

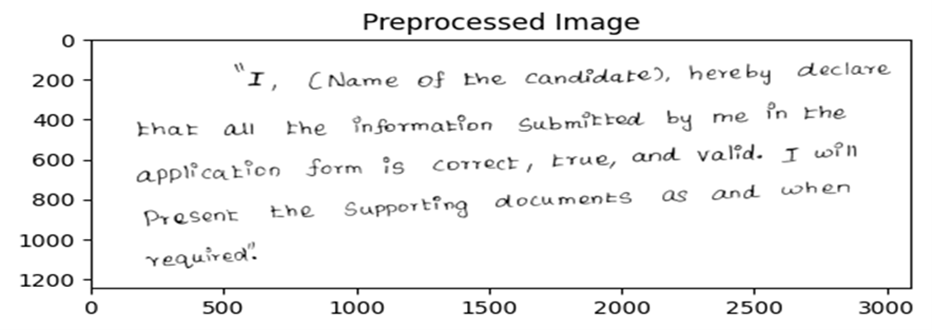

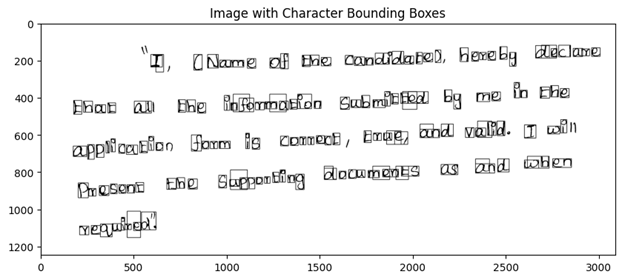

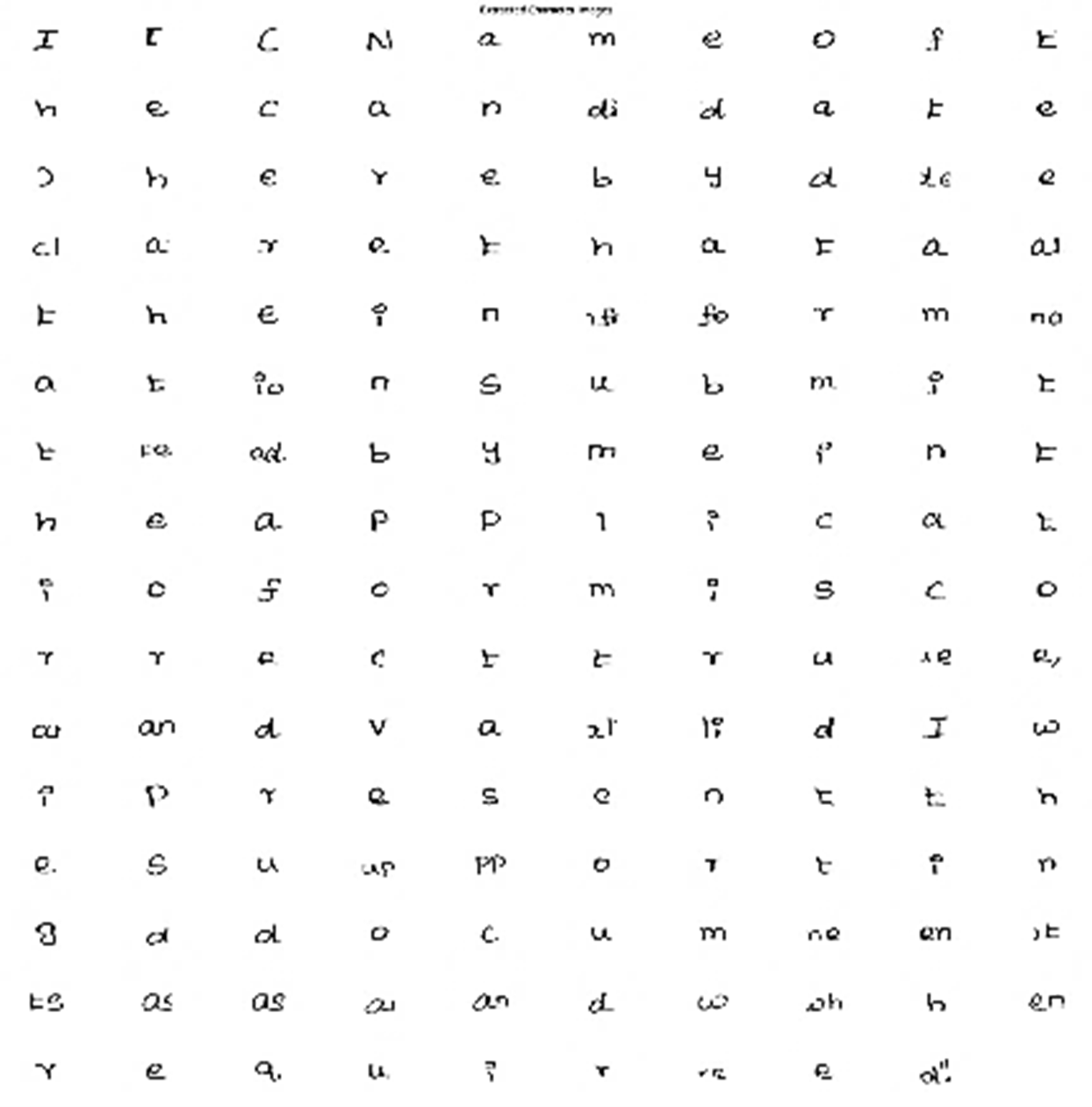

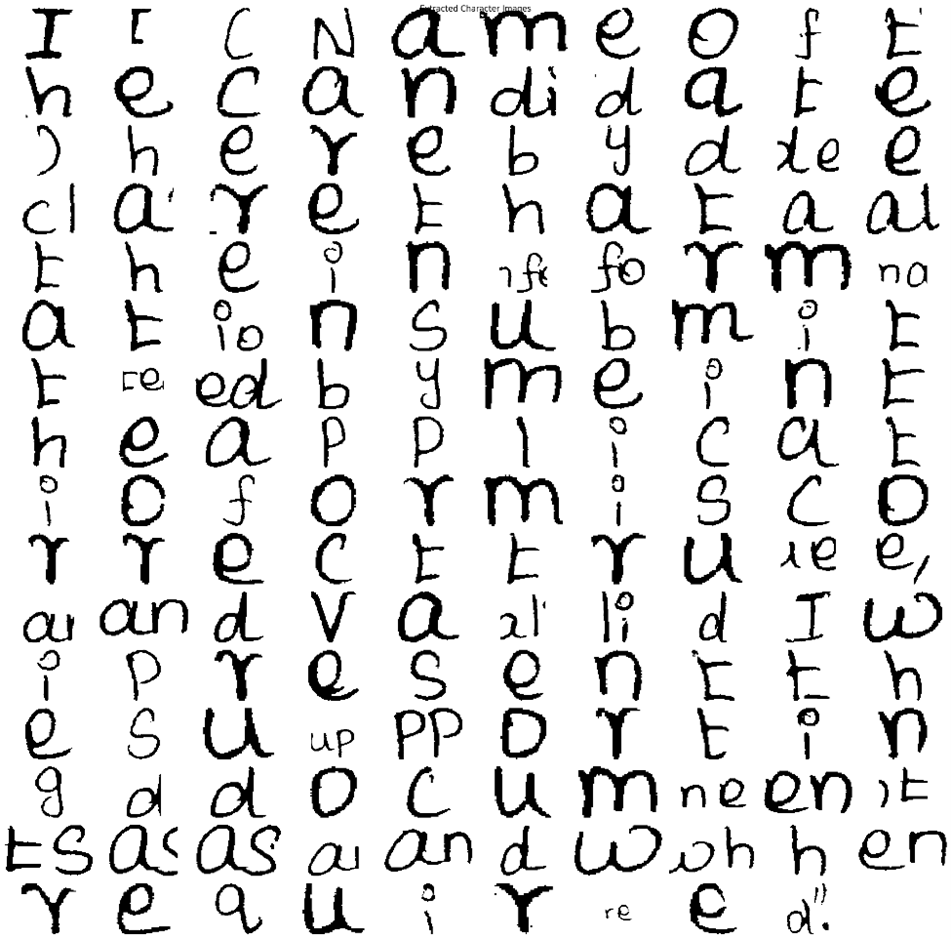

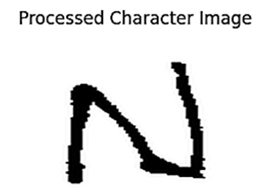

Selection of Characters Using Tesseract OCR

Tesseract OCR was employed to extract handwritten characters from a larger document, facilitating the recognition of individual characters within each sample. The use of Tesseract for character extraction significantly improved the recognition accuracy of DenseNet121, as it helped focus the model’s attention on relevant regions of the image. The process of selecting characters and feeding them into the model for further classification enhanced the overall efficiency and performance of the character recognition system.
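One way to obtain per-character regions is Tesseract's box output, in which each line holds a character followed by its left, bottom, right, and top coordinates measured from the bottom-left corner of the image. The sketch below converts such output into top-left-origin crop rectangles that can be passed to an image library. Whether the study used this exact route is not stated; the helper name and the sample box text are hypothetical.

```python
def parse_tesseract_boxes(box_text, image_height):
    """Convert Tesseract box lines into (char, (left, upper, right, lower)) crops.

    Tesseract measures y from the bottom of the image, whereas most image
    libraries measure it from the top, so the y coordinates are flipped.
    """
    crops = []
    for line in box_text.strip().splitlines():
        parts = line.split()
        if len(parts) < 5:
            continue  # skip malformed or empty lines
        char = parts[0]
        left, bottom, right, top = (int(v) for v in parts[1:5])
        crops.append((char, (left, image_height - top, right, image_height - bottom)))
    return crops

# Hypothetical box output for a 100-pixel-high image.
sample_boxes = "A 10 20 30 50 0\nb 35 18 50 40 0\n"
crops = parse_tesseract_boxes(sample_boxes, image_height=100)
```

With `pytesseract` and Pillow installed, the box text would come from `pytesseract.image_to_boxes(image)`, and each rectangle could be cut out with `image.crop(box)` before being resized and passed to the classifier.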

Scoring and Ranking Process of LangChain

LangChain, the framework used here to drive LLM-based evaluation of text responses, was employed to score subjective answers on accuracy, relevance, and clarity. For example, the answer to the question "What is Machine Learning?" was scored 9 out of 10 for its clear definition, its correct placement of the term within artificial intelligence, and its real-world examples such as image recognition and medical diagnosis.

The answer to a second question, "What is ML?", was scored only 2 out of 10 because it expanded "ML" as "Meta Learning" rather than the far more common "Machine Learning." This result highlights LangChain’s ability to assess not only the accuracy of a response but also the context in which its terms are used.

| Question | Answer | Score (out of 10) | Notes |
|---|---|---|---|
| What is Machine Learning? | ML typically refers to 'Machine Learning,' which is a branch of artificial intelligence (AI) that involves developing algorithms and statistical models that enable computers to learn patterns and make decisions from data without explicit programming. Machine learning algorithms allow systems to improve their performance over time as they are exposed to more data. It's widely used in various applications like image and speech recognition, medical diagnosis, recommendation systems, and more. | 9/10 | The answer is accurate, concise, and well-written. It correctly defines Machine Learning as a branch of AI, explains its core principle of learning from data without explicit programming, and provides relevant examples of its applications. Strengths: ✔ Clear Definition: Directly addresses the question. ✔ Context within AI: Correctly places ML as a subset of AI. ✔ Key Concept: Highlights learning from data and performance improvement. ✔ Examples: Includes real-world applications. Possible Improvement: 🔹 Briefly mentioning different types of ML (supervised, unsupervised, reinforcement learning) would enhance the answer. |
| What is ML? | ML typically refers to 'Meta Learning,' which is a branch of artificial intelligence (AI) that involves developing algorithms and statistical models that enable computers to learn patterns and make decisions from data without explicit programming. Meta learning algorithms allow systems to improve their performance over time as they are exposed to more data. It's widely used in various applications like image and speech recognition, medical diagnosis, recommendation systems, and more. | 2/10 | While the answer provides a technically correct definition of Meta Learning, it fails to identify the much more common meaning of ML as Machine Learning. The response should have addressed the most likely meaning first, then potentially introduced Meta Learning as a less common (though still valid) interpretation. |
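The scoring step can be thought of as two small pieces around the language model call: building a grading prompt from the question and answer, and extracting the numeric score from the model's free-text reply. The sketch below shows both pieces in plain Python. It is not LangChain's API; the template wording and the helper names are hypothetical, and the model call itself is deliberately left out. Only the rubric terms (accuracy, relevance, clarity) and the 10-point scale come from the text.

```python
import re

# Hypothetical prompt wording; the rubric terms follow the text.
GRADING_TEMPLATE = (
    "You are grading a written answer.\n"
    "Question: {question}\n"
    "Answer: {answer}\n"
    "Rate the answer for accuracy, relevance, and clarity.\n"
    "Reply with a score in the form N/10 followed by brief notes."
)

def build_grading_prompt(question, answer):
    """Fill the grading template with one question-answer pair."""
    return GRADING_TEMPLATE.format(question=question, answer=answer)

def parse_score(llm_output):
    """Extract an integer score from text such as 'Score: 9/10'; None if absent."""
    match = re.search(r"\b(10|[0-9])\s*/\s*10\b", llm_output)
    return int(match.group(1)) if match else None

prompt = build_grading_prompt("What is ML?", "ML typically refers to 'Meta Learning' ...")
score = parse_score("2/10 - the answer misses the most common meaning of ML.")
```

Returning `None` when no score is found lets the caller retry or flag the response for manual review instead of silently recording a wrong grade.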

Key Takeaways from the LangChain Evaluation Results

The evaluation by LangChain demonstrated its ability to provide both constructive feedback and high-quality analysis. LangChain praised the accuracy, conciseness, and relevance of the answer on Machine Learning, specifically noting the clarity of the definition and the inclusion of real-world examples. However, it also identified areas for improvement, suggesting that a brief mention of the different types of machine learning (supervised, unsupervised, reinforcement) would further enhance the answer. This feedback showcases LangChain's utility in refining complex responses and making them more insightful, applicable in both educational and professional AI-driven assessments.

Conclusion

This work introduced an AI-powered automated scoring framework for handwritten answers. Tesseract OCR digitizes the handwritten responses so that they can be processed by deep learning models, namely DenseNet121, VGG16, and ResNet50. Among these, DenseNet121 achieved the highest performance with an accuracy of 83.14%, demonstrating its superior ability to handle complex handwriting patterns due to its dense connectivity and efficient feature propagation. LangChain, combined with the Gemini API, provided the semantic evaluation, analyzing responses beyond basic content to assess depth, coherence, and sentiment, thereby enhancing the accuracy and objectivity of the evaluation.

This framework addresses the critical need for consistent and objective grading in both educational and professional settings, eliminating the biases and inconsistencies inherent in traditional subjective assessments. It ensures that grading is based on the clarity and accuracy of the content, providing a fairer and more transparent evaluation process.

However, the framework's reliance on OCR accuracy presents limitations, particularly in recognizing diverse handwriting styles and in its current optimization for the English language. This restricts the system's applicability in multilingual environments and diverse linguistic contexts.

Looking ahead, significant improvements can be made to enhance OCR performance across different handwriting styles, which will be key to increasing the robustness of the system. Furthermore, the development of multilingual capabilities and the integration of advanced natural language processing models, such as BERT and GPT-based architectures, will broaden the framework's applicability and improve its semantic analysis.

Additionally, the introduction of real-time feedback mechanisms could revolutionize the way assessments are conducted, fostering an interactive learning environment where students and professionals can receive instant insights and adjust their work accordingly.

In conclusion, this framework lays the groundwork for a more equitable, accurate, and inclusive approach to assessment, with promising potential for future developments that will further refine and expand its capabilities.