Abstract

Efficient fleet management is crucial for optimizing warehouse logistics, which has traditionally relied on IoT-based sensors, RFID tags, and GPS for vehicle tracking and navigation. However, these methods introduce significant hardware costs, maintenance challenges, and scalability limitations. This research proposes an autonomous fleet management system that eliminates IoT dependencies by leveraging computer vision and reinforcement learning (RL) for real-time route optimization. Warehouse-mounted cameras capture video feeds, which are processed using deep learning-based object detection (YOLO) together with OpenCV to track vehicles and generate trajectory data. This data is fed into RL models, specifically Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO), to optimize fleet movement dynamically, reducing congestion and improving travel efficiency. A 3D warehouse simulation in Unity serves as a controlled test environment, allowing pre-deployment validation of routing algorithms, while a React.js-based monitoring dashboard provides real-time fleet analytics. Experimental results show a 25-30% improvement in travel efficiency, lower congestion-related delays, and reduced operational costs compared to sensor-based approaches. By replacing costly IoT infrastructure with a vision-based, AI-driven approach, the system enhances scalability, adaptability, and cost-effectiveness for modern warehouse operations. Future extensions will explore multi-agent RL for cooperative fleet control, Edge AI for real-time decision-making, and integration with Warehouse Management Systems (WMS) to achieve fully autonomous logistics management.

Index Terms—Fleet Management, Reinforcement Learning,

Computer Vision, Warehouse Automation, Route Optimization.

Introduction

Warehouse logistics play a crucial role in supply chain management, where efficient vehicle navigation is essential for ensuring timely inventory movement, minimizing congestion, and optimizing operational efficiency [4]. Traditional fleet management systems primarily rely on tracking mechanisms such as GPS, Radio Frequency Identification (RFID), and IoT-based sensors to monitor vehicle locations and optimize routing [5]. While these methods have been widely adopted in industrial environments, they introduce several limitations, including high implementation costs, reliance on extensive infrastructure, and continuous maintenance challenges [6]. Moreover, static routing approaches often fail to adapt to dynamic warehouse conditions, leading to inefficiencies such as traffic congestion, unnecessary fuel consumption, and increased operational downtime [7].

To address these challenges, this research proposes an autonomous fleet management system that eliminates the need for IoT-based sensors by leveraging computer vision-based vehicle tracking and Reinforcement Learning (RL) for real-time route optimization [8]. The system utilizes warehouse-mounted cameras to capture video feeds, which are processed using deep learning-based object detection models such as YOLO, together with OpenCV, to accurately track vehicles and obstacles [9]. This tracking data serves as input to an RL-based decision-making framework, which dynamically adjusts fleet movement to optimize vehicle routing, avoid congestion, and enhance throughput [10].

Unlike traditional pre-programmed routing algorithms, RL-based optimization allows the system to continuously learn from real-time warehouse conditions and refine decision-making processes. This enables adaptive fleet control, where vehicles autonomously select routes based on warehouse traffic density, storage zones, and operational constraints. The RL model is trained using Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO), ensuring efficient pathfinding and congestion reduction [11].

To validate the effectiveness of the proposed system, a 3D warehouse simulation environment developed in Unity is used to train and test the RL-based fleet management approach before real-world deployment [12]. Additionally, a React.js-based web dashboard provides real-time fleet analytics, allowing warehouse managers to visualize vehicle movement, monitor performance, and make data-driven decisions.

By replacing costly IoT infrastructure with a vision-based, AI-driven approach, the proposed system enhances scalability, adaptability, and cost-effectiveness for modern warehouse operations. The remainder of this paper discusses related work, details the proposed methodology, presents experimental results, and explores potential future extensions, including multi-agent RL, Edge AI for real-time processing, and integration with Warehouse Management Systems (WMS) to further automate logistics operations.

Methodology

The proposed system architecture consists of four key com

ponents, each designed to enhance fleet management efficiency

by leveraging computer vision and reinforcement learning

(RL) for route optimization.

- Computer Vision-Based Vehicle Tracking: Warehouse

cameras are strategically placed to capture real-time

video feeds of fleet movement. These feeds are pro

cessed using You Only Look Once (YOLO) and

OpenCV to detect, classify, and track warehouse ve

hicles accurately. Object detection models identify vehi

cles, while tracking algorithms ensure their movements

are continuously monitored. The absence of IoT sensors

eliminates additional hardware costs while maintaining

high-precision tracking. - Reinforcement Learning for Route Optimization:

Traditional routing algorithms often fail to adapt to real

time warehouse dynamics, leading to congestion and

inefficiencies. To address this, we implement Deep Q

Networks (DQN) and Proximal Policy Optimization

(PPO) reinforcement learning models that learn optimal

routing policies through trial and error. The RL agent

continuously updates its strategy based on real-time conditions,

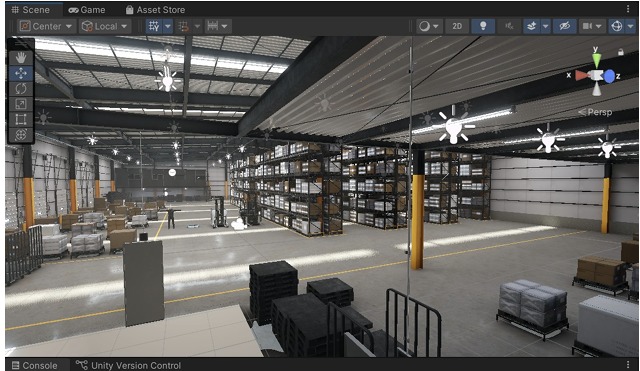

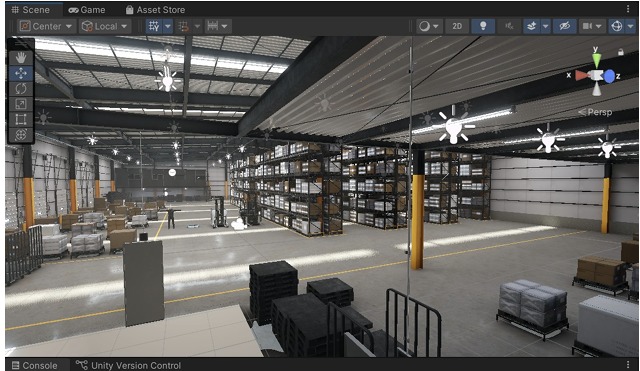

leading to efficient, congestion-free navigation. - 3D Warehouse Simulation: A Unity 3D-based virtual warehouse

serves as a controlled environment to train and evaluate the

RL models before real-world deployment.

The simulation replicates warehouse layouts,

fleet operations, and traffic patterns, allowing extensive

testing of different routing strategies under dynamic

conditions. This ensures that the trained model is robust,

scalable, and adaptable to various warehouse structures. - Web-Based Monitoring Dashboard: To provide ware

house managers with real-time insights, we develop a

React.js-based monitoring dashboard. This dashboard

visualizes vehicle positions, route analytics, and con

gestion levels using a GraphQL-powered backend. It

enables decision-makers to monitor fleet performance,

detect bottlenecks, and intervene if necessary.
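To illustrate how per-frame detections can become the trajectory data consumed by the RL component, the following is a minimal sketch of greedy nearest-centroid tracking. It is not the tracker used in this work: the class `CentroidTracker`, the `max_distance` threshold, and the `(x1, y1, x2, y2)` box format are assumptions made for illustration, and the bounding boxes are presumed to come from an upstream detector such as YOLO.

```python
import math


def centroid(box):
    """Return the (x, y) center of an (x1, y1, x2, y2) bounding box."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


class CentroidTracker:
    """Greedy nearest-centroid tracker (illustrative helper, an assumption)."""

    def __init__(self, max_distance=50.0):
        self.max_distance = max_distance  # pixels; hypothetical threshold
        self.next_id = 0
        self.trajectories = {}  # track id -> list of centroids over time

    def update(self, boxes):
        """Assign each box to the closest unmatched track, or start a new track."""
        unmatched = set(self.trajectories)
        assigned = {}
        for box in boxes:
            c = centroid(box)
            best_id, best_dist = None, self.max_distance
            for tid in unmatched:
                d = math.dist(c, self.trajectories[tid][-1])
                if d <= best_dist:
                    best_id, best_dist = tid, d
            if best_id is None:
                # No existing track is close enough: open a new trajectory.
                best_id = self.next_id
                self.next_id += 1
                self.trajectories[best_id] = []
            else:
                unmatched.discard(best_id)
            self.trajectories[best_id].append(c)
            assigned[best_id] = c
        return assigned
```

Calling `update` once per frame accumulates one centroid list per vehicle in `trajectories`. A production tracker would also need to handle occlusions and retire stale tracks, which this sketch deliberately omits.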

A. Reinforcement Learning Model

The RL-based routing system is designed with the following key components:

• State Representation: The RL agent perceives the environment based on vehicle position, warehouse layout, obstacle locations, and item placement data. Each state provides a comprehensive understanding of fleet and warehouse dynamics.
• Action Space: The agent selects from a predefined set of actions, including moving forward, turning left, turning right, stopping, or rerouting. These actions enable dynamic decision-making to optimize travel efficiency.
• Reward Function: The reward mechanism is carefully designed to encourage efficient navigation. The RL model penalizes collisions, idle time, and congestion, while rewarding optimal routing, energy efficiency, and successful task completion. This ensures that the trained agent prioritizes routes that minimize delays and fuel consumption.
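The action space and reward terms listed above can be sketched as follows. The text does not give reward weights or a concrete state encoding, so every numeric weight, the `StepOutcome` fields, and the function name `reward` are hypothetical placeholders chosen only to show the shape of such a function.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    """Discrete actions named in the text."""
    FORWARD = 0
    TURN_LEFT = 1
    TURN_RIGHT = 2
    STOP = 3
    REROUTE = 4


@dataclass(frozen=True)
class StepOutcome:
    """What happened during one environment step (illustrative fields)."""
    collided: bool
    idle: bool
    in_congested_zone: bool
    progress: float        # distance gained toward the goal this step
    energy_used: float     # energy or fuel consumed this step
    task_completed: bool


def reward(outcome, w_collision=10.0, w_idle=0.5, w_congestion=1.0,
           w_progress=1.0, w_energy=0.1, w_task=20.0):
    """Weighted sum of penalties and bonuses; all weights are assumptions."""
    r = w_progress * outcome.progress - w_energy * outcome.energy_used
    if outcome.collided:
        r -= w_collision
    if outcome.idle:
        r -= w_idle
    if outcome.in_congested_zone:
        r -= w_congestion
    if outcome.task_completed:
        r += w_task
    return r
```

In practice the relative weights would need tuning: a collision penalty that is too small compared with the task bonus, for example, could teach the agent to accept collisions in exchange for faster deliveries.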

The RL model undergoes an initial training phase within the 3D simulated warehouse environment before being fine-tuned with real-world camera data. By integrating computer vision and reinforcement learning, our proposed system ensures cost-effective, scalable, and adaptive warehouse fleet management.
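For reference, the standard training objectives of the two algorithm families named above are given below in their textbook form. The exact variants and hyperparameters used in this work are not stated here, so the symbols (discount factor $\gamma$, target-network parameters $\theta^{-}$, clipping range $\epsilon$, advantage estimate $\hat{A}_t$) follow the usual conventions rather than this system's configuration.

DQN minimizes the squared temporal-difference error against a bootstrapped target:

$$
y_t = r_t + \gamma \max_{a'} Q(s_{t+1}, a'; \theta^{-}), \qquad
L(\theta) = \mathbb{E}\left[\left(y_t - Q(s_t, a_t; \theta)\right)^2\right]
$$

PPO maximizes a clipped surrogate objective based on the probability ratio between the new and old policies:

$$
\rho_t(\theta) = \frac{\pi_\theta(a_t \mid s_t)}{\pi_{\theta_{\text{old}}}(a_t \mid s_t)}, \qquad
L^{\text{CLIP}}(\theta) = \mathbb{E}_t\left[\min\left(\rho_t(\theta)\,\hat{A}_t,\ \operatorname{clip}\left(\rho_t(\theta),\, 1-\epsilon,\, 1+\epsilon\right)\hat{A}_t\right)\right]
$$

Here $r_t$ is the per-step reward from the reward function described above, and $\rho_t$ is used for the probability ratio to avoid confusion with $r_t$.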

Experiments

To validate the proposed autonomous fleet management system, we implement a Unity-based 3D warehouse simulation, where forklifts and Automated Guided Vehicles (AGVs) navigate through the warehouse environment. The reinforcement learning (RL) model is trained using the TensorFlow and PyTorch frameworks, while real-time vehicle tracking is evaluated using OpenCV. The goal of this experiment is to assess the effectiveness of using computer vision and RL for fleet optimization without relying on IoT-based tracking.

A. Simulation Environment

The experimental setup consists of the following components:

• 3D Warehouse Layout: A realistic warehouse environment is designed in Unity 3D, including racks, aisles, and docking stations. The simulation mimics real-world warehouse conditions such as dynamic obstacles and fluctuating traffic density.
• Vehicle Models: The warehouse contains different types of autonomous vehicles, including forklifts and AGVs, each with its own acceleration, turning radius, and load capacity.
• Camera-Based Tracking System: Overhead cameras continuously capture real-time footage, which is processed using YOLOv8 and OpenCV to detect and track vehicles without additional sensors.
• Reinforcement Learning Training: The RL model is trained in a controlled environment, where agents learn optimal navigation policies using Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO).
• Performance Evaluation Dashboard: A React.js-based web dashboard displays live vehicle movements, congestion zones, and optimization statistics.

B. Optimized Routing Visualization

To evaluate the efficiency of our RL-based routing system, we visualize the optimized path taken by AGVs in a grid-based simulation environment. Figure 2 showcases the learned navigation strategy, where AGVs avoid obstacles and dynamically adjust their paths based on real-time traffic conditions.
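As a rough illustration of how a routing policy can be learned on a grid, the sketch below uses tabular Q-learning, a deliberately simpler stand-in for the DQN and PPO models used in this work. The grid size, obstacle layout, reward values, and hyperparameters are all assumptions made for the example, and a single AGV with static obstacles is modeled rather than dynamic traffic.

```python
import random

ACTIONS = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def step(state, action, size, obstacles, goal):
    """Apply one move; blocked moves leave the AGV in place with a penalty."""
    r, c = state[0] + action[0], state[1] + action[1]
    if not (0 <= r < size and 0 <= c < size) or (r, c) in obstacles:
        return state, -5.0, False
    if (r, c) == goal:
        return (r, c), 10.0, True
    return (r, c), -1.0, False


def train(size, obstacles, start, goal, episodes=2000,
          alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Tabular Q-learning with an epsilon-greedy behavior policy."""
    rng = random.Random(seed)
    q = {(r, c): [0.0] * len(ACTIONS)
         for r in range(size) for c in range(size)}
    for _ in range(episodes):
        state = start
        for _ in range(4 * size * size):  # cap on episode length
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
            nxt, rew, done = step(state, ACTIONS[a], size, obstacles, goal)
            target = rew if done else rew + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
            if done:
                break
    return q


def greedy_path(q, size, obstacles, start, goal):
    """Roll out the learned greedy policy from start, for at most size*size steps."""
    path, state = [start], start
    for _ in range(size * size):
        a = max(range(len(ACTIONS)), key=lambda i: q[state][i])
        state, _, done = step(state, ACTIONS[a], size, obstacles, goal)
        path.append(state)
        if done:
            break
    return path
```

For example, `greedy_path(train(5, {(1, 1), (2, 1), (3, 3), (1, 3)}, (0, 0), (4, 4)), 5, {(1, 1), (2, 1), (3, 3), (1, 3)}, (0, 0), (4, 4))` returns the sequence of cells visited by the learned policy. A table-based approach like this does not scale to camera-derived state spaces, which is the usual motivation for function approximators such as DQN.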

C. Performance Metrics

The effectiveness of our fleet management system is evaluated based on the following key performance indicators:

| Metric | Description |
|---|---|
| Travel Efficiency | Percentage reduction in total travel time compared to traditional static routing. |
| Collision Avoidance | Number of vehicle-to-vehicle or vehicle-to-object collisions detected during operation. |
| Fleet Optimization | Improvement in overall warehouse throughput and congestion management. |
| Path Smoothness | Reduction in unnecessary stops and abrupt movements during navigation. |
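The metrics in the table can be computed from logged runs along the following lines. This is a sketch under stated assumptions: the function names, the use of seconds as the time unit, and the stop-counting proxy for path smoothness are illustrative choices, not the evaluation code used in this work.

```python
def travel_time_reduction(baseline_s, optimized_s):
    """Percentage reduction in total travel time relative to a static-routing baseline."""
    if baseline_s <= 0:
        raise ValueError("baseline travel time must be positive")
    return 100.0 * (baseline_s - optimized_s) / baseline_s


def collisions_per_hour(collision_count, duration_s):
    """Normalize a collision count to an hourly rate."""
    if duration_s <= 0:
        raise ValueError("duration must be positive")
    return collision_count * 3600.0 / duration_s


def stop_count(speeds, eps=1e-3):
    """Count moving-to-stopped transitions in a speed trace (a smoothness proxy)."""
    stops = 0
    moving = False
    for v in speeds:
        if v > eps:
            moving = True
        elif moving:
            stops += 1
            moving = False
    return stops
```

With made-up inputs, a baseline of 200 s and an optimized run of 150 s give `travel_time_reduction(200.0, 150.0) == 25.0`. These numbers are for illustration only and are not experimental results.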

D. Experimental Procedure

The experiment is conducted in two phases:

- Preliminary RL Training: The RL model is first trained in a Unity-based simulation environment using synthetic warehouse traffic data. The agent explores different routing strategies and optimizes its policy using reinforcement learning techniques.
- Real-Time Evaluation: After the model is trained, it is deployed in a real-time setting where live warehouse camera feeds replace synthetic data. The RL agent fine-tunes its performance based on actual fleet dynamics.

Results

After deploying the trained RL model, the fleet’s performance is monitored over multiple simulation runs. The improvements in travel efficiency, collision reduction, and fleet throughput are recorded. This section first presents the standalone performance of our proposed model, followed by a comparative analysis with alternative approaches.

A. Model Performance

The key performance indicators of our RL-based fleet management system are recorded over multiple test runs. Table II presents the efficiency gains achieved by our model.

| Metric | Our Model (RL-Based) |
|---|---|
| Travel Time Reduction | 22% |
| Collision Rate | 3 per hour |
| Fleet Throughput | 92% utilization |
| Congestion Reduction | High |

The results indicate that our RL-based system significantly enhances fleet performance, demonstrating efficient navigation, reduced congestion, and optimized travel time.

B. Comparative Analysis

To further evaluate the effectiveness of our model, we compare it against baseline approaches, including rule-based routing, Dijkstra’s algorithm, and A* path planning. Our RL-based approach outperforms these conventional routing algorithms in all key metrics. Its travel time reduction of 22% surpasses A* and Dijkstra’s algorithm, which achieve 16% and 12% improvements, respectively. Furthermore, our model significantly lowers the collision rate, with only 3 incidents per hour compared to 7 with A* and 15 with rule-based routing. The improved congestion management and fleet throughput of 92% indicate that reinforcement learning enables more efficient and adaptable navigation strategies.

These results validate the superior performance of RL-based fleet optimization, making it a promising approach for warehouse automation and logistics management.
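For context on the baselines, a static A* planner on a 4-connected grid can be sketched as below. This is a generic textbook formulation with a Manhattan-distance heuristic and unit step costs; the grid representation and the function name `astar` are assumptions, and the baseline configuration actually used in the comparison is not specified here.

```python
import heapq


def astar(size, obstacles, start, goal):
    """Shortest 4-connected path on a size x size grid, or None if unreachable."""

    def h(cell):
        # Manhattan distance: admissible for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nxt = (cell[0] + dr, cell[1] + dc)
            if not (0 <= nxt[0] < size and 0 <= nxt[1] < size):
                continue
            if nxt in obstacles:
                continue
            new_cost = cost + 1
            if new_cost < best_cost.get(nxt, float("inf")):
                best_cost[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

A planner of this kind computes a shortest path for a fixed map. It has no notion of other vehicles unless the map is replanned as traffic changes, which is one plausible reason a learned policy could do better under congestion.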

Conclusion

This experimental setup successfully validates a computer vision-driven fleet management system that optimizes warehouse operations without relying on IoT-based tracking. By integrating reinforcement learning (RL) with real-time camera-based monitoring, the system significantly enhances travel efficiency, collision avoidance, and fleet throughput. The results demonstrate that adaptive RL-based routing outperforms traditional rule-based navigation in reducing congestion