GitHub Repository:

https://github.com/lookmohan/multi-agent-healthcare-rag-langgraph

Google Colab Notebook (Executable):

https://colab.research.google.com/drive/1S58SpHUMD9Yr65NqIbQjLizXTbKFFF0_?usp=sharing

Large Language Models (LLMs) have rapidly become powerful tools for natural language understanding and generation. In domains such as healthcare, they show promise for tasks like clinical question answering, literature summarization, and decision support. However, healthcare is a high-stakes domain, where incorrect, biased, or overconfident responses can have serious consequences.

Traditional LLM-based systems and even standard Retrieval-Augmented Generation (RAG) pipelines often suffer from key limitations:

This project introduces a confidence-aware multi-agent RAG system built using LangGraph, designed to address these challenges through explicit agent collaboration, risk analysis, and iterative refinement.

Single-agent RAG systems typically follow a linear pipeline: retrieve documents → generate answer. While effective in some domains, this approach is insufficient for healthcare because:

The goal of this project is to design a system that:

Healthcare decision-making naturally involves multiple perspectives: research evidence, clinical insight, risk assessment, and quality evaluation. This project mirrors that structure by assigning distinct responsibilities to different AI agents, rather than relying on a single monolithic model.
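The division of labor described above can be sketched in plain Python. This is a hypothetical illustration, not the project's code. The agent function names, the shared-state keys other than `query`, and the toy keyword and scoring rules all stand in for what would be retriever and LLM calls in the real system.

```python
# Hypothetical sketch: each agent owns one responsibility and reads/writes a shared dict.
def research_agent(state: dict) -> dict:
    # Stand-in for retrieval + evidence summarization: keep non-empty documents.
    docs = state.get("documents", [])
    return {"evidence": [d for d in docs if d.strip()]}


def clinical_agent(state: dict) -> dict:
    # Stand-in for an LLM call that drafts an answer grounded in the evidence.
    cited = "; ".join(state["evidence"]) or "no evidence"
    return {"draft": f"Answer to '{state['query']}' based on: {cited}"}


def risk_agent(state: dict) -> dict:
    # Toy keyword check standing in for LLM-based risk analysis.
    flagged_terms = ("dosage", "diagnosis", "contraindication")
    query = state["query"].lower()
    return {"risks": [t for t in flagged_terms if t in query]}


def quality_agent(state: dict) -> dict:
    # Toy scoring rule: more evidence raises confidence, each flagged risk lowers it.
    score = 0.3 + 0.2 * len(state["evidence"]) - 0.1 * len(state["risks"])
    return {"confidence": max(0.0, min(1.0, score))}


AGENTS = [research_agent, clinical_agent, risk_agent, quality_agent]


def run_agents(query: str, documents: list) -> dict:
    state = {"query": query, "documents": documents}
    for agent in AGENTS:
        # Each agent returns only the fields it owns; merge them into the shared state.
        state.update(agent(state))
    return state
```

Keeping each agent's output to the fields it owns makes it easy to see which agent produced which part of the final answer. That traceability is the main argument for splitting the work instead of prompting one model for everything.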

Using a multi-agent architecture enables:

LangGraph is used as the orchestration framework to manage agent execution, state transitions, and iterative control.
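The following dependency-free sketch shows what "state transitions and iterative control" mean in practice. `MiniGraph` is a hypothetical teaching class, not LangGraph's API. In LangGraph, the corresponding pieces are `StateGraph`, `add_node`, `add_edge`, `add_conditional_edges`, and `compile()`. The `draft`/`review` nodes and the 0.7 threshold are illustrative only.

```python
from typing import Callable, Dict

END = "__end__"  # sentinel name borrowed from LangGraph's END, for readability only

Node = Callable[[dict], dict]
Router = Callable[[dict], str]


class MiniGraph:
    """Hypothetical, dependency-free stand-in for a LangGraph StateGraph (not its API)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.routers: Dict[str, Router] = {}

    def add_node(self, name: str, fn: Node) -> None:
        self.nodes[name] = fn

    def add_edge(self, src: str, dst: str) -> None:
        # A fixed edge is a router that always returns the same target.
        self.routers[src] = lambda _state, target=dst: target

    def add_conditional_edge(self, src: str, router: Router) -> None:
        # The router inspects the current state and names the next node (or END).
        self.routers[src] = router

    def run(self, entry: str, state: dict, max_steps: int = 20) -> dict:
        current = entry
        for _ in range(max_steps):
            # Nodes return partial updates that are merged into the shared state.
            state = {**state, **self.nodes[current](state)}
            current = self.routers[current](state)
            if current == END:
                return state
        raise RuntimeError("max_steps exceeded; check routing for an unbounded loop")


# Illustrative nodes: each drafting pass raises a toy confidence score.
def draft(state: dict) -> dict:
    n = state.get("iteration", 0) + 1
    return {"iteration": n, "confidence": 0.4 + 0.2 * n}


def review(state: dict) -> dict:
    return {"reviewed": True}


graph = MiniGraph()
graph.add_node("draft", draft)
graph.add_node("review", review)
graph.add_edge("draft", "review")
graph.add_conditional_edge("review", lambda s: END if s["confidence"] >= 0.7 else "draft")

final = graph.run("draft", {"query": "example question", "messages": []})
```

Here the first draft scores 0.6, so `review` routes back to `draft`. The second pass reaches 0.8 and the run ends. The `max_steps` guard plays a role similar to the recursion limit LangGraph applies to graph runs: a routing bug cannot loop forever.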

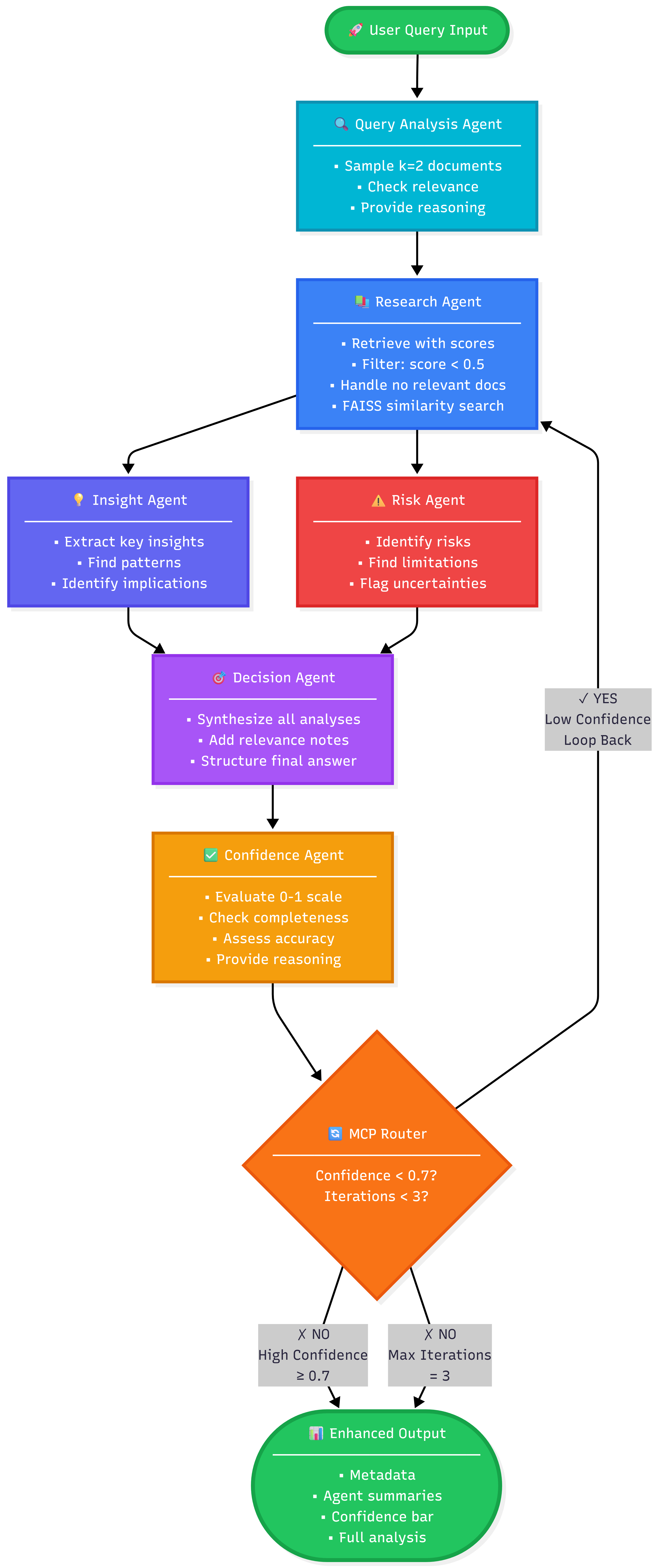

The system follows a structured, agentic workflow:

A confidence-aware routing mechanism is implemented:

This prevents both:
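The confidence-aware routing described above can be sketched as a single decision function. It assumes the evaluation agent emits a confidence score in [0, 1]. The threshold, the iteration cap, and the route names are hypothetical, since the project's actual values are not stated here.

```python
CONFIDENCE_THRESHOLD = 0.75  # hypothetical; not the project's documented value
MAX_ITERATIONS = 3  # hypothetical cap on refinement rounds


def route_after_evaluation(
    confidence: float,
    iteration: int,
    threshold: float = CONFIDENCE_THRESHOLD,
    max_iterations: int = MAX_ITERATIONS,
) -> str:
    """Decide the next step after the evaluation agent has scored an answer."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    if confidence >= threshold:
        return "finalize"  # confident enough: accept the answer
    if iteration >= max_iterations:
        return "finalize_with_warning"  # out of refinement budget: stop, but flag low confidence
    return "refine"  # low confidence with budget left: send back for another pass
```

In a LangGraph build, a function like this would typically be wrapped to read from the graph state and passed to `add_conditional_edges` on the evaluation node. For example, it could be wrapped as `lambda state: route_after_evaluation(state["confidence"], state["iteration"])`, where those state keys are assumptions. Checking the threshold before the iteration cap means a good answer is accepted as soon as it appears. The cap only matters when refinement keeps failing.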

This project is designed to run entirely in Google Colab, ensuring easy reproducibility.

Open the Colab notebook using the link below:

[https://colab.research.google.com/drive/1S58SpHUMD9Yr65NqIbQjLizXTbKFFF0_?usp=sharing](https://colab.research.google.com/drive/1S58SpHUMD9Yr65NqIbQjLizXTbKFFF0_?usp=sharing)

Enable a GPU runtime via Runtime → Change runtime type → Hardware Accelerator → GPU.

```
!pip install langgraph langchain faiss-cpu sentence-transformers pypdf groq
```

```python
import os

# Provide the Groq API key used by the LLM client (replace with your own key)
os.environ["GROQ_API_KEY"] = "your_api_key_here"
```

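```python
# `app` is assumed to be the compiled LangGraph workflow defined earlier in the notebook
result = app.invoke({
    "query": "What are the main risks and best practices for deploying LLMs in healthcare?",
    "messages": [],
})
```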

The system produces:

If no relevant documents are found, the system explicitly states this and lowers the confidence score, avoiding misleading certainty.
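The no-evidence behavior described above could look roughly like the following sketch. It assumes the retriever returns `(text, similarity)` pairs where higher means more similar, for example after converting FAISS search results. The 0.5 relevance cutoff, the halving penalty, and the wording of the note are hypothetical.

```python
from typing import List, Tuple

NO_EVIDENCE_NOTE = (
    "No relevant documents were found for this query; "
    "the answer below is not grounded in retrieved sources."
)


def apply_evidence_check(
    answer: str,
    confidence: float,
    scored_docs: List[Tuple[str, float]],
    min_score: float = 0.5,
    penalty: float = 0.5,
) -> Tuple[str, float]:
    """Flag ungrounded answers and scale their confidence down."""
    # Keep only documents whose similarity clears the (hypothetical) relevance cutoff.
    relevant = [text for text, score in scored_docs if score >= min_score]
    if not relevant:
        # State the gap explicitly and multiply confidence by the penalty factor.
        return f"{NO_EVIDENCE_NOTE}\n{answer}", confidence * penalty
    return answer, confidence
```

A multiplicative penalty keeps the adjusted score inside [0, 1] and preserves the ordering between answers. An additive penalty would need extra clamping.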

This project demonstrates how agentic AI principles and multi-agent orchestration using LangGraph can improve the safety, transparency, and reliability of RAG systems in healthcare. By decomposing reasoning across specialized agents and introducing confidence-based control, the system provides a strong foundation for responsible AI deployment in high-risk domains.