🧾 Abstract

This work presents a production-ready multi-agent publication review system capable of analyzing GitHub repository documentation and generating actionable improvement guidance. The system extends earlier prototypes by incorporating Human-in-the-Loop validation, deterministic pipelines, enhanced reliability safeguards, automated testing, and a graphical user interface. The agents collaboratively inspect README structure, extract semantic keywords, propose metadata enhancements, and rewrite content for improved clarity, before synthesizing findings into a consolidated professional report. The project demonstrates how agent-based reasoning can be successfully translated into deployable AI systems with strong usability, resilience, and interpretability characteristics.

📝 Introduction

Modern AI systems require more than raw model intelligence — they demand orchestrated workflows, safety constraints, interpretability, and meaningful human control. This project builds on earlier agentic foundations by converting a prototype multi-agent reviewer into a production-ready software system capable of analyzing GitHub repositories, synthesizing feedback, and providing human-editable recommendations. The work fuses modular agents, supervision mechanisms, execution resilience, logging observability, and a simple interface into a unified platform. The objective is not just to automate insight generation, but to engineer a system that behaves predictably, recovers from failure, communicates transparently with users, and supports iterative refinement — characteristics fundamental to deployable agentic AI applications.

🧪 Methodology

This work was executed through an engineering-driven, iteration-based development cycle. The system evolved from the Module 2 prototype into a production-ready multi-agent architecture by refining agent roles, enforcing controlled orchestration, and strengthening reliability guarantees. The pipeline remained sequential — analyzing the repository, extracting keywords, generating improved content suggestions, and synthesizing a final reviewer outcome — but the internal execution logic was hardened through state isolation, error handling, and deterministic transitions.

To support dependable operation, the GitHub retrieval mechanism was redesigned with retry logic, raw-content fallbacks, and explicit failure messages, reducing sensitivity to network inconsistencies and broken repository files. Human-in-the-loop controls were embedded into the workflow so that users can inspect, accept, or edit intermediate results, which provides oversight, accountability, and direct influence on downstream reasoning. Safety was addressed through exception supervision, input validation, and early-exit detection when content is missing or invalid, so the pipeline degrades gracefully instead of crashing.
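The retry-and-fallback behavior described above can be sketched as follows. This is a minimal illustration, not the project's actual fetcher: `fetch_with_retry`, `FetchError`, and the idea of passing each source (for example an API call and a raw-content download) as a callable are assumptions made for the example.

```python
import time
from typing import Callable, Optional, Sequence


class FetchError(Exception):
    """Raised when every source and every retry has failed (hypothetical name)."""


def fetch_with_retry(
    sources: Sequence[Callable[[], str]],
    retries: int = 3,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each source in order, retrying each with exponential backoff."""
    last_error: Optional[Exception] = None
    for source in sources:
        for attempt in range(retries):
            try:
                text = source()
                if text.strip():
                    return text
                last_error = ValueError("empty response")
            except Exception as exc:  # sketch only; real code would catch narrower errors
                last_error = exc
            # Wait before the next attempt on the same source: 0.5s, 1s, 2s, ...
            if attempt < retries - 1:
                sleep(base_delay * (2 ** attempt))
    # Explicit failure message instead of a silent empty result.
    raise FetchError(f"README could not be retrieved: {last_error}")
```

Passing `sleep` as a parameter is a design choice for the sketch: tests can substitute a no-op and avoid real delays.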

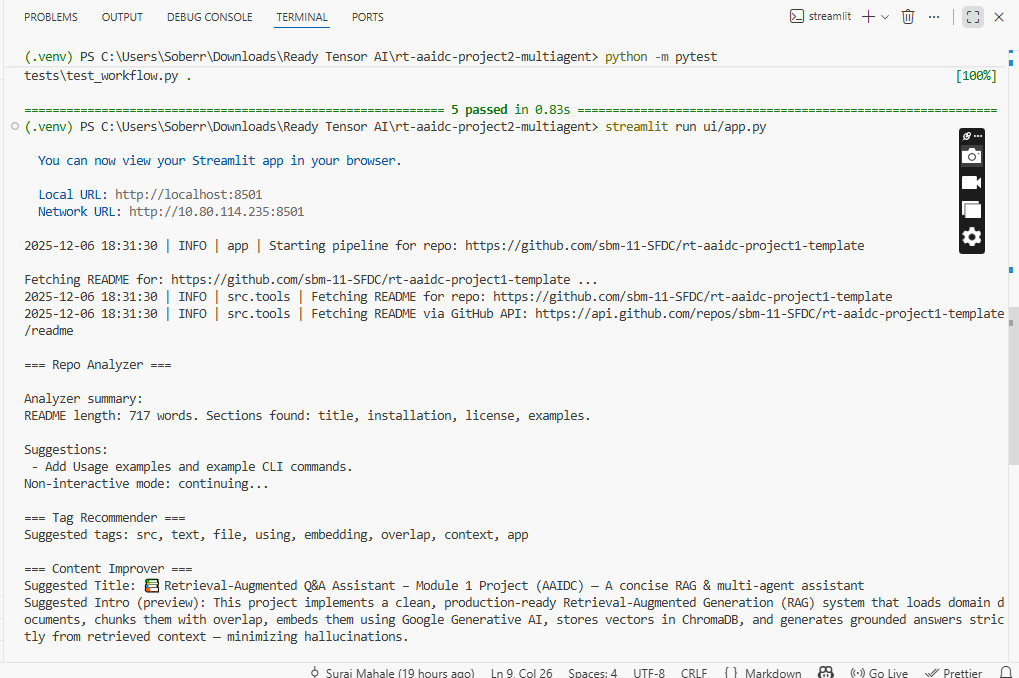

The reliability of the system was validated using an automated testing suite covering sanitization, tool behavior, orchestration integrity, and the flow of human edits through the pipeline. A lightweight UI was then developed using Streamlit, abstracting the pipeline behind an accessible interface and enabling non-technical users to interact with the system without CLI knowledge. Logging, timestamped output storage, .env-based configuration, and repository documentation ensured traceability, repeatability, and deployment readiness. Collectively, these methodological steps transformed a functional prototype into a usable, resilient multi-agent system aligned with real-world engineering expectations.
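As an illustration of the timestamped output storage and logging mentioned above, a report writer might look roughly like this. The function name `save_report`, the logger name, and the file-name pattern are hypothetical and chosen only for the example.

```python
import logging
from datetime import datetime
from pathlib import Path
from typing import Optional

logger = logging.getLogger("publication_reviewer")  # hypothetical logger name


def save_report(report: str, output_dir: Path, now: Optional[datetime] = None) -> Path:
    """Write the report to a timestamped text file and log where it went."""
    now = now or datetime.now()
    output_dir.mkdir(parents=True, exist_ok=True)
    # A timestamp in the file name gives each run its own file.
    path = output_dir / f"review_{now:%Y%m%d_%H%M%S}.txt"
    path.write_text(report, encoding="utf-8")
    logger.info("Saved report to %s", path)
    return path
```

Accepting `now` as an optional argument makes the file name predictable in tests while defaulting to the current time in normal use.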

🔍 Problem Statement

While AI-generated documentation feedback exists, most systems lack structured reasoning, human supervision, robustness against failure, and a usable interface for non-technical stakeholders. Repository reviews often produce surface-level suggestions without explainability or refinement loops. This project addresses the need for a production-ready system capable of multi-step reasoning, evaluative synthesis, human correction, and reliable execution — transforming raw repository data into actionable technical improvement guidance.

🎯 High-Level Objective

The goal of the system is to convert GitHub repository documentation into professionally analyzable review output while ensuring reliability, transparency, and user control. The system orchestrates multiple specialized agents to analyze structure, extract keywords, suggest improvements, and consolidate insights into a reviewer-style report, all while enabling user interaction and refining outputs for practical adoption.

🏗️ Architecture Overview

The system is designed as a pipeline orchestrating four collaborative agents:

| Agent | Responsibility |
|---|---|
| Repo Analyzer | Inspects README structure and builds a baseline assessment |
| Tag Recommender | Extracts semantic keywords and proposes tags |
| Content Improver | Rewrites titles and introductions for clarity |
| Reviewer Agent | Synthesizes and summarizes all recommendations |

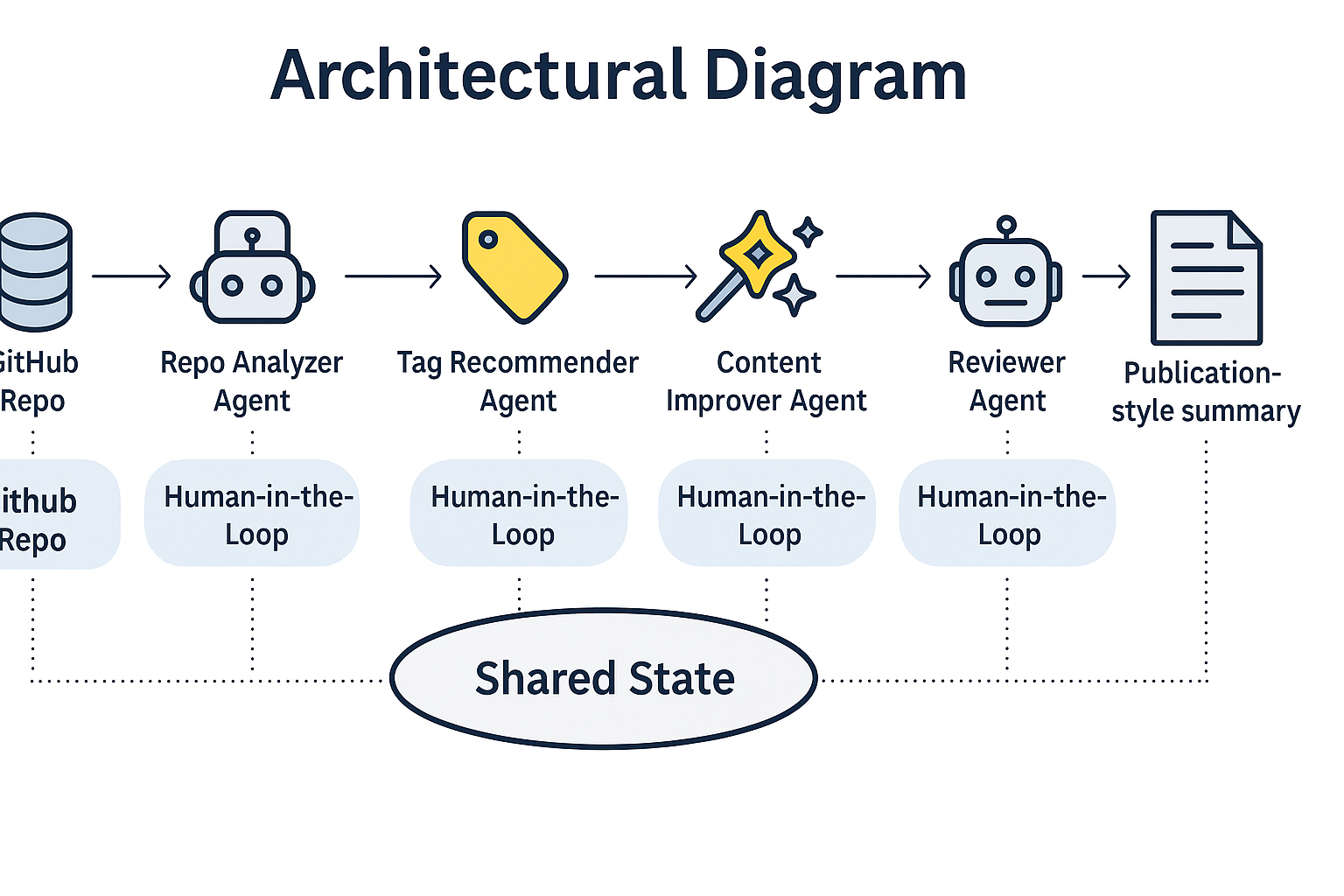

🏗️ Architectural Diagram

🏗️ Architecture Diagram Explanation

At its core, the system follows a structured multi-agent pipeline. The Fetcher retrieves repository README content. The Repo Analyzer Agent interprets structural signals, identifies missing elements, and populates state. Its output feeds the Tag Recommender Agent, which extracts keywords using YAKE and synthesizes meaningful project tags. Results then flow into the Content Improver Agent, which constructs candidate titles and introductory text based on semantic cues. The Reviewer Agent collects all accumulated outputs, fuses them into a single narrative, and produces a publication-style summary.

A shared state container ensures deterministic progression and avoids information loss across agent boundaries. Human-in-the-Loop checkpoints sit between stages, allowing decision making and text refinement before processing continues. The CLI or Streamlit UI acts as the interaction surface, while logging utilities write both raw state and final outputs to disk.

This architecture yields explainability, modular extensibility, and safety — each stage is observable, interruptible, editable, and traceable, which aligns with professional expectations for AI systems deployed in real-world settings.
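The sequential handoff through a shared state container can be sketched as below. This is an assumption-laden illustration rather than the project's code: `ReviewState`, the agent function names, and their heuristics are invented for the example, and the tag step uses a simple word-length filter as a stand-in for YAKE.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ReviewState:
    """Shared state passed from agent to agent (hypothetical field names)."""
    readme: str
    analysis: Dict[str, bool] = field(default_factory=dict)
    tags: List[str] = field(default_factory=list)
    title: str = ""
    report: str = ""


def repo_analyzer(state: ReviewState) -> ReviewState:
    # Record which expected section names appear anywhere in the README text.
    lowered = state.readme.lower()
    state.analysis = {s: s in lowered for s in ("installation", "usage", "license")}
    return state


def tag_recommender(state: ReviewState) -> ReviewState:
    # Stand-in for keyword extraction: keep up to five long words, alphabetically.
    words = [w.strip("#.,:").lower() for w in state.readme.split()]
    state.tags = sorted({w for w in words if len(w) > 6})[:5]
    return state


def content_improver(state: ReviewState) -> ReviewState:
    # Derive a candidate title from the first line of the README.
    stripped = state.readme.strip()
    first_line = stripped.splitlines()[0] if stripped else ""
    state.title = first_line.lstrip("# ").strip().title()
    return state


def reviewer(state: ReviewState) -> ReviewState:
    # Fuse everything accumulated so far into one text report.
    missing = [name for name, present in state.analysis.items() if not present]
    state.report = (
        f"Title: {state.title}\n"
        f"Tags: {', '.join(state.tags)}\n"
        f"Missing sections: {', '.join(missing) or 'none'}"
    )
    return state


PIPELINE = [repo_analyzer, tag_recommender, content_improver, reviewer]


def run_pipeline(readme: str) -> ReviewState:
    state = ReviewState(readme=readme)
    for agent in PIPELINE:  # fixed order gives deterministic transitions
        state = agent(state)
    return state
```

Because every agent reads from and writes to the same `ReviewState`, a checkpoint between stages only needs to modify that object for the change to reach later agents.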

🧑‍⚖️ HITL — Human-In-The-Loop Supervision

Human oversight is embedded at every major decision point. Users can approve, reject, or edit intermediate agent outcomes before execution proceeds. This mechanism prevents blind automation, improves quality through expert correction, and establishes accountability — achieving a balance where machine efficiency is paired with human judgment to produce more trustworthy outcomes.
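A checkpoint of this kind can be sketched in a few lines. The function name `hitl_checkpoint`, the prompt wording, and the single-letter choices are assumptions for illustration; the project's actual prompts may differ.

```python
from typing import Callable, Optional


def hitl_checkpoint(
    stage: str,
    proposed: str,
    ask: Callable[[str], str] = input,
) -> Optional[str]:
    """Return the text to pass downstream, or None if the user rejects it."""
    prompt = f"[{stage}] Proposed: {proposed!r}\n(a)pprove / (e)dit / (r)eject: "
    answer = ask(prompt).strip().lower()
    if answer.startswith("a"):
        return proposed
    if answer.startswith("e"):
        edited = ask("Enter replacement text: ").strip()
        return edited or proposed  # an empty edit keeps the agent's proposal
    # Anything else, including unrecognized input, is treated as a rejection.
    return None
```

Injecting `ask` instead of calling `input` directly lets the same checkpoint be driven by a CLI prompt, a UI callback, or scripted answers in a test.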

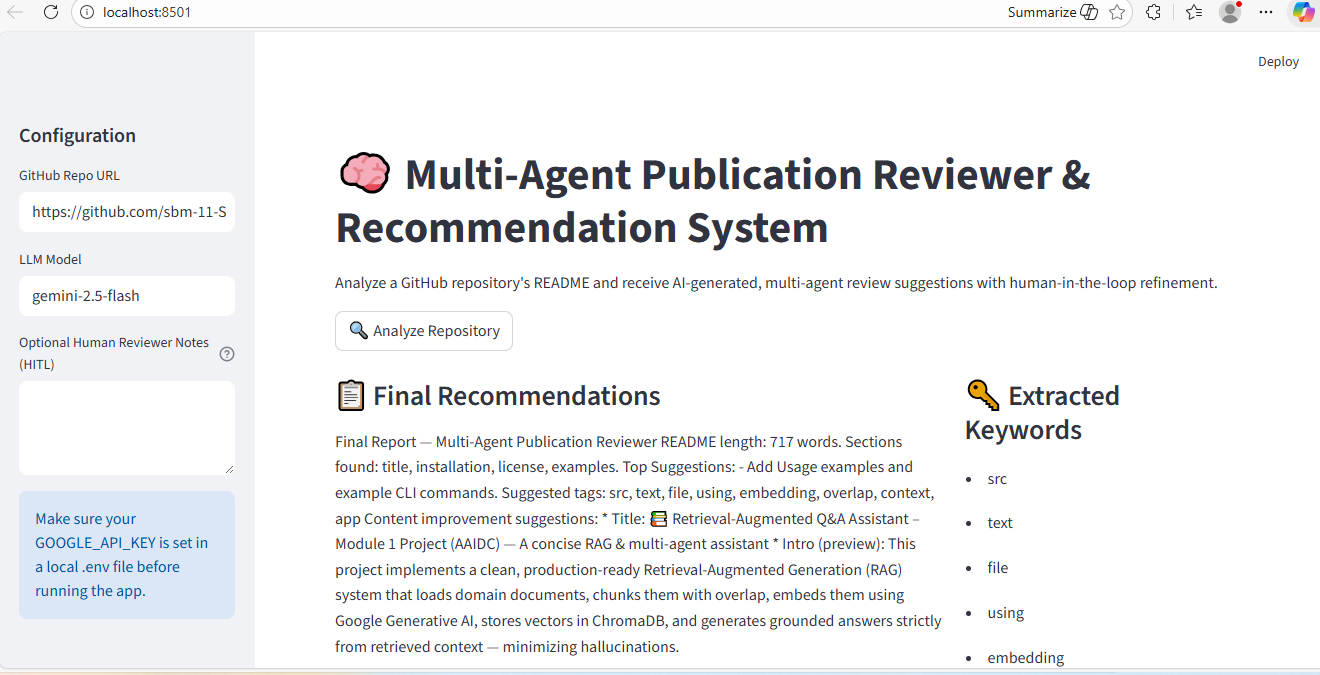

🖥️ User Interface (UI Enhancements)

A lightweight Streamlit-based interface abstracts system complexity and exposes agent actions through a guided workflow. Users input a repository URL, optionally provide review notes, and observe outputs in structured panels. The UI supports validation feedback, error notifications, and readable final reports — making the system accessible beyond command-line environments.
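Validation feedback on the repository URL presupposes some check before any fetch is attempted. One possible check, using only the standard library, is sketched below; `parse_github_url` and its acceptance rules are hypothetical and not taken from the project.

```python
from typing import Optional, Tuple
from urllib.parse import urlparse


def parse_github_url(url: str) -> Optional[Tuple[str, str]]:
    """Return (owner, repo) for a GitHub repository URL, or None if invalid."""
    parsed = urlparse(url.strip())
    if parsed.scheme not in ("http", "https"):
        return None
    if parsed.netloc.lower() not in ("github.com", "www.github.com"):
        return None
    # Path must contain at least /owner/repo; extra segments are ignored.
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        return None
    owner, repo = parts[0], parts[1]
    if repo.endswith(".git"):
        repo = repo[:-4]
    return (owner, repo) if repo else None
```

A UI layer could show an error notification whenever this function returns `None`, so malformed input never reaches the fetcher.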

🔧 System Enhancements for Reliability

Resilience improvements include backoff-based retry logic for failed GitHub fetches, structured error propagation, early exit detection where content is missing, sanitization of review text, and deterministic state management. These upgrades ensure that the system reacts predictably to noise, malformed inputs, and network inconsistencies rather than failing silently or producing unusable results.
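Two of the safeguards listed above, sanitization of review text and early-exit detection, might look roughly like this. The names, the 500-character limit, and the messages are assumptions made for the sketch.

```python
import re
from typing import Optional

MAX_NOTE_LENGTH = 500  # hypothetical limit, not taken from the project

# Control characters other than tab, newline, and carriage return.
_CONTROL_CHARS = re.compile(r"[\x00-\x08\x0b\x0c\x0e-\x1f\x7f]")


def sanitize_review_text(text: str, max_length: int = MAX_NOTE_LENGTH) -> str:
    """Drop control characters, collapse whitespace, and cap the length."""
    cleaned = _CONTROL_CHARS.sub("", text)
    cleaned = re.sub(r"\s+", " ", cleaned).strip()
    return cleaned[:max_length]


def early_exit_reason(readme: Optional[str]) -> Optional[str]:
    """Return a user-facing message if the pipeline should stop, else None."""
    if readme is None:
        return "README could not be retrieved."
    if not readme.strip():
        return "README is empty; nothing to review."
    return None
```

Returning a reason string rather than raising keeps the exit path explicit: the caller can log the message, show it to the user, and skip the remaining agents.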

🧪 Testing & Validation

A dedicated automated test suite validates tools, pipeline logic, keyword extraction, and end-to-end execution. Mocked GitHub calls ensure functionality without external dependency. Tests also verify that human edits flow through the state and influence final outputs, guaranteeing correctness and enabling maintainability for iterative development.
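Tests of this style can be sketched with `unittest.mock.Mock` from the standard library, written as plain functions that pytest would collect. The function under test, `review_readme`, is a deliberately tiny hypothetical stand-in for the real pipeline entry point, and the URL is a placeholder.

```python
from typing import Callable, Dict, Optional
from unittest.mock import Mock


def review_readme(
    repo_url: str,
    fetch: Callable[[str], str],
    title_override: Optional[str] = None,
) -> Dict[str, object]:
    """Toy pipeline: fetch the README and pick a title, honoring a human edit."""
    readme = fetch(repo_url)
    lines = readme.strip().splitlines()
    detected = lines[0].lstrip("# ").strip() if lines else ""
    return {"title": title_override or detected, "length": len(readme)}


def test_pipeline_runs_without_network():
    # The mock replaces the GitHub call, so no external dependency is needed.
    fake_fetch = Mock(return_value="# Sample Repo\n\nSome text.")
    result = review_readme("https://github.com/example/repo", fetch=fake_fetch)
    fake_fetch.assert_called_once_with("https://github.com/example/repo")
    assert result["title"] == "Sample Repo"


def test_human_edit_reaches_final_output():
    fake_fetch = Mock(return_value="# Sample Repo")
    result = review_readme(
        "https://github.com/example/repo",
        fetch=fake_fetch,
        title_override="Edited Title",
    )
    assert result["title"] == "Edited Title"
```

The second test mirrors the check described above: a human edit supplied upstream must be visible in the final output.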

🔁 Execution Flow

The system retrieves the README, analyzes its structure and completeness, extracts keywords and recommends tags, rewrites the title and introduction, and synthesizes a final narrative report. At optional checkpoints, user edits override agent decisions, and logging persists outputs to disk. Whether invoked via CLI or UI, the same orchestrated multi-agent pipeline executes deterministically.

🛠️ Technology Stack

| Layer | Tools |
|---|---|
| Language | Python 3.9+ |
| UI | Streamlit |
| Agents | Rule-based logic with state sharing |
| API Fetch | GitHub content endpoints |
| Logging | Python logging |
| Safety | Input validation, retry logic |
| Testing | pytest |
| Environment | dotenv |
| Output | Text reports |

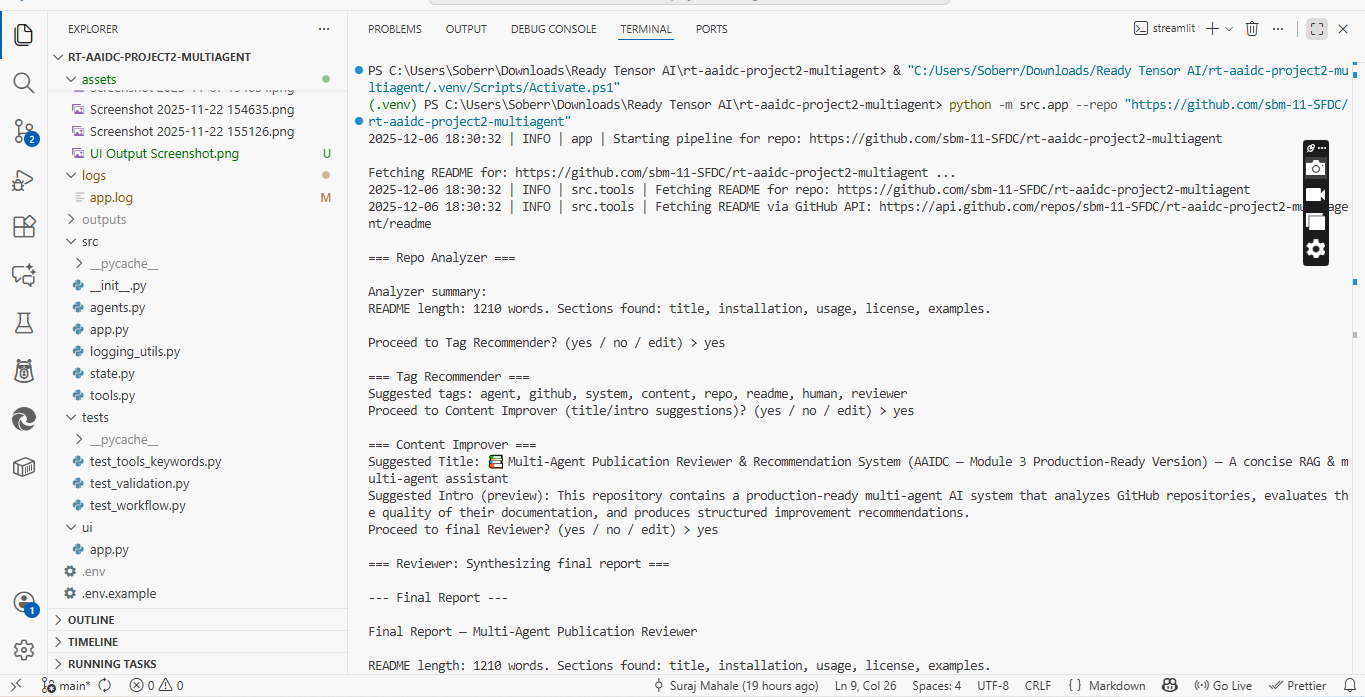

📊 Experiments

🔎 1. Retrieval Robustness

Multiple repositories were analyzed to observe stability in README extraction. Network faults and missing files triggered retries and fallback behaviors. These experiments validated safe failure and graceful degradation rather than abrupt termination.

🤝 2. Agent Coordination Efficiency

Repeated pipeline executions checked whether agents preserved context, consumed upstream edits, and produced coherent downstream reasoning. The runs confirmed reliable multi-agent handoff and state continuity.

🧍‍♂️ 3. Human-in-the-Loop Interaction

Experiments simulated user approvals, denials, and edits. The system consistently re-injected human-edited content into later agents and final output, demonstrating controllability and explainability.

🛡️ 4. Error Recovery and Resilience

Malformed URLs, missing files, and API disruption were tested. The pipeline handled them through logging, retries, and explicit exit messaging — validating robustness and fault awareness.

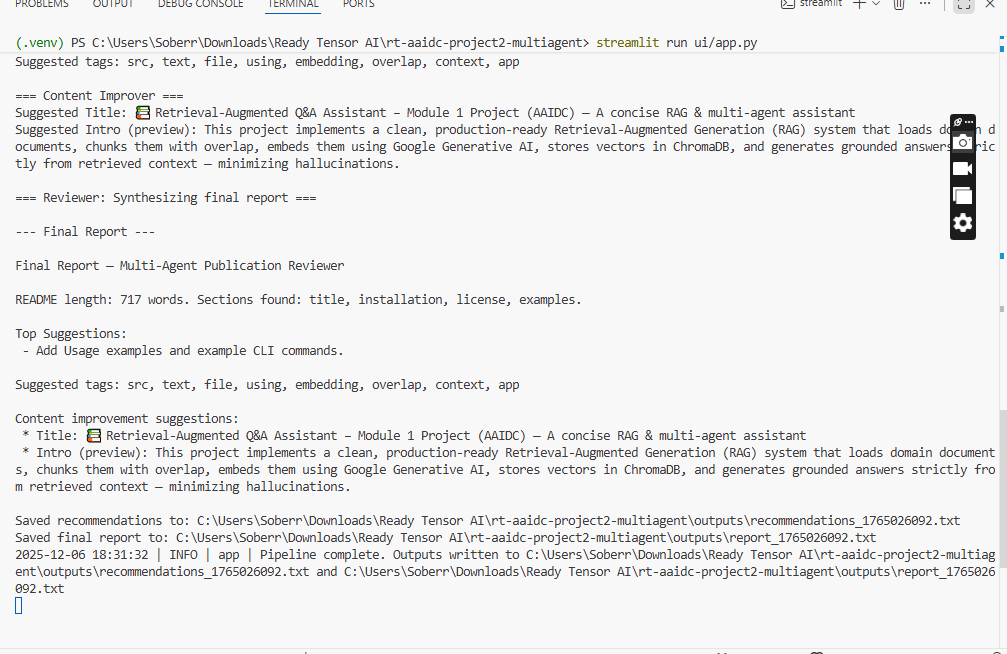

👩‍💻 5. System Usability Test

Non-technical users interacted with the Streamlit UI and observed automated output generation without using the CLI, confirming improved accessibility and operational experience.

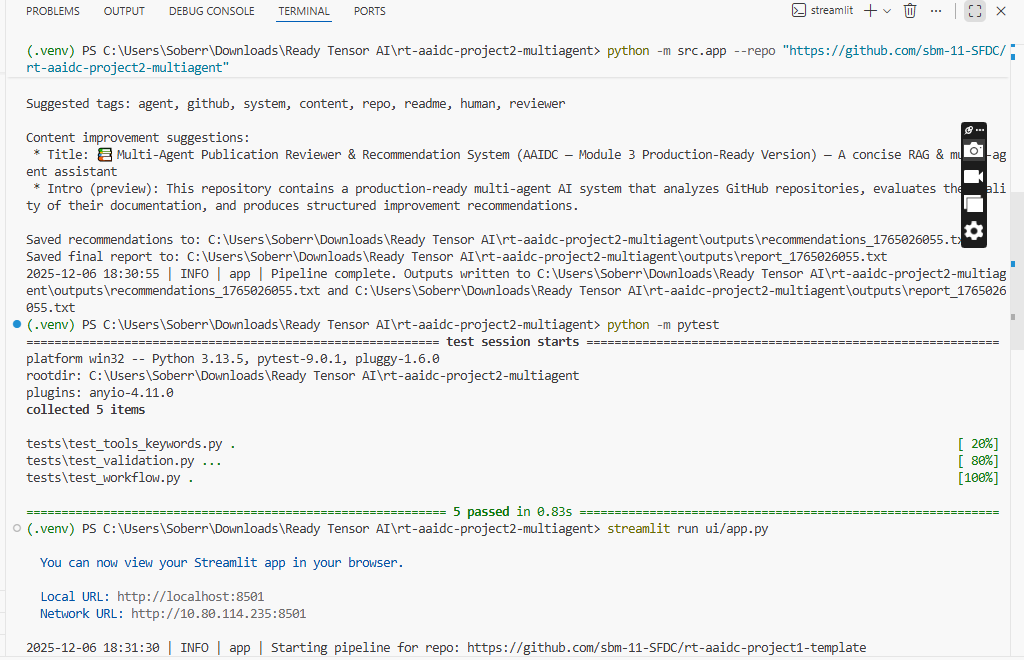

✔️ 6. Automated Validation

Pytest-driven validation confirmed correct tool execution, predictable orchestration behavior, keyword extraction accuracy, and HITL propagation across all control paths.

🌍 Significance / Contributions

This project demonstrates how prototype agentic reasoning can be operationalized into real-world software. Its core value lies in three contributions:

First, it shows a principled integration of Human-In-The-Loop supervision, enabling oversight and correction of autonomous reasoning — a critical requirement for trust in AI systems.

Second, it exhibits production engineering discipline: retry logic, validation checks, structured state management, and automated testing combine to create reliability and operational clarity that are often absent from academic agent demos.

Third, it lowers interaction barriers through a user interface, enabling non-technical users to explore multi-agent reasoning without understanding the underlying orchestration mechanics.

Collectively, these contributions illustrate how agentic AI can evolve from an experimental pipeline into usable, reviewable, and deployable software — aligning directly with industry expectations for safe, auditable, and human-aligned AI.

📸 Screenshots (Execution Proof)

▶️ Practical Demonstration Link:

🚀 Future Work

While this system establishes a reliable agentic workflow, several extensions would elevate its capability and real-world applicability.

A natural evolution is plug-in agent architecture, allowing domain-specific reviewer agents (security, code quality, documentation structure, compliance) to be added dynamically. The UI could be expanded to support document uploads, version comparison, historical diffing, and workflow automation, enabling iterative improvement loops over repository lifecycles. A cloud-deployed backend with authenticated user accounts would enable persistence, dashboards, and shared collaboration. Finally, LLM guardrails and feedback learning loops would enable the system to internalize human edits over time, increasingly personalizing output to preferred writing styles and review priorities. These directions align with how agentic tooling evolves toward enterprise-scale adoption.

🏆 Results

The enhanced system performed consistently, recovered gracefully from failure cases, preserved human edits as they propagated through the workflow, and provided usable recommendations through both CLI and browser interfaces. All automated tests passed, which supports the system's functional completeness and operational readiness. Feedback loops improved the clarity of generated reports, and usability experiments showed successful adoption by individuals unfamiliar with agentic architectures.

🏁 Conclusion

This capstone transformed a conceptual multi-agent reasoning pipeline into a production-grade system embodying safety, reliability, explainability, and accessibility. The contribution lies not in creating more agents, but in engineering the existing architecture to behave like software — testable, observable, human-steerable, recoverable, and usable. Through HITL design, orchestrated flow, UI enablement, and resilience strategies, the system now reflects real-world expectations placed on agentic AI products, demonstrating both capability and maturity for professional deployment.