Abstract

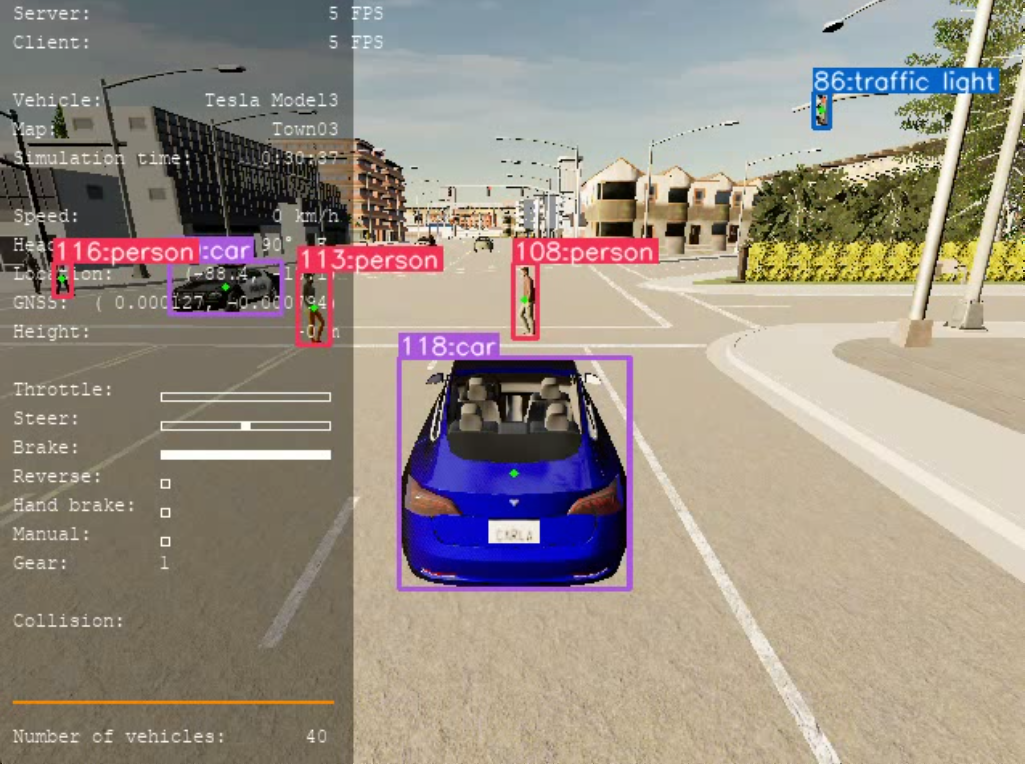

Autonomous driving research demands robust solutions for object detection and tracking in dynamic environments. This project integrates YOLOv9, a state-of-the-art object detection algorithm, with DeepSORT for real-time multi-object tracking in the CARLA Simulator. By combining these technologies, the system can accurately identify and follow objects across frames, supporting applications like trajectory planning and decision-making. The results demonstrate the efficiency of this pipeline, achieving high detection accuracy and reliable tracking under various simulated conditions. This publication outlines the methodology, experimental setup, and results, providing a foundation for further research in autonomous systems.

Introduction

Object tracking is a fundamental task in computer vision, with applications ranging from surveillance and autonomous driving to sports analytics and robotics. It involves detecting and continuously monitoring objects across frames in a video stream, ensuring that each detected entity maintains a unique identity over time. While object detection alone provides valuable insights, tracking adds another layer of intelligence by enabling real-time motion analysis, behavioral understanding, and predictive modeling.

In recent years, the YOLO (You Only Look Once) series of deep learning models has revolutionized object detection, offering state-of-the-art speed and accuracy. A recent iteration, YOLOv9, brings enhanced precision and efficiency, making it a strong candidate for real-time applications. However, YOLOv9 alone does not perform tracking: it only detects objects in individual frames without maintaining their identities. This is where DeepSORT (Simple Online and Realtime Tracking with a Deep Association Metric) comes into play.

DeepSORT is a widely used tracking algorithm that extends the original SORT (Simple Online and Realtime Tracking) framework by incorporating deep learning-based appearance embeddings for robust tracking. By pairing YOLOv9 for detection with DeepSORT for tracking, we can build a system capable of identifying and following objects across frames with high accuracy.

This article explores the integration of YOLOv9 and DeepSORT, detailing their individual functionalities, the process of combining them, and practical applications in real-world scenarios. By the end of this guide, you’ll understand how to implement a real-time object tracking system, optimize its performance, and apply it to various industries.

Let’s dive in!

Understanding YOLOv9

YOLOv9 represents a significant advancement in real-time object detection, building on the strengths of its predecessors in the YOLO (You Only Look Once) series. Introduced in February 2024 by Chien-Yao Wang, I-Hau Yeh, and Hong-Yuan Mark Liao, YOLOv9 addresses critical challenges in deep neural networks, such as the loss of information as data passes through many layers and the unreliable gradients that result, through innovative architectural enhancements.

Evolution of YOLO

The YOLO series has consistently pushed the boundaries of object detection since its inception in 2015. Each iteration has introduced improvements in speed, accuracy, and efficiency:

- YOLOv1: Framed object detection as a single regression problem, enabling real-time processing.

- YOLOv2: Introduced batch normalization and anchor boxes; its YOLO9000 variant, trained jointly on detection and classification data, expanded the set of detectable categories to more than 9,000.

- YOLOv3: Enhanced feature extraction with a deeper Darknet-53 backbone and predictions at three scales.

- YOLOv4: Optimized speed and accuracy with innovations like CSPDarknet53 and PANet.

- YOLOv5: Streamlined deployment with a focus on ease of use and integration.

- YOLOv7: Further refined real-time detection with architectural changes such as the Extended Efficient Layer Aggregation Network (E-ELAN) and a trainable bag-of-freebies.

YOLOv9 builds upon this rich legacy, introducing novel concepts to tackle persistent challenges in deep learning.

Core Innovations in YOLOv9

YOLOv9's architecture incorporates several groundbreaking features designed to enhance performance and efficiency:

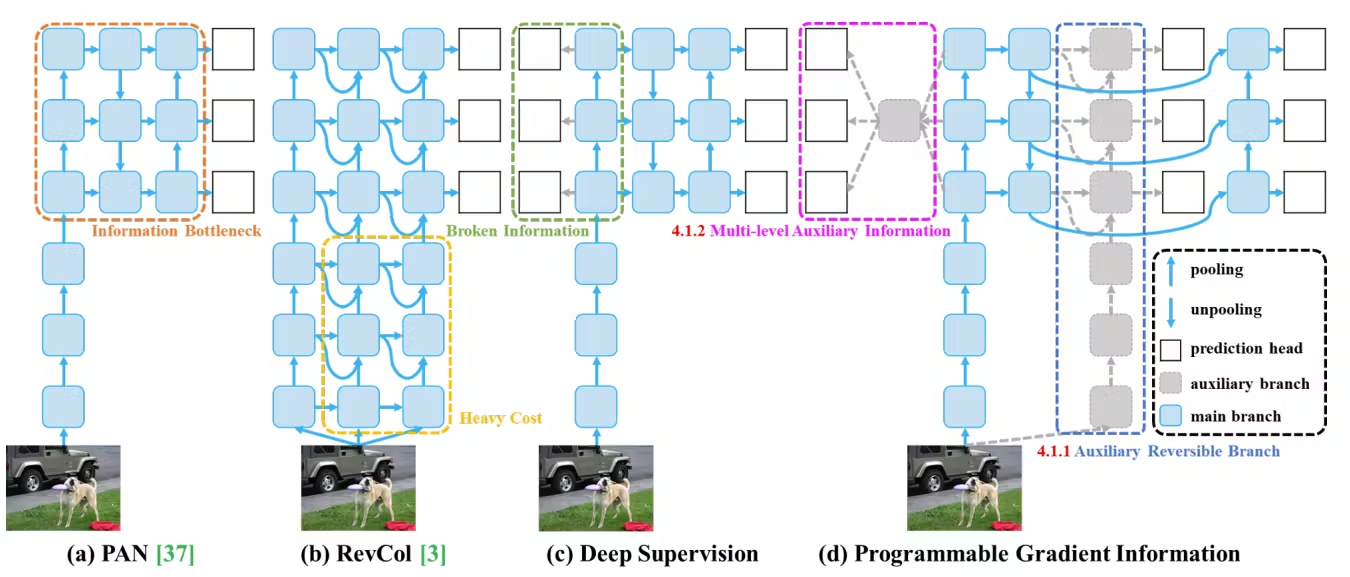

Programmable Gradient Information (PGI)

PGI addresses the issue of information loss as data propagates through deep network layers. By preserving essential data across the network, PGI ensures the generation of reliable gradients, facilitating accurate model updates and improved convergence. This mechanism is particularly beneficial for training from scratch, enabling models to achieve superior results without reliance on pre-trained weights.

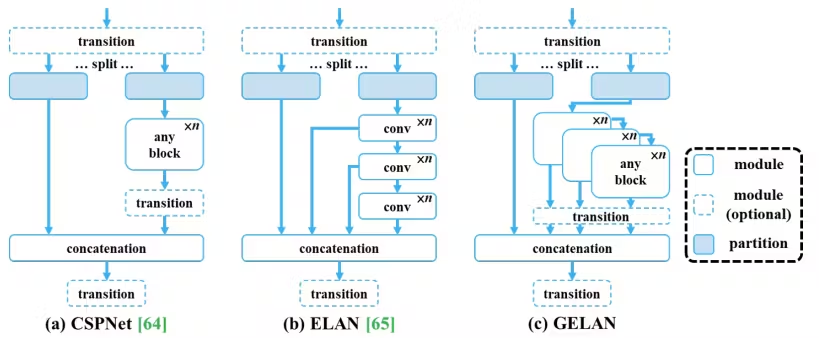

Generalized Efficient Layer Aggregation Network (GELAN)

GELAN is a network architecture that combines ideas from CSPNet and ELAN, with a focus on efficient gradient path planning. It improves parameter utilization and computational efficiency, so even lightweight YOLOv9 models retain competitive accuracy. GELAN's design allows different computational blocks to be plugged in, balancing model complexity against performance.

Reversible Functions

To combat the degradation of information in deeper layers, YOLOv9 draws on reversible functions. A function r is reversible if an inverse v exists such that X = v(r(X)), so no information about the input is lost when it is transformed. Rather than making the whole detector reversible, YOLOv9 applies this idea in PGI's auxiliary reversible branch, which supplies reliable gradients during training and can be removed at inference, so it adds no inference cost. This keeps critical information available where it matters for learning, contributing to more accurate and reliable object detection.
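The reversible-function property can be checked numerically. The sketch below is not YOLOv9 code: it uses a RevNet-style additive coupling, a different and widely used way of building reversible layers, chosen here purely as an illustration. The input is split into two halves. The inner functions `f` and `g` (hypothetical random layers) are not invertible on their own, yet the coupling as a whole can be inverted exactly, up to floating-point rounding.

```python
import numpy as np

# Illustrative RevNet-style additive coupling; NOT taken from YOLOv9.
rng = np.random.default_rng(0)
W_f = rng.standard_normal((4, 4))
W_g = rng.standard_normal((4, 4))


def f(x: np.ndarray) -> np.ndarray:
    # Arbitrary non-invertible sub-network: linear map followed by ReLU.
    return np.maximum(x @ W_f, 0.0)


def g(x: np.ndarray) -> np.ndarray:
    # Another arbitrary sub-network: linear map followed by tanh.
    return np.tanh(x @ W_g)


def coupling_forward(x1: np.ndarray, x2: np.ndarray):
    # y1 = x1 + f(x2); y2 = x2 + g(y1)
    y1 = x1 + f(x2)
    y2 = x2 + g(y1)
    return y1, y2


def coupling_inverse(y1: np.ndarray, y2: np.ndarray):
    # Undo the two additions in reverse order; f and g are only evaluated, never inverted.
    x2 = y2 - g(y1)
    x1 = y1 - f(x2)
    return x1, x2


x1 = rng.standard_normal((2, 4))
x2 = rng.standard_normal((2, 4))
y1, y2 = coupling_forward(x1, x2)
r1, r2 = coupling_inverse(y1, y2)
print(np.allclose(x1, r1) and np.allclose(x2, r2))  # True
```

Invertibility here comes from the coupling structure rather than from `f` or `g`, which is why reversible designs can use arbitrary sub-networks inside them.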

Performance Benchmarks

YOLOv9 has demonstrated strong performance on benchmark datasets such as MS COCO. It offers multiple model variants (YOLOv9-S, YOLOv9-M, YOLOv9-C, and YOLOv9-E) catering to different application requirements. Notably, YOLOv9 achieves higher mean Average Precision (mAP) than its predecessors while also reducing computational complexity. For instance, the YOLOv9-C model attains 53.0% AP on the COCO validation set with 25.3 million parameters and 102.1 GFLOPs, a favorable balance between accuracy and efficiency.

In summary, YOLOv9 stands as a significant leap forward in real-time object detection, offering enhanced accuracy, efficiency, and adaptability. Its innovative features address longstanding challenges in deep learning, paving the way for more robust and scalable object detection solutions across various applications.

DeepSORT: An Overview

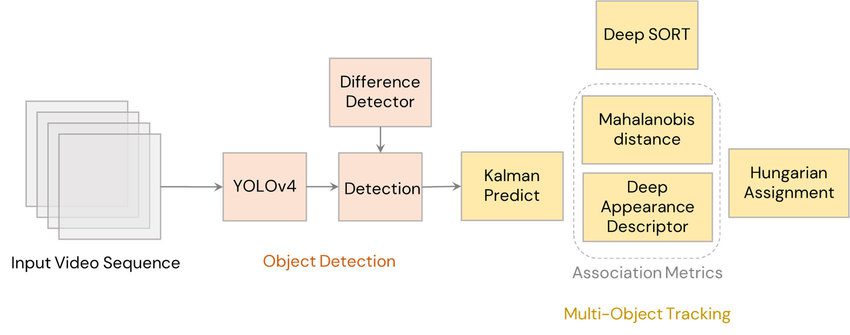

DeepSORT (Simple Online and Realtime Tracking with a Deep Association Metric) is an advanced multi-object tracking algorithm that extends its predecessor, SORT, with deep learning-based appearance descriptors. This integration significantly improves the tracker's performance, especially in scenarios involving occlusions and object re-identification.

Evolution from SORT to DeepSORT

The original SORT (Simple Online and Realtime Tracking) algorithm was designed for high-speed tracking and relies solely on motion information: a Kalman filter predicts each track's bounding box, and the Hungarian algorithm matches these predictions to new detections using intersection-over-union (IoU) as the association score. While efficient, SORT suffers from identity switches, particularly during occlusions or when objects have similar motion patterns.
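SORT's association step can be sketched in a few lines. This is a minimal illustration, not the SORT reference code: it assumes boxes in `[x1, y1, x2, y2]` pixel format, uses SciPy's `linear_sum_assignment` (which solves the same optimal assignment problem as the Hungarian algorithm) on a cost of 1 - IoU, and rejects matches below an assumed IoU threshold of 0.3. The function names `iou_matrix` and `associate` are hypothetical.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def iou_matrix(tracks: np.ndarray, dets: np.ndarray) -> np.ndarray:
    """Pairwise IoU between rows of [x1, y1, x2, y2] boxes (tracks x detections)."""
    tl = np.maximum(tracks[:, None, :2], dets[None, :, :2])  # intersection top-left
    br = np.minimum(tracks[:, None, 2:], dets[None, :, 2:])  # intersection bottom-right
    inter = np.clip(br - tl, 0.0, None).prod(axis=2)
    area_t = (tracks[:, 2] - tracks[:, 0]) * (tracks[:, 3] - tracks[:, 1])
    area_d = (dets[:, 2] - dets[:, 0]) * (dets[:, 3] - dets[:, 1])
    return inter / (area_t[:, None] + area_d[None, :] - inter)


def associate(tracks: np.ndarray, dets: np.ndarray, iou_threshold: float = 0.3):
    """Match predicted track boxes to detections.

    Returns (matches, unmatched_track_indices, unmatched_detection_indices).
    """
    if len(tracks) == 0 or len(dets) == 0:
        return [], list(range(len(tracks))), list(range(len(dets)))
    iou = iou_matrix(tracks, dets)
    rows, cols = linear_sum_assignment(1.0 - iou)  # minimise total (1 - IoU)
    matches = [(int(r), int(c)) for r, c in zip(rows, cols) if iou[r, c] >= iou_threshold]
    matched_t = {r for r, _ in matches}
    matched_d = {c for _, c in matches}
    unmatched_t = [t for t in range(len(tracks)) if t not in matched_t]
    unmatched_d = [d for d in range(len(dets)) if d not in matched_d]
    return matches, unmatched_t, unmatched_d


# Two Kalman-predicted track boxes and three new detections.
tracks = np.array([[0, 0, 10, 10], [20, 20, 30, 30]], dtype=float)
dets = np.array([[21, 21, 31, 31], [1, 1, 11, 11], [100, 100, 110, 110]], dtype=float)
print(associate(tracks, dets))  # ([(0, 1), (1, 0)], [], [2])
```

In a full tracker, unmatched detections would seed new tracks and unmatched tracks would age out. Because the cost uses only box overlap, two similar objects that cross paths can swap identities, which is the weakness DeepSORT targets.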

To address these limitations, DeepSORT was developed by integrating appearance information into the tracking process. By employing a deep convolutional neural network (CNN) to extract feature embeddings for each detected object, DeepSORT creates a more robust association metric that combines both motion and appearance data. This enhancement reduces identity switches and improves the tracker's ability to maintain consistent object identities over time.

Key Components of DeepSORT

- Detection: Utilizes an object detector (e.g., YOLO) to identify and locate objects within each frame, providing bounding boxes and confidence scores.

- Feature Extraction: For each detected object, a pre-trained CNN extracts a feature vector (embedding) that captures the object's appearance characteristics. This step enables the tracker to distinguish between objects with similar motion patterns based on visual features.

- State Estimation: Employs a Kalman filter with a constant-velocity motion model, whose state holds the bounding-box center, aspect ratio, height, and their velocities, to predict where each tracked object will be in the next frame. This prediction aids in associating detections with existing tracks, even when detections are momentarily missing.

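- Data Association: Combines motion-based metrics (e.g., the Mahalanobis distance between a detection and a track's predicted state) and appearance-based metrics (e.g., the cosine distance between feature vectors) to match detections to existing tracks. The Hungarian algorithm solves the resulting assignment problem optimally. In the original DeepSORT, the Mahalanobis distance also acts as a gate that rules out implausible matches, matching runs as a cascade that gives priority to recently seen tracks, and remaining candidates are then matched by IoU, as in SORT.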

- Track Management: Handles the initiation, continuation, and termination of tracks. Unmatched detections start new tracks, which stay tentative until they have been matched in several consecutive frames; existing tracks are updated with matched detections; and tracks are deleted if they remain unmatched for a predefined number of frames (a tentative track is deleted as soon as it misses a match).
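The State Estimation and Data Association components above can be illustrated with a simplified sketch of one DeepSORT-style association pass. It is an illustration under stated assumptions, not the reference implementation. Tracks are hypothetical dicts holding a Kalman state `x`, a covariance `P`, and a gallery of unit-length embeddings. The noise levels in `make_cv_model` and the `max_cos=0.2` threshold are assumed values. The cost is the appearance (cosine) distance alone, while the squared Mahalanobis distance serves only as a gate at the 95% chi-square quantile for four degrees of freedom (9.4877). The matching cascade and the IoU fallback are omitted.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment

CHI2_95_4DOF = 9.4877  # 95% chi-square quantile, 4 degrees of freedom
INFEASIBLE = 1e5       # large finite cost marking forbidden pairs


def make_cv_model(dt=1.0, q=1e-2, r=1e-1):
    """Constant-velocity model; state = [cx, cy, aspect, h] plus their velocities.
    The noise levels q and r are assumptions, not DeepSORT's tuned values."""
    F = np.eye(8)
    F[:4, 4:] = dt * np.eye(4)                    # position += velocity * dt
    H = np.hstack([np.eye(4), np.zeros((4, 4))])  # only positions are measured
    return F, H, q * np.eye(8), r * np.eye(4)


def kf_predict(x, P, F, Q):
    """Kalman prediction step: propagate mean and covariance one frame ahead."""
    return F @ x, F @ P @ F.T + Q


def mahalanobis_sq(x, P, H, R, z):
    """Squared Mahalanobis distance of measurement z from the projected state."""
    S = H @ P @ H.T + R
    d = z - H @ x
    return float(d @ np.linalg.solve(S, d))


def cosine_distance(gallery, feat):
    """Smallest cosine distance between a unit embedding and a track's stored unit embeddings."""
    return float(np.min(1.0 - gallery @ feat))


def gated_match(tracks, det_boxes, det_feats, model, max_cos=0.2):
    """Return (track_idx, det_idx) pairs that pass the motion gate and appearance threshold."""
    F, H, Q, R = model
    cost = np.full((len(tracks), len(det_boxes)), INFEASIBLE)
    for i, trk in enumerate(tracks):
        x, P = kf_predict(trk["x"], trk["P"], F, Q)  # where should this track be now?
        for j, (z, feat) in enumerate(zip(det_boxes, det_feats)):
            if mahalanobis_sq(x, P, H, R, z) > CHI2_95_4DOF:
                continue  # motion rules this pairing out
            dist = cosine_distance(trk["gallery"], feat)
            if dist <= max_cos:
                cost[i, j] = dist
    rows, cols = linear_sum_assignment(cost)
    return [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] < INFEASIBLE]


def make_track(cx, cy, feat):
    """Hypothetical track record: stationary box at (cx, cy) with one stored embedding."""
    return {"x": np.array([cx, cy, 0.5, 40.0, 0, 0, 0, 0], dtype=float),
            "P": np.eye(8), "gallery": np.array([feat], dtype=float)}


tracks = [make_track(10, 10, [1, 0, 0]), make_track(50, 50, [0, 1, 0])]
boxes = [np.array([51, 50, 0.5, 40.0]), np.array([11, 10, 0.5, 40.0])]
feats = [np.array([0, 1, 0.0]), np.array([1, 0, 0.0])]
print(gated_match(tracks, boxes, feats, make_cv_model()))  # [(0, 1), (1, 0)]
```

Gating first and scoring by appearance second means a look-alike object far from a track's predicted position cannot take over its identity, while a detection that reappears near the prediction after an occlusion can still be re-associated through its embedding.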
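The track lifecycle described under Track Management can be captured by a small state machine. The class below is a hypothetical sketch: `n_init` (hits needed for confirmation) and `max_age` (missed frames tolerated) are assumed parameter names and values, and the Kalman update that would accompany each hit is left out.

```python
from dataclasses import dataclass

TENTATIVE, CONFIRMED, DELETED = "tentative", "confirmed", "deleted"


@dataclass
class TrackLifecycle:
    """Hypothetical sketch of DeepSORT-style track states (no Kalman update shown)."""
    track_id: int
    n_init: int = 3    # assumed: hits required before a track is confirmed
    max_age: int = 30  # assumed: consecutive misses tolerated before deletion
    hits: int = 1      # the detection that created the track counts as the first hit
    misses: int = 0
    state: str = TENTATIVE

    def mark_hit(self) -> None:
        """Called when the track is matched to a detection in this frame."""
        self.hits += 1
        self.misses = 0
        if self.state == TENTATIVE and self.hits >= self.n_init:
            self.state = CONFIRMED

    def mark_missed(self) -> None:
        """Called when the track finds no detection in this frame."""
        self.misses += 1
        if self.state == TENTATIVE or self.misses > self.max_age:
            self.state = DELETED


t = TrackLifecycle(track_id=1)
t.mark_hit()
t.mark_hit()
print(t.state)  # confirmed
for _ in range(31):
    t.mark_missed()
print(t.state)  # deleted
```

Deleting tentative tracks on their first miss keeps one-frame false positives from the detector from ever receiving a stable ID.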

Advantages of DeepSORT

- Reduced Identity Switches: By incorporating appearance information, DeepSORT significantly lowers the occurrence of identity switches, maintaining consistent tracking even during occlusions or interactions between objects.

- Robustness to Occlusion: The appearance-based association allows the tracker to re-identify objects that were temporarily occluded, enhancing tracking continuity.

- Scalability: DeepSORT maintains real-time performance, making it suitable for applications requiring the tracking of multiple objects simultaneously without substantial computational overhead.

In summary, DeepSORT enhances traditional tracking methods by integrating deep learning-based appearance descriptors, resulting in more reliable and accurate multi-object tracking in complex environments.

Integrating YOLOv9 and DeepSORT within the CARLA Simulator enables the development of advanced real-time object detection and tracking systems, essential for autonomous vehicle research. This combination leverages YOLOv9's high-precision object detection and DeepSORT's robust multi-object tracking capabilities in CARLA's realistic simulation environment.

System Overview

The integration process involves several key components:

- CARLA Simulation Environment: Provides high-fidelity urban and rural settings for testing autonomous driving scenarios.

- YOLOv9 Object Detection: Identifies objects such as vehicles, pedestrians, and traffic signs within the simulation.

- DeepSORT Tracker: Maintains consistent identities of detected objects across video frames.

- Integration Framework: Utilizes Python and OpenCV to facilitate real-time data processing and visualization.

Implementation Steps

To achieve this integration, follow these steps:

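- Set Up the CARLA Simulator:

    - Download and install CARLA 0.9.11 from the official CARLA releases page.

    - Launch the simulator to create the testing environment (for example, run `./CarlaUE4.sh` from the extracted package on Linux, or `CarlaUE4.exe` on Windows).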

- Data Collection in CARLA:

    - Use CARLA's Python API to create scripts that simulate vehicle navigation and record sensor data, such as video footage from onboard cameras.

    - For instance, a `trajectory_planning.py` script can be employed to automate vehicle movements and capture the necessary data.

- Implement YOLOv9 for Object Detection:

    - Clone the YOLOv9 repository with `git clone https://github.com/WongKinYiu/yolov9.git`, then `cd yolov9`.

    - Install the required dependencies with `pip install -r requirements.txt`.

    - Use a script such as `detect_dual_tracking.py` to process the recorded video frames, performing object detection and preparing data for tracking.


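- Integrate DeepSORT for Object Tracking:

    - Incorporate the DeepSORT algorithm to associate detected objects across frames, maintaining their identities over time.

    - Ensure that the appearance model used by DeepSORT suits the objects YOLOv9 detects. The original DeepSORT feature extractor was trained on pedestrian re-identification data, so tracking vehicles in CARLA may call for a different or fine-tuned embedding network.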

- Run the Integrated System:

    - Execute the combined detection and tracking script to process the simulation data in real time.

    - Visualize the results, observing how objects are detected and tracked consistently within the CARLA environment.
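For the data-collection step, a minimal capture loop with CARLA's Python API might look like the sketch below. It assumes a CARLA server is already running on `localhost:2000`, that the installed `carla` client package matches the server version, and that the first vehicle blueprint and spawn point are acceptable; the helper `bgra_to_bgr` and the `main` parameters are hypothetical. CARLA's RGB camera delivers BGRA bytes, so the helper drops the alpha channel to produce the BGR arrays that OpenCV-based detectors expect.

```python
import queue

import numpy as np


def bgra_to_bgr(raw, height: int, width: int) -> np.ndarray:
    """CARLA RGB cameras emit BGRA bytes; drop alpha to get an OpenCV-style BGR array."""
    arr = np.frombuffer(raw, dtype=np.uint8).reshape((height, width, 4))
    return arr[:, :, :3].copy()  # copy: own the memory and make it contiguous


def main(host: str = "localhost", port: int = 2000, n_frames: int = 200) -> None:
    import carla  # imported here so the helper above works without a CARLA install

    client = carla.Client(host, port)
    client.set_timeout(10.0)
    world = client.get_world()
    bp_lib = world.get_blueprint_library()

    # Assumption: the first vehicle blueprint and spawn point are acceptable.
    vehicle = world.spawn_actor(bp_lib.filter("vehicle.*")[0],
                                world.get_map().get_spawn_points()[0])
    vehicle.set_autopilot(True)  # hand driving over to CARLA's Traffic Manager

    cam_bp = bp_lib.find("sensor.camera.rgb")
    cam_bp.set_attribute("image_size_x", "1280")
    cam_bp.set_attribute("image_size_y", "720")
    camera = world.spawn_actor(cam_bp,
                               carla.Transform(carla.Location(x=1.5, z=2.4)),
                               attach_to=vehicle)  # roughly a hood/roof mount

    frames = queue.Queue()
    camera.listen(frames.put)  # the callback runs on a CARLA client thread
    try:
        for _ in range(n_frames):
            image = frames.get(timeout=5.0)
            bgr = bgra_to_bgr(image.raw_data, image.height, image.width)
            print(image.frame, bgr.shape)  # hand `bgr` to the detector/tracker here
    finally:
        camera.stop()
        camera.destroy()
        vehicle.destroy()
```

Call `main()` once the simulator is running. The queue decouples CARLA's sensor callback thread from the processing loop; in synchronous mode you would instead advance the simulation with `world.tick()` once per processed frame.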

For a practical implementation and access to the necessary scripts, refer to the YOLOv9-DeepSORT real-time object tracking in CARLA project on GitHub (Addo-Asante Darko, n.d.).
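Between detection and tracking, the detector's output usually has to be filtered and converted. The sketch below assumes YOLOv9 detections arrive as rows `[x1, y1, x2, y2, conf, cls]` with COCO class ids (the per-image layout produced after non-maximum suppression in YOLOv5-style code) and converts them to `([left, top, width, height], confidence, class)` tuples, the raw-detection format documented for the `deep_sort_realtime` package's `update_tracks`. Other DeepSORT implementations, including whichever one the referenced project uses, may expect a different format; `to_tracker_inputs`, the 0.4 confidence threshold, and the class subset are illustrative assumptions.

```python
from typing import AbstractSet, List, Sequence, Tuple

# COCO class ids for road users: person, bicycle, car, motorcycle, bus, truck.
ROAD_USER_CLASSES = frozenset({0, 1, 2, 3, 5, 7})

Detection = Tuple[List[float], float, int]


def to_tracker_inputs(rows: Sequence[Sequence[float]],
                      conf_threshold: float = 0.4,
                      keep_classes: AbstractSet[int] = ROAD_USER_CLASSES) -> List[Detection]:
    """Convert [x1, y1, x2, y2, conf, cls] rows into ([left, top, w, h], conf, cls) tuples."""
    out: List[Detection] = []
    for x1, y1, x2, y2, conf, cls in rows:
        cls = int(cls)
        if conf < conf_threshold or cls not in keep_classes:
            continue  # drop weak or irrelevant detections before tracking
        out.append(([float(x1), float(y1), float(x2 - x1), float(y2 - y1)], float(conf), cls))
    return out


rows = [
    [100, 50, 180, 120, 0.91, 2],  # car, kept
    [300, 80, 320, 140, 0.35, 0],  # low-confidence person, dropped
    [10, 10, 40, 40, 0.88, 56],    # chair (COCO id 56), not a road user, dropped
]
print(to_tracker_inputs(rows))  # [([100.0, 50.0, 80.0, 70.0], 0.91, 2)]
```

Filtering before tracking keeps low-confidence boxes from spawning short-lived tracks, and restricting classes to road users avoids spending appearance-embedding compute on objects the driving stack does not need to follow.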

Applications

This integrated system has several applications:

- Autonomous Vehicle Development: Enhances perception systems by providing accurate detection and tracking of dynamic objects, crucial for navigation and decision-making.

- Traffic Monitoring: Assists in analyzing traffic patterns and detecting anomalies within simulated urban environments.

- Safety Testing: Evaluates how autonomous systems respond to various obstacles and moving entities, ensuring robust performance before real-world deployment.

By combining YOLOv9 and DeepSORT within the CARLA Simulator, researchers and developers can create sophisticated models that accurately perceive and interpret dynamic environments, advancing the field of autonomous driving.

# References

- Addo-Asante Darko, R. (n.d.). YOLOv9-DeepSORT real-time object tracking in CARLA [GitHub repository]. GitHub. Retrieved February 18, 2025, from https://github.com/ROBERT-ADDO-ASANTE-DARKO/YOLOv9-DeepSORT-realtime-object-tracking-CARLA

- CARLA Simulator. (n.d.). 3rd party integrations - CARLA simulator documentation. Retrieved February 18, 2025, from https://carla.readthedocs.io/en/latest/3rd_party_integrations

- Tran, D. A., & Pham, M. C. (2023). City-scale multi-camera vehicle tracking of vehicles based on YOLOv7. Semantic Scholar. Retrieved from https://www.semanticscholar.org/paper/City-Scale-Multi-Camera-Vehicle-Tracking-of-based-Tran-Pham/e2c4c70ec5fc4284eac25c70166867a1783f9806

- Zhang, H., Liu, S., & Wang, J. (2024). Traffic co-simulation framework empowered by infrastructure camera sensing and reinforcement learning. arXiv. Retrieved from https://arxiv.org/html/2412.03925v1