Revolutionizing Mobility: Brain-Controlled Wheelchairs for Enhanced Independence

Movement remains a significant challenge for many individuals, including the elderly, pregnant women, and those with severe motor disabilities or paralysis. Traditional solutions, such as relying on personal assistants or using standard electric wheelchairs controlled by joysticks, often fall short. Joysticks, while helpful, can be difficult for individuals with conditions like Parkinson’s disease or paralysis, leading to hand fatigue and reduced autonomy.

Objective: Empowering Independence Through Brain-Computer Interface

Our bachelor project addresses this pressing issue by developing a brain-controlled wheelchair. This innovative system utilizes Brain-Computer Interface (BCI) technology to enable users to control their wheelchair using brain signals rather than physical movement. The goal is to provide individuals with severe motor impairments the freedom to navigate their environments independently, without the need for constant external assistance.

Brain Computer Interface (BCI)

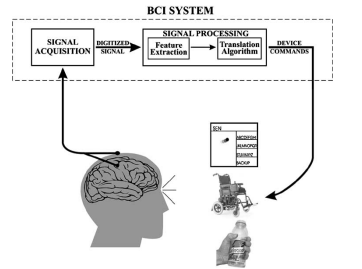

A brain-computer interface (BCI), also called a brain-machine interface, is a technology that enables the brain to send signals to, and receive signals from, an external device. BCIs commonly collect brain signals through EEG (electroencephalography) headsets. After analyzing these signals, the system translates them into commands that are sent to a connected machine, which then acts on them.

BCI technology provides a communication channel between the brain and external devices such as robotic arms, wheelchairs, spellers, cursors, and robots, and it can also be used in gaming. A BCI system can provide alternative and augmentative communication and control for people with severe neuromuscular disorders such as brainstem stroke, spinal cord injury, or Amyotrophic Lateral Sclerosis (ALS), allowing them to interact with their surroundings without using their peripheral nerves and muscles. There are three types of BCIs: active, reactive, and passive.

Steady-State Visually Evoked Potential (SSVEP)

A BCI system based on SSVEP can be used to move a cursor or a wheelchair in numerous directions or to communicate with the environment. Flickering objects of various shapes, colors, and frequencies can be used to build an SSVEP-based paradigm, with each object representing a possible user intention.

SSVEPs rely on the following brain phenomenon: when the user focuses attention on a flickering visual stimulus, the electroencephalogram (EEG) signals from the brain contain the fundamental component of the flashing frequency and its harmonics, which become visible after a fast Fourier transform (FFT) is performed on the signals. However, prolonged stimulation with flickering squares can cause discomfort.

An SSVEP-based paradigm can be constructed using a graphical user interface (GUI) containing several boxes that flicker at different frequencies, each box associated with a command.

To select a specific command, the user focuses only on the box with that command for a fixed period. The user's brain signals then contain the same frequency as the flickering stimulus being watched, so the command chosen by the user can be detected.
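As a rough illustration of this selection rule, the sketch below scores each candidate stimulus frequency by the FFT power at its fundamental and second harmonic and picks the strongest. It is a simple power-spectrum detector on a synthetic signal, not the classifier used in this project; the 250 Hz sampling rate, the noise level, and the helper name `detect_target` are assumptions for illustration.

```python
import numpy as np

def detect_target(signal, fs, stim_freqs, n_harmonics=2):
    """Return the index of the stimulus frequency with the most spectral power.

    Power is summed at the fundamental and its harmonics, using the FFT bin
    nearest to each frequency (hypothetical helper, simple power-spectrum rule)."""
    spectrum = np.abs(np.fft.rfft(signal)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    scores = []
    for f in stim_freqs:
        score = 0.0
        for h in range(1, n_harmonics + 1):
            bin_idx = np.argmin(np.abs(freqs - h * f))
            score += spectrum[bin_idx]
        scores.append(score)
    return int(np.argmax(scores))

# Synthetic 5 s "EEG": a 10 Hz SSVEP with a weaker 2nd harmonic, plus noise.
fs = 250
t = np.arange(5 * fs) / fs
rng = np.random.default_rng(0)
eeg = (np.sin(2 * np.pi * 10.0 * t)
       + 0.5 * np.sin(2 * np.pi * 20.0 * t)
       + rng.normal(scale=1.0, size=t.size))

stimuli = [12.00, 10.00, 8.57, 7.50, 6.66]
print(stimuli[detect_target(eeg, fs, stimuli)])
```

Summing harmonics makes the score more robust when a subject's response is stronger at 2f than at f.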

Electroencephalogram (EEG)

Electroencephalography (EEG) is a non-invasive method of recording the brain's electrical activity from electrodes placed on the surface of the scalp. EEG measures voltage fluctuations resulting from ionic current flows within the neurons of the brain.

Because it is affordable, simple, and safe, EEG is commonly employed in BCI systems.

Event-Related Potentials (ERPs), Slow Cortical Potentials (SCPs), P300 potentials, and Steady-State Visual Evoked Potentials (SSVEPs) are some of the types of brain activity that can be effectively recorded from the scalp using EEG.

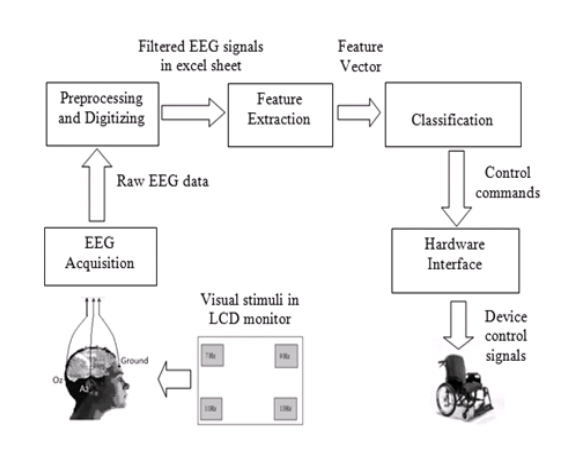

Implementation

The BCI system consists of five necessary stages: data acquisition, data pre-processing, feature extraction, classification, and device command. The pre-processing and training steps were performed twice: once on the MAMEM dataset and once on the real recorded data.

Data set

The MAMEM 3 dataset was examined. Its SSVEP-based experimental protocol was carried out on 11 subjects; each subject completed 5 sessions of 25 trials, and each session was divided into two sections. Each subject therefore has ten MATLAB files (5 sessions × 2 sections) holding the EEG brain signals as well as the event codes that mark when each trial began and ended.

The experiment's stimuli were five violet boxes flickering simultaneously at five distinct frequencies (6.66, 7.50, 8.57, 10.00, and 12.00 Hz). The boxes were displayed together for 5 seconds (the trial), followed by a 5-second period without visual stimuli before the flickering boxes reappeared. Before the stimulation phase, one of the boxes was marked with a yellow arrow indicating the box on which the subject was to concentrate. The arrow remained visible during the trial, making it easier for the subjects to stay focused for the whole period. The background was black, and the visual stimuli were displayed on an LCD screen with a refresh rate of 60 Hz.
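The five stimulus frequencies match the 60 Hz refresh rate divided by a whole number of frames per flicker cycle (5 to 9 frames). The dataset description does not say this is how they were chosen, so treat it as an observation; the sketch below, with the hypothetical helper `achievable_frequencies`, only checks the arithmetic, assuming a simple frame-based on/off flicker.

```python
def achievable_frequencies(refresh_hz, frames_per_cycle):
    """Flicker frequencies for an on/off stimulus whose cycle lasts a whole
    number of screen frames (one frequency per entry in frames_per_cycle)."""
    return [refresh_hz / k for k in frames_per_cycle]

# On a 60 Hz screen, cycles of 5 to 9 frames give the five stimulus frequencies.
for k, f in zip(range(5, 10), achievable_frequencies(60.0, range(5, 10))):
    print(f"{k} frames per cycle -> {f:.2f} Hz")
```

Note that 60 / 9 rounds to 6.67 Hz; the dataset quotes this frequency as 6.66 Hz.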

Each subject's recording began with a 100-second adaptation period, during which the 5 selected stimuli were presented to the subject in random order, with the subject focusing on the first one.

This was considered a critical part of the process because it allowed the subject to become comfortable with the visual stimulation. The first of five similar sessions began 30 seconds after the adaptation phase ended. Each session consisted of 25 trials divided into two sections by a 30-second break: the first section contained 12 trials and the second 13. To avoid repeated exposure, the target in each trial was chosen at random. After each session, the subject was given a 5-minute break to recover and prepare for the next one. The labels 1, 2, 3, 4, and 5 correspond to the frequencies 12.00, 10.00, 8.57, 7.50, and 6.66 Hz, respectively. In each session, the participants were asked to focus on the flickering boxes in the following sequence: 4 2 3 5 1 2 5 4 2 3 1 5 4 3 2 4 1 2 5 3 4 1 3 1 3.

Raw data from the Emotiv EPOC headset were collected with the help of the Emotiv SDK and sent to OpenVibe through a Lab Streaming Layer (LSL) inlet.

The OpenVibe application synchronized the stimuli with the EEG signal, and the output was saved in GDF format. The signals were filtered using bandpass and notch filters.

To import the MATLAB files into Python for pre-processing and analysis, the loadmat function from SciPy (scipy.io.loadmat) was used. The dictionary holding the data of each file has two main keys: EEG and Events. The EEG 2D array contains the subject's brain signals, whereas the Events 2D array has four columns, the second of which holds event codes. An event code of 32779 marks the start of a trial, while 32780 marks its end.

The next step was to loop over the events arrays one by one to find the start and end of each trial. Whenever the start code (32779) is followed by the end code (32780), their corresponding sample indices, stored in the next column, are passed to a function called getFromEEG, which applies a CAR filter to the corresponding EEG numpy array and extracts that trial's EEG signal.
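The loop above can be sketched as follows. The column layout (event codes in the second column, sample indices in the third) follows the description above, while the dictionary keys `'eeg'` and `'events'`, the channels-by-samples orientation of the EEG array, and the Python names `load_session`, `find_trials`, and `get_from_eeg` (a stand-in for getFromEEG) are assumptions, not the project's actual code.

```python
import numpy as np
from scipy.io import loadmat

TRIAL_START = 32779  # event code marking the start of a trial
TRIAL_END = 32780    # event code marking the end of a trial

def load_session(path):
    """Load one MATLAB file; the key names 'eeg' and 'events' are assumed."""
    mat = loadmat(path)
    return mat["eeg"], mat["events"]

def find_trials(events):
    """Return (start_sample, end_sample) pairs for every start/end code pair.

    Assumes event codes in column 1 and sample indices in column 2."""
    trials = []
    start = None
    for code, sample in zip(events[:, 1], events[:, 2]):
        if code == TRIAL_START:
            start = int(sample)
        elif code == TRIAL_END and start is not None:
            trials.append((start, int(sample)))
            start = None
    return trials

def get_from_eeg(eeg, start, end):
    """Hypothetical stand-in for getFromEEG: CAR-filter, then slice one trial.

    eeg has shape (n_channels, n_samples)."""
    car = eeg - eeg.mean(axis=0, keepdims=True)
    return car[:, start:end]

# Usage with a small synthetic events array (column 1: code, column 2: sample).
events = np.array([
    [0, TRIAL_START, 100, 0],
    [0, TRIAL_END, 740, 0],
    [0, TRIAL_START, 1380, 0],
    [0, TRIAL_END, 2020, 0],
])
trials = find_trials(events)
print(trials)  # [(100, 740), (1380, 2020)]
```

Each synthetic trial spans 640 samples, matching the trial length of the O1 and O2 rows.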

O1 and O2 are 2D numpy arrays with 125 rows, one per trial, where each row is a 1D array of 640 samples storing the brain signals of that trial (5 seconds of data). O1 holds the EEG data from row 6 of the EEG array, while O2 holds the data from row 7.

To determine which brain signals belong to which frequency and box, the O1 and O2 arrays are divided into five 2D arrays (three of size 25, one of size 30, and one of size 20), each containing all of the EEG signals recorded while the subject focused on one of the frequencies.

For example, for O1, the frequencies 12.00, 10.00, 8.57, 7.50, and 6.66 Hz are represented by the arrays O1Box1, O1Box2, ..., O1Box5.
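Because every session follows the same label sequence, the split can be sketched with boolean masks. The sketch assumes the 125 rows of O1 are stored in trial order and uses a zero-filled placeholder instead of real EEG; the names `split_by_box`, `SESSION_SEQUENCE`, and `LABEL_TO_FREQ` are hypothetical. Counting the labels reproduces the group sizes stated above.

```python
import numpy as np

# Label order of the 25 trials in one session, as listed in the protocol.
SESSION_SEQUENCE = [4, 2, 3, 5, 1, 2, 5, 4, 2, 3, 1, 5, 4,
                    3, 2, 4, 1, 2, 5, 3, 4, 1, 3, 1, 3]
LABEL_TO_FREQ = {1: 12.00, 2: 10.00, 3: 8.57, 4: 7.50, 5: 6.66}

def split_by_box(trials, labels):
    """Group trial rows by label: returns {label: 2D array of that box's trials}."""
    labels = np.asarray(labels)
    return {lab: trials[labels == lab] for lab in sorted(LABEL_TO_FREQ)}

labels = np.tile(SESSION_SEQUENCE, 5)   # 5 sessions -> 125 labels
o1 = np.zeros((labels.size, 640))       # placeholder for O1 (125 trials x 640 samples)
boxes = split_by_box(o1, labels)
for lab, arr in boxes.items():
    print(f"O1Box{lab} ({LABEL_TO_FREQ[lab]:.2f} Hz): {arr.shape[0]} trials")
```

Boxes 1, 2, and 4 receive 25 trials each, box 3 receives 30, and box 5 receives 20.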

Figures 3.6 to 3.10 show the FFT of each box of the MAMEM dataset over a 5-second interval.

Data Acquisition

The data collected in this work are the EEG signals of the subjects' brains. While the GUI containing the flashing stimuli is displayed to the subjects, their brain signals are recorded to see how focusing on different frequencies affects them. According to the SSVEP phenomenon, the brain signals of a participant focusing on a visual stimulus flickering at a given frequency are expected to contain that same frequency.

Data Pre-processing

Raw EEG data are a mixture of brain activity, biological artifacts, and external noise. Therefore, pre-processing is vital to eliminate noise, remove artifacts, and enhance the signal-to-noise ratio of the EEG signals. Re-referencing, filtering, extracting data segments, removing bad segments, and interpolating bad electrodes are examples of pre-processing procedures.

Several functions were written using the scipy.signal module, which provides tools for designing and analyzing signal-processing systems, to make designing digital filters and applying them to the signal easier. In this way, filters with a passband at the desired frequencies can be designed to reveal the presence of an SSVEP response at those frequencies.

Poor electrode contact with the scalp is the most common cause of the power-line interference detected in EEG. This noise is frequently caused by high-impedance electrodes: when the electrode and scalp impedances are not matched, the amplifier's common-mode rejection ratio is degraded. Several attempts have been made to eliminate power-line interference using digital signal processing techniques. A common practice is to apply a 50 Hz or 60 Hz notch filter, matching the local mains frequency, whose frequency response has a null at that frequency.

First, a notch filter with a notch frequency of 50 Hz and a quality factor of 20, designed for a sampling frequency of 250 Hz, is applied to the raw EEG signals to remove power-line interference. A notch filter is a narrow band-reject filter: it strongly attenuates the chosen frequency while leaving the other frequency components largely unaffected, which makes it useful for removing a specific frequency component from the input signal.
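A minimal sketch of this notch filter with scipy.signal, using the parameters given above (250 Hz sampling, 50 Hz notch, Q = 20). Applying it with zero-phase `filtfilt` and the helper name `notch_filter` are assumptions; the text does not say how the filter was applied.

```python
import numpy as np
from scipy.signal import filtfilt, iirnotch

def notch_filter(x, fs=250.0, f0=50.0, q=20.0):
    """Attenuate a narrow band around f0 (Hz) along the last axis, zero-phase."""
    b, a = iirnotch(f0, q, fs=fs)
    return filtfilt(b, a, x, axis=-1)

# Synthetic check: a 10 Hz "SSVEP" plus 50 Hz power-line interference.
fs = 250.0
t = np.arange(1250) / fs
x = np.sin(2 * np.pi * 10.0 * t) + np.sin(2 * np.pi * 50.0 * t)
y = notch_filter(x)

freqs = np.fft.rfftfreq(t.size, d=1.0 / fs)
amp_before = np.abs(np.fft.rfft(x))
amp_after = np.abs(np.fft.rfft(y))
i10 = np.argmin(np.abs(freqs - 10.0))
i50 = np.argmin(np.abs(freqs - 50.0))
print(f"10 Hz kept: {amp_after[i10] / amp_before[i10]:.2f}, "
      f"50 Hz kept: {amp_after[i50] / amp_before[i50]:.2f}")
```

With Q = 20 the rejected band is about 50 / 20 = 2.5 Hz wide, so the SSVEP frequencies are essentially untouched.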

A Butterworth bandpass filter is then applied to keep only the frequencies between the low cutoff (1.0 Hz) and the high cutoff (60.0 Hz). This removes undesired components below 1 Hz and above 60 Hz and produces a smoother, cleaner representation of the raw EEG signals for the next stage, the Fast Fourier Transform.
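A sketch of the 1 to 60 Hz Butterworth bandpass with scipy.signal. The filter order (4), the zero-phase second-order-sections implementation (`sosfiltfilt`), and the helper name `bandpass_filter` are assumptions; the text gives only the cutoff frequencies.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

def bandpass_filter(x, fs=250.0, low_hz=1.0, high_hz=60.0, order=4):
    """Keep components between low_hz and high_hz (Hz) along the last axis."""
    sos = butter(order, [low_hz, high_hz], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x, axis=-1)

# Synthetic check: a 10 Hz component in the passband, a 100 Hz one above it.
fs = 250.0
t = np.arange(1250) / fs
x = np.sin(2 * np.pi * 10.0 * t) + np.sin(2 * np.pi * 100.0 * t)
y = bandpass_filter(x)

freqs = np.fft.rfftfreq(t.size, d=1.0 / fs)
X_amp = np.abs(np.fft.rfft(x))
Y_amp = np.abs(np.fft.rfft(y))
i10 = np.argmin(np.abs(freqs - 10.0))
i100 = np.argmin(np.abs(freqs - 100.0))
keep_10 = Y_amp[i10] / X_amp[i10]
keep_100 = Y_amp[i100] / X_amp[i100]
print(f"10 Hz kept: {keep_10:.2f}, 100 Hz kept: {keep_100:.3f}")
```

Second-order sections are used because they are numerically more stable than a single transfer-function polynomial for band-pass designs.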

After that, a Common Average Reference (CAR) filter is applied across all electrodes to reduce the noise they share. At every time sample, the mean of the EEG signals from the 8 electrodes of the Unicorn headset is computed and subtracted from each electrode, so that activity common to all channels is removed.
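CAR amounts to one line of numpy. The sketch assumes the EEG is stored as a channels-by-samples array; the function name `common_average_reference` is hypothetical.

```python
import numpy as np

def common_average_reference(eeg):
    """Subtract, at every time sample, the mean across all channels.

    eeg: array of shape (n_channels, n_samples), e.g. (8, n) for the Unicorn headset."""
    eeg = np.asarray(eeg, dtype=float)
    return eeg - eeg.mean(axis=0, keepdims=True)

# Synthetic check: noise shared by all 8 channels is removed by CAR.
rng = np.random.default_rng(0)
brain = rng.normal(size=(8, 1250))
shared_noise = rng.normal(size=(1, 1250))   # identical on every channel
car = common_average_reference(brain + shared_noise)
print(np.allclose(car, common_average_reference(brain)))  # True
```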

Feature extraction

The Fast Fourier Transform (FFT) is a well-known feature extraction technique for EEG in BCI applications, and it is the one employed in this experiment. The FFT transforms a signal from the time domain into the frequency domain. EEG recordings can then be plotted as a power spectrum, and spectral analysis shows how power is distributed across frequencies, revealing the peaks at the desired frequencies. Feature extraction also reduces the dimensionality of the data: the size of a trial is 1250 before the FFT and 760 after it.

The sampling rate used was 250 Hz. According to the sampling theorem, a band-limited signal must be sampled at more than twice its bandwidth. The function performing the FFT returns the power (Y) and the corresponding frequencies (X).
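One way to write a function that returns the power (Y) and its frequencies (X) is with numpy's real-input FFT. The power scaling (squared magnitude divided by the number of samples) and the function name `compute_fft` are assumptions. For a 5-second trial at 250 Hz (1250 samples), `rfft` yields 1250 / 2 + 1 = 626 bins spaced 250 / 1250 = 0.2 Hz apart, fine enough to separate neighboring stimuli such as 7.50 Hz and 8.57 Hz.

```python
import numpy as np

def compute_fft(signal, fs=250.0):
    """Return (X, Y): one-sided frequencies in Hz and the power at each frequency."""
    signal = np.asarray(signal, dtype=float)
    n = signal.shape[-1]
    Y = np.abs(np.fft.rfft(signal, axis=-1)) ** 2 / n
    X = np.fft.rfftfreq(n, d=1.0 / fs)
    return X, Y

# Synthetic 5 s trial with an 8.57 Hz component.
fs = 250.0
t = np.arange(1250) / fs
X, Y = compute_fft(np.sin(2 * np.pi * 8.57 * t), fs)
print(X.size, X[1] - X[0], X[np.argmax(Y)])
```

The 8.57 Hz component peaks in the 8.6 Hz bin, the nearest multiple of the 0.2 Hz resolution.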

After pre-processing, the FFT was applied trial by trial to all EEG sessions and all electrodes, using 1250 samples per trial and a sampling frequency of 250 Hz, in accordance with the Nyquist theorem. The Nyquist–Shannon sampling theorem is a key result in signal processing that bridges continuous-time and discrete-time signals. It sets a sufficient condition on the sample rate for a discrete sequence of samples to capture all the information in a continuous-time signal of finite bandwidth: the sampling rate must exceed twice the signal's bandwidth. This threshold is called the Nyquist rate, and half the sampling rate is called the Nyquist frequency.
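For the setup described here (sampling rate fs = 250 Hz, N = 1250 samples per trial, B the signal bandwidth), the sampling conditions work out as follows. The 60 Hz upper cutoff of the bandpass filter and the highest third harmonic considered as a feature (3 × 12.00 Hz = 36 Hz) both lie well below the Nyquist frequency.

$$
f_s > 2B, \qquad f_N = \frac{f_s}{2} = \frac{250\ \text{Hz}}{2} = 125\ \text{Hz}, \qquad \Delta f = \frac{f_s}{N} = \frac{250\ \text{Hz}}{1250} = 0.2\ \text{Hz}
$$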

This experiment has 5 different frequencies in the GUI, so the brain signals are expected to contain one of these frequencies. However, the response can also appear at the second and third harmonics, or in the FFT bins immediately preceding or following these frequencies. The other frequencies are therefore not crucial, so only these frequencies were kept by selecting them from the FFT output. This gives 45 frequencies: the 5 stimulus frequencies (12.00, 10.00, 8.57, 7.50, and 6.66 Hz) and their second and third harmonics (multiplied by 2 and by 3), each taken together with its immediately preceding and following frequency (5 × 3 × 3 = 45). However, because the GUI of flickering stimuli was built with the Tkinter library, the actual flickering frequencies were not exactly the nominal ones, so selecting only these frequencies may decrease accuracy. As a result, it is better not to restrict the features to these 45 frequencies and to keep all the frequency components as they are.
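The 45-frequency selection described above, which was ultimately not used, can be sketched as follows. The helper name `harmonic_bins`, the nearest-bin matching, and the 0.2 Hz grid of a 1250-sample, 250 Hz trial are assumptions for illustration.

```python
import numpy as np

STIM_FREQS = [12.00, 10.00, 8.57, 7.50, 6.66]

def harmonic_bins(freqs_hz, stim_freqs=STIM_FREQS, harmonics=(1, 2, 3), neighbors=1):
    """Indices of the FFT bins nearest each stimulus frequency and its 2nd and
    3rd harmonics, plus the immediately preceding/following bins (5 x 3 x 3 = 45)."""
    selected = []
    for f in stim_freqs:
        for h in harmonics:
            center = int(np.argmin(np.abs(freqs_hz - h * f)))
            selected.extend(range(center - neighbors, center + neighbors + 1))
    return selected

freqs_hz = np.fft.rfftfreq(1250, d=1.0 / 250.0)   # 0.2 Hz resolution
bins = harmonic_bins(freqs_hz)
print(len(bins), len(set(bins)))  # 45 42
```

On this 0.2 Hz grid, the third harmonic of 6.66 Hz (19.98 Hz) and the second harmonic of 10.00 Hz (20.00 Hz) land in the same bin, so the 45 selections cover only 42 distinct bins and these two classes share part of their selected features.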

Classification

Support Vector Machines (SVMs)

SVMs are powerful supervised machine learning algorithms used for both classification and regression, although they are mostly applied to classification problems. They are popular because they perform well on high-dimensional feature vectors and, through kernel functions, can learn non-linear decision boundaries. In this experiment, an SVM is used for classification: the classifier is SVC (Support Vector Classifier), imported from scikit-learn (sklearn.svm.SVC).

The most commonly used SVM kernel functions are the Gaussian radial basis function (RBF), polynomial, sigmoid, and linear kernels. The linear, polynomial, and RBF kernels were used in this experiment: a for loop trained a classifier with each kernel and printed its accuracy, and the RBF kernel gave the best accuracy.
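The kernel comparison loop can be sketched with scikit-learn as below. Synthetic data from `make_classification` stand in for the FFT feature vectors, and the train/test split, feature scaling, and default SVC hyperparameters are assumptions; the accuracies printed here say nothing about the project's EEG results.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for FFT feature vectors: 5 classes, one per stimulus box.
X, y = make_classification(n_samples=250, n_features=40, n_informative=10,
                           n_classes=5, random_state=0)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=0)

accuracies = {}
for kernel in ("linear", "poly", "rbf"):
    model = make_pipeline(StandardScaler(), SVC(kernel=kernel))
    model.fit(X_train, y_train)
    accuracies[kernel] = model.score(X_test, y_test)  # mean test accuracy
    print(f"{kernel}: {accuracies[kernel]:.2f}")

best_kernel = max(accuracies, key=accuracies.get)
print("best kernel:", best_kernel)
```

Scaling inside a pipeline keeps the test split from leaking into the scaler's statistics.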

Conclusion

Our project successfully demonstrates the potential of a Brain-Computer Interface (BCI) system using Steady State Visual Evoked Potential (SSVEP) technology to control a wheelchair. By capturing and processing EEG signals while users focus on flickering stimuli, we can translate brain activity into commands that control wheelchair movement.

The system operates by filtering and processing these EEG signals to train a model, which then predicts control commands in real time. These predictions are used to direct an Arduino, which in turn controls the wheelchair. Experimental results indicate that the system achieves an accuracy of 80% in controlled settings. However, not all subjects exhibit strong SSVEP responses, which leads to variability in accuracy among individuals.

In practical applications, our system was tested with a small prototype wheelchair, achieving a real-time accuracy of 60%. This underscores the system’s promise and potential, while also highlighting the need for further refinement to improve its effectiveness across a broader range of users. Overall, this BCI-based approach offers a significant step forward in enhancing mobility and independence for individuals with severe motor impairments.